Efficiently training massive language models is now a central challenge for organizations building advanced AI systems. As models grow larger and datasets expand into the trillions of tokens, the need for speed and memory efficiency becomes critical.

NVIDIA’s NVFP4 format is designed to address this demand by delivering the resource savings of 4-bit precision without sacrificing the accuracy typically expected from higher-precision formats. This innovation holds the potential to reshape large-scale AI infrastructure and training pipelines.

The Importance of Efficient Precision

Modern AI factories face mounting pressure to maximize token throughput while maintaining model stability and accuracy. Historically, training has relied on 16-bit formats such as FP16 or BF16, which ensure reliable convergence but consume significant compute and memory. With NVFP4, weights and activations can be represented with 4 bits per value (plus small shared scale factors), dramatically reducing resource consumption and paving the way for even larger models and datasets.
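
To put the memory savings in numbers, here is a back-of-the-envelope sketch. It assumes NVFP4 stores each value in 4 bits and adds one 8-bit (E4M3) scale per 16-element block, ignores the single per-tensor scale and any FP8 scaling metadata, and counts only the quantized tensor itself, not master weights or optimizer state, which training recipes commonly keep at higher precision. The names `bits_per_element` and `tensor_gib` are hypothetical helpers for illustration.

```python
# Rough storage cost of one tensor in different formats (illustrative only).
# Assumption: NVFP4 = 4-bit values + one 8-bit E4M3 scale per 16-element block;
# the per-tensor FP32 scale and FP8 metadata are ignored as negligible.

BLOCK_SIZE = 16

def bits_per_element(fmt: str) -> float:
    """Average storage bits per element, including amortized block scales."""
    if fmt == "bf16":
        return 16.0
    if fmt == "fp8":
        return 8.0
    if fmt == "nvfp4":
        return 4.0 + 8.0 / BLOCK_SIZE  # value bits + shared E4M3 scale per block
    raise ValueError(f"unknown format: {fmt}")

def tensor_gib(num_elements: int, fmt: str) -> float:
    """Storage for num_elements values in the given format, in GiB."""
    return num_elements * bits_per_element(fmt) / 8 / 2**30

if __name__ == "__main__":
    n = 12 * 10**9  # one copy of 12B values, e.g. a 12B-parameter model's weights
    for fmt in ("bf16", "fp8", "nvfp4"):
        print(f"{fmt:>5}: {bits_per_element(fmt):4.1f} bits/elem, "
              f"{tensor_gib(n, fmt):6.2f} GiB")
```

Under these assumptions NVFP4 averages 4.5 bits per element, roughly 3.6x smaller than BF16 rather than a full 4x, because the block scales are not free.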

Challenges of 4-Bit Quantization

Moving to 4-bit quantization greatly compresses model data, but it also introduces significant challenges. Maintaining accuracy at such low precision involves overcoming issues like gradient instability, limited dynamic range, and increased quantization error.

NVIDIA tackles these hurdles with specialized scaling, rounding, and transform techniques that preserve both convergence and performance, even at this narrow precision.
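
To make "limited dynamic range" concrete: NVFP4 element values use the FP4 E2M1 layout (1 sign bit, 2 exponent bits, 1 mantissa bit). The sketch below decodes all 16 bit patterns, assuming an exponent bias of 1 and no infinity or NaN encodings, as defined for FP4 E2M1 in the OCP Microscaling (MX) specification. `decode_e2m1` is a hypothetical helper, not a library function.

```python
def decode_e2m1(code: int) -> float:
    """Decode a 4-bit E2M1 code laid out as sign | 2-bit exponent | 1-bit mantissa."""
    sign = -1.0 if (code >> 3) & 1 else 1.0
    exponent = (code >> 1) & 0b11
    mantissa = code & 0b1
    bias = 1
    if exponent == 0:
        # Subnormal: 0.m x 2^(1 - bias)
        magnitude = (mantissa / 2) * 2.0 ** (1 - bias)
    else:
        # Normal: 1.m x 2^(exponent - bias)
        magnitude = (1 + mantissa / 2) * 2.0 ** (exponent - bias)
    return sign * magnitude

magnitudes = sorted({abs(decode_e2m1(c)) for c in range(16)})
print(magnitudes)                      # [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
print(magnitudes[-1] / magnitudes[1])  # 12.0: largest / smallest nonzero magnitude
```

Only eight magnitudes exist, spanning a 12:1 ratio, so a raw value such as 5.0 must become either 4 or 6 (a 20% error). This is why the scaling techniques below are essential.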

NVFP4’s Training Innovations

The NVFP4 training recipe incorporates several targeted methods to ensure reliable, accurate results:

- Micro-block scaling: Every block of 16 consecutive values shares one scaling factor, so an outlier only affects the scale of its own block. This limits outlier effects and lets each block make fuller use of the small FP4 value grid.

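- High-precision block scale encoding: NVFP4 stores each block's scale factor in FP8 E4M3, which allows fractional scale values rather than the power-of-two (E8M0) scales used by formats such as MXFP4. This finer granularity lowers quantization error. A second, FP32 scale applied per tensor keeps the block scales within E4M3's range.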

- Reshaping tensor distributions: Hadamard transforms are applied to activations and gradients, spreading the magnitude of outliers across many elements so the resulting distributions are better suited to low-bit quantization.

- Quantization fidelity and consistency: Selective 2D block-based quantization keeps a tensor's quantized values consistent between the forward pass and the backward pass, where the same tensor is used in transposed form. This minimizes signal distortion and supports stable convergence.

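- Stochastic rounding: Instead of always rounding to the nearest representable value, this technique rounds up or down with probabilities proportional to proximity, so rounding errors average out to zero. This keeps small gradient contributions from being systematically lost, which is essential for training stability and accuracy.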
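
The micro-block scaling and E4M3 block-scale items above can be illustrated with a pure-Python fake-quantization sketch (quantize, then immediately dequantize). Assumptions: values use the E2M1 grid, the per-tensor scale formula `amax / (6 * 448)` is our own illustrative choice for keeping block scales inside E4M3's range, and tie-breaking and saturation are simplified. All function names are hypothetical; real kernels store packed 4-bit codes and run on Tensor Cores.

```python
import math

E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]  # representable FP4 magnitudes
E2M1_MAX = 6.0
E4M3_MAX = 448.0
BLOCK_SIZE = 16

def round_to_e2m1(x: float) -> float:
    """Round to the nearest E2M1 value, saturating at +/-6 (ties go to the smaller magnitude)."""
    mag = min(abs(x), E2M1_MAX)
    q = min(E2M1_GRID, key=lambda g: abs(g - mag))
    return math.copysign(q, x)

def round_to_e4m3(x: float) -> float:
    """Round a non-negative float to the nearest FP8 E4M3 value, saturating at 448."""
    if x <= 0.0:
        return 0.0
    x = min(x, E4M3_MAX)
    _, e = math.frexp(x)   # x = m * 2**e with 0.5 <= m < 1
    e = max(e, -5)         # below 2**-6 the format is subnormal with step 2**-9
    step = 2.0 ** (e - 4)  # 3 mantissa bits -> 8 steps per power-of-two interval
    return round(x / step) * step

def nvfp4_fake_quant(values: list[float], block_size: int = BLOCK_SIZE) -> list[float]:
    """Quantize and dequantize a 1D tensor with per-block E4M3 scales (numerics only)."""
    amax = max((abs(v) for v in values), default=0.0)
    if amax == 0.0:
        return [0.0] * len(values)
    # Level 1 (assumed formula): FP32 per-tensor scale so every block scale fits in E4M3.
    tensor_scale = amax / (E2M1_MAX * E4M3_MAX)
    out: list[float] = []
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        block_amax = max(abs(v) for v in block)
        # Level 2: one E4M3 scale per block, expressed relative to the tensor scale.
        block_scale = round_to_e4m3(block_amax / E2M1_MAX / tensor_scale)
        scale = block_scale * tensor_scale
        if scale == 0.0:
            out.extend(0.0 for _ in block)
            continue
        out.extend(round_to_e2m1(v / scale) * scale for v in block)
    return out

# One outlier in the first block, small values everywhere else.
x = [100.0] + [0.1] * 15 + [0.1] * 16
blocked = nvfp4_fake_quant(x)                    # 16-element blocks
single = nvfp4_fake_quant(x, block_size=len(x))  # one scale for the whole tensor
print(blocked[1], blocked[16])  # small value next to the outlier vs. in its own block
print(single[16])               # with a single scale, small values are flushed to zero
```

The outlier still wipes out the small values in its own block, but the second block gets its own scale and reconstructs 0.1 to within a few percent, whereas a single shared scale flushes every small value to zero.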
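
The stochastic rounding item above can be demonstrated on the E2M1 grid alone. This minimal sketch ignores scaling and assumes inputs already lie within the representable range; it is a generic illustration of stochastic rounding, not NVIDIA's kernel. Both function names are hypothetical.

```python
import math
import random

E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]  # representable FP4 magnitudes

def round_nearest_e2m1(x: float) -> float:
    """Deterministic round-to-nearest onto the E2M1 grid (saturating at +/-6)."""
    mag = min(abs(x), 6.0)
    return math.copysign(min(E2M1_GRID, key=lambda g: abs(g - mag)), x)

def stochastic_round_e2m1(x: float, rng: random.Random) -> float:
    """Round to one of the two neighboring E2M1 values; the upper one is chosen with
    probability proportional to how close x is to it, so E[result] == x for |x| <= 6."""
    mag = min(abs(x), 6.0)
    for lo, hi in zip(E2M1_GRID, E2M1_GRID[1:]):
        if mag <= hi:  # found the interval [lo, hi] containing mag
            break
    p_up = (mag - lo) / (hi - lo)
    q = hi if rng.random() < p_up else lo
    return math.copysign(q, x)

rng = random.Random(0)
n = 100_000
for x in (2.4, 0.1):
    rtn = round_nearest_e2m1(x)
    sr_mean = sum(stochastic_round_e2m1(x, rng) for _ in range(n)) / n
    print(f"x={x}: round-to-nearest={rtn}, mean of stochastic rounding={sr_mean:.4f}")
```

Round-to-nearest maps 2.4 to 2.0 and 0.1 to 0.0 every time, so repeated small updates are biased or lost entirely; the stochastic version returns 2.0 or 3.0 (and 0.0 or 0.5) at random, but its average matches the input.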
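
The Hadamard item above relies on a simple property: the normalized Hadamard matrix is orthogonal, so applying the same transform to both operands of a dot product (the core of a matrix multiplication) leaves the result unchanged while spreading an outlier's magnitude across the block. This sketch uses a fast Walsh-Hadamard transform with random sign flips; the block size of 16 and the sign-flip scheme are illustrative assumptions, not a claim about the exact transform size or placement in NVIDIA's recipe. Function names are hypothetical.

```python
import math
import random

def fwht(v: list[float]) -> list[float]:
    """Orthonormal fast Walsh-Hadamard transform; len(v) must be a power of two."""
    n = len(v)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of two")
    out = list(v)
    h = 1
    while h < n:
        for i in range(0, n, 2 * h):
            for j in range(i, i + h):
                a, b = out[j], out[j + h]
                out[j], out[j + h] = a + b, a - b  # butterfly step
        h *= 2
    norm = 1.0 / math.sqrt(n)  # makes the transform orthonormal (H @ H.T == I)
    return [x * norm for x in out]

def random_hadamard(v: list[float], signs: list[float]) -> list[float]:
    """Apply a random +/-1 sign flip per element, then the Hadamard transform."""
    return fwht([s * x for s, x in zip(signs, v)])

def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))

rng = random.Random(0)
n = 16  # illustrative block size
signs = [rng.choice((-1.0, 1.0)) for _ in range(n)]

x = [0.1] * 15 + [8.0]  # one outlier in an otherwise small-valued block
y = [rng.uniform(-1, 1) for _ in range(n)]
tx, ty = random_hadamard(x, signs), random_hadamard(y, signs)

print(max(abs(t) for t in x), max(abs(t) for t in tx))  # peak magnitude before vs. after
print(dot(x, y), dot(tx, ty))  # inner products (what a GEMM computes) are preserved
```

After the transform, the peak magnitude drops from 8.0 to at most (1.5 + 8) / 4 = 2.375, which makes the block far easier to cover with a single FP4 scale, and the dot product is unchanged up to floating-point rounding.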

Hardware and Software Synergy with Blackwell GPUs

NVIDIA’s latest Blackwell GPUs, including the GB200 and GB300, feature native FP4 support, delivering up to a sevenfold improvement in matrix multiplication speed. This tight hardware-software integration lets the savings of NVFP4 translate into real speedups in production settings, enabling the training of unprecedentedly large models with improved efficiency.

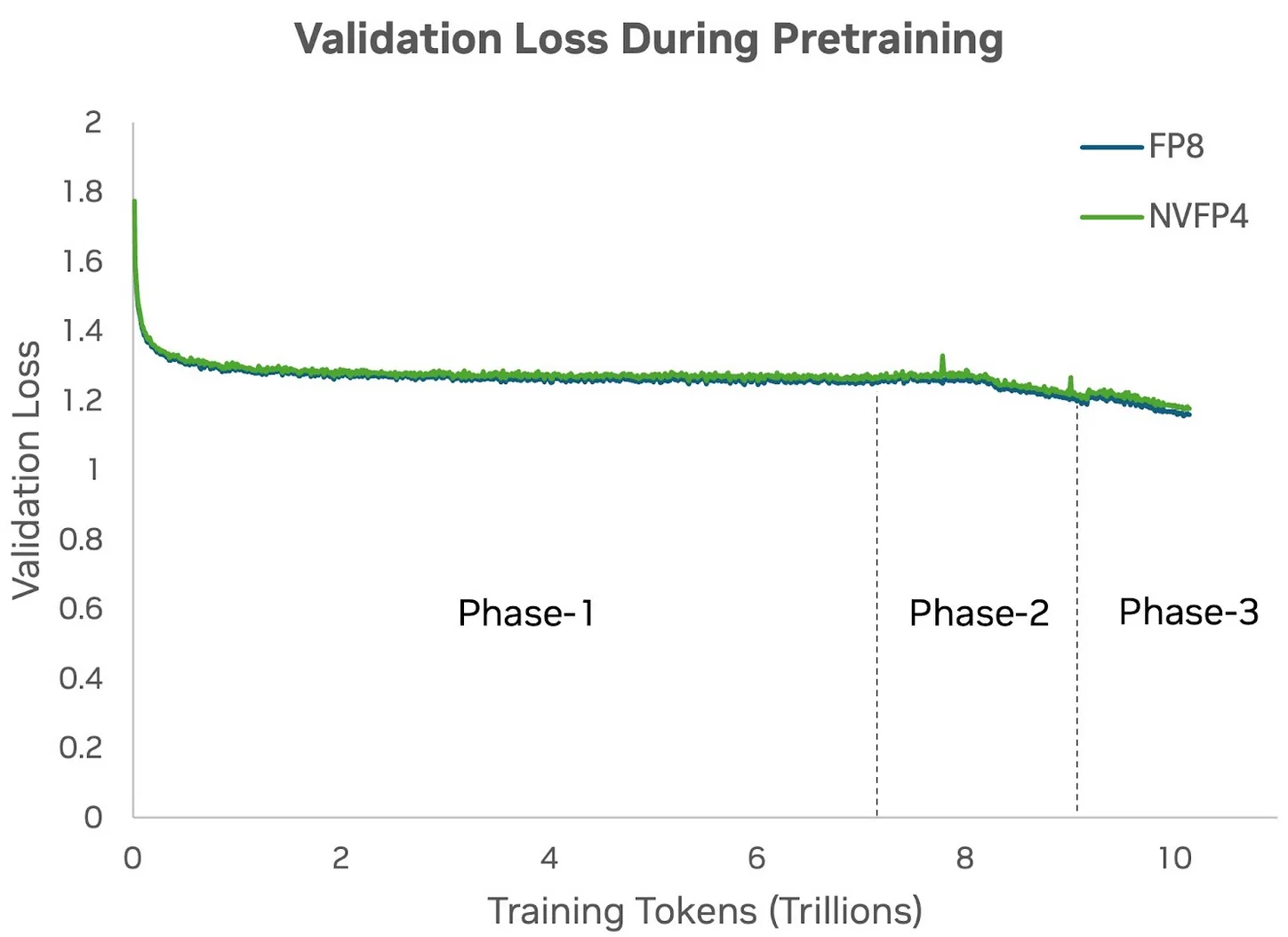

Figure 3. Validation loss during pretraining of a 12B Hybrid Mamba-Transformer model on 10T tokens with FP8 (baseline) and NVFP4 precision. The NVFP4 loss curve closely tracks the FP8 loss curve throughout training. Credit: NVIDIA

Accuracy at Trillion-Token Scale

NVFP4’s capabilities were validated experimentally: when a 12-billion-parameter Hybrid Mamba-Transformer model was trained on 10 trillion tokens, NVFP4 achieved accuracy and convergence comparable to an established FP8 baseline.

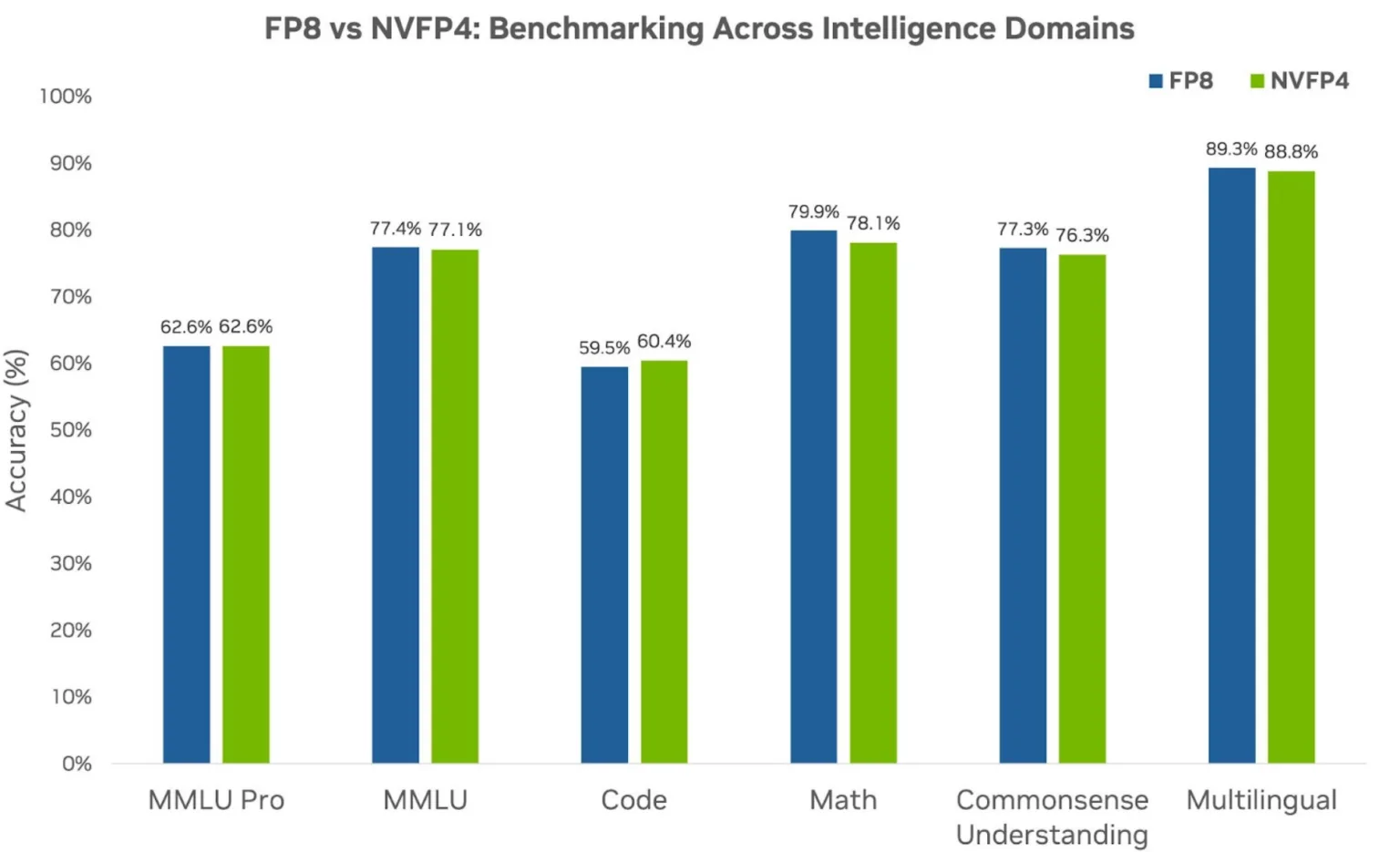

Benchmarks spanning language understanding, code, and mathematics reveal that NVFP4 delivers real-world performance on par with higher-precision techniques, confirming its suitability for state-of-the-art AI applications.

Figure 4. Benchmarking downstream task accuracy scores on pretraining the 12B Hybrid Mamba-Transformer model using FP8 precision (baseline) and NVFP4 precision. Pretraining with NVFP4 achieves accuracy comparable with higher precision formats. Credit: NVIDIA

Smarter, Scalable AI Training

NVFP4 sets a new benchmark for efficiency in AI training. By enabling accurate training at 4-bit precision, NVIDIA’s innovation allows organizations to scale up models, accelerate research, and manage costs and energy use more effectively. As NVFP4 is adopted, the future of AI development looks both faster and more sustainable.

NVFP4 Is Transforming AI Training: 4-Bit Precision Meets High Performance