The OctoTools framework, developed by a team from Stanford University, integrates a variety of tools for task execution without additional training. It features a planner that refines actions step by step and an executor that generates commands, and it summarizes the final answer from a structured context. The framework also optimizes tool selection to improve task performance. The authors claim an improvement of roughly 10% over other frameworks using similar tools.

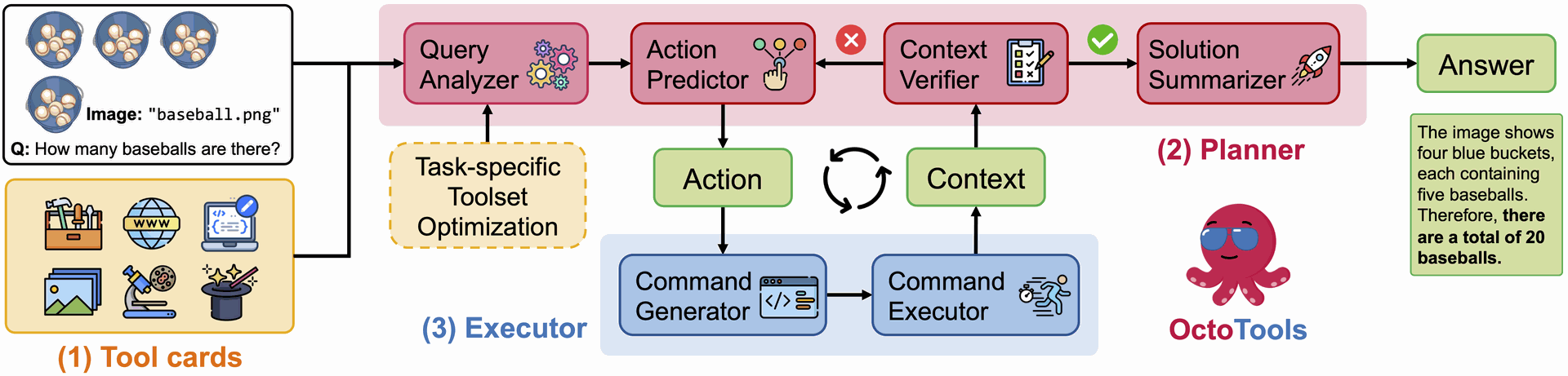

Figure 1. The framework of OctoTools. (1) Tool cards define tool-usage metadata and encapsulate tools, enabling training-free integration of new tools without additional training or framework refinement. (2) The planner governs both high-level and low-level planning to address the global objective and refine actions step by step. (3) The executor instantiates tool calls by generating executable commands and saves structured results in the context. The final answer is summarized from the full trajectory in the context. Furthermore, the task-specific toolset optimization algorithm learns to select a beneficial subset of tools for downstream tasks.

Tool-based AI agents have gained popularity over the last year because they enable deep research, complex reasoning, and more accurate responses. These "agents" can call on a plethora of resources to create code, make computations, pull information from the web or data stores, and much more.

This feature is supposed to work like a human's toolbox, where the right tool for the job is selected when it is needed. In practice, however, agents have run into issues with tool selection, continuity, and accuracy. The OctoTools framework looks to improve tool use by creating a simple way to define and consume tools within the reasoning chain.

The Problem with Tools

Tool creation and consumption in similar agent frameworks rely on extensive supervised data and fine-tuning, and those frameworks are often limited to one specialized domain of tools. The authors note that while these agents can perform well on specialized tasks, their ability to act as generalists is hindered by their configuration. In addition, integrating tools can be a time-consuming and specialized activity in and of itself.

OctoTools, by Stanford AI Research, addresses this issue by providing "tool cards", which act as standard wrappers around APIs, Python code, and any other resource you can build an interface to, such as web parsers and other LLMs. Each tool card carries OctoTools metadata that defines formats, usage, and best practices for the LLM to review before selecting the tool.

This simple tool definition lets developers easily extend the tool ecosystem their model chooses from, which improves both generalization and specialized performance.
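To make the tool card idea concrete, here is a minimal sketch in Python. It is not the OctoTools API: the `ToolCard` class, its field names, the `to_prompt` method, and the `Word_Counter` tool are all hypothetical, chosen only to illustrate how metadata and a callable might travel together so that a planner can read the description before choosing a tool.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class ToolCard:
    """Hypothetical tool card: usage metadata plus the callable it wraps."""
    name: str
    description: str
    input_types: Dict[str, str]
    output_type: str
    run: Callable[..., object]
    demo_commands: List[str] = field(default_factory=list)
    limitations: str = ""

    def to_prompt(self) -> str:
        """Render the metadata as plain text that a planner LLM could read."""
        inputs = ", ".join(f"{k}: {v}" for k, v in self.input_types.items())
        lines = [
            f"Tool: {self.name}",
            f"Description: {self.description}",
            f"Inputs: {inputs}",
            f"Output: {self.output_type}",
        ]
        if self.demo_commands:
            lines.append("Examples: " + "; ".join(self.demo_commands))
        if self.limitations:
            lines.append(f"Limitations: {self.limitations}")
        return "\n".join(lines)


def word_count(text: str) -> int:
    """Toy tool: count whitespace-separated words."""
    return len(text.split())


# Wrapping an ordinary function is all it takes to register a new tool.
word_counter = ToolCard(
    name="Word_Counter",
    description="Counts whitespace-separated words in a string.",
    input_types={"text": "str"},
    output_type="int",
    run=word_count,
    demo_commands=['run(text="hello world")'],
    limitations="No punctuation-aware tokenization.",
)

print(word_counter.to_prompt())
print(word_counter.run(text="tools extend the model"))
```

In this sketch, adding a tool means writing one function and one card; nothing about the planner or executor has to be retrained or modified.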

The OctoTools Multi-Agent Workflow

The OctoTools team addresses accurate tool use and response quality by implementing a multi-stage agent workflow, similar to the Chain of Agents method recently published by Google AI Research.

In this method, a series of "workers" is spawned by the LLM. Each worker acts on the query, and their responses are collected and reviewed by a supervisor agent, which determines whether the answer is ready for the user. In OctoTools, this is split into a query analyzer, an action predictor, a context verifier, and a solution summarizer.

The workflow is built from three components: tool cards, a planner, and an executor. Together they craft a final answer for the user. The framework's planner-executor paradigm separates strategic planning from command generation, reducing errors and increasing transparency.

- Tool Cards: Provide the tool-usage metadata that the planner selects from.

- Planner: Governs both high-level and low-level planning to address the global objective and refine actions step by step. When it is ready to answer, it summarizes the final answer from the full trajectory in the context.

- Executor: Instantiates tool calls by generating executable commands and saves structured results in the context for the planner to review.
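The interplay between the components listed above can be sketched as a simple loop. This is an illustration under stated assumptions, not the framework's implementation: `plan_next_action`, `execute`, `summarize`, and `solve` are hypothetical names, and the planner here is a deterministic stand-in where OctoTools would prompt an LLM with the query, the tool cards, and the accumulated context.

```python
from typing import Callable, Dict, List, Optional, Tuple

Tool = Callable[[str], str]
Step = Tuple[str, str, str]  # (tool name, argument, result)


def plan_next_action(
    query: str, context: List[Step], tools: Dict[str, Tool]
) -> Optional[Tuple[str, str]]:
    """Stand-in planner: pick the first unused tool, or None when done.

    A real planner would ask an LLM which tool to call next and whether
    the context already contains enough information to stop.
    """
    used = {name for name, _, _ in context}
    for name in tools:
        if name not in used:
            return name, query
    return None


def execute(action: Tuple[str, str], tools: Dict[str, Tool]) -> str:
    """Stand-in executor: turn the chosen action into an actual tool call."""
    name, argument = action
    return tools[name](argument)


def summarize(query: str, context: List[Step]) -> str:
    """Stand-in summarizer: build the answer from the full trajectory."""
    steps = "; ".join(f"{name} -> {result}" for name, _, result in context)
    return f"Answer to '{query}' based on: {steps}"


def solve(query: str, tools: Dict[str, Tool], max_steps: int = 10) -> str:
    context: List[Step] = []
    for _ in range(max_steps):  # step budget caps the feedback cycle
        action = plan_next_action(query, context, tools)
        if action is None:  # planner decides the context is sufficient
            break
        result = execute(action, tools)
        context.append((action[0], action[1], result))  # structured record
    return summarize(query, context)


toy_tools: Dict[str, Tool] = {
    "upper": lambda text: text.upper(),
    "length": lambda text: str(len(text)),
}

answer = solve("octo", toy_tools, max_steps=5)
print(answer)
```

Keeping planning, execution, and summarization in separate functions mirrors the separation the framework describes: each step leaves an inspectable record in the context, which is what makes the trajectory transparent.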

OctoTools Performance Metrics

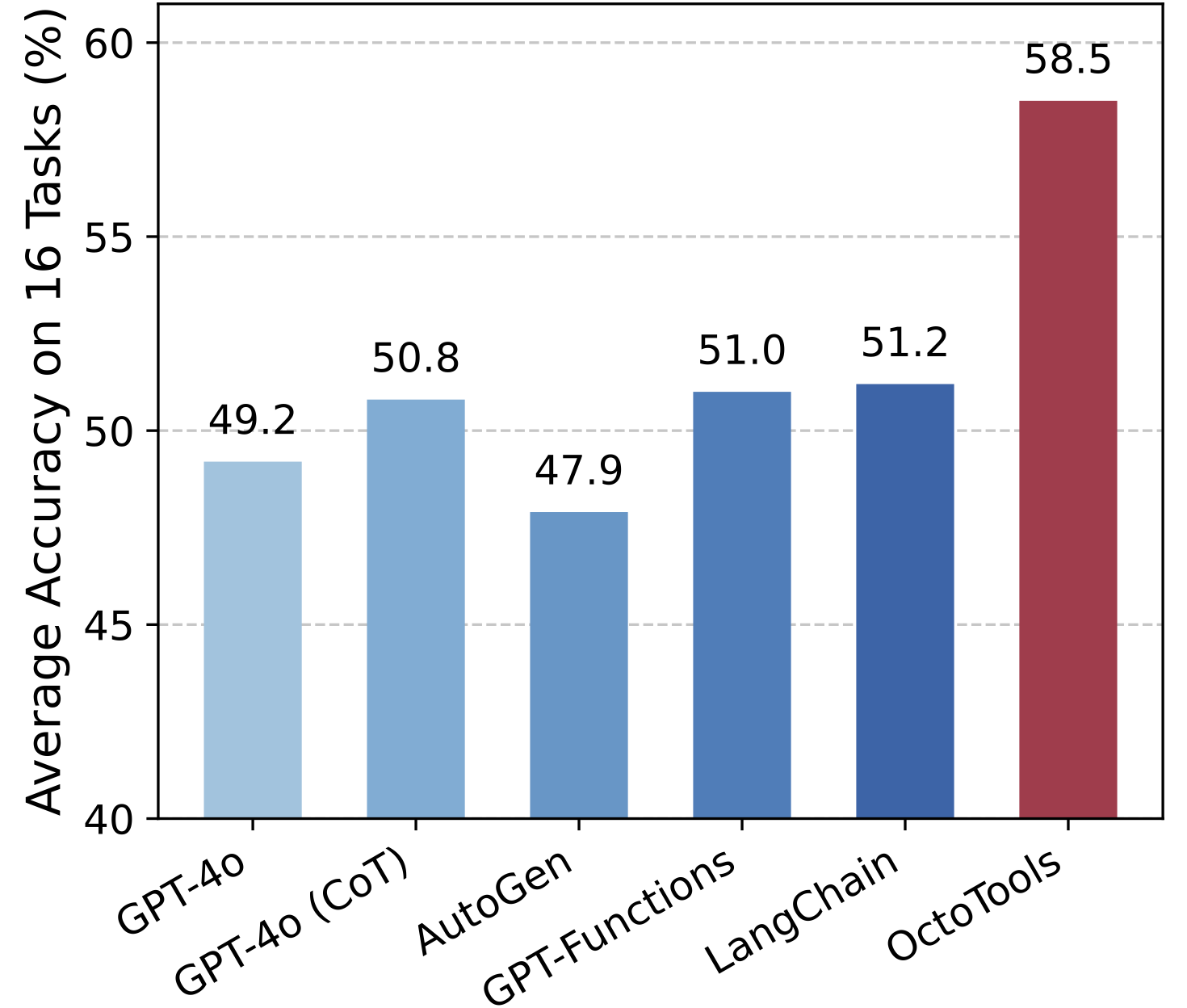

OctoTools substantially outperforms other baselines, achieving an average accuracy gain of 9.3% over zero-shot prompting by GPT-4o and 7.7% over chain-of-thought (CoT) prompting, as well as up to 10.6% improvement compared to existing agentic frameworks ... AutoGen, 2024; OpenAI, 2023a; LangChain, 2024. (paper)

Figure 2. Performance comparison across 16 benchmarks. OctoTools framework achieves an average accuracy gain of 9.3% over GPT-4o without function plugins and 7.3% over LangChain, using the same tools under the same configuration. (paper)

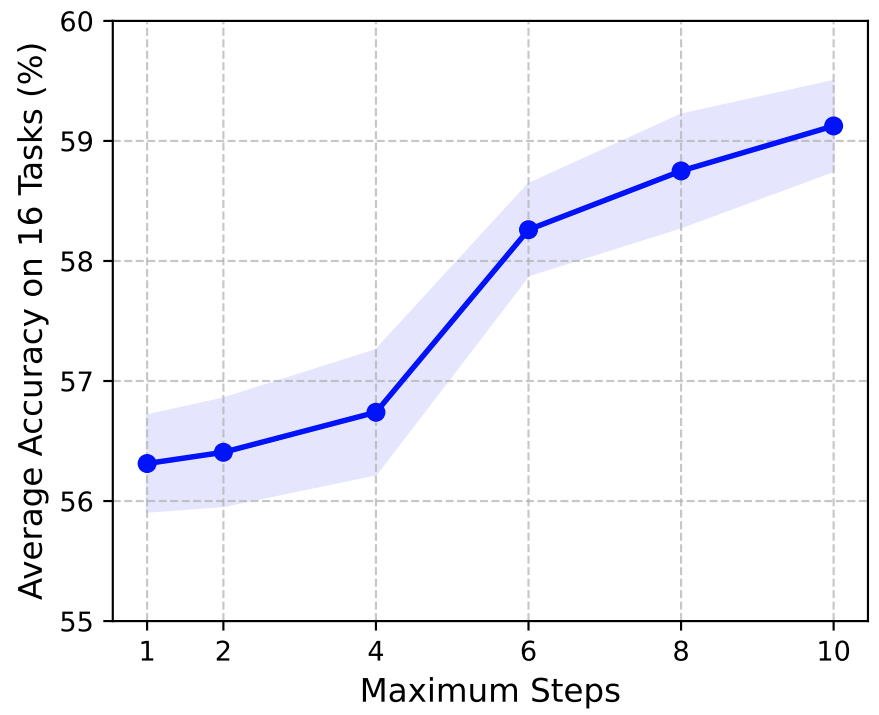

Multi-agent workflows use a feedback cycle to iteratively improve the context and, ultimately, the answer. The idea is that as tools add pertinent information to the context, the summarizer can produce a more informed answer. It follows that accuracy should improve as the agents collaborate for longer. Overall, the average accuracy of OctoTools tends to improve as the maximum number of steps increases, as shown in Figure 7.

Figure 7. Average accuracy across 16 benchmarks with respect to maximum allowed reasoning steps in OctoTools. (paper)

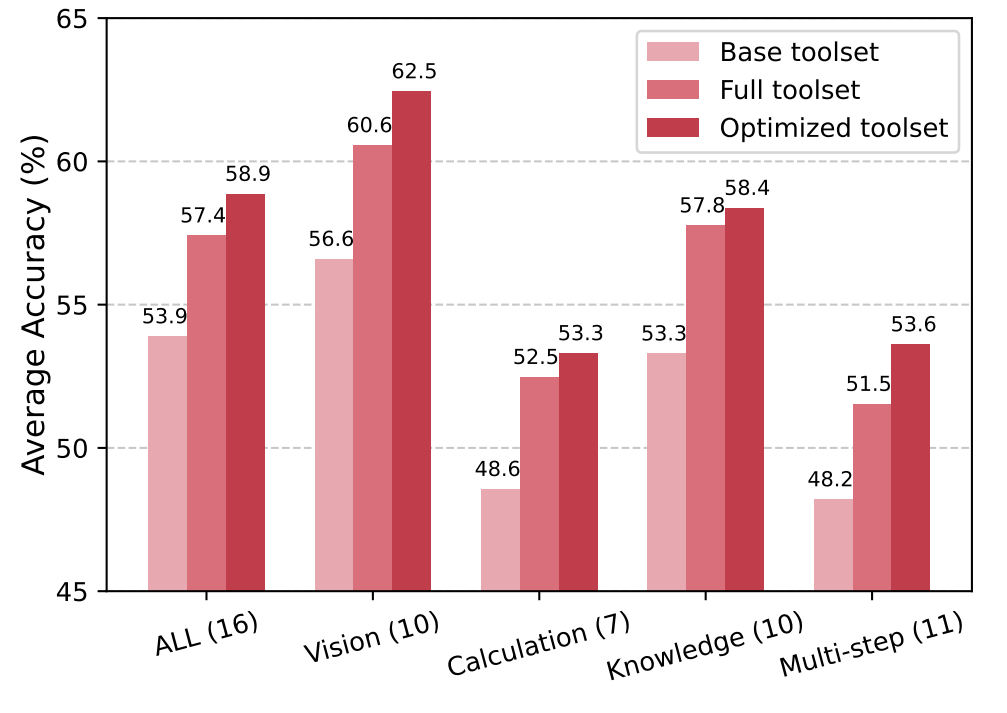

Additionally, the team finds that benchmark results improve when the available tools are optimized before the task starts. "Optimization" in this instance simply means deactivating tools that would not be useful for the specified task. This provides a significant performance increase (as measured by response accuracy) over the base toolset (only the base OctoTools agent) and slightly better performance than the complete toolset.

The improvement seen when optimizing tool selection led to the development of a toolset optimization algorithm that selects a beneficial subset of tools for specific tasks, improving both accuracy and efficiency.

"Our findings suggest that external feedback (e.g., performance on a validation set or expert insights) helps identify when a certain tool improves performance. Additionally, selectively enabling tools avoids unnecessary overhead and improves efficiency. A promising next step is to explore a query-based approach that dynamically refines the toolset for each query." (paper)

Figure 8. Performance under three toolset strategies in OctoTools across all 16 tasks and various categories (the number in parentheses indicates the number of tasks in each category). (paper)
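The toolset optimization described above can be sketched as a simple selection loop: measure validation accuracy with the base toolset, then keep each candidate tool only if enabling it beats that baseline. This is one plausible reading of the idea, and the paper's algorithm may differ in detail. The function `optimize_toolset`, the tool names, and the accuracy numbers are all hypothetical; the toy scoring function stands in for actually running the agent on a validation set.

```python
from typing import Callable, Dict, FrozenSet, Iterable, Set


def optimize_toolset(
    base_tools: Iterable[str],
    candidate_tools: Iterable[str],
    validation_accuracy: Callable[[FrozenSet[str]], float],
) -> Set[str]:
    """Keep each candidate whose addition to the base set improves accuracy."""
    base = frozenset(base_tools)
    baseline = validation_accuracy(base)
    selected = set(base)
    for tool in candidate_tools:
        # Evaluate the candidate in isolation against the base toolset.
        if validation_accuracy(base | {tool}) > baseline:
            selected.add(tool)
    return selected


# Made-up per-tool effects on validation accuracy, for illustration only.
# These are NOT results from the paper.
TOY_EFFECTS: Dict[str, float] = {
    "calculator": 0.05,
    "web_search": 0.02,
    "image_captioner": -0.03,
}


def toy_validation_accuracy(tools: FrozenSet[str]) -> float:
    """Stand-in for running the agent on a validation set."""
    return 0.50 + sum(TOY_EFFECTS.get(tool, 0.0) for tool in tools)


optimized = optimize_toolset(
    base_tools=["base_solver"],
    candidate_tools=["calculator", "web_search", "image_captioner"],
    validation_accuracy=toy_validation_accuracy,
)
print(sorted(optimized))
```

Each candidate costs one validation run, so the number of evaluations grows linearly with the number of tools rather than with the number of possible subsets. The trade-off is that interactions between tools are not measured.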

The results of the experiments demonstrate that OctoTools significantly outperforms other agentic frameworks and baseline prompting methods. Across 16 diverse benchmarks, OctoTools achieves an average accuracy gain of 9.3% over GPT-4o and up to 10.6% over other frameworks like AutoGen, GPT-Functions, and LangChain.

The ablation studies reveal that the average accuracy improves as the maximum number of steps increases, highlighting the benefits of multi-step reasoning. The optimized toolset configuration slightly enhances performance, with OctoTools achieving 58.9% accuracy compared to 57.4% when all tools are enabled. These findings underscore the effectiveness of OctoTools in combining multi-step planning and specialized tool usage to solve complex reasoning tasks.

Summary

OctoTools significantly reduces the implementation complexity of tools while strengthening logic and reasoning abilities. This lowers the barrier to adopting new LLMs and tools, enabling developers to innovate faster. The paper reports experiments only with GPT-4o. While GPT-4o was state of the art at the time of the experiments, stronger models are now available, including Claude 3.7 Sonnet, OpenAI's o3, and Grok 3, which may improve further on the current benchmarks. It will be interesting to experiment with the framework to see how model choice, number of steps, compute power, and other experimental settings affect response accuracy.

Try the Demo on HuggingFace: https://huggingface.co/spaces/OctoTools/octotools

Review the Code: https://github.com/octotools/octotools

Stanford Research Introduces Octo Tools: Easily Extensible Tool Based Open Source Agent Framework

OctoTools: An Agentic Framework with Extensible Tools for Complex Reasoning