Search technologies powered by large language models (LLMs) have grown increasingly capable, giving users more contextually relevant and up-to-date information than earlier systems could.

However, much of this advancement has been concentrated within proprietary, closed-source solutions developed by major tech companies like Google, Microsoft (Bing), OpenAI, and Perplexity AI.

This dominance by closed systems poses challenges related to transparency, limits the pace of innovation, and hinders entrepreneurial efforts in the field.

This research introduces Open Deep Search (ODS) as a crucial step towards democratizing search AI, aiming to bridge the performance gap between these proprietary systems and their open-source counterparts by augmenting open-source LLMs with advanced reasoning agents capable of effectively utilizing web search tools.

Key Takeaways

- ODS is an open-source framework designed to enhance the search and reasoning capabilities of any chosen base LLM, whether open-source or accessed via API.

- It consists of two main components: the Open Search Tool, which provides high-quality information retrieval from the web, and the Open Reasoning Agent, which interprets queries and orchestrates tools to find answers.

- The Open Search Tool employs sophisticated methods like query rephrasing, enhanced retrieval formatting, and passage augmentation to improve the quality and diversity of retrieved information compared to simpler approaches.

- The Open Reasoning Agent uses agentic frameworks, specifically ReAct (Reasoning and Acting) and CodeAct (using code for actions), enabling LLMs to perform multi-step reasoning and judiciously decide when and how to use tools like web search and a calculator.

- ODS, particularly ODS-v2 using the CodeAct agent with DeepSeek-R1, achieves state-of-the-art performance on factuality evaluation benchmarks like FRAMES and SimpleQA, in some cases surpassing leading closed-source solutions like GPT-4o Search Preview and Perplexity Sonar Reasoning Pro.

- The open-source nature of ODS fosters community involvement, encourages innovation, and provides a transparent alternative to proprietary systems, potentially helping to mitigate risks associated with generative search like bias and hallucination.

Overview

Search AIs, also referred to as search engine-augmented LLMs, integrate the retrieval-augmented generation (RAG) capabilities of Large Language Models with the ability to access and incorporate real-time information from search engines.

This integration is vital because it overcomes the limitation of LLMs having a static knowledge base, allowing them to provide current and contextually relevant responses. Techniques that feed results from Search Engine Result Page (SERP) APIs directly to an LLM as context have been shown to outperform older methods like self-ask.

Despite these advancements, the field has been largely dominated by closed-source proprietary solutions. This lack of transparency restricts the potential for broader innovation and limits developers and entrepreneurs.

To counter this, Open Deep Search (ODS) is introduced as an open-source framework. ODS is designed to be "plug-and-play," meaning a user can select their base LLM, whether it's an open-source model like Llama3.1-70B or DeepSeek-R1, or a closed-source one accessed via API, and integrate it with ODS's capabilities.

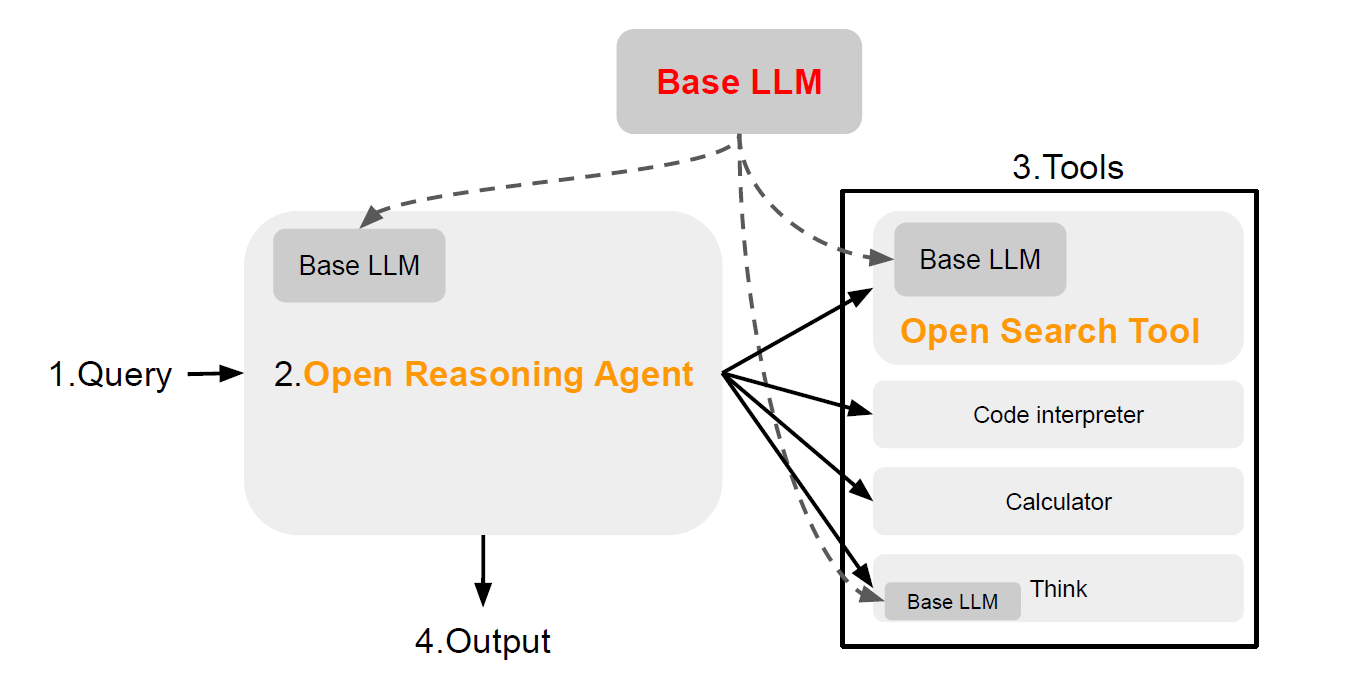

The ODS framework is composed of two principal parts that interact with the chosen base LLM: the Open Search Tool and the Open Reasoning Agent. Figure 1 from the source illustrates this architecture:

Figure 1: ODS Framework Architecture

Figure 1: A user can choose to plug in any base LLM of their choice and harness the benefits of the open-source framework of Open Deep Search (ODS), which consists of two components: Open Search Tool and Open Reasoning Agent. A query is first fed into Open Reasoning Agent which orchestrates the available set of tools to interpret and answer the query. The most important tool is the Open Search Tool that we design, which provides a high quality context from multiple retrieved sources from the web. In our experiments we use Llama3.1-70B and DeepSeek-R1 as our base model.

Figure 1 shows that a user query goes to the Open Reasoning Agent. The Open Reasoning Agent uses the Base LLM and orchestrates various tools, including the Open Search Tool and potentially a Code interpreter, Calculator, and a 'Think' tool (using the Base LLM), to generate an output. Both the Open Reasoning Agent and the Open Search Tool utilize the Base LLM.

The Open Search Tool is a newly designed web search tool intended to improve upon existing open-source alternatives like OpenPerplex and Perplexica. While those tools often pass raw SERP results to the LLM, the Open Search Tool employs a more refined process.

This process has three stages: (1) query rephrasing, to capture implicit context and diversify search queries; (2) retrieval, which fetches results from a SERP API and formats them with metadata like title, URL, description, and date; and (3) augmentation, which extends the LLM's context by scraping relevant passages from the top retrieved webpages, chunking them, and re-ranking the chunks for relevance. The quality of the output from the Open Search Tool is highlighted as critical to ODS's success.
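The retrieval and augmentation steps described above can be sketched in a few lines. This is a minimal illustration, not the ODS implementation: `SerpResult`, `format_result`, `chunk`, `rerank`, and `build_context` are hypothetical names, the word-overlap score is a simple stand-in for a real re-ranking model, and scraped page text is passed in by the caller rather than fetched. Query rephrasing is omitted because it requires an LLM call.

```python
import re
from dataclasses import dataclass


@dataclass
class SerpResult:
    """One search hit; the fields mirror the metadata named in the text."""
    title: str
    url: str
    description: str
    date: str


def format_result(r: SerpResult) -> str:
    """Render a hit as a compact, labeled block for the LLM context."""
    return (f"Title: {r.title}\nURL: {r.url}\n"
            f"Date: {r.date}\nDescription: {r.description}")


def chunk(text: str, max_words: int = 40) -> list[str]:
    """Split scraped page text into fixed-size word windows."""
    words = text.split()
    return [" ".join(words[i:i + max_words])
            for i in range(0, len(words), max_words)]


def tokens(text: str) -> set[str]:
    """Lowercased alphanumeric tokens, used only for overlap scoring."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def rerank(query: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Keep the top_k chunks by word overlap with the query.

    Assumption: overlap is a placeholder for a learned re-ranker.
    """
    q = tokens(query)
    ranked = sorted(chunks, key=lambda c: len(q & tokens(c)), reverse=True)
    return ranked[:top_k]


def build_context(query: str, results: list[SerpResult],
                  pages: dict[str, str], top_k: int = 2) -> str:
    """Combine formatted metadata with the best passages from each page.

    `pages` maps URL -> already-scraped text (hypothetical input).
    """
    parts = []
    for r in results:
        parts.append(format_result(r))
        for passage in rerank(query, chunk(pages.get(r.url, "")), top_k):
            parts.append(f"Passage: {passage}")
    return "\n\n".join(parts)
```

The string returned by `build_context` is what would be placed in the LLM prompt in place of raw SERP output.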

The Open Reasoning Agent is responsible for interpreting the user's query, evaluating retrieved information, and deciding which tools to use to arrive at an answer. The research presents two versions of this agent:

- ODS-v1 (ReAct Agent): Based on the ReAct (Reasoning and Acting) framework, which interleaves reasoning steps (Thoughts) with action execution. This version integrates Chain-of-Thought (CoT) reasoning, few-shot prompting with dynamically selected examples, and tool integration. The ReAct agent follows a structured format of <Question>, <Thought>, <Action>, <Action Input>, and <Observation>, iterating until a <Final_Answer> is reached. The available tools for ODS-v1 include the Open Search Tool (Web Search), a calculator (using the Wolfram Alpha API), and a "Continue Thinking" tool that uses the base LLM to decompose complex queries. If the ReAct agent fails to answer, ODS-v1 can fall back to Chain-of-Thought Self-Consistency (CoT-SC), which samples multiple reasoning paths and selects the most consistent answer.

- ODS-v2 (CodeAct Agent): Based on Chain-of-Code (CoC) and CodeAct, which leverages the LLM's ability to generate and execute code for solving algorithmic, numeric, and symbolic problems. This approach treats tool calling as executable Python code, which is argued to be more natural and composable for LLMs than JSON-based methods. ODS-v2 adapts the CodeAgent framework from SmolAgents and provides it with access to the Open Search Tool and potentially other tools like a code interpreter and calculator.
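The ReAct loop and the CoT-SC fallback described for ODS-v1 can be sketched as follows. This is a hedged illustration under stated assumptions: the one-tag-per-line layout is a guess at how the `<Thought>`/`<Action>` format might be serialized, `llm` and `sample` are hypothetical callables standing in for the base model, and the scripted model and canned search tool exist only to make the example run offline.

```python
import re
from collections import Counter
from typing import Callable, Optional


def parse_step(reply: str) -> dict[str, str]:
    """Collect '<Tag> value' lines from one model reply into a dict."""
    fields = {}
    for line in reply.splitlines():
        m = re.match(r"<([A-Za-z_ ]+)>\s*(.*)", line.strip())
        if m:
            fields[m.group(1)] = m.group(2)
    return fields


def react(question: str, llm: Callable[[str], str],
          tools: dict[str, Callable[[str], str]],
          max_steps: int = 5) -> Optional[str]:
    """Alternate model replies and tool observations until a final answer."""
    transcript = f"<Question> {question}\n"
    for _ in range(max_steps):
        reply = llm(transcript)
        transcript += reply + "\n"
        step = parse_step(reply)
        if "Final_Answer" in step:
            return step["Final_Answer"]
        tool = tools.get(step.get("Action", ""))
        if tool is None:
            break  # unknown or missing action: stop and let the caller fall back
        observation = tool(step.get("Action Input", ""))
        transcript += f"<Observation> {observation}\n"
    return None


def self_consistency(question: str, sample: Callable[[str], str],
                     n: int = 5) -> str:
    """Fallback: sample n independent answers and return the most common."""
    answers = [sample(question) for _ in range(n)]
    return Counter(answers).most_common(1)[0][0]


def scripted_llm(transcript: str) -> str:
    """Hypothetical stand-in for the base LLM: search once, then answer."""
    if "<Observation>" not in transcript:
        return ("<Thought> I should look this up.\n"
                "<Action> web_search\n"
                "<Action Input> capital of France")
    return "<Thought> The observation answers it.\n<Final_Answer> Paris"


tools = {"web_search": lambda q: "Paris is the capital of France."}
answer = react("What is the capital of France?", scripted_llm, tools)
```

Because the model decides at each step whether to emit another `<Action>` or a `<Final_Answer>`, the number of searches varies per query instead of being fixed in advance.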

These two components work together to empower open-source LLMs with advanced search and reasoning capabilities previously more common in proprietary systems.
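To make the CodeAct idea behind ODS-v2 concrete, the sketch below treats a tool call as ordinary Python that the model writes and the agent executes. It does not use the SmolAgents API: `web_search`, `run_generated_code`, and the `final_answer` variable convention are hypothetical, the search tool returns canned toy data, and the `generated` string stands in for model output.

```python
def web_search(query: str) -> str:
    """Hypothetical stand-in for the Open Search Tool; returns toy data."""
    canned = {
        "population of town A": "1200",
        "population of town B": "950",
    }
    return canned.get(query, "")


def run_generated_code(code: str, tools: dict) -> object:
    """Execute model-written Python with only the given tools in scope.

    NOTE: trimming builtins is not a real sandbox; untrusted model output
    should run in an isolated interpreter in any real deployment.
    """
    namespace = {"__builtins__": {"int": int, "float": float, "abs": abs}}
    namespace.update(tools)
    exec(code, namespace)
    return namespace.get("final_answer")


# Stand-in for code an LLM might emit: two searches composed with arithmetic.
generated = """
a = int(web_search("population of town A"))
b = int(web_search("population of town B"))
final_answer = a - b
"""

result = run_generated_code(generated, {"web_search": web_search})
```

The point of the design is composability: two searches and a subtraction fit in one generated snippet, whereas a JSON-based tool protocol would typically need a separate round trip for each call.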

Why it's Important

The dominance of proprietary search AI solutions creates several limitations. Closed-source systems lack transparency, making it difficult to understand how results are generated, what biases might be present, or how information is prioritized.

This opacity can impact user trust and makes auditing for fairness or accuracy challenging. Furthermore, proprietary systems limit external innovation and entrepreneurship as the underlying mechanisms and advancements are not shared, preventing others from building upon them.

Open Deep Search addresses these issues by providing a high-performing, open-source alternative. An open framework allows developers and researchers to inspect, understand, and build upon the technology, fostering community growth and harnessing collective talent. This collaborative environment can accelerate innovation in search AI and lead to the development of new applications and improvements.

Moreover, the open-source approach offers a potential path to address concerns associated with generative search engines, such as susceptibility to bias, hallucination (generating false information), surveillance risks, and the potential to provide dangerous information. By making the system's workings transparent, the community can work together to identify and mitigate these risks more effectively than with opaque, closed systems.

The plug-and-play nature of ODS is also significant. It allows users to integrate the framework with the latest powerful LLMs as they are released, ensuring that ODS can continuously leverage advancements in underlying language models to improve its performance. This adaptability is a key advantage in a field where LLM capabilities are rapidly progressing.

Summary of Results

The research evaluates ODS on two popular factuality benchmarks: SimpleQA and FRAMES.

SimpleQA consists of short-form questions designed to have a single, indisputable answer.

FRAMES contains challenging multi-hop questions requiring the integration of information from multiple sources. Both benchmarks were used to evaluate the accuracy of search AIs with Internet access.

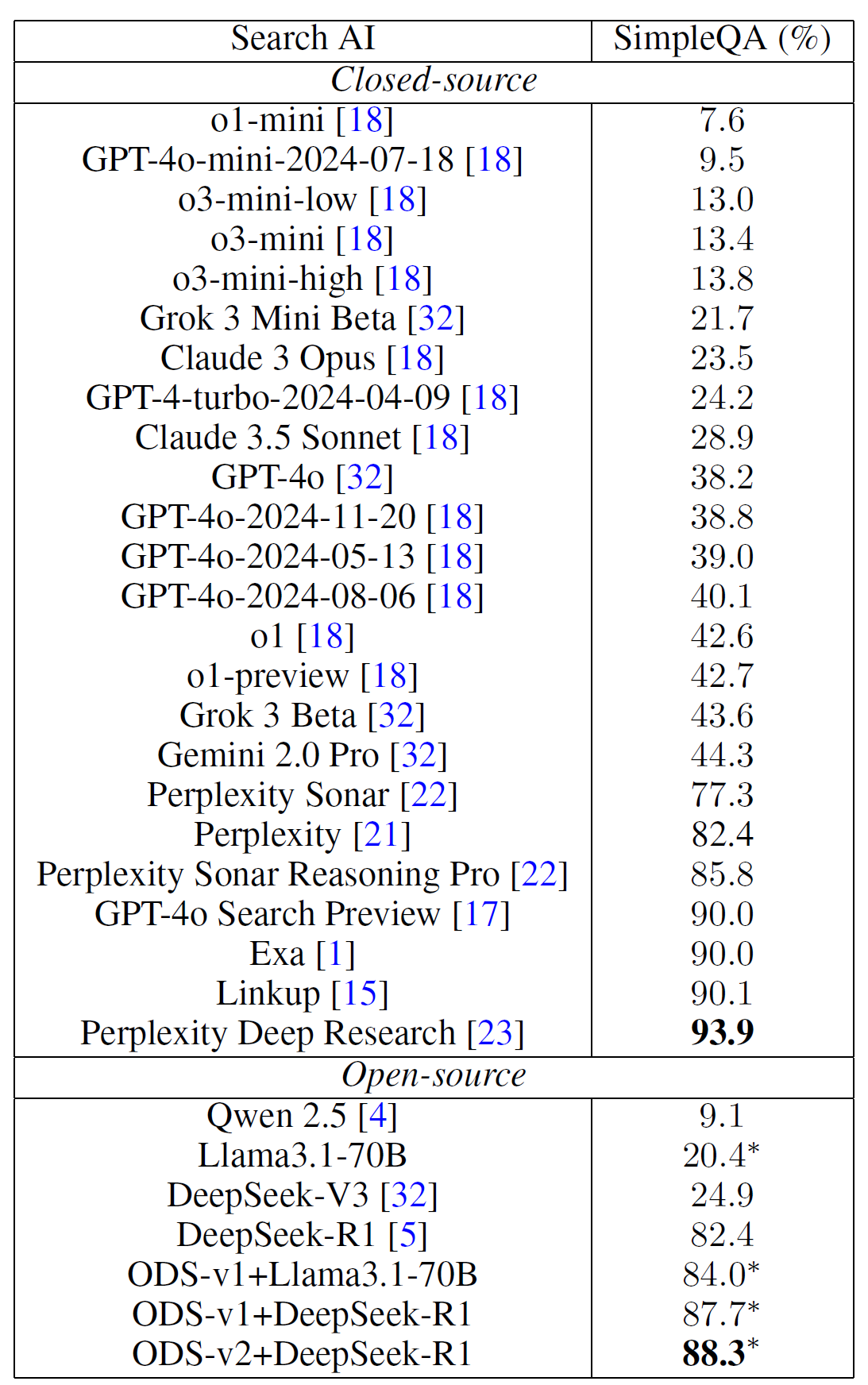

Table 1 presents a comparison of ODS versions (using DeepSeek-R1 as the base LLM unless otherwise specified) against various baselines, including base LLMs without web access and leading closed-source search AIs:

Table 1: Performance Comparison on SimpleQA and FRAMES Benchmarks

Table 1 demonstrates that base LLMs alone have limited performance on these search-dependent tasks, although models like DeepSeek-R1 show strong underlying reasoning capabilities.

- Closed-source search AIs like Perplexity and Perplexity Sonar Reasoning Pro show significantly better performance than base LLMs.

- ODS-v2+DeepSeek-R1 achieves the highest accuracy on the FRAMES benchmark (75.3%), surpassing GPT-4o Search Preview (65.6%) by 9.7 percentage points.

- On the SimpleQA benchmark, ODS-v2+DeepSeek-R1 (88.3%) nearly matches the performance of GPT-4o Search Preview (90.0%) and outperforms both Perplexity (82.4%) and Perplexity Sonar Reasoning Pro (85.8%).
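The gaps quoted in the bullets above are differences in percentage points, which is not the same as a relative improvement. The short calculation below, using only the accuracies stated in the text, shows both views; the helper names are illustrative.

```python
# Accuracy in percent, as quoted in the bullets above.
scores = {
    "FRAMES": {
        "ODS-v2+DeepSeek-R1": 75.3,
        "GPT-4o Search Preview": 65.6,
    },
    "SimpleQA": {
        "ODS-v2+DeepSeek-R1": 88.3,
        "GPT-4o Search Preview": 90.0,
        "Perplexity": 82.4,
        "Perplexity Sonar Reasoning Pro": 85.8,
    },
}

ODS = "ODS-v2+DeepSeek-R1"


def gap_points(bench: str, other: str) -> float:
    """Absolute difference (ODS minus other) in percentage points."""
    return round(scores[bench][ODS] - scores[bench][other], 1)


def relative_gain(bench: str, other: str) -> float:
    """Difference as a percentage of the other system's score."""
    base = scores[bench][other]
    return round(100 * (scores[bench][ODS] - base) / base, 1)


for bench, table in scores.items():
    for name in table:
        if name != ODS:
            print(f"{bench}: ODS-v2 vs {name}: "
                  f"{gap_points(bench, name):+.1f} points, "
                  f"{relative_gain(bench, name):+.1f}% relative")
```

On FRAMES, the 9.7-point lead over GPT-4o Search Preview corresponds to a relative gain of about 14.8%.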

Table 3 provides a broader comparison on SimpleQA, including other published results. It places ODS-v2+DeepSeek-R1 (88.3%) as the best-performing open-source solution presented, although some newer closed-source solutions like Linkup (90.1%) and Perplexity Deep Research (93.9%) report higher scores. The authors note that ODS significantly narrows this gap.

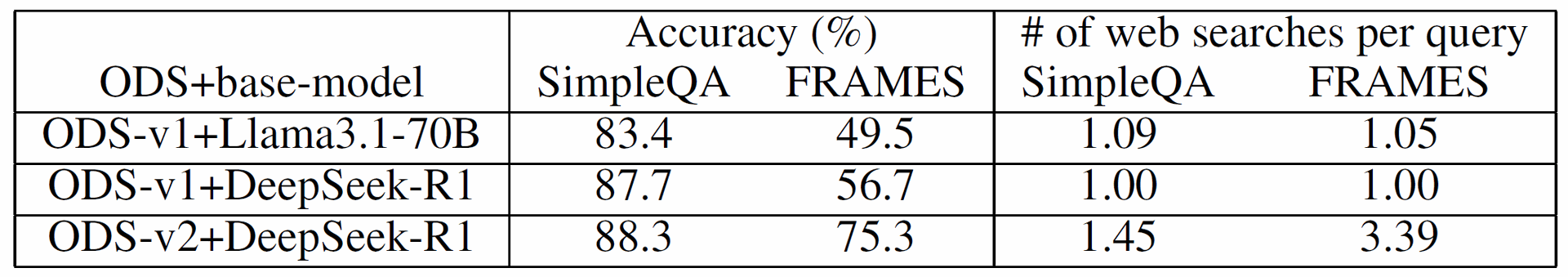

The research also analyzed the agentic behavior of ODS, specifically how often it chooses to perform web searches. Table 2 shows the average number of web searches per query for different ODS configurations:

Table 2: Average Web Searches per Query

Table 2 indicates that ODS adapts its search behavior based on the complexity of the task and the capability of the base model.

- ODS-v2 uses significantly more searches on the more complex FRAMES benchmark (3.39 searches/query) compared to SimpleQA (1.45 searches/query). This demonstrates the agent's ability to judiciously use search when multiple sources or reasoning steps are required.

- ODS-v1 with the weaker Llama3.1-70B model uses slightly more searches than with DeepSeek-R1, suggesting it attempts to compensate for the base model's reasoning limitations by gathering more information. This adaptive search approach is highlighted as more efficient than methods that use a fixed number of searches regardless of the query's difficulty.

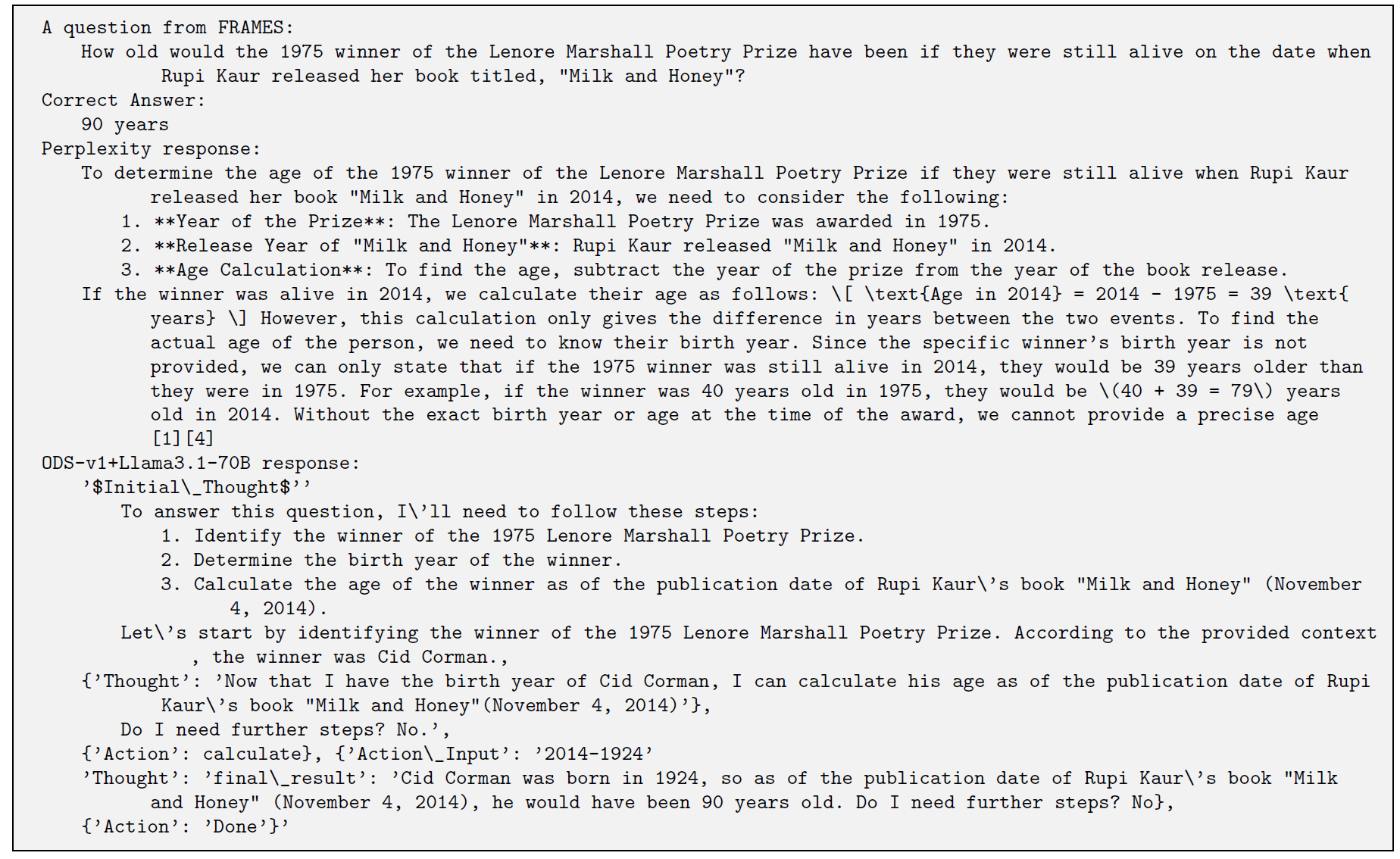

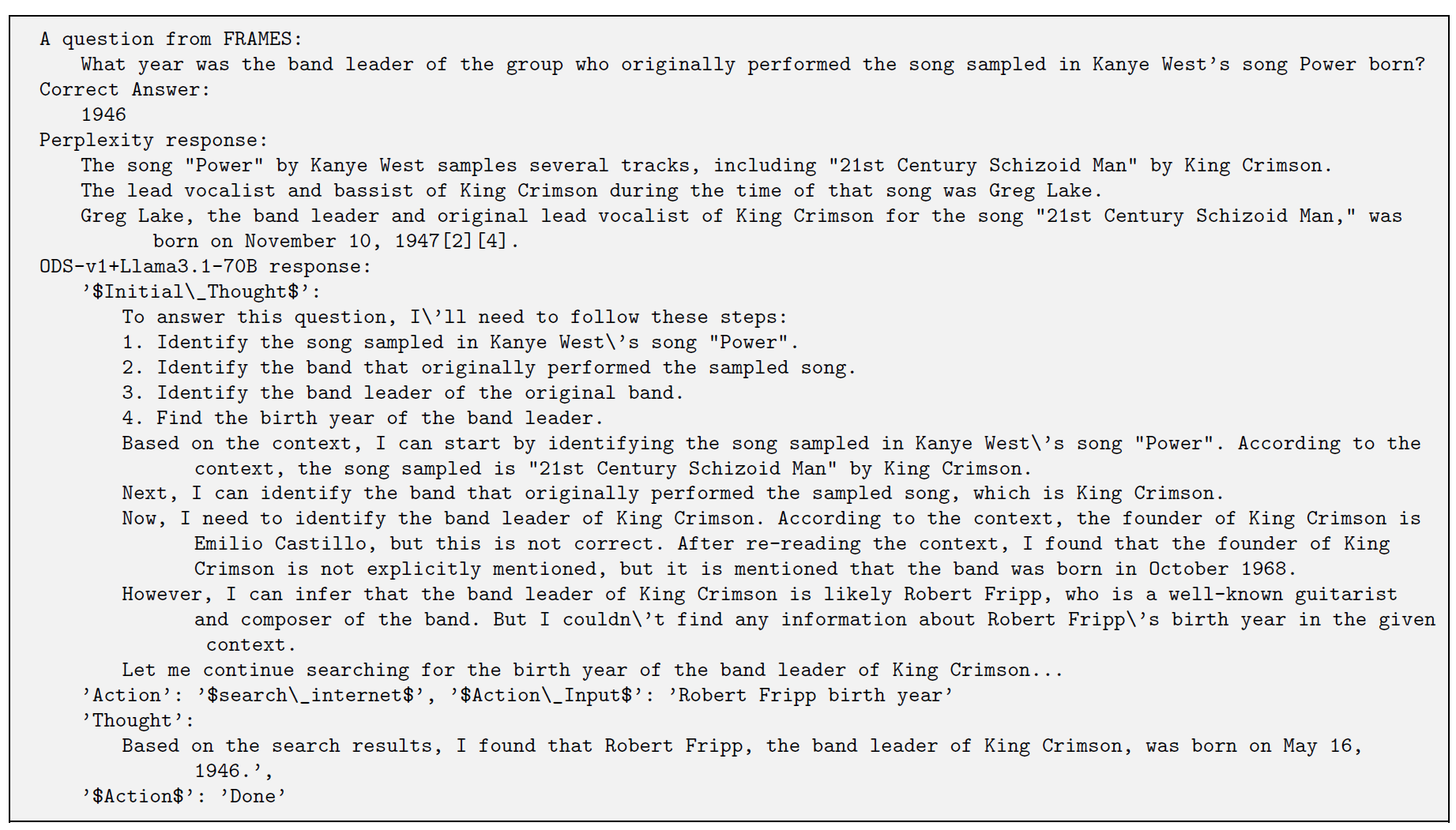

The researchers provide several examples illustrating the ODS agent's reasoning and search capabilities compared to proprietary solutions.

Figure 3 shows a FRAMES example where ODS-v1+Llama3.1-70B correctly calculates the age difference using the Wolfram Alpha tool, resolving a question about a poetry prize winner's age relative to a book release date, while Perplexity Sonar Reasoning Pro gets confused and provides an incorrect age.

Figure 6 demonstrates ODS-v1+Llama3.1-70B recognizing the need for a second search to find the birth year of a band leader (Robert Fripp) after the initial context was insufficient, successfully answering a complex multi-hop question from FRAMES that Perplexity failed to answer. This exemplifies the agent's adaptive search strategy.

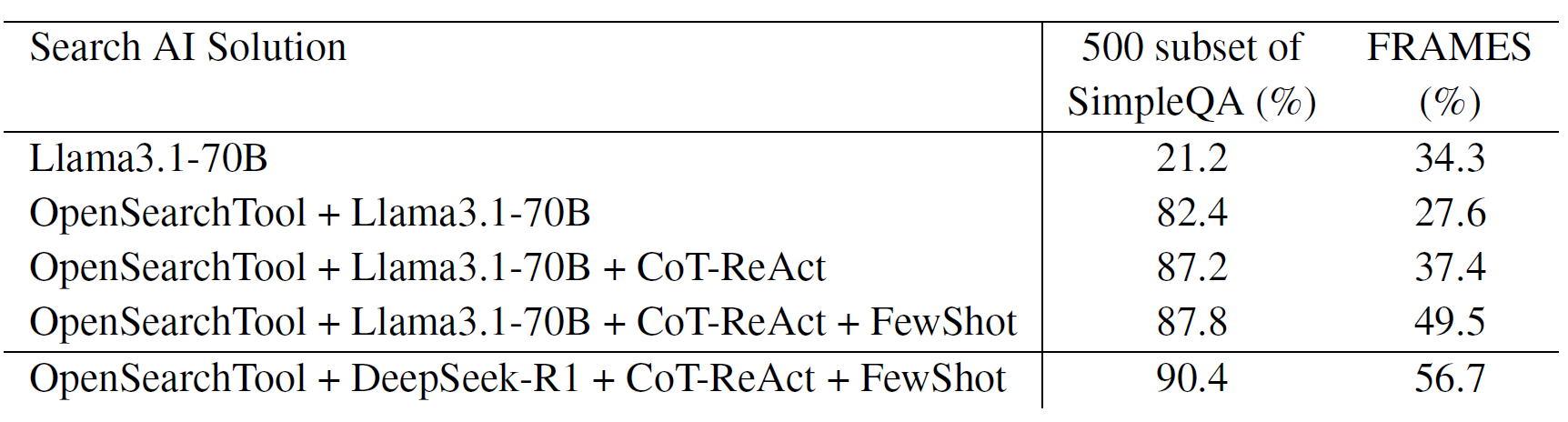

An ablation study on a subset of SimpleQA and FRAMES evaluates the contribution of different components of ODS-v1+Llama3.1-70B. Table 4 shows the results:

Table 4: Ablation Study of ODS-v1 Components

The ablation study reveals that the Base LLM alone (Llama3.1-70B) performs poorly on SimpleQA but better on FRAMES, likely because its built-in reasoning ability carries over to FRAMES's multi-hop questions.

- Adding the Open Search Tool dramatically improves SimpleQA performance (from 21.2% to 82.4%), highlighting the critical role of high-quality retrieval for factual questions. Its initial impact on FRAMES is negative, possibly because raw retrieval isn't sufficient for complex reasoning without proper agentic control.

- Adding the CoT-ReAct agent significantly improves performance on both benchmarks (SimpleQA to 87.2%, FRAMES to 37.4%), showing the value of structured reasoning and tool use.

- Adding Few-shot prompting further boosts performance (SimpleQA to 87.8%, FRAMES to 49.5%), demonstrating the benefit of in-context learning for guiding the agent.

- Finally, replacing Llama3.1-70B with the more powerful DeepSeek-R1 as the base model yields the best performance for this configuration (SimpleQA to 90.4% on the subset, FRAMES to 56.7%), confirming that ODS effectively leverages the reasoning capabilities of the underlying LLM.
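The step-by-step gains implied by the bullets above can be computed directly. The sketch uses only the accuracies quoted in the text; FRAMES scores for the first two configurations are not stated here, so that series starts at the CoT-ReAct step. Note that the last row swaps the base model rather than adding a component.

```python
# (configuration, accuracy in percent) in the order the ablation adds them.
simpleqa = [
    ("Base LLM (Llama3.1-70B)", 21.2),
    ("+ Open Search Tool", 82.4),
    ("+ CoT-ReAct agent", 87.2),
    ("+ few-shot prompting", 87.8),
    ("swap in DeepSeek-R1", 90.4),
]

# Only the FRAMES values quoted in the text are included.
frames_known = [
    ("+ CoT-ReAct agent", 37.4),
    ("+ few-shot prompting", 49.5),
    ("swap in DeepSeek-R1", 56.7),
]


def step_gains(rows):
    """Percentage-point change contributed by each successive step."""
    return [(label, round(score - prev, 1))
            for (_, prev), (label, score) in zip(rows, rows[1:])]


for name, rows in [("SimpleQA", simpleqa), ("FRAMES", frames_known)]:
    for label, gain in step_gains(rows):
        print(f"{name}: {label}: {gain:+.1f} points")
```

Read this way, the Open Search Tool accounts for most of the SimpleQA improvement (61.2 points), while few-shot prompting and the stronger base model matter far more on FRAMES (12.1 and 7.2 points) than on SimpleQA (0.6 and 2.6 points).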

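The ablation study indicates that the components are complementary: the Open Search Tool alone drives most of the SimpleQA gain, while the reasoning agent, few-shot prompting, and a stronger base model are needed to realize the gains on FRAMES.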

Conclusion

Open Deep Search (ODS) successfully addresses the challenge of democratizing AI search by providing a powerful, open-source framework that can compete with and sometimes exceed the performance of leading proprietary solutions.

By augmenting base LLMs with a sophisticated Open Search Tool for high-quality information retrieval and an Open Reasoning Agent capable of complex, adaptive reasoning and tool orchestration, ODS enables state-of-the-art search capabilities within an open framework.

The significant performance gains demonstrated on benchmarks like FRAMES and SimpleQA, coupled with the transparency and collaborative potential of open source, position ODS as a valuable contribution to the field.

The public release of the ODS implementation invites the community to build upon this foundation, fostering continued innovation and offering a transparent alternative in the evolving landscape of AI-powered search. The adaptive nature of the reasoning agent and the enhanced retrieval process of the search tool are key technical innovations that contribute to ODS's success in tackling complex search and reasoning tasks.

Open Deep Search: An Open-Source Framework for Advanced AI Search

Open Deep Search: Democratizing Search with Open-source Reasoning Agents