What if your smartwatch not only monitored your heart rate but also explained every spike and dip? SensorLM, a family of sensor-language models from Google Research, aims to do exactly that by translating streams of raw wearable sensor data into clear, natural-language descriptions of our activities and well-being.

The Challenge: Making Sense of Sensor Data

Wearable devices collect a wealth of information, such as steps taken, heart rate, and sleep quality. However, they often lack the crucial context that explains why our bodies behave the way they do.

Without that context, rows of raw numbers are hard for users to interpret. The main barrier to teaching models to explain them? Building large-scale datasets that pair sensor signals with human-language annotations, which is time-consuming and difficult to scale.

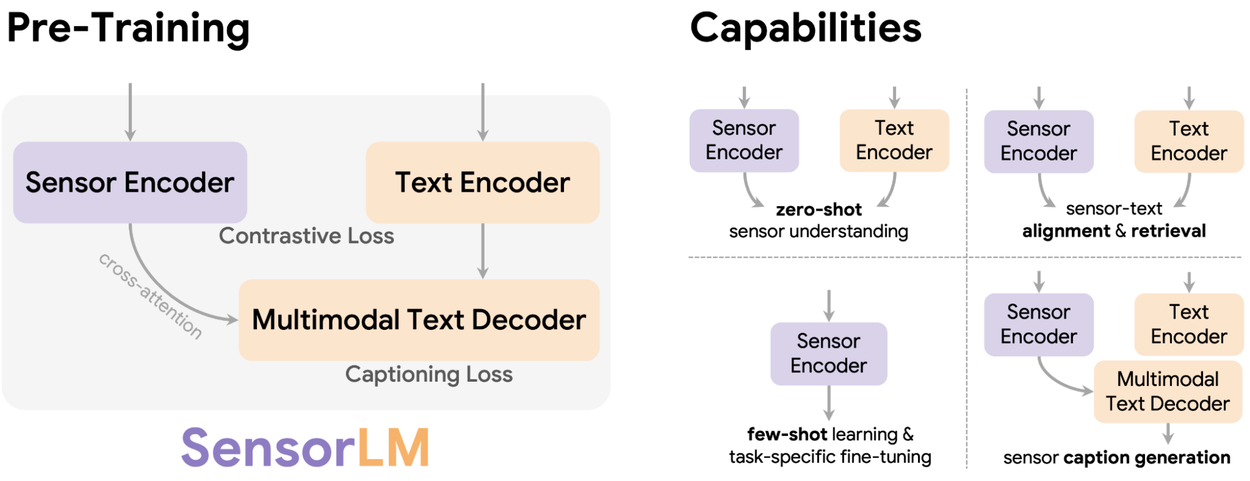

SensorLM pre-training enables new capabilities for personalized insights, such as zero-shot sensor understanding, sensor-text alignment and retrieval, few-shot learning, and sensor caption generation. Credit: Google

SensorLM’s Breakthrough: Data and Language Converge

SensorLM addresses this gap by training on a massive dataset: nearly 60 million hours of de-identified sensor data from more than 100,000 Fitbit and Pixel Watch users worldwide. With this resource, SensorLM learns to translate complex sensor streams into easy-to-understand narratives about health and activity.

Automated Annotation at Scale

To bypass the manual annotation bottleneck, the SensorLM team developed an automated, hierarchical pipeline that generates descriptive captions directly from the sensor data by calculating statistics, identifying trends, and summarizing key events. The result is the largest and most richly annotated sensor-language dataset of its kind.
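The three levels of that pipeline (statistics, trends, events) can be illustrated with a toy captioner. This is a minimal sketch, not the SensorLM team's pipeline: the function names, the half-window trend test, the 10% threshold, and the caption templates are all hypothetical choices made for illustration.

```python
from statistics import mean


def describe_statistics(name, values, unit):
    """Level 1 (hypothetical): summary statistics for one sensor channel."""
    return (f"{name} averaged {mean(values):.0f} {unit} "
            f"(min {min(values):.0f}, max {max(values):.0f}).")


def describe_trend(name, values, threshold=0.1):
    """Level 2 (hypothetical): compare the means of the first and second halves.

    Assumes the window holds at least two samples.
    """
    half = len(values) // 2
    first, last = mean(values[:half]), mean(values[half:])
    change = (last - first) / max(abs(first), 1e-9)  # relative change, guarded against /0
    if change > threshold:
        return f"{name} rose over the window."
    if change < -threshold:
        return f"{name} fell over the window."
    return f"{name} stayed roughly stable."


def describe_events(events):
    """Level 3 (hypothetical): describe logged events given as (label, start, end)."""
    if not events:
        return "No activities were logged."
    parts = [f"{label} from {start} to {end}" for label, start, end in events]
    return "Logged activities: " + "; ".join(parts) + "."


def caption_window(channels, events):
    """Assemble one caption: per-channel statistics and trends, then events."""
    sentences = []
    for name, (values, unit) in channels.items():
        sentences.append(describe_statistics(name, values, unit))
        sentences.append(describe_trend(name, values))
    sentences.append(describe_events(events))
    return " ".join(sentences)


if __name__ == "__main__":
    heart_rate = [62, 64, 63, 65, 110, 128, 135, 131]  # toy per-minute values
    steps = [0, 0, 5, 0, 140, 160, 155, 150]
    print(caption_window(
        {"Heart rate": (heart_rate, "bpm"), "Steps": (steps, "steps/min")},
        [("Running", "07:10", "07:40")],
    ))
```

Templated captions like these are cheap to produce for millions of hours of data, which is the point of automating annotation; a real pipeline would presumably use far richer statistics and event sources.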

How SensorLM Works: Multimodal Machine Learning

SensorLM unifies two multimodal pre-training approaches:

- Contrastive Learning: The model learns to match each segment of sensor data with its corresponding text description, so that it can distinguish activities such as “light swimming” from a “strength workout.”

- Generative Pre-training: SensorLM learns to generate natural-language captions directly from sensor input, producing context-aware descriptions of what the data shows.
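For intuition on the contrastive objective, here is a CLIP-style symmetric InfoNCE loss written with NumPy. It assumes each sensor window and its caption have already been embedded into vectors of the same size by encoders that are not shown; the temperature of 0.07 and this exact loss form are assumptions borrowed from common CLIP-style setups, not details confirmed for SensorLM.

```python
import numpy as np


def l2_normalize(x, eps=1e-8):
    """Scale each row to unit length so dot products are cosine similarities."""
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)


def log_softmax(logits, axis):
    """Numerically stable log-softmax along the given axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def contrastive_loss(sensor_emb, text_emb, temperature=0.07):
    """Symmetric InfoNCE over a batch.

    Row i of sensor_emb and row i of text_emb form the positive pair; every
    other caption (or sensor window) in the batch acts as a negative.
    """
    logits = l2_normalize(sensor_emb) @ l2_normalize(text_emb).T / temperature
    idx = np.arange(len(logits))
    loss_sensor_to_text = -log_softmax(logits, axis=1)[idx, idx].mean()
    loss_text_to_sensor = -log_softmax(logits, axis=0)[idx, idx].mean()
    return float((loss_sensor_to_text + loss_text_to_sensor) / 2)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    sensor = rng.normal(size=(4, 16))                # toy sensor embeddings
    text = sensor + 0.1 * rng.normal(size=(4, 16))   # captions close to their own windows
    print(contrastive_loss(sensor, text))            # low: pairs are aligned
    print(contrastive_loss(sensor, text[::-1]))      # high: captions shuffled
```

Because each window's own caption is the only positive in its row and column, the loss is small when matched pairs sit close together and grows sharply when captions are mismatched.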

Combining the two objectives in a single framework lets SensorLM both align wearable sensor data with human language and describe that data in words.
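Generative captioning objectives are commonly trained with teacher-forced next-token cross-entropy. The sketch below assumes a decoder (not shown) has already produced per-token logits from sensor input, and combines the result with a contrastive term through a weighted sum in the style of CoCa; the `caption_weight` parameter and this particular combination are hypothetical, not SensorLM's published recipe.

```python
import numpy as np


def captioning_loss(token_logits, target_ids):
    """Mean teacher-forced next-token cross-entropy for one caption.

    token_logits: (seq_len, vocab) scores from an assumed sensor-conditioned decoder.
    target_ids: (seq_len,) ground-truth caption token ids.
    """
    shifted = token_logits - token_logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-log_probs[np.arange(len(target_ids)), target_ids].mean())


def total_loss(contrastive_term, captioning_term, caption_weight=1.0):
    """Hypothetical CoCa-style objective: weighted sum of the two losses."""
    return contrastive_term + caption_weight * captioning_term


if __name__ == "__main__":
    vocab_size, targets = 5, np.array([0, 3, 1])
    uninformed = np.zeros((3, vocab_size))        # uniform guess: loss equals ln(5)
    confident = np.full((3, vocab_size), -10.0)
    confident[np.arange(3), targets] = 10.0       # near-certain correct tokens
    print(captioning_loss(uninformed, targets), captioning_loss(confident, targets))
    print(total_loss(0.8, captioning_loss(confident, targets), caption_weight=2.0))
```

The contrastive term can be any scalar batch loss, such as a symmetric InfoNCE value; the weighted sum simply lets one set of encoder parameters be pushed toward both alignment and description at once.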

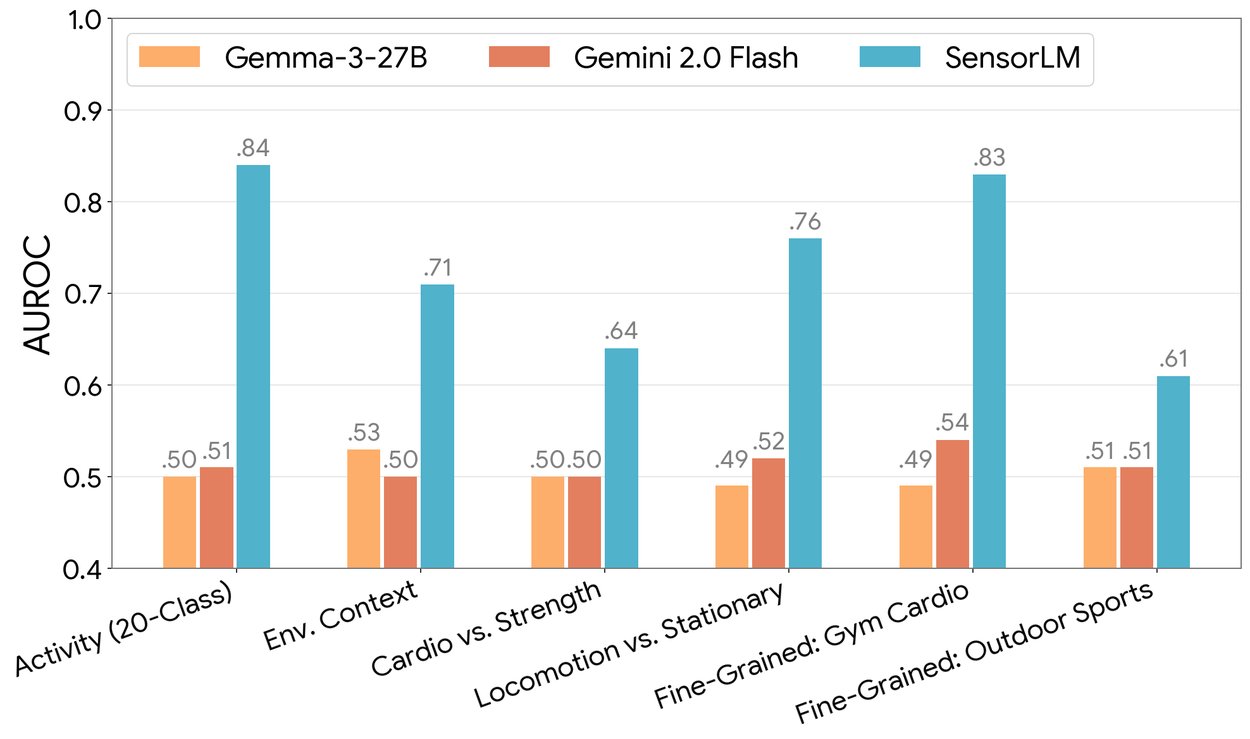

In zero-shot human activity recognition, SensorLM performs strongly across tasks (measured by AUROC), while baseline LLMs perform near chance level. Credit: Google

Performance: From Lab to Real Life

SensorLM excels in several practical applications:

- Zero-Shot Activity Recognition: The model can recognize activities without being trained on the specific classification task, removing the need for additional labeled data.

- Few-Shot Learning: With only a handful of labeled examples, SensorLM adapts quickly to new users or activities, supporting personalized experiences.

- Cross-Modal Retrieval: Users can search for sensor patterns using text or retrieve language descriptions from sensor data, making information accessible for both experts and everyday users.
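All three capabilities can be illustrated with nearest-neighbor operations in a shared sensor-text embedding space. In this sketch the embeddings are hand-made toy vectors standing in for encoder outputs; `nearest_label`, `retrieve`, and `few_shot_prototypes` are hypothetical helpers, and the nearest-centroid approach to few-shot learning is one common technique, not necessarily the one SensorLM uses.

```python
import numpy as np


def normalize(x):
    """Scale vectors to unit length so dot products become cosine similarities."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def nearest_label(query_emb, reference_embs, names):
    """Return the name whose reference embedding is most similar to the query."""
    scores = normalize(reference_embs) @ normalize(query_emb)
    return names[int(np.argmax(scores))]


def retrieve(query_emb, candidate_embs, k=2):
    """Indices of the k most similar candidates (text->sensor or sensor->text)."""
    scores = normalize(candidate_embs) @ normalize(query_emb)
    return np.argsort(-scores)[:k].tolist()


def few_shot_prototypes(support_embs, support_labels):
    """Average the few labeled embeddings of each class into one prototype."""
    label_arr = np.array(support_labels)
    classes = sorted(set(support_labels))
    protos = np.stack([support_embs[label_arr == c].mean(axis=0) for c in classes])
    return classes, protos


if __name__ == "__main__":
    # Zero-shot: compare a sensor window against text embeddings of activity names.
    labels = ["running", "swimming", "sleeping"]
    label_embs = np.array([[1.0, 0.1, 0.0], [0.1, 1.0, 0.0], [0.0, 0.1, 1.0]])
    window = np.array([0.9, 0.2, 0.1])
    print(nearest_label(window, label_embs, labels))  # running
    print(retrieve(window, label_embs, k=2))  # [0, 1]

    # Few-shot: two labeled windows per class, then classify by nearest prototype.
    support = np.array([[0.0, 1.0, 0.2], [0.1, 0.9, 0.0], [1.0, 0.0, 0.9], [0.8, 0.1, 1.0]])
    classes, protos = few_shot_prototypes(support, ["cycling", "cycling", "yoga", "yoga"])
    print(nearest_label(np.array([0.05, 1.0, 0.1]), protos, classes))  # cycling
```

Zero-shot recognition and retrieval reuse the same similarity scores: classification picks the single best text match, while retrieval returns a ranked list.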

Compared to general-purpose language models, SensorLM produces more precise, coherent, and detailed captions, as measured by metrics such as BERTScore, and more accurate activity recognition, as measured by AUROC.
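AUROC, the metric behind the activity-recognition comparisons, has a simple interpretation: the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one. That is why a score near 0.5 corresponds to random guessing. A minimal pairwise implementation, for illustration only:

```python
def auroc(scores, labels):
    """Fraction of (positive, negative) pairs ranked correctly; ties count half.

    labels are 1 for positive and 0 for negative examples.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    print(auroc([0.9, 0.8, 0.3, 0.2], [1, 1, 0, 0]))  # 1.0: perfect ranking
    print(auroc([0.5, 0.5, 0.5, 0.5], [1, 0, 1, 0]))  # 0.5: no better than chance
```

In practice, `sklearn.metrics.roc_auc_score` computes the same quantity more efficiently; the quadratic loop here is just for readability.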

Scaling Up and Future Directions

SensorLM’s performance improves consistently as training data, model size, and compute increase. These scaling trends suggest room for broader capabilities, and the team plans to expand into new health domains such as metabolic tracking and advanced sleep analysis.

The Path Forward: Personalized Digital Health

By converting sensor data into actionable language, SensorLM lays the groundwork for intelligent health apps, digital coaches, and clinical tools that communicate naturally with users. The model’s ability to contextualize and explain data could revolutionize how we interact with wearable technology, making health insights more accessible and meaningful than ever before.

Conclusion

SensorLM represents a significant leap toward making wearable data truly comprehensible and useful. By equipping AI with the tools to "speak" the language of our bodies, SensorLM opens the door to more personalized, actionable health information, transforming the way we understand and manage our well-being.

Source: Google Research Blog


SensorLM: Learning the Language of Wearable Sensors