As large language models (LLMs) become central to artificial intelligence, crafting effective prompts remains a major hurdle. Manual prompt engineering is often slow, resource-intensive, and dependent on specialized expertise, which puts advanced AI out of reach for many innovators. The Promptomatix framework aims to level the playing field by automating and streamlining every step of prompt optimization.

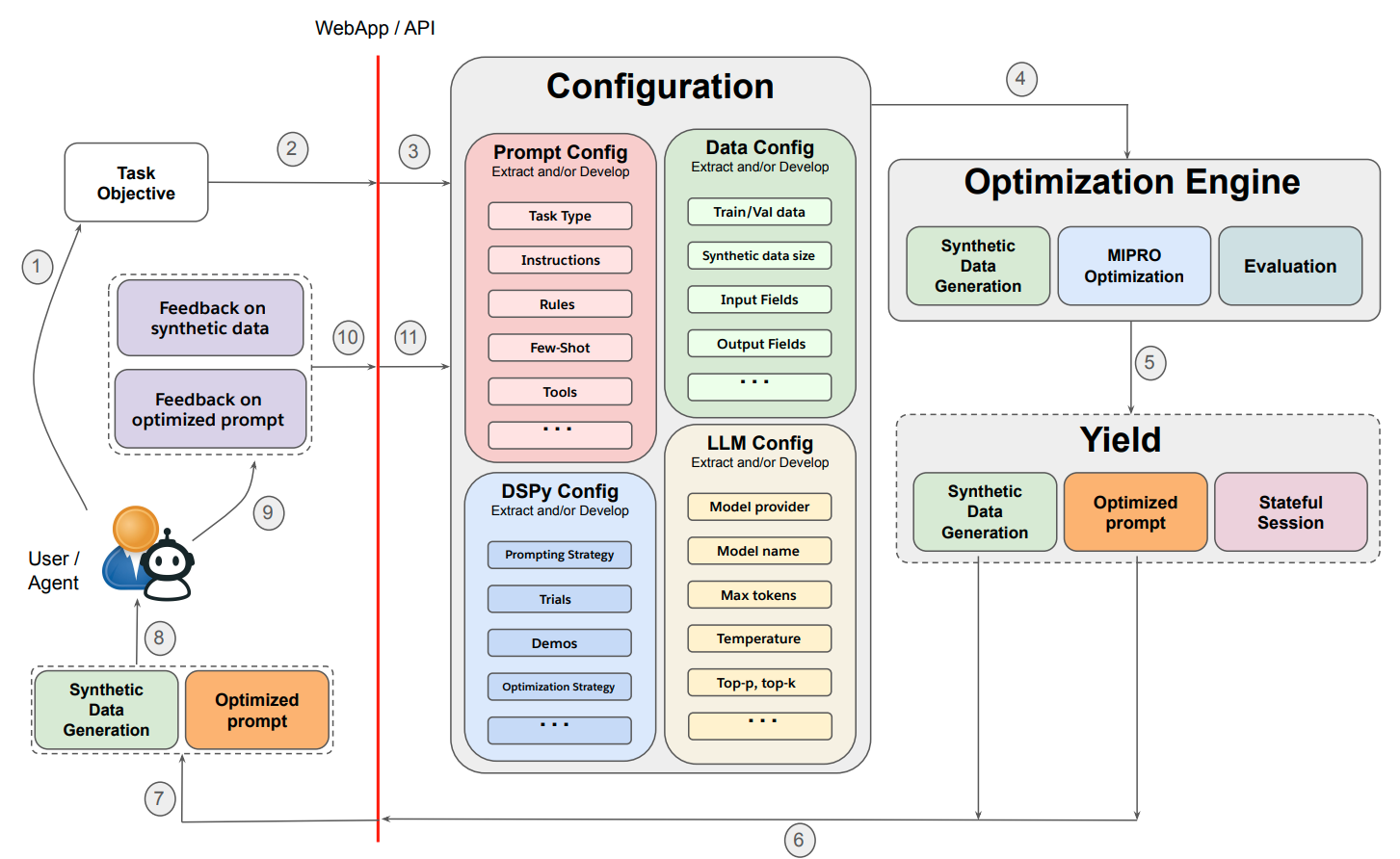

Figure 1: Promptomatix System Architecture: The complete optimization pipeline showing Configuration, Optimization Engine, Yield, and Feedback components. Credit: Paper

What Sets Promptomatix Apart?

- Zero-Configuration Automation: With Promptomatix, users need only describe their task in plain language. The framework handles prompt optimization from start to finish, from intent analysis to outcome evaluation.

- Automated Synthetic Data: It generates tailored, high-quality datasets without the need for manual collection, accelerating development for niche or emerging use cases.

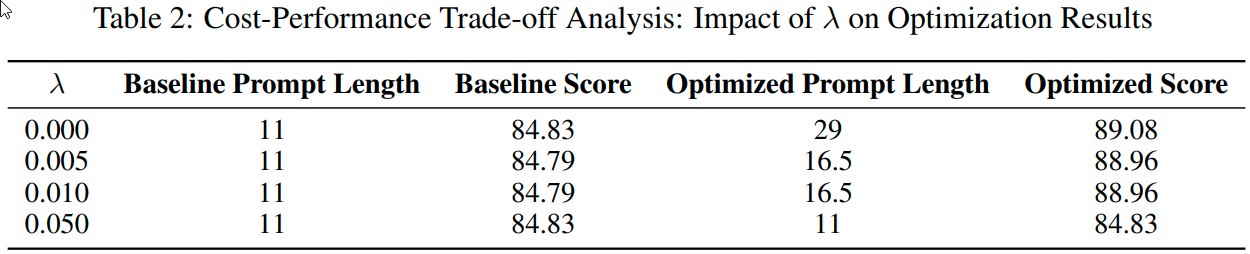

- Cost-Aware Optimization: Promptomatix allows users to balance prompt quality with computational efficiency, providing flexibility for both high-stakes and cost-sensitive scenarios.

- Framework-Agnostic Flexibility: Because it supports multiple optimization engines, such as DSPy and meta-prompting, Promptomatix can adapt to evolving AI technologies and user needs.

- Superior Performance: In the authors' evaluations, Promptomatix matches or outperforms existing approaches while producing shorter prompts and using fewer computational resources.

- Broad Accessibility: Thanks to intuitive interfaces and automated workflows, both technical experts and domain specialists can optimize prompts without prompt-engineering expertise.
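To illustrate what "framework-agnostic" can mean in practice, here is a minimal sketch that assumes a hypothetical `PromptOptimizer` interface implemented by every backend. The two classes are toy stand-ins, not DSPy's API or the paper's meta-prompting code: one prepends an instruction, the other appends few-shot demonstrations. All names (`PromptOptimizer`, `BACKENDS`, `run_backend`) are illustrative.

```python
from typing import Callable, Dict, List, Protocol


class PromptOptimizer(Protocol):
    """Hypothetical interface that every optimization backend implements."""

    def optimize(self, prompt: str, examples: List[Dict[str, str]]) -> str: ...


class InstructionPrefixOptimizer:
    """Toy stand-in for a meta-prompting backend: prepends a fixed instruction."""

    def optimize(self, prompt: str, examples: List[Dict[str, str]]) -> str:
        return "Think step by step. " + prompt


class FewShotOptimizer:
    """Toy stand-in for a demonstration-based backend: appends up to k examples."""

    def __init__(self, k: int = 2) -> None:
        self.k = k

    def optimize(self, prompt: str, examples: List[Dict[str, str]]) -> str:
        demos = "\n".join(
            f"Input: {ex['input']}\nOutput: {ex['output']}" for ex in examples[: self.k]
        )
        return f"{prompt}\n\n{demos}" if demos else prompt


# Registry of interchangeable backends (hypothetical); adding an engine means adding an entry.
BACKENDS: Dict[str, Callable[[], PromptOptimizer]] = {
    "meta_prompt": InstructionPrefixOptimizer,
    "few_shot": FewShotOptimizer,
}


def run_backend(name: str, prompt: str, examples: List[Dict[str, str]]) -> str:
    if name not in BACKENDS:
        raise ValueError(f"unknown backend: {name}")
    return BACKENDS[name]().optimize(prompt, examples)


print(run_backend("meta_prompt", "Classify the sentiment of the review.", []))
# Think step by step. Classify the sentiment of the review.
```

Because callers depend only on the `optimize` method, a new engine can be registered without touching the rest of the pipeline.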

Eliminating the Roadblocks of Prompt Engineering

Prompt quality directly influences LLM performance, yet traditional prompt-engineering methods are laborious and inaccessible to many practitioners. Promptomatix tackles these barriers by:

- Automating complex prompt design, so no expert intervention is needed

- Reducing trial-and-error unpredictability so that outputs are more reliable

- Minimizing resource consumption to lower operational costs

- Integrating automated evaluation for scalable, task-specific assessment

- Generating synthetic training data to address data scarcity in specialized domains
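Automated, task-specific evaluation can be sketched as a lookup from task type to a scoring function. This sketch assumes two simple metrics, exact match and token-level F1, and a hypothetical task-to-metric mapping; the metrics Promptomatix actually uses may differ.

```python
import re
from collections import Counter
from typing import Callable, Dict, List


def _tokens(text: str) -> List[str]:
    # Lowercase and keep alphanumeric runs, so punctuation and case are ignored.
    return re.findall(r"[a-z0-9]+", text.lower())


def exact_match(pred: str, ref: str) -> float:
    return float(_tokens(pred) == _tokens(ref))


def token_f1(pred: str, ref: str) -> float:
    # Harmonic mean of token precision and recall (bag-of-words overlap).
    p, r = _tokens(pred), _tokens(ref)
    common = sum((Counter(p) & Counter(r)).values())
    if common == 0:
        return 0.0
    precision, recall = common / len(p), common / len(r)
    return 2 * precision * recall / (precision + recall)


# Hypothetical mapping from task type to metric.
METRICS: Dict[str, Callable[[str, str], float]] = {
    "classification": exact_match,
    "math": exact_match,
    "qa": token_f1,
    "summarization": token_f1,
}


def evaluate(task: str, preds: List[str], refs: List[str]) -> float:
    """Average the task's metric over paired predictions and references."""
    if not preds or len(preds) != len(refs):
        raise ValueError("need non-empty, equal-length prediction and reference lists")
    metric = METRICS[task]
    return sum(metric(p, r) for p, r in zip(preds, refs)) / len(preds)


print(evaluate("classification", ["Positive", "negative"], ["positive", "positive"]))  # 0.5
```

Strict metrics suit tasks with a single correct answer, while overlap-based metrics give partial credit for free-form outputs such as summaries.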

Inside the Promptomatix Architecture

The framework is built on a modular, user-centric design, with four key components:

- Configuration: Extracts task requirements from minimal input, determining strategies and settings with help from advanced LLMs acting as “teachers.”

- Optimization Engine: Coordinates prompt refinement, data generation, and evaluation, factoring in cost constraints through adjustable parameters.

- Yield: Handles prompt delivery, version tracking, and ongoing performance monitoring for transparency and improvement.

- Feedback: Supports iterative prompt enhancement using both user input and AI-generated suggestions, even when explicit feedback is missing.
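The flow through the four components can be sketched as follows. Every function and class here (`configure`, `refine`, `YieldStore`, `run_pipeline`) is hypothetical, and the intent-analysis and refinement steps are deliberately trivial placeholders for what would be teacher-LLM calls in a real system.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Config:
    """Output of the (hypothetical) Configuration stage."""
    task: str
    instruction: str


@dataclass
class PromptVersion:
    version: int
    prompt: str
    score: float


@dataclass
class YieldStore:
    """Yield stage: records every delivered prompt so versions can be compared."""
    history: List[PromptVersion] = field(default_factory=list)

    def record(self, prompt: str, score: float) -> PromptVersion:
        entry = PromptVersion(len(self.history) + 1, prompt, score)
        self.history.append(entry)
        return entry

    def best(self) -> Optional[PromptVersion]:
        # max() returns the earliest version among ties.
        return max(self.history, key=lambda v: v.score, default=None)


def configure(description: str) -> Config:
    # Placeholder intent analysis; a real system would query a teacher LLM.
    task = "classification" if "classif" in description.lower() else "generation"
    return Config(task=task, instruction=description.strip())


def refine(prompt: str, feedback: str) -> str:
    # Placeholder optimization step: fold one piece of feedback into the prompt.
    return f"{prompt} {feedback}".strip()


def run_pipeline(
    description: str,
    score_fn: Callable[[str], float],
    feedback: List[str],
) -> YieldStore:
    cfg = configure(description)  # Configuration
    store = YieldStore()  # Yield
    prompt = cfg.instruction
    store.record(prompt, score_fn(prompt))
    for item in feedback:  # Feedback feeding back into the Optimization Engine
        prompt = refine(prompt, item)
        store.record(prompt, score_fn(prompt))
    return store


store = run_pipeline(
    "Classify support tickets by urgency.",
    score_fn=lambda p: 1.0 if "labels:" in p else 0.5,  # toy scorer
    feedback=["Use the labels: high, low.", "Answer with one word."],
)
print([(v.version, v.score) for v in store.history])  # [(1, 0.5), (2, 1.0), (3, 1.0)]
print(store.best().version)  # 2
```

Keeping every version in the Yield store is what makes it possible to roll back to an earlier prompt when a later refinement scores no better.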

Why This Matters for the Future of AI

Promptomatix’s automation is a powerful addition to the developer's toolbox for real-world LLM deployment. It bridges the gap between cutting-edge AI and everyday users, accelerating innovation across industries previously hindered by prompt engineering bottlenecks. Automated synthetic data generation is especially transformative for applications with limited labeled data, enabling faster prototyping and deployment.
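Here is a minimal sketch of a synthetic-data step, assuming the generator is an injected function (standing in for a teacher LLM) that returns one input/output pair per call. The sketch covers only the bookkeeping around generation: deduplicating inputs, capping retries, and splitting into training and validation sets. `synthesize_dataset` and `toy_generator` are hypothetical names.

```python
import random
from typing import Callable, Dict, List, Tuple

Example = Dict[str, str]


def synthesize_dataset(
    description: str,
    generate_fn: Callable[[str, int], Example],
    n: int,
    val_fraction: float = 0.2,
    seed: int = 0,
    max_attempts_factor: int = 5,
) -> Tuple[List[Example], List[Example]]:
    """Collect up to n unique examples, then shuffle and split into train/val."""
    seen = set()
    examples: List[Example] = []
    attempts = 0
    while len(examples) < n and attempts < n * max_attempts_factor:
        ex = generate_fn(description, attempts)
        attempts += 1
        key = ex["input"].strip().lower()
        if key in seen:
            continue  # skip duplicate inputs (case-insensitive)
        seen.add(key)
        examples.append(ex)
    random.Random(seed).shuffle(examples)
    n_val = max(1, int(len(examples) * val_fraction)) if examples else 0
    return examples[n_val:], examples[:n_val]


def toy_generator(description: str, i: int) -> Example:
    # Stand-in for a teacher-LLM call; only three distinct outputs exist.
    label = ["positive", "negative", "neutral"][i % 3]
    return {"input": f"A {label} product review.", "output": label}


train, val = synthesize_dataset("Classify product reviews by sentiment.", toy_generator, n=5)
print(len(train), len(val))  # 2 1
```

The retry cap matters in practice: when a generator keeps repeating itself, the function returns fewer than `n` examples instead of looping forever.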

Cost-aware optimization is another critical advantage. Organizations can control the balance between model performance and resource expenditure, making large-scale LLM deployments more economically viable. The modular design helps Promptomatix stay relevant as new models and optimization strategies emerge, protecting investments in AI infrastructure.
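One simple way to express a cost-quality trade-off is to rank candidate prompts by quality minus a weighted length penalty. This sketch assumes that prompt length (approximated by whitespace-separated words) is the cost and that `lam` is a hypothetical knob standing in for the adjustable parameter; the paper's exact objective may be defined differently.

```python
from typing import Dict, List


def cost_aware_score(quality: float, prompt: str, lam: float, scale: float = 100.0) -> float:
    """Quality minus a length penalty; lam = 0 ignores cost entirely."""
    approx_tokens = len(prompt.split())  # crude proxy: whitespace-separated words
    return quality - lam * approx_tokens / scale


def select_prompt(candidates: List[Dict], lam: float) -> Dict:
    # Pick the candidate with the best quality/cost trade-off for this lam.
    return max(candidates, key=lambda c: cost_aware_score(c["quality"], c["prompt"], lam))


candidates = [
    {"name": "long", "prompt": " ".join(["word"] * 60), "quality": 0.90},
    {"name": "short", "prompt": " ".join(["word"] * 10), "quality": 0.86},
]
print(select_prompt(candidates, lam=0.0)["name"])  # long
print(select_prompt(candidates, lam=0.5)["name"])  # short
```

Raising `lam` shifts the choice toward shorter, cheaper prompts; setting it to zero recovers pure quality maximization.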

Proven Results and Comprehensive Features

The authors' evaluation on question answering, math reasoning, text generation, classification, and summarization shows that Promptomatix consistently matches or surpasses manual and existing automated methods while being more efficient.

- Performance matches or exceeds the strongest baselines on the benchmarks tested.

- The framework enables flexible trade-offs between cost and quality, adaptable to both intensive and constrained environments.

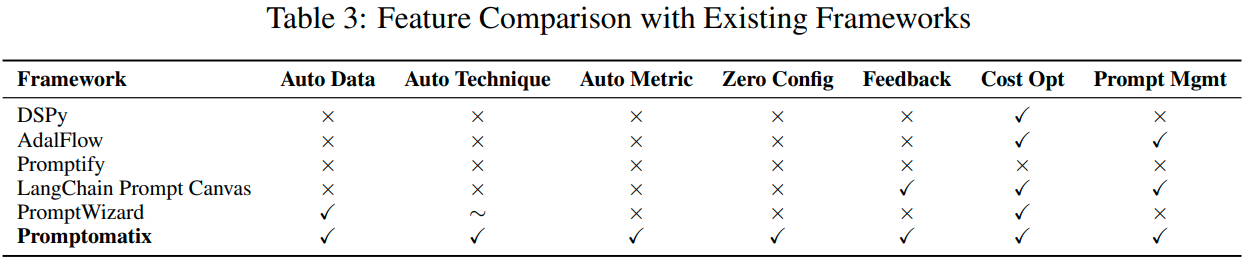

- The paper's feature comparison highlights Promptomatix as the only framework among those compared that offers end-to-end automation, automatic data generation, feedback integration, and cost management in a single package.

Takeaway: Lowering Barriers, Raising Standards

By automating every stage of the prompt optimization pipeline, Promptomatix empowers more users to leverage the capabilities of LLMs. Its blend of intelligent data generation, cost optimization, and accessibility sets a new standard for AI development. As organizations seek scalable, efficient LLM solutions, Promptomatix provides a robust, future-ready platform to accelerate AI-driven innovation.

Source: Original research article on the Promptomatix framework

Promptomatix Automates and Democratizes Prompt Engineering for LLMs

Promptomatix: An Automatic Prompt Optimization Framework for Large Language Models