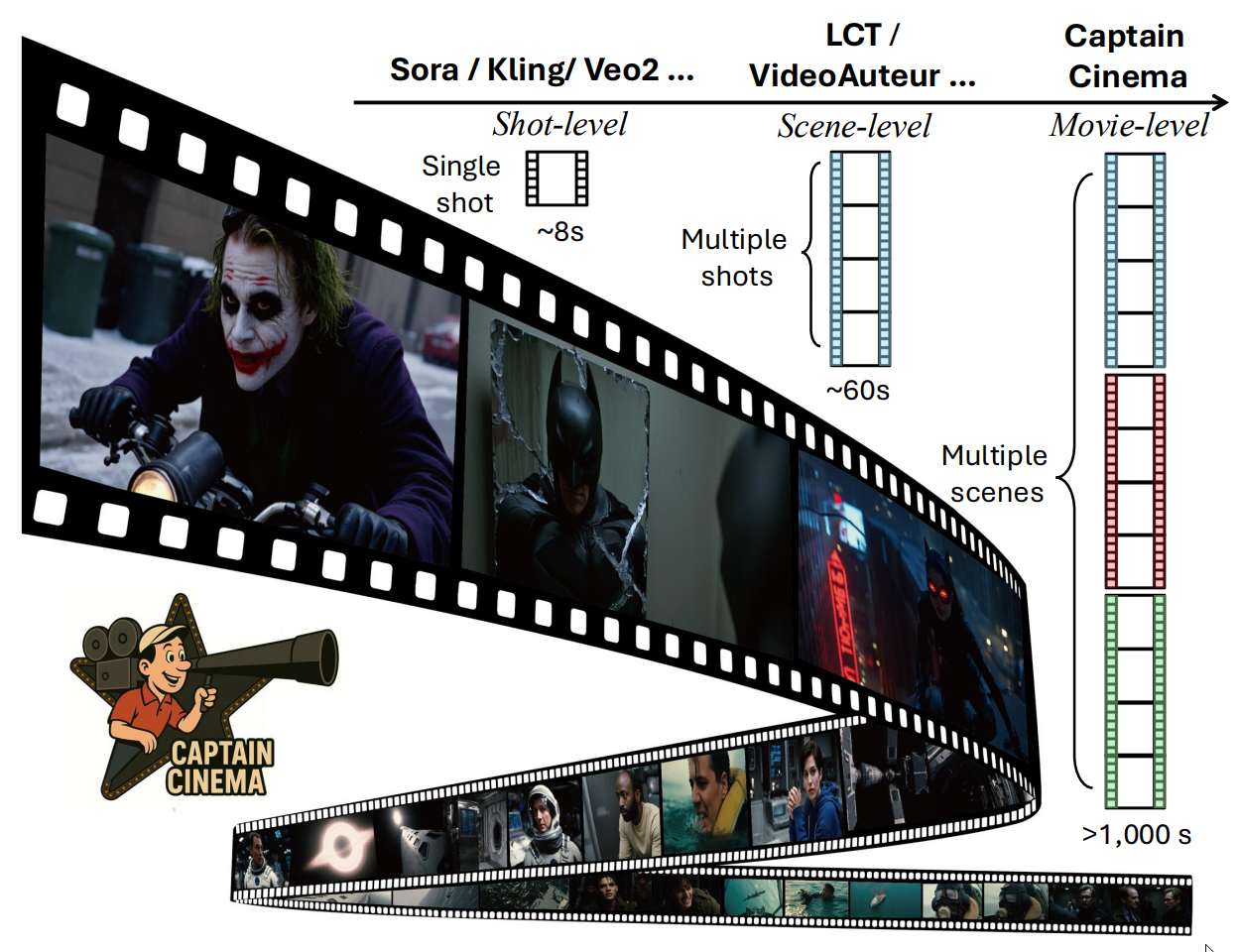

Captain Cinema is an AI framework for seamless, story-driven video creation that aims to overcome the barriers that have limited automated filmmaking. Video generation models have captured headlines even though they generate only 8 seconds at a time; Captain Cinema looks to extend the current state of the art toward full-length video generation. This could reshape the entertainment industry by giving studios and individuals practical tools for long-form content generation.

Key Takeaways

- Two-Module Framework: It operates through a unique two-stage process: top-down keyframe planning to outline the narrative and visual appearance, followed by bottom-up video synthesis to generate spatio-temporal dynamics between these keyframes.

- Long-Range Coherence: The framework explicitly addresses the challenge of maintaining long-range coherence in both storyline and visual appearance (e.g., scenes and characters) over multiple scenes and extended durations, a common failing of previous approaches.

- GoldenMem Context Compression: A novel memory mechanism called GoldenMem is introduced to selectively retain and compress contextual visual information from past keyframes. It uses an inverse Fibonacci downsampling strategy based on the golden ratio (φ ≈ 1.618) to maintain a fixed, small memory overhead for a multi-frame history, thereby avoiding issues like "exploding context lengths".

- Semantic-Oriented Context Conditioning: Instead of strict temporal ordering, Captain Cinema retrieves context based on semantic similarity using CLIP (text–image) and T5 (text–text) embeddings, allowing it to better accommodate complex narrative structures such as flashbacks or temporal loops.

- Progressive Finetuning: To ensure stable and efficient training, the model uses a progressive training strategy where it is warmed up with single image generation and then gradually finetuned with growing context lengths of interleaved pairs.

- Dynamic Stride Sampling: This strategy mitigates overfitting on limited movie datasets by dynamically offsetting the sampling stride across epochs, significantly increasing the variety of valid training sequences.

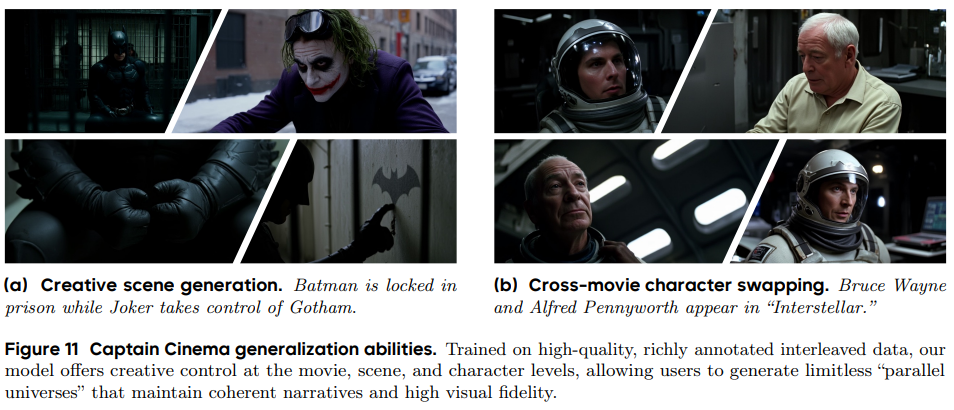

- Generalization Abilities: The model generalizes well. It can generate creative scenes (synthesizing novel scenes by recombining elements) and swap characters across movies, allowing exploration of "parallel universes" while maintaining visual fidelity and narrative plausibility.

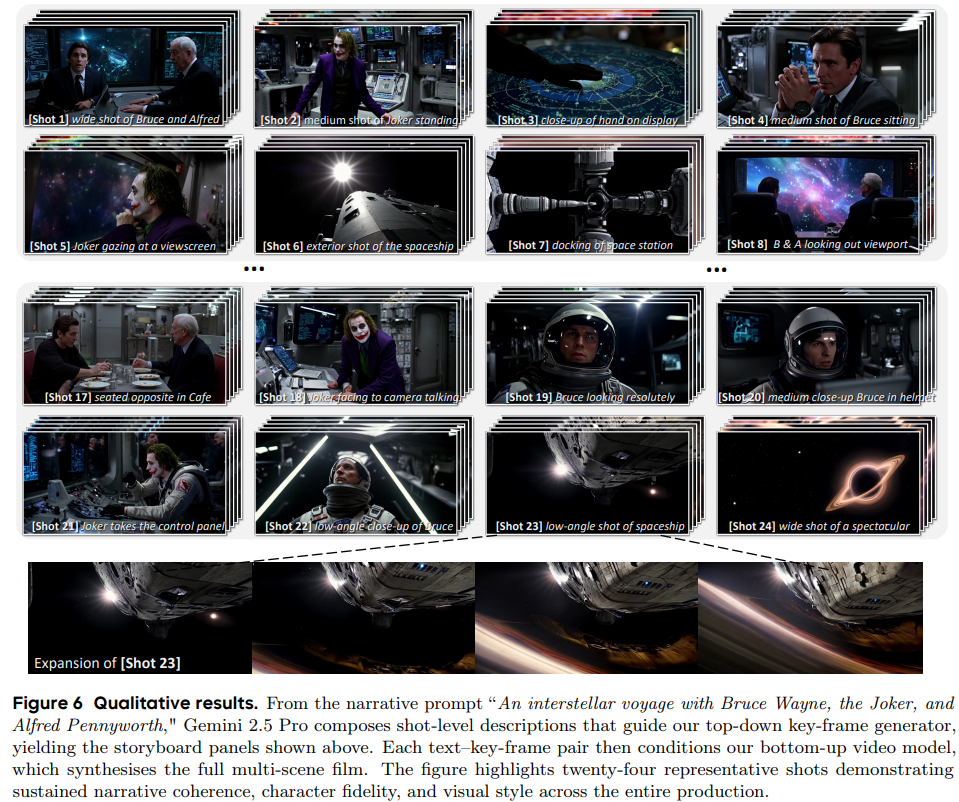

Figure 1. Captain Cinema: “I can film this all day!” Captain Cinema bridges top-down interleaved keyframe planning with bottom-up interleaved-conditioning video generation, taking a step toward the first multi-scene, whole-movie generation, preserving high visual consistency in scenes and identities. All the movie frames here are generated. Credit: Paper

How Captain Cinema Mirrors Real Movie-Making

The secret to Captain Cinema’s success is its two-module framework, inspired by traditional filmmaking. First, the top-down keyframe planning module uses textual descriptions to sketch out the story as a sequence of keyframes, much like a digital storyboard. Next, the bottom-up video synthesis module animates the motion between these keyframes, ensuring smooth transitions and maintaining both visual and narrative consistency throughout extended scenes.
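The data flow between the two modules can be sketched in a few lines. This is only an illustration of the storyboard-then-animate structure described above: the names `Keyframe`, `Clip`, `plan_keyframes`, and `synthesize_clips` are hypothetical, the model calls are replaced by placeholders, and pairing consecutive keyframes is an assumption about how "between these keyframes" might be realized.

```python
from dataclasses import dataclass


@dataclass
class Keyframe:
    index: int
    caption: str  # text description the planner would condition on


@dataclass
class Clip:
    start: Keyframe  # keyframe the clip begins on
    end: Keyframe    # keyframe the clip ends on


def plan_keyframes(captions: list[str]) -> list[Keyframe]:
    """Top-down stage (placeholder): one keyframe per scene description."""
    return [Keyframe(i, text) for i, text in enumerate(captions)]


def synthesize_clips(keyframes: list[Keyframe]) -> list[Clip]:
    """Bottom-up stage (placeholder): one clip per consecutive keyframe pair."""
    return [Clip(a, b) for a, b in zip(keyframes, keyframes[1:])]


storyboard = plan_keyframes([
    "A pilot studies a map at dawn",
    "The plane lifts off over the coast",
    "Storm clouds gather ahead",
])
clips = synthesize_clips(storyboard)
```

In this sketch, three planned keyframes yield two clips, one for each gap in the storyboard.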

Addressing the Challenge of Long-Range Coherence

Maintaining coherent visuals and stories over time has stumped previous AI video models. Captain Cinema introduces GoldenMem, a memory compression strategy influenced by the golden ratio.
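The golden-ratio idea can be made concrete with a small sketch of the inverse Fibonacci downsampling mentioned in the key takeaways. The assumptions here are ours, not the paper's: frames are square token grids, the newest frame has a side length that is a Fibonacci number, and each older frame steps one Fibonacci number down. The function names `fib_sides` and `context_tokens` are hypothetical.

```python
def fib_sides(base_side: int, num_frames: int) -> list[int]:
    """Side lengths (in tokens) for frames in memory, newest first.

    Assumption: base_side is a Fibonacci number, and each older frame uses
    the next smaller Fibonacci number, so the side shrinks by roughly 1/phi.
    """
    fibs = [1, 2]
    while fibs[-1] < base_side:
        fibs.append(fibs[-1] + fibs[-2])
    if base_side not in fibs:
        raise ValueError("base_side must be a Fibonacci number in this sketch")
    top = fibs.index(base_side)
    # Walk the sequence backwards; clamp at a 1x1 grid for very old frames.
    return [fibs[max(top - age, 0)] for age in range(num_frames)]


def context_tokens(sides: list[int]) -> int:
    """Total token count when each frame is a square grid of side s."""
    return sum(s * s for s in sides)


sides = fib_sides(base_side=34, num_frames=8)
compressed = context_tokens(sides)
uncompressed = context_tokens([34] * 8)
print(sides, compressed, uncompressed)
```

Under these assumptions, an eight-frame history costs 1869 tokens instead of 9248, which is about 1.62 times the cost of the newest frame alone. That ratio approaching φ is why the overhead stays small even as the history grows.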

GoldenMem uses inverse Fibonacci downsampling to compress older keyframes, so the visual history fits in a small, fixed memory budget. Separately, the model chooses which prior context to condition on by semantic similarity, using CLIP (text–image) and T5 (text–text) embeddings, rather than by strict temporal order. Together, these preserve context efficiently and accommodate creative devices like flashbacks and non-linear storytelling, while sidestepping the computational cost of an ever-growing context.
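Retrieving context by meaning rather than by recency can be illustrated with a plain cosine-similarity ranking. This is a minimal sketch, not the paper's retrieval code: the three-dimensional vectors stand in for real CLIP or T5 embeddings, and `cosine` and `retrieve_context` are hypothetical helper names.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve_context(query: list[float],
                     memory: list[tuple[str, list[float]]],
                     k: int = 2) -> list[str]:
    """Return the ids of the k stored scenes most similar to the query."""
    ranked = sorted(memory, key=lambda item: cosine(query, item[1]), reverse=True)
    return [scene_id for scene_id, _ in ranked[:k]]


# Toy embeddings (made up for illustration), stored in temporal order.
memory = [
    ("scene_01_childhood", [0.9, 0.1, 0.0]),
    ("scene_02_office", [0.0, 1.0, 0.0]),
    ("scene_03_chase", [0.1, 0.2, 0.95]),
]
flashback_query = [1.0, 0.0, 0.1]  # a new scene that revisits the childhood setting
selected = retrieve_context(flashback_query, memory, k=2)
```

Here the flashback query pulls in the childhood scene first even though it is the oldest entry, which is the behavior a purely temporal window would miss.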

Smart Training for Better Movies

Captain Cinema’s progressive finetuning approach starts simple, with single-image generation, and then ramps up to progressively longer interleaved contexts, which keeps training stable. Dynamic stride sampling further diversifies the training sequences, reducing overfitting and improving the model’s ability to generalize.
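One way to picture dynamic stride sampling is as a sampling grid that shifts a little each epoch. The sketch below assumes that "offsetting the stride" means moving the grid's starting frame by `epoch % stride`; the exact scheme used by the authors may differ, and `training_sequences` is a hypothetical name.

```python
def training_sequences(num_frames: int, stride: int,
                       seq_len: int, epoch: int) -> list[tuple[int, ...]]:
    """Frame-index sequences for one epoch.

    Frames are sampled every `stride` frames, starting at an offset that
    changes with the epoch, then grouped into non-overlapping sequences of
    `seq_len` sampled frames.
    """
    offset = epoch % stride
    grid = list(range(offset, num_frames, stride))
    return [tuple(grid[i:i + seq_len])
            for i in range(0, len(grid) - seq_len + 1, seq_len)]


# Compare a fixed grid with a grid that shifts over four epochs.
fixed = set(training_sequences(20, stride=4, seq_len=2, epoch=0))
shifted = set()
for epoch in range(4):
    shifted.update(training_sequences(20, stride=4, seq_len=2, epoch=epoch))
print(len(fixed), len(shifted))
```

In this toy setting, a fixed grid yields the same 2 sequences every epoch, while the shifting grid exposes 8 distinct sequences from the same 20 frames.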

The outcome: Captain Cinema can invent entirely new scenes or swap characters between stories without sacrificing coherence or visual quality.

Technical Achievements and Data

The top-down planner relies on a finetuned text-to-image model and hybrid attention masking for fast, coherent keyframe creation. GoldenMem compresses older keyframes rather than storing the full history at high resolution, which keeps memory overhead small.

The Seaweed-3B-powered bottom-up synthesis module stitches these frames into fluid videos, respecting scene transitions and boundaries. Training draws on a dataset of about 500 hours of annotated movie footage, rich in scene and character detail, which teaches the model narrative structure.

Why This Breakthrough Matters

Captain Cinema isn’t just a technical achievement; it’s a democratizing tool for creators. Previous video synthesis focused on short, visually believable clips, but Captain Cinema enables high-quality, long-form storytelling even for those with limited resources.

Beyond movies, this technology could transform education, simulation for robotics, and accessibility. The researchers stress ethics, supporting responsible use through watermarking and controlled access to the model.

Results: Setting a New Benchmark

Benchmarks and user studies show that Captain Cinema outperforms leading alternatives in temporal dynamics, narrative consistency, and visual coherence. Evaluations using VBench-2.0 and the LCT protocols confirm its ability to maintain character and story fidelity even as scenes grow complex, thanks to GoldenMem. Ablation and generalization studies highlight the model’s efficiency, robustness, and creative flexibility, including seamless scene blending and character swaps.

Takeaway

With its unique blend of narrative planning and visual synthesis, plus innovations such as GoldenMem and progressive training, Captain Cinema raises the bar for AI-driven video creation. It successfully balances big-picture storytelling with detailed visuals, marking a major step toward fully automated, cinematic AI storytelling.

Captain Cinema: Narrative AI is Revolutionizing Story-Driven Movie Generation

Captain Cinema: Towards Short Movie Generation