For decades, neuroscientists have believed that memories reside primarily in the connections between neurons, specifically in the synaptic weights that determine how strongly one neuron influences another.

This foundational assumption has shaped our understanding of learning, memory, and even artificial intelligence architectures. However, groundbreaking research published in Proceedings of the National Academy of Sciences by researchers from MIT and IBM Research challenges this conventional wisdom with a revolutionary proposal: astrocytes, the brain's most abundant support cells, may serve as sophisticated memory storage and processing units that dramatically enhance the brain's computational capabilities.

The authors demonstrate that when astrocytes are incorporated into neural network models, the resulting system can store far more memories than traditional neuron-only networks. More remarkably, their theoretical framework reveals a direct connection between neuron-astrocyte networks and cutting-edge machine learning architectures, including Dense Associative Memories and Transformer models.

The implications extend far beyond basic neuroscience, potentially inspiring new AI architectures and offering insights into neurological disorders where astrocyte function is compromised.

NOTE: The math behind this research is extensive, and I avoided discussing it in detail in this article because it is simply too time-consuming to mark up in HTML. I suggest reading the paper if you are interested in how the authors define the mathematical relationships of astrocyte behavior.

Key Takeaways

- Astrocytes may store memories within their internal calcium transport networks, not just in synaptic connections between neurons

- Neuron-astrocyte networks can achieve superior memory scaling: the number of stored memories per computational unit grows linearly with network size, outperforming known biological implementations

- The mathematical framework unifies diverse architectures: by adjusting connectivity patterns, the model can operate as either a Dense Associative Memory or a Transformer

- Each astrocyte can form over one million tripartite synapses, creating a vast computational substrate previously underappreciated in memory theories

- The research provides testable predictions: disrupting calcium diffusion within astrocytes should significantly impair memory recall

- Human astrocytes are significantly larger and more active than those in rodents, suggesting enhanced computational capabilities in human brains

Astrocytes: From Support Cast to Leading Role

Astrocytes have long been relegated to supporting roles in neuroscience, such as maintaining brain homeostasis, regulating blood flow, and cleaning up cellular debris. This perspective began changing in recent years as evidence accumulated showing that astrocytes actively participate in synaptic transmission and plasticity.

The cells form what researchers call "tripartite synapses": three-part connections involving a presynaptic neuron, a postsynaptic neuron, and an astrocytic process that wraps around the synaptic cleft. These tripartite synapses are ubiquitous throughout the brain.

In the hippocampus, a region critical for memory formation, nearly every synapse is contacted by an astrocyte. A single astrocyte can form over one million such connections, creating an enormous network of cellular processes that detect neurotransmitters, respond with calcium signaling, and release gliotransmitters that modulate synaptic strength. Despite this extensive connectivity, no comprehensive theory had explained how neurons, synapses, and astrocytes might work together as a unified memory system.

The authors address this gap by developing a mathematical framework grounded in the biology of astrocyte calcium signaling. When neurotransmitters are released at a synapse, astrocytic processes detect these chemical signals and respond with increases in intracellular calcium concentration.

This calcium can then diffuse through the astrocyte's internal network, potentially carrying information between distant synapses. The researchers propose that this calcium transport network, described by a mathematical tensor, serves as a novel substrate for memory storage.

Dense Associative Memory Meets Biology

To understand the computational implications of neuron-astrocyte interactions, the authors turned to energy-based associative memory models. These mathematical frameworks, which include the famous Hopfield networks, store memories as stable attractor states in a dynamical system.

When the network receives a partial or corrupted version of a stored memory, the dynamics flow toward the complete correct pattern, much like how we might recall a full song from hearing just a few notes.
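The recall-by-attraction idea can be sketched with a classical pairwise Hopfield network. This is a minimal illustration and not the authors' neuron-astrocyte model: the network size, the number of patterns, the random seed, and the helper names (`hebbian_weights`, `energy`, `recall`) are all assumptions chosen for the example.

```python
import numpy as np

def hebbian_weights(patterns):
    """Pairwise Hebbian weights: W_ij = sum over memories of xi_i * xi_j, zero diagonal."""
    weights = patterns.T @ patterns
    np.fill_diagonal(weights, 0)
    return weights

def energy(weights, state):
    """Classical Hopfield energy E = -1/2 * s^T W s."""
    return -0.5 * float(state @ weights @ state)

def recall(weights, state, sweeps=5):
    """Asynchronous updates: each neuron aligns with the sign of its input."""
    state = state.copy()
    for _ in range(sweeps):
        for i in range(state.size):
            state[i] = 1 if weights[i] @ state >= 0 else -1
    return state

rng = np.random.default_rng(0)
patterns = rng.choice([-1, 1], size=(3, 100))   # 3 memories, 100 neurons (illustrative)
weights = hebbian_weights(patterns)

cue = patterns[0].copy()
cue[:10] *= -1                                  # corrupt 10 of the 100 entries
restored = recall(weights, cue)

print("recovered stored pattern:", np.array_equal(restored, patterns[0]))
print("energy of cue vs. restored state:", energy(weights, cue), energy(weights, restored))
```

Because the weights are symmetric with a zero diagonal, each asynchronous update can only keep the energy the same or lower it, which is why the state settles into an attractor rather than wandering.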

The research reveals that neuron-astrocyte networks naturally implement a sophisticated variant called Dense Associative Memory. Unlike traditional Hopfield networks, which involve only pairwise interactions between neurons, Dense Associative Memories include higher-order interactions (Krotov, 2016). In this case, they are four-way interactions mediated by astrocytic processes.

This mathematical structure dramatically increases memory capacity. In traditional biological neural network models, the number of memories stored per computational unit stays roughly constant as the network grows. Neuron-astrocyte networks instead achieve linear scaling: as the network grows, the number of memories per computational unit increases proportionally.

The key insight lies in how astrocytes transform local synaptic interactions into global computational operations. Through their extensive calcium transport networks, astrocytes effectively bring information about distant neural activities to each tripartite synapse.

This creates "effective four-neuron synapses" that can connect neurons potentially very far apart in the brain. The result is a many-body interaction that enables superior memory storage and retrieval capabilities.
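A four-body interaction of this kind can be sketched with a generic Dense Associative Memory whose energy is quartic in the neural state. The code below is an illustration under stated assumptions, not the paper's neuron-astrocyte dynamics: it uses the Krotov-Hopfield style energy E = -Σ_μ (ξ^μ · σ)^4, a Hebbian four-index tensor T_ijkl = Σ_μ ξ_i ξ_j ξ_k ξ_l built only on a small slice to keep it cheap, and hypothetical names and sizes (`dam_energy`, `dam_recall`, 64 neurons, 30 memories).

```python
import numpy as np

def dam_energy(patterns, state, power=4):
    """Dense Associative Memory energy: E = -sum over memories of (xi . s)^power."""
    return -int(np.sum((patterns @ state) ** power))

def dam_recall(patterns, state, power=4, sweeps=3):
    """Asynchronous updates: each neuron takes the sign that gives the lower energy."""
    state = state.copy()
    for _ in range(sweeps):
        for i in range(state.size):
            # Overlap of every memory with the state, excluding neuron i itself.
            rest = patterns @ state - patterns[:, i] * state[i]
            up = np.sum((rest + patterns[:, i]) ** power)    # neuron i set to +1
            down = np.sum((rest - patterns[:, i]) ** power)  # neuron i set to -1
            state[i] = 1 if up >= down else -1
    return state

rng = np.random.default_rng(0)
n_neurons, n_memories = 64, 30                  # illustrative sizes
patterns = rng.choice([-1, 1], size=(n_memories, n_neurons))

# Explicit four-index Hebbian tensor on the first 8 neurons only (8^4 entries).
small = patterns[:, :8]
T = np.einsum("mi,mj,mk,ml->ijkl", small, small, small, small)
s = small[0]
four_body = -int(np.einsum("ijkl,i,j,k,l->", T, s, s, s, s))
print("four-body sum equals quartic energy:", four_body == dam_energy(small, s))

cue = patterns[0].copy()
cue[:8] *= -1                                   # corrupt 8 of the 64 entries
restored = dam_recall(patterns, cue)
print("recovered stored pattern:", np.array_equal(restored, patterns[0]))
```

For comparison, the classical pairwise Hopfield rule is usually quoted as storing roughly 0.14 memories per neuron, so 30 random memories in 64 neurons is well beyond what it typically handles. The quartic energy separates the memories much more sharply, which is the capacity gain the higher-order interaction buys.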

Bridging Neuroscience and Artificial Intelligence

Perhaps most intriguingly, the mathematical framework reveals deep connections between neuron-astrocyte networks and state-of-the-art AI architectures. By adjusting the connectivity tensor that describes calcium transport between astrocytic processes, the model can smoothly transition between operating as a Dense Associative Memory and functioning like a Transformer, the architecture underlying large language models like GPT.

When the connectivity tensor is set to store specific memory patterns using a Hebbian-like learning rule, the network operates as a Dense Associative Memory capable of storing and retrieving complex patterns (Osorio, 2022).

However, when the tensor is simplified (setting all entries to a constant value), the equilibrium states of the network approximate the output of a Transformer's self-attention mechanism. This mathematical relationship suggests that the brain might implement computational principles similar to those driving modern AI systems, but using biological hardware that achieves superior efficiency and scaling properties.

The connection to Transformers is particularly fascinating given the recent success of these models in artificial intelligence. Transformers excel at processing sequential information and capturing long-range dependencies, capabilities that are also crucial for biological memory systems. The fact that neuron-astrocyte networks can implement both Dense Associative Memory and Transformer-like computations suggests a unified framework for understanding diverse cognitive functions.
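For readers unfamiliar with the self-attention operation mentioned above, here is a minimal single-head sketch in NumPy. It shows only the standard scaled dot-product attention used in Transformers, not the neuron-astrocyte equations that approximate it. The token count, the dimensions, and the randomly drawn projection matrices (`w_q`, `w_k`, `w_v`) are assumptions for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention."""
    queries, keys, values = tokens @ w_q, tokens @ w_k, tokens @ w_v
    scores = queries @ keys.T / np.sqrt(keys.shape[-1])
    attn = softmax(scores, axis=-1)   # each row is a distribution over tokens
    return attn @ values, attn

rng = np.random.default_rng(0)
tokens = rng.normal(size=(5, 8))      # 5 tokens with 8 features each (illustrative)
w_q, w_k, w_v = (rng.normal(size=(8, 4)) for _ in range(3))

output, attn = self_attention(tokens, w_q, w_k, w_v)
print(output.shape, attn.sum(axis=1))
```

Each output row is a weighted average of the value vectors, with weights set by how strongly that token's query matches every key. This all-to-all mixing is the long-range dependency capture referred to in the text.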

Experimental Predictions and Validation

The theoretical framework makes several testable predictions that could validate the astrocyte memory hypothesis. Most directly, the model predicts that selectively disrupting calcium diffusion within astrocytes should significantly impair memory recall while leaving memory formation potentially intact. This could be tested using pharmacological agents that block astrocytic calcium channels or genetic modifications that alter calcium transport proteins.

The authors demonstrate their model's capabilities through computational experiments. Using energy-based dynamics with Hebbian-like learning rules, they successfully stored and retrieved 25 different patterns from the CIFAR-10 dataset.

The network consistently converged to correct memory attractors, with the energy function decreasing monotonically along trajectories, confirming the theoretical predictions.

Additionally, they trained a more flexible version using backpropagation on a self-supervised task, showing that the network could perform error correction on masked images from the Tiny ImageNet dataset.

These experiments reveal that the network doesn't require perfect symmetry to function effectively. While the energy-based formulation assumes symmetric connectivity patterns for mathematical tractability, the backpropagation experiments demonstrate that the core principles work even when these symmetries are relaxed. This flexibility is crucial for biological plausibility, as real neural circuits exhibit various asymmetries and noise.

Implications for Understanding Brain Disorders

The astrocyte memory hypothesis has profound implications for understanding neurological and psychiatric disorders. Many brain conditions, including Alzheimer's disease, epilepsy, and depression, involve astrocyte dysfunction. If astrocytes indeed serve as critical memory storage components, then understanding and treating these disorders may require focusing as much on glial cells as on neurons.

For example, in Alzheimer's disease, astrocytes show altered calcium signaling and reduced ability to support synaptic function. The current framework suggests that these changes might directly impair the brain's memory storage capacity, not just through effects on synaptic transmission but through disruption of the astrocytic memory substrate itself. This perspective could inspire new therapeutic approaches targeting astrocyte calcium signaling rather than solely focusing on neuronal pathology.

The research also highlights the evolutionary significance of astrocyte complexity. Human astrocytes are significantly larger and more active than their rodent counterparts, suggesting enhanced computational capabilities that may contribute to our species' superior cognitive abilities. Understanding how astrocyte networks scale and organize could provide insights into the evolutionary origins of human intelligence and consciousness.

Computational Architecture of Memory

The mathematical description reveals the elegant computational architecture underlying the proposed memory system. The model describes three interconnected dynamical systems: neural membrane voltages, synaptic strengths, and astrocytic calcium concentrations. Each component operates on potentially different timescales, with neurons typically fastest, followed by astrocytes, and then synaptic plasticity.

The neural dynamics follow a standard firing rate model, where each neuron's activity depends on weighted inputs from other neurons. However, these synaptic weights are not static: they depend dynamically on both pre- and postsynaptic neural activity and on the local astrocytic calcium concentration. This creates a three-way interaction that goes beyond traditional models of synaptic plasticity.
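For orientation, a standard firing rate model is commonly written in a form like the one below. The notation is generic textbook notation chosen for illustration and may differ from the paper's: $x_i$ is the state of neuron $i$, $\tau$ a time constant, $\phi$ a firing-rate nonlinearity, $w_{ij}$ the synaptic weight from neuron $j$ to neuron $i$, and $b_i$ an external input.

```latex
\tau \frac{dx_i}{dt} = -x_i + \sum_{j} w_{ij}\,\phi(x_j) + b_i
```

In the model described here, the weights $w_{ij}$ would not be fixed parameters but quantities that themselves evolve with neural activity and with the calcium level of the astrocytic process at that synapse.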

Astrocytic processes maintain their own dynamics, with calcium concentrations influenced by synaptic activity through specific coupling functions and by calcium diffusion from other processes within the same astrocyte. The diffusion pattern, encoded in the connectivity tensor, determines how information flows through the astrocytic network and ultimately how memories are stored and retrieved.

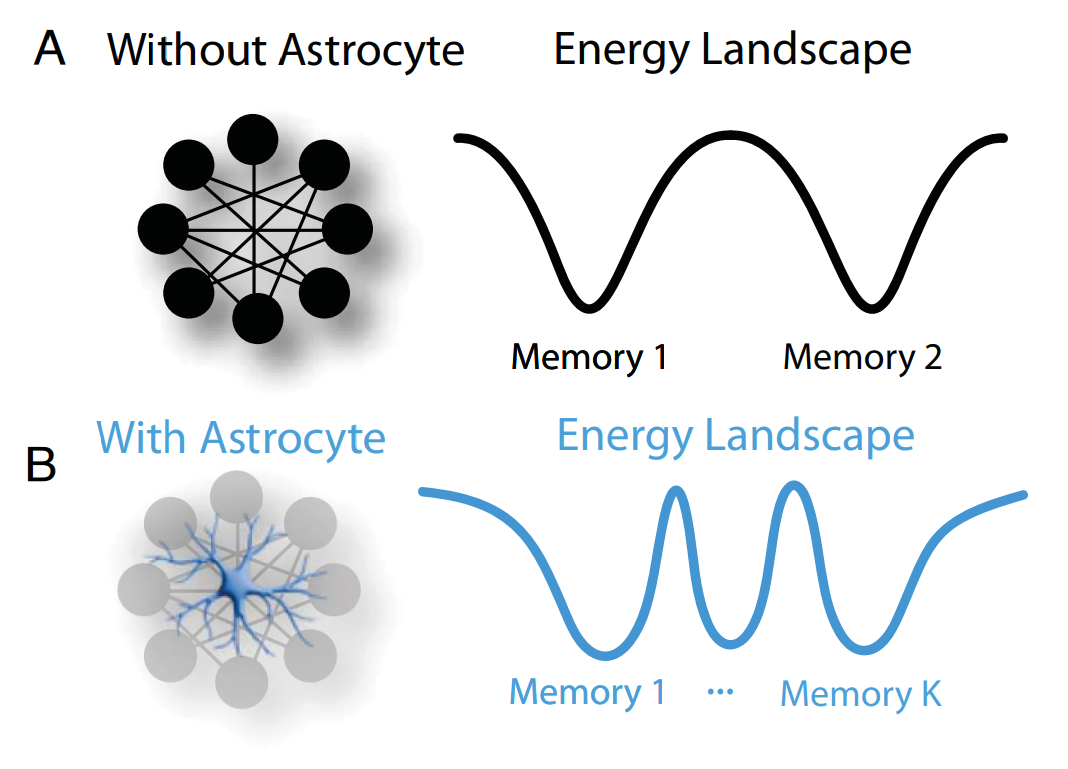

Fig. 2. For a fixed number of neurons, the neuron–astrocyte network is capable of storing many more memories than the neuron-only network. (A) The energy landscape of a neuron-only associative network. (B) The energy landscape of a neuron–astrocyte associative network. The memories are more densely packed into the state-space, thereby enabling superior memory storage and retrieval capabilities. Credit: Krotov et al.

When analyzed using energy function methods, this three-component system exhibits guaranteed convergence to stable attractor states under appropriate conditions. The mathematical framework ensures that starting from any initial condition, the network dynamics will flow toward one of the stored memory patterns, implementing robust associative recall.

The Mathematics of Astrocyte Memory

At the heart of the authors' proposal is a set of coupled dynamical equations that describe the interactions between neurons, synapses, and astrocytic processes. These equations provide a formal language for understanding how astrocytes contribute to memory storage and retrieval. The model begins by defining the dynamics for each component.

The activity of each neuron, represented by its membrane voltage

Here,

The dynamics of the astrocytic processes are central to the theory. The state of an astrocytic process

In this equation, the term that stands out is the tensor

The key insight of the paper is that this complex system can be understood through an energy function (a Lyapunov function), which guarantees that the network dynamics will always settle into stable fixed-point attractors, which represent stored memories. By analyzing the system in a regime where astrocyte and synaptic dynamics are fast compared to neural dynamics, the authors derive an "effective" energy function that governs the neurons:

This equation reveals the computational consequence of the astrocyte's involvement. The second term shows a four-body interaction between neurons (

So, how are memories stored? The authors propose a Hebbian-like learning rule where the memory patterns

This equation is the core of the memory storage mechanism. It suggests that learning occurs by modifying the internal communication pathways within a single astrocyte. Each memory

Future Directions and Open Questions

While the theoretical framework is compelling, many questions remain about how astrocyte memory storage might work in practice. The model assumes extensive communication between astrocytic processes, but the extent of such connectivity in real brains remains unclear.

Future experiments using advanced imaging techniques could map the functional connectivity of astrocytic networks and determine whether the required communication pathways exist.

The research also opens exciting possibilities for bio-inspired artificial intelligence. If astrocytes do indeed implement superior memory architectures, then incorporating astrocyte-like components into artificial neural networks could lead to more efficient and capable AI systems. The ability to smoothly interpolate between Dense Associative Memory and Transformer operations could enable novel architectures that combine the strengths of both approaches.

Another intriguing direction involves understanding how astrocyte memory networks might interact with other brain circuits. The current model focuses on a single astrocyte, but real brains contain millions of these cells connected through gap junctions and other communication mechanisms. Understanding how these larger networks organize and process information could reveal principles of brain-wide computation that go far beyond current theories.

The temporal dynamics of astrocyte signaling also warrant further investigation. While the model can accommodate various timescales, the actual temporal characteristics of calcium diffusion and memory storage remain poorly understood. Advanced experimental techniques that can monitor astrocyte activity with high spatial and temporal resolution will be crucial for validating and refining the theoretical predictions.

Revolutionizing Memory Research

This research represents a fundamental shift in how we think about memory storage in the brain. Rather than viewing memories as residing solely in synaptic connections between neurons, the astrocyte hypothesis suggests a distributed storage system that leverages the vast computational resources of glial networks. This perspective could revolutionize our approach to understanding learning, memory, and cognition.

The mathematical framework provides a unifying lens for understanding diverse neural phenomena, from basic associative memory to complex language processing. By showing how biological circuits can implement computations similar to state-of-the-art AI architectures, the research bridges neuroscience and artificial intelligence in unprecedented ways.

Moreover, the focus on astrocytes highlights the importance of studying all brain cell types, not just neurons. Half of all brain cells are glial, yet they have received relatively little attention in computational theories of brain function. This research suggests that ignoring glial contributions may have caused us to fundamentally misunderstand how the brain works.

The implications extend beyond basic science to practical applications in medicine and technology. Understanding astrocyte memory networks could lead to new treatments for neurological disorders, novel approaches to artificial intelligence, and deeper insights into the nature of intelligence itself. As the authors note, astrocytes may offer "a fresh source of inspiration for building state-of-the-art AI systems."

The path forward will require close collaboration between theorists, experimentalists, and technologists. Testing the astrocyte memory hypothesis will demand new experimental techniques, refined mathematical models, and innovative approaches to measuring and manipulating glial function. But if the predictions prove correct, we may be on the verge of a new era in understanding how the brain achieves its remarkable computational capabilities—an era where astrocytes take center stage as the hidden powerhouses of memory and cognition.

Definitions

Astrocytes: Star-shaped glial cells that are the most abundant cell type in the brain, traditionally thought to provide structural and metabolic support to neurons but increasingly recognized as active participants in neural computation.

Dense Associative Memory: A class of neural network models that extend traditional Hopfield networks by incorporating higher-order interactions, leading to superior memory storage capacity and pattern recognition capabilities.

Tripartite synapse: A functional unit consisting of a presynaptic neuron, a postsynaptic neuron, and surrounding astrocytic processes that can detect neurotransmitters and release gliotransmitters to modulate synaptic transmission.

Gliotransmitters: Chemical signaling molecules released by glial cells, including astrocytes, that can influence neuronal activity and synaptic transmission.

Energy-based model: A mathematical framework where network dynamics are governed by minimization of an energy function, ensuring convergence to stable attractor states that represent stored memories.

Calcium signaling: A form of cellular communication where changes in intracellular calcium concentration serve as signals that can trigger various cellular responses and enable communication between different parts of a cell.

Transformer architecture: A neural network architecture widely used in artificial intelligence, particularly for language processing, that relies on self-attention mechanisms to capture long-range dependencies in sequential data.

Astrocytes as Memory Powerhouses: Rethinking How the Brain Stores Information

Neuron–astrocyte associative memory