In a remarkable demonstration of artificial intelligence's advancing capabilities, researchers from Princeton University and collaborating institutions have developed Physics Supernova, an AI agent system that achieved gold-medalist-level performance on the International Physics Olympiad (IPhO) 2025 theoretical examination.

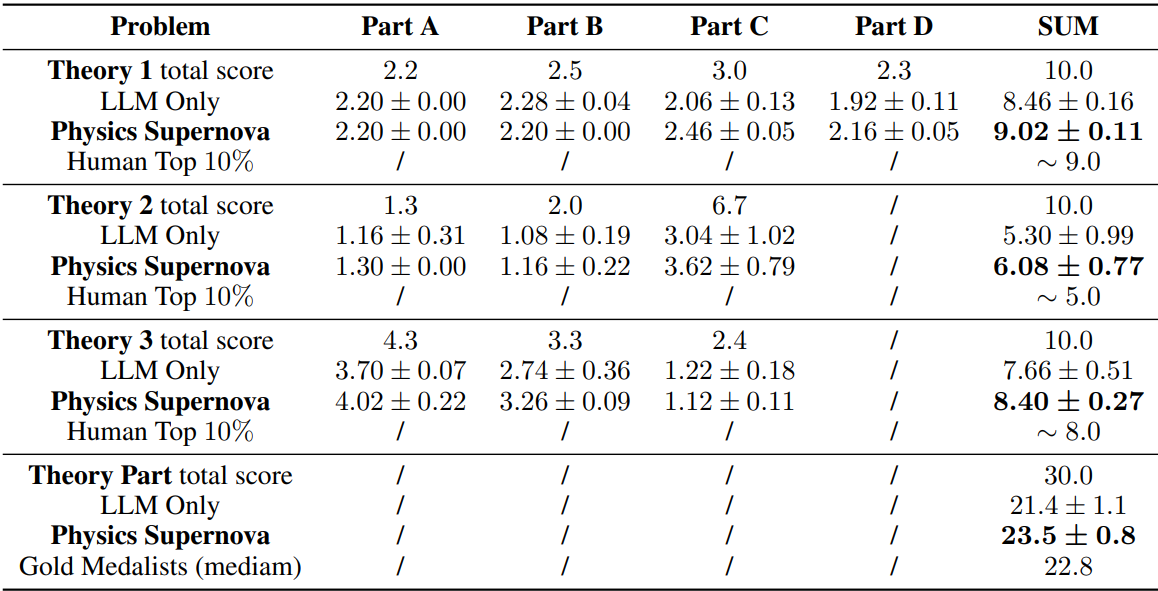

The system averaged 23.5 out of 30 points across five independent runs, a score that would place it 14th among 406 human contestants worldwide and above the median theory score of human gold medalists (22.8 points).

This achievement represents a significant milestone in AI's journey toward mastering complex scientific reasoning. Unlike previous benchmarks that focus on final answers, IPhO 2025 employs fine-grained, part-level scoring that evaluates reasoning steps, problem-solving methodology, and the ability to extract precise measurements from figures.

The competition's emphasis on novel physics models, multi-step derivations, and visual interpretation makes it an exceptionally rigorous test of scientific competence. Published in a recent arXiv preprint (Qiu et al., 2025), the research demonstrates how tool-augmented agent systems can push past the limitations of standalone large language models (LLMs) in scientific problem-solving.

The work builds on the CodeAgent architecture from the smolagents framework, enhanced with physics-specific tools that enable precise figure analysis and self-correction mechanisms.

Key Takeaways

- Physics Supernova achieved a mean theory score of 23.5 ± 0.8 points out of 30, ranking 14th among 406 contestants and exceeding the gold medalist median (22.8 points)

- The system demonstrated top-10% performance on all three IPhO 2025 theory problems, with its largest margin over the human top-10% score on Theory Problem 2, the most difficult of the three

- Two specialized tools (ImageAnalyzer for precise figure measurements and AnswerReviewer for consistency checks) provided measurable performance gains over base LLM approaches

- Ablation studies confirmed that both tools contribute to the final score, with ImageAnalyzer reducing the mean measurement error from 0.015 MHz to 0.004 MHz on a figure-reading task in Theory Problem 1

- The architecture demonstrates how domain-specific tools can enhance general-purpose LLMs without requiring specialized pretraining

Understanding the IPhO Challenge

The International Physics Olympiad stands as one of the world's most prestigious academic competitions, attracting the brightest high school physics students from over 90 countries. The 2025 competition, held in France, featured three theory problems spanning modern physics, thermodynamics, and dynamics, each worth 10 points. These problems are designed to test not just knowledge retention, but deep conceptual understanding, mathematical modeling, and the ability to apply physical principles to novel scenarios.

What makes IPhO particularly challenging for AI systems is its emphasis on visual interpretation and measurement. Theory Problem 1, for example, required contestants to accurately read galactic rotation curves and mass distribution plots, demanding precision measurements that could make or break a solution.

Theory Problem 2, the most difficult of the three, involved complex thermodynamic calculations with Cox's timepiece mechanism, a novel physics model that challenged even experienced contestants. The official results show human top-10% scores of 9.0, 5.0, and 8.0 points for the three problems respectively, highlighting the steep difficulty gradient.

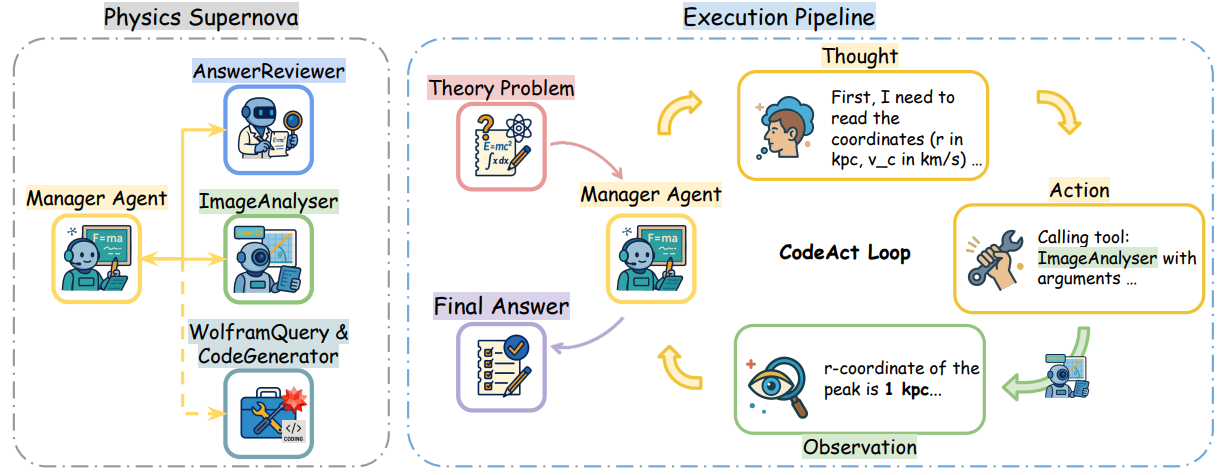

Figure 1: Our proposed agent system: Physics Supernova, for solving theory problems in physics. Credit: Qiu et al.

The Physics Supernova Architecture

Physics Supernova adopts a flexible, tool-augmented approach that contrasts sharply with rigid, hard-coded workflows common in mathematical problem-solving systems. Built on the CodeAgent pattern from the smolagents framework, the system consists of a Manager Agent that orchestrates calls to specialized tools through iterative Reason-Act (ReAct-like) loops. This design philosophy of "minimum pre-definition and maximal autonomy" allows the agent to adapt its approach based on the specific demands of each physics subproblem.

The Manager Agent operates by first analyzing the problem structure, identifying key challenges such as figure interpretation requirements or complex derivation needs. It then selects appropriate tools, executes them, and incorporates the results into its reasoning process. This cycle continues until the agent produces final answers for all subquestions, with each iteration building upon previous insights and tool outputs.
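The cycle described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation and not the smolagents API: the `ManagerAgent` class, the `decide` method (a rule-based stand-in for the LLM's reasoning step), and the tool names and return strings are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical tool type: takes a text request, returns a text observation.
Tool = Callable[[str], str]


@dataclass
class ManagerAgent:
    tools: Dict[str, Tool]
    max_steps: int = 5
    history: List[str] = field(default_factory=list)

    def decide(self, problem: str) -> Optional[Tuple[str, str]]:
        """Stand-in for the LLM's reasoning step (an assumption).

        Returns (tool_name, tool_input), or None when ready to answer.
        """
        used = {entry.split(":", 1)[0] for entry in self.history}
        if "figure" in problem and "image_analyzer" not in used:
            return "image_analyzer", problem
        if "answer_reviewer" not in used:
            return "answer_reviewer", problem
        return None

    def run(self, problem: str) -> List[str]:
        for _ in range(self.max_steps):
            action = self.decide(problem)                  # reason
            if action is None:
                break                                      # ready to answer
            name, tool_input = action
            observation = self.tools[name](tool_input)     # act
            self.history.append(f"{name}:{observation}")   # observe
        return self.history


# Placeholder tools that return canned strings.
agent = ManagerAgent(tools={
    "image_analyzer": lambda request: "read 2 values from plot",
    "answer_reviewer": lambda request: "no issues found",
})
trace = agent.run("Read the rotation speed from the figure")
print(trace)
```

In the real system the decision step is made by the LLM itself, which is what lets the agent choose tools per subproblem instead of following a fixed pipeline.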

The system's backbone relies on Gemini 2.5 Pro, chosen for its advanced multimodal capabilities and support for agentic workflows. This foundation model provides the core reasoning abilities while the specialized tools handle domain-specific challenges that would otherwise limit performance.

Innovative Physics-Specific Tools

The ImageAnalyzer tool addresses a critical weakness in current LLMs: precise interpretation of scientific figures and measurements. When Physics Supernova encounters diagrams requiring accurate readings, such as the galactic rotation curves in Theory Problem 1, it delegates this task to a dedicated vision-language model optimized for measurement precision. The tool returns not just numeric values but also uncertainty estimates, allowing the agent to account for measurement limitations in subsequent calculations.

A compelling example from the research demonstrates ImageAnalyzer's impact on Theory Problem 1 Part C, which required reading frequency values from a complex astrophysical plot. Direct LLM analysis produced measurement errors averaging 0.015 MHz, while the ImageAnalyzer reduced this to just 0.004 MHz, a nearly four-fold improvement that directly translated to higher part-level scores.
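The "nearly four-fold" figure follows directly from the two error values quoted above. The short check below uses only those two numbers; the variable names are illustrative.

```python
direct_error_mhz = 0.015  # mean error with direct LLM reading (from the text)
tool_error_mhz = 0.004    # mean error with ImageAnalyzer (from the text)

improvement_factor = direct_error_mhz / tool_error_mhz
relative_reduction = 1 - tool_error_mhz / direct_error_mhz

print(f"improvement factor: {improvement_factor:.2f}x")  # 3.75x
print(f"relative reduction: {relative_reduction:.0%}")   # 73%
```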

The AnswerReviewer tool embodies the self-critical thinking that characterizes expert physicists. This component systematically evaluates intermediate and final results for dimensional consistency, sign conventions, boundary condition compliance, and physical plausibility. The tool draws inspiration from historic physics breakthroughs where apparent contradictions, like the ultraviolet catastrophe, led to revolutionary insights. By catching errors that might otherwise propagate through multi-step solutions, AnswerReviewer significantly improves overall accuracy.
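To make the idea of a dimensional consistency check concrete, here is a small rule-based sketch. It is an assumption-laden illustration only: the AnswerReviewer described in the article is a tool whose internals are not reproduced here, and every name below (`Dim`, `mul`, `power`, `is_dimensionally_consistent`) is hypothetical.

```python
from typing import Tuple

# A dimension is a tuple of exponents over (mass, length, time).
Dim = Tuple[int, ...]

MASS: Dim = (1, 0, 0)
LENGTH: Dim = (0, 1, 0)
TIME: Dim = (0, 0, 1)


def mul(a: Dim, b: Dim) -> Dim:
    """Multiplying quantities adds their dimension exponents."""
    return tuple(x + y for x, y in zip(a, b))


def power(a: Dim, n: int) -> Dim:
    """Raising a quantity to the n-th power scales its exponents."""
    return tuple(x * n for x in a)


def div(a: Dim, b: Dim) -> Dim:
    """Dividing quantities subtracts dimension exponents."""
    return mul(a, power(b, -1))


def is_dimensionally_consistent(lhs: Dim, rhs: Dim) -> bool:
    """Both sides of an equation must carry identical dimensions."""
    return lhs == rhs


VELOCITY = div(LENGTH, TIME)
ENERGY = mul(MASS, power(VELOCITY, 2))  # kg m^2 s^-2

# E = m v^2 / 2: the factor 1/2 is dimensionless, so the check passes.
ok = is_dimensionally_consistent(ENERGY, mul(MASS, power(VELOCITY, 2)))
# E = m v: a slip in the exponent is caught.
bad = is_dimensionally_consistent(ENERGY, mul(MASS, VELOCITY))
print(ok, bad)
```

A check like this catches only one class of error; sign conventions, boundary conditions, and physical plausibility need separate tests or model-based review.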

The research also explores integration with WolframAlpha for accessing expert domain knowledge, particularly relevant for problems requiring specific physical constants or reference data. Testing on ten expert-knowledge problems showed marked improvement when the computational knowledge engine was available, suggesting pathways for even more sophisticated tool integration.

Detailed Performance Analysis

The experimental results reveal fascinating insights about AI performance on physics problems. Physics Supernova achieved scores of 9.02 ± 0.11, 6.08 ± 0.77, and 8.40 ± 0.27 on Theory Problems 1, 2, and 3 respectively. Notably, the system showed its greatest advantage over standalone LLMs on the most difficult problems, exactly where human expertise also becomes most critical.
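The per-problem means can be checked against the headline total and against the human top-10% scores quoted earlier (9.0, 5.0, and 8.0 points). The snippet below uses only numbers stated in this article; the dictionary keys are illustrative labels.

```python
agent_means = {"Theory 1": 9.02, "Theory 2": 6.08, "Theory 3": 8.40}
human_top10 = {"Theory 1": 9.0, "Theory 2": 5.0, "Theory 3": 8.0}

total = round(sum(agent_means.values()), 2)
margins = {
    name: round(agent_means[name] - human_top10[name], 2)
    for name in agent_means
}
largest_margin = max(margins, key=margins.get)

print(total)           # 23.5
print(margins)         # {'Theory 1': 0.02, 'Theory 2': 1.08, 'Theory 3': 0.4}
print(largest_margin)  # Theory 2
```

The margin is smallest on Theory Problem 1 and largest on Theory Problem 2, the problem with the lowest human top-10% score.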

Table 2: Experiment results for Physics Supernova (mean±std) across multiple problems and parts (with Gemini 2.5 Pro), for 5 rounds. Our agent results rank top 10% among human contestants on all three Theory problems. Credit: Qiu et al.

Theory Problem 2, focusing on thermodynamics and featuring Cox's timepiece mechanism, proved most challenging for both humans and AI. Here, Physics Supernova's tools provided the largest performance gains, with the complete system scoring 6.08 points compared to 5.30 for the base LLM alone. This pattern suggests that tool augmentation becomes increasingly valuable as problems require more sophisticated reasoning and domain-specific capabilities.

The ablation studies support the tool-based approach. Removing ImageAnalyzer decreased the total theory score from 23.5 to 22.8 points, while removing AnswerReviewer reduced it to 22.4 points. These decrements of 0.7 and 1.1 points, while seemingly modest, are the difference between exceeding the gold medalist median of 22.8 points and only matching or falling below it, demonstrating how incremental improvements in AI systems can yield qualitatively different outcomes.
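The size of each ablation effect, and where each variant lands relative to the gold medalist median, can be worked out from the figures above. All numbers come from the article; the labels are illustrative.

```python
full_system = 23.5
gold_median = 22.8
ablations = {
    "without ImageAnalyzer": 22.8,
    "without AnswerReviewer": 22.4,
}

# Points lost when each tool is removed.
drops = {name: round(full_system - score, 1) for name, score in ablations.items()}
# Whether each ablated variant still exceeds the gold medalist median.
exceeds_median = {name: score > gold_median for name, score in ablations.items()}

print(drops)                      # {'without ImageAnalyzer': 0.7, 'without AnswerReviewer': 1.1}
print(full_system > gold_median)  # True
print(exceeds_median)             # both False
```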

The system also showed lower run-to-run variance on the easier problems (standard deviations of 0.11 and 0.27 on Theory Problems 1 and 3) and higher variance on the hardest one (0.77 on Theory Problem 2), mirroring human performance patterns. This suggests that Physics Supernova is not simply memorizing solutions but is working through the same difficulties that make certain physics problems hard for human contestants.

Key Technical Innovations

Several technical aspects of Physics Supernova merit deeper examination. The system's use of summarization tools to compensate for smolagents' limited built-in memory demonstrates practical engineering solutions to framework limitations. The careful prompt engineering for both ImageAnalyzer and AnswerReviewer tools, detailed in the paper's appendices, provides replicable approaches for similar tool development.

The research methodology itself sets new standards for AI evaluation on scientific tasks. By conducting five independent runs per problem and reporting mean performance with standard deviations, the authors account for the stochastic nature of LLM outputs, a crucial consideration often overlooked in AI research. The careful mapping of agent scores onto official human score distributions provides meaningful context for performance claims.
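The two evaluation steps described here, summarizing repeated runs and placing a score within a human score distribution, can be sketched as follows. The data are invented placeholders for illustration and are not the paper's per-run scores or the official IPhO results. The use of the sample standard deviation and the "one plus the number of strictly higher scores" ranking rule are assumptions, since the article does not specify either.

```python
from statistics import mean, stdev
from typing import Sequence, Tuple


def summarize(run_scores: Sequence[float]) -> Tuple[float, float]:
    """Return (mean, sample standard deviation) over independent runs."""
    return mean(run_scores), stdev(run_scores)


def rank_among(agent_score: float, human_scores: Sequence[float]) -> int:
    """Rank = 1 + number of humans scoring strictly higher (assumed rule)."""
    return 1 + sum(score > agent_score for score in human_scores)


# Placeholder data, NOT real results.
toy_runs = [22.0, 23.0, 24.0, 23.0, 23.0]
toy_humans = [29.0, 25.5, 24.0, 22.5, 20.0, 18.0]

avg, spread = summarize(toy_runs)
rank = rank_among(avg, toy_humans)
print(f"{avg:.1f} ± {spread:.2f}, rank {rank} of {len(toy_humans) + 1}")
```

Reporting the spread alongside the mean matters because a single lucky or unlucky run could otherwise move the agent several places in the ranking.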

The integration testing with WolframAlpha demonstrates how modern AI agents can seamlessly incorporate external knowledge sources. This capability becomes particularly relevant as AI systems tackle increasingly specialized domains where comprehensive training data may be unavailable or rapidly evolving.

Implications for AI and Scientific Research

Physics Supernova's success carries implications that extend far beyond olympiad competitions. The system demonstrates that tool-augmented agents using frontier models can achieve expert-level performance on tasks requiring multi-modal reasoning, precise measurement, and domain-specific knowledge integration. This represents a potential pathway toward AI systems capable of contributing to actual scientific research workflows.

The modular design philosophy proves particularly significant. Rather than requiring massive, physics-specific model training, Physics Supernova shows how general-purpose frontier LLMs can be enhanced through targeted tool integration. This separation of concerns makes the approach more adaptable and cost-effective than alternatives requiring specialized model development.

For the broader AI community, the work provides a template for domain-specific agent development. The principles of flexible tool orchestration, specialized capability augmentation, and rigorous evaluation against expert benchmarks could readily apply to chemistry, biology, engineering, and other scientific disciplines.

The research also highlights the importance of evaluation methodology. IPhO's part-level scoring reveals capabilities that final-answer-only benchmarks might miss, providing a more nuanced view of AI reasoning abilities. This evaluation approach becomes increasingly important as AI systems approach human-level performance on various tasks.

Limitations and Future Directions

While Physics Supernova's achievements are impressive, important limitations remain. The system was evaluated only on theory problems and not on the hands-on experimental component, which accounts for 40% of IPhO scoring. Real-world physics research demands both theoretical reasoning and experimental design, implementation, and analysis capabilities that remain challenging for current AI systems.

The reliance on a single foundation model (Gemini 2.5 Pro) raises questions about the generalizability of results. Cross-model validation would strengthen claims about the tool-based approach versus model-specific capabilities. Additionally, the ranking methodology, while reasonable, relies on mapping agent scores onto human distributions rather than direct participation with live evaluation.

Future work might explore program-based experimental proxies, which the authors suggest could bridge the gap between pure theory and hands-on experimentation. Such approaches could evaluate experimental design and data analysis capabilities without requiring robotic manipulation of physical instruments. The integration of more sophisticated domain tools, real-time physics simulations, and collaborative multi-agent systems all represent promising research directions.

Conclusion

Physics Supernova represents a significant milestone in AI's journey toward scientific competence. By achieving gold-medalist-level performance on one of the world's most challenging physics competitions, the system demonstrates that tool-augmented agents can rival human experts on complex scientific reasoning tasks. The success stems not from brute-force scaling but from thoughtful integration of specialized capabilities that address the unique demands of physics problem-solving.

The work provides a compelling blueprint for advancing AI in scientific domains: identify the specific capabilities required for expert performance, develop targeted tools to address those needs, and integrate them through flexible agent architectures that preserve autonomy while leveraging specialization. This approach promises to be more sustainable and adaptable than alternatives requiring massive domain-specific model training.

For researchers interested in replication or extension, the authors provide comprehensive implementation details, tool prompts, and evaluation protocols. The Physics Supernova codebase, available on GitHub, offers a starting point for further development. As AI systems continue advancing toward artificial general intelligence, Physics Supernova's demonstration of expert-level scientific reasoning marks an important waypoint on that journey.

Definitions

International Physics Olympiad (IPhO): A prestigious annual physics competition for high school students, featuring theoretical and experimental problems that test deep conceptual understanding and problem-solving skills.

CodeAgent: An architectural pattern from the smolagents framework where a manager agent plans and executes tool usage through iterative code generation and execution cycles.

ImageAnalyzer: A specialized tool that routes high-resolution scientific figures to vision-language models for precise measurement and data extraction tasks.

AnswerReviewer: A tool that evaluates intermediate and final solutions for dimensional consistency, physical plausibility, and mathematical correctness.

Reason-Act Loop: An iterative process where the agent alternates between reasoning about the current state and taking actions (tool calls) to advance toward a solution.

smolagents: A lightweight framework for building agentic AI systems with emphasis on code-based tool integration and minimal predefined workflows.

References

(Qiu et al., 2025) Physics Supernova: AI Agent Matches Elite Gold Medalists at IPhO 2025. arXiv:2509.01659.

(IPhO 2025, 2025) Final results page with official scoring framework and contestant rankings.

(IPhO Olimpicos, 2025) Community-maintained index of official IPhO problems and solutions across multiple years.

(Comanici et al., 2025) Gemini 2.5: Pushing the Frontier with Advanced Reasoning, Multimodality, Long Context, and Agentic Capabilities.

(Roucher et al., 2025) smolagents: A lightweight library for building agentic AI systems with code-based tool integration.

