The paper "Build the web for agents, not agents for the web" proposes a reorientation of how we design the modern web: rather than forcing AI systems to operate through human-centered pages, we should build agent-first interfaces that expose structured, safe, and efficient operations to machine agents.

Authored by Xing Han Lù et al. at McGill University, the position paper argues that today's browser- and DOM-centric approaches saddle web agents with brittle perception, high token costs, and unclear safety boundaries.

The authors introduce Agentic Web Interfaces (AWIs): standardized, safety-conscious, developer-friendly interfaces that let agents perform high-level operations with predictable semantics, access controls, and clear success criteria.

This article synthesizes the paper, places it alongside related efforts such as real-world web benchmarks and web automation tooling, and examines how AWIs could reshape agent design and evaluation.

Key Takeaways

- AWIs propose designing the web with machine agents in mind, rather than adapting agents to human-centric UIs.

- Six guiding principles emerge: standardized, human-centric, safe, optimally represented, efficient to host, and developer-friendly.

- AWIs could reduce token and computation overhead by replacing raw DOM/screenshot parsing with compact, task-relevant state and high-level actions.

- Safety moves upstream: access control lists, user approvals for risky operations, and hardened channels become part of the interface contract.

- Compatibility with human-facing UIs can be maintained through bidirectional mappers built on automation tools like Playwright.

- Benchmarks such as WebArena, together with the safety measures introduced by ST-WebAgentBench, provide context for evaluating agents and AWIs in realistic, risk-aware scenarios.

- AWIs differ from the Model Context Protocol: MCP standardizes tool invocation within model runtimes, whereas AWIs standardize the web's surface itself.

- Agentic research trends like OpenAI's Deep Research underscore the momentum toward long-horizon, web-grounded workflows that would benefit from AWI-standardized environments.

Overview

Web agents today mainly rely on three regimes: screenshots of browser state, full or partial DOM trees, or a mixture of both. Screenshots provide visual grounding but hide off-screen or latent elements, and they balloon context when over-annotated. DOM trees carry rich structure but often exceed practical token budgets and include irrelevant noise.

APIs can bypass rendering altogether, but they are unevenly documented, inconsistent across sites, and usually not tailored to agentic safety and workflow needs. The result is an uneasy fit: agents emulate humans in a space not built for them, and both performance and safety suffer.

AWIs invert this relationship. Instead of parsing whatever the UI renders, an agent would access a compact, semantically meaningful state representation and invoke a stable set of high-level actions - open_product(id), add_to_cart(item_id), schedule_appointment(slot), and so on.
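
To make the idea of a compact state plus a stable action set concrete, here is a minimal sketch. The paper does not define an API, so every name in it (`ShopAWI`, `ShopState`, `invoke`, `list_actions`) is an assumption made for illustration; only the action names `open_product` and `add_to_cart` come from the text above.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class ShopState:
    """Compact, task-relevant state (hypothetical fields, not from the paper)."""
    current_product: Optional[str] = None
    cart: List[str] = field(default_factory=list)


class ShopAWI:
    """Hypothetical AWI facade exposing a small, stable set of high-level actions."""

    def __init__(self) -> None:
        self.state = ShopState()
        # The action table is the whole surface an agent can touch.
        self._actions: Dict[str, Callable[[str], None]] = {
            "open_product": self._open_product,
            "add_to_cart": self._add_to_cart,
        }

    def list_actions(self) -> List[str]:
        """Let an agent discover the available actions by name."""
        return sorted(self._actions)

    def invoke(self, name: str, arg: str) -> ShopState:
        """Run one named action and return the updated compact state."""
        if name not in self._actions:
            raise ValueError(f"unknown action: {name}")
        self._actions[name](arg)
        return self.state

    def _open_product(self, product_id: str) -> None:
        self.state.current_product = product_id

    def _add_to_cart(self, item_id: str) -> None:
        self.state.cart.append(item_id)
```

An agent working against such a facade would call `awi.invoke("add_to_cart", "p-1")` and read back a few structured fields, rather than locating a button in a DOM tree or a screenshot.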

The AWI defines what success means, governs what the agent may see or do, and can progressively transfer detail only when needed (for example, thumbnails or embeddings first, then higher resolution on demand). The interface can also mediate human approval for sensitive operations like payments or account deletion.
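
Progressive transfer can be sketched as a lookup that serves the cheapest representation by default and a richer one only when the agent asks for it. The level names, the toy catalog, and both function names below are assumptions for illustration, not part of the paper.

```python
from typing import Dict, Tuple

# Hypothetical detail levels, ordered from cheapest to richest.
LEVELS: Tuple[str, ...] = ("summary", "thumbnail", "full")

# Toy catalog standing in for whatever a real site would serve.
CATALOG: Dict[str, Dict[str, str]] = {
    "p-1": {
        "summary": "Red mug, 350 ml",
        "thumbnail": "<64x64 image bytes>",
        "full": "<2048x2048 image bytes>",
    }
}


def get_representation(item_id: str, level: str = "summary") -> str:
    """Return the requested representation; the default is the cheapest one."""
    if level not in LEVELS:
        raise ValueError(f"unknown level: {level}")
    return CATALOG[item_id][level]


def escalate(level: str) -> str:
    """Return the next richer level, or the same level if already at the richest."""
    idx = LEVELS.index(level)
    return LEVELS[min(idx + 1, len(LEVELS) - 1)]
```

The agent starts from `get_representation("p-1")` and calls `escalate` only when the summary is not enough to decide, so the expensive payload is transferred on demand rather than by default.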

Crucially, AWIs are compatible with the existing web. Sites could expose both their human-facing UI and an AWI. A bidirectional translator - implemented with automation frameworks such as Playwright - can sync AWI state to the UI and vice versa, enabling recording and replay, human-in-the-loop reviews, and hybrid agent-human workflows (Microsoft Playwright, 2025). This makes the transition evolutionary rather than disruptive.
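
A bidirectional mapper of this kind might look like the sketch below. It assumes `page` is a Playwright sync-API `Page` (or any object with compatible `click(selector)` and `fill(selector, value)` methods); the CSS selectors, the action names, and both function names are hypothetical and would differ on a real site.

```python
from typing import Any, Dict, Optional, Tuple

CART_PREFIX = "#add-to-cart-"  # hypothetical selector scheme

# Hypothetical mapping from AWI actions to (page method, selector template).
ACTION_TO_UI: Dict[str, Tuple[str, str]] = {
    "add_to_cart": ("click", CART_PREFIX + "{item_id}"),
    "search": ("fill", "#search-box"),
}


def apply_to_ui(page: Any, action: str, **params: str) -> None:
    """AWI -> UI: replay a high-level action as concrete page interactions."""
    method, template = ACTION_TO_UI[action]
    selector = template.format(**params)
    if method == "click":
        page.click(selector)
    elif method == "fill":
        page.fill(selector, params["query"])


def ui_event_to_action(clicked_selector: str) -> Optional[Dict[str, str]]:
    """UI -> AWI: translate a recorded human click back into an AWI action."""
    if clicked_selector.startswith(CART_PREFIX):
        item_id = clicked_selector[len(CART_PREFIX):]
        return {"action": "add_to_cart", "item_id": item_id}
    return None  # the click has no AWI equivalent in this toy mapping
```

Because the mapper only depends on two page methods, it can be exercised with a stub object in tests and pointed at a real browser session for human-in-the-loop review.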

Why It Matters

The AWI proposal addresses three persistent pain points for web agents: representation, reliability, and risk. First, it confronts the representation problem by defining a task-appropriate state and action space, providing clarity for models and reducing token waste.

Second, it improves reliability by standardizing behaviors across sites - open, search, select, transact - that agents can learn once and reuse. Third, it constrains risk: explicit access control lists (ACLs), privilege separation between user and agent, and approval gates reduce the blast radius of automation.
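
The combination of an ACL and an approval gate can be sketched in a few lines. The roles, the action names, and the `approve` callback are assumptions for illustration; a real deployment would tie them to its own identity and consent flows.

```python
from typing import Callable, Dict, Set

# Hypothetical ACL: which role may invoke which actions.
ACL: Dict[str, Set[str]] = {
    "agent": {"search", "add_to_cart", "checkout"},
    "user": {"search", "add_to_cart", "checkout", "delete_account"},
}

# Hypothetical set of actions that need explicit human consent when an agent acts.
HIGH_RISK: Set[str] = {"checkout", "delete_account"}


def authorize(role: str, action: str, approve: Callable[[str], bool]) -> bool:
    """Return True only if the ACL allows the action and any required approval is given."""
    if action not in ACL.get(role, set()):
        return False  # privilege separation: the role simply lacks this action
    if role == "agent" and action in HIGH_RISK:
        return approve(action)  # approval gate: defer to the human
    return True
```

Here `approve` stands in for whatever consent prompt the site shows the user. An agent can search freely, can check out only after approval, and can never delete the account because that action is absent from its ACL entry.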

This shift has cross-domain implications. In safety, AWIs allow policy-aware evaluation and proactive mitigation strategies. In reinforcement learning, they make reward computation easier by defining environment-grounded success criteria.
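
As a rough illustration of environment-grounded rewards, the sketch below treats success criteria as predicates over the interface state and returns a binary reward. The state keys and the criteria themselves are hypothetical; the paper does not prescribe a reward format.

```python
from typing import Callable, Dict, List

State = Dict[str, object]
Criterion = Callable[[State], bool]


def reward(state: State, criteria: List[Criterion]) -> float:
    """Binary reward: 1.0 only when every success criterion holds on the final state."""
    return 1.0 if all(check(state) for check in criteria) else 0.0


# Hypothetical criteria an AWI might publish for a checkout task.
checkout_criteria: List[Criterion] = [
    lambda s: s.get("order_status") == "confirmed",
    lambda s: s.get("items") == ["p-1"],
]
```

Because the criteria are evaluated on the interface's own state, the reward does not depend on parsing a confirmation page or on a learned judge.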

In multimodality, AWIs can deliver purposeful image/audio/video encodings better suited to agent reasoning than raw screenshots. And in human-centric design, AWIs encourage personalization and levels of autonomy aligned with user preferences and context.

Discussion

The paper enumerates six guiding principles for AWIs that together define a practical design north star.

- First, standardized: sites should converge on a shared action/state taxonomy so agents can generalize across ecosystems.

- Second, human-centric: the interface must be aligned to user needs and preferences, support human oversight, and preserve accessibility.

- Third, safe: defaults minimize harm, high-risk actions require consent, and adversarial channels are limited.

- Fourth, optimally represented: information is structured, compact, and progressively disclosed.

- Fifth, efficient to host: AWIs should manage load and queue agents to avoid degrading service for human users.

- Sixth, developer-friendly: building and maintaining an AWI should be feasible, with tooling, documentation, and backward compatibility.

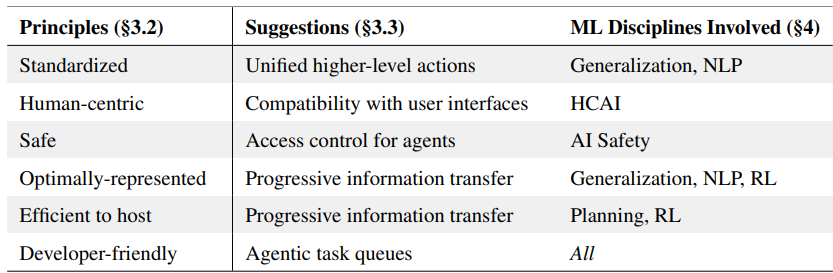

Table 1 in the paper pairs these principles with concrete suggestions and the ML disciplines best positioned to drive them. For instance, progressive information transfer aligns with NLP methods such as iterative summarization and retrieval augmentation; unified high-level actions connect to planning and reinforcement learning; and access control connects to AI safety. This principle-by-discipline mapping makes clear that AWIs are a multi-field project rather than a single library or spec.

Browser-based agents face representational limits: screenshot pipelines miss occluded or latent elements and ask models to reason over dense pixels; DOM-first pipelines can exceed 1M tokens on complex pages, driving up compute and cost.

AWIs aim to replace both with task-appropriate abstractions. In addition, resource challenges call for new infrastructure: agents account for a growing share of web traffic, crawlers repeat render cycles, and CAPTCHAs feed an escalating arms race. The authors argue that agent queues should throttle concurrent access and smooth demand, reserving capacity for human users.
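
One simple way to picture an agent queue is an admission controller that caps concurrent agent sessions and holds the rest in FIFO order. This is a sketch under stated assumptions: the class name, the slot model, and the `reserved_for_humans` parameter are all hypothetical, and the paper does not specify a queueing policy.

```python
from collections import deque
from typing import Deque, Optional, Set


class AgentQueue:
    """Caps concurrent agent sessions so the remaining capacity stays free for humans."""

    def __init__(self, total_slots: int, reserved_for_humans: int) -> None:
        if not 0 <= reserved_for_humans <= total_slots:
            raise ValueError("reserved_for_humans must be between 0 and total_slots")
        self.agent_limit = total_slots - reserved_for_humans
        self.active: Set[str] = set()
        self.waiting: Deque[str] = deque()

    def request(self, agent_id: str) -> bool:
        """Admit the agent if a slot is free; otherwise enqueue it (FIFO)."""
        if len(self.active) < self.agent_limit:
            self.active.add(agent_id)
            return True
        self.waiting.append(agent_id)
        return False

    def release(self, agent_id: str) -> Optional[str]:
        """Free a slot and admit the longest-waiting agent, if there is one."""
        self.active.discard(agent_id)
        if self.waiting and len(self.active) < self.agent_limit:
            admitted = self.waiting.popleft()
            self.active.add(admitted)
            return admitted
        return None
```

With `AgentQueue(total_slots=3, reserved_for_humans=1)`, at most two agents run at once; a third waits until one of them releases its slot.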

Safety is a central theme. The authors emphasize strict access control for agents, privacy-preserving credential flows, and explicit user approvals for high-risk actions. This aligns with external work on safety and trustworthiness for web agents, including benchmarks that report Completion under Policy (CuP) and Risk Ratio to quantify both task success and exposure to hazards (ST-WebAgentBench, 2024). AWIs can embed such policies directly into the interface contract: which actions are allowed, which disclosures are required, and how violations are detected.
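
To show how policy-aware metrics differ from a plain success rate, the sketch below computes three aggregates over a list of episodes. It is a simplified reading, not the benchmark's implementation: CuP is taken here as the fraction of tasks completed with zero policy violations, and the risk ratio as violations divided by policy checks pooled over all episodes, whereas the benchmark's own definitions may be more fine-grained. The `Episode` record and its fields are assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Episode:
    """One agent run (hypothetical record format)."""
    completed: bool        # did the agent finish the task?
    violations: int        # number of policy violations observed
    policies_checked: int  # number of policies that applied to the run


def completion_rate(episodes: List[Episode]) -> float:
    """Plain success rate, ignoring policy violations."""
    return sum(e.completed for e in episodes) / len(episodes)


def completion_under_policy(episodes: List[Episode]) -> float:
    """Fraction of episodes completed with zero violations (simplified CuP)."""
    return sum(e.completed and e.violations == 0 for e in episodes) / len(episodes)


def risk_ratio(episodes: List[Episode]) -> float:
    """Violations per policy check, pooled over all episodes (simplified)."""
    checked = sum(e.policies_checked for e in episodes)
    return sum(e.violations for e in episodes) / checked
```

The gap between `completion_rate` and `completion_under_policy` is the share of nominal successes that were achieved by breaking a rule, which is the quantity an AWI's interface-level policies are meant to shrink.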

The authors also compare AWIs to the Model Context Protocol. MCP standardizes how a model calls tools in its runtime environment - file systems, databases, web APIs - so that tool providers and model clients interoperate cleanly. AWIs, by contrast, propose that websites themselves adopt a machine-facing standard. In other words, MCP is about how models talk to tools; AWIs are about how the web talks to models (Model Context Protocol, 2024). These are complementary and, together, could enable end-to-end, reliable agent toolchains.

Finally, the paper suggests compatibility layers bridging AWIs and traditional UIs. With automation frameworks like Playwright, sites can reflect AWI actions in the human-facing UI for review and audit and mirror human actions back into the AWI state for reproducible agent training. Given the pace of agentic research - e.g., OpenAI's Deep Research for long-horizon browsing and verification - such harmonization could accelerate safe deployment by keeping humans in the loop (OpenAI, 2025; Microsoft Playwright, 2025).

Conclusion

AWIs reframe web automation from a perception problem into an interface design problem. If adopted, they promise more reliable agents, clearer safety boundaries, and lower costs. The proposal remains high-level - by design - and calls for cross-community participation: safety researchers to define guardrails; NLP and retrieval experts to optimize representations; RL and planning researchers to standardize actions and rewards; and HCI researchers to keep humans at the center. The next steps are concrete pilots on real sites, evaluations on realistic benchmarks, and early standards work among platform providers.

For practitioners, the message is actionable: start by sketching an AWI facade over a single workflow - checkout, scheduling, or support triage - backed by a policy-aware gateway and a Playwright-driven UI bridge. Iterate with measurements of success rate, latency, token cost, and safety incidents. The broader ecosystem will benefit as patterns converge and benchmarks evolve to reflect agent-first web design.

Definitions

Agentic Web Interface (AWI): A website-exposed interface designed for machine agents, providing compact state, high-level actions, safety controls, and clear success criteria (Lù et al., 2025).

Access Control List (ACL): A policy that specifies which identities or roles may perform which actions or read which data within the AWI environment.

Progressive Information Transfer: A strategy where coarse signals (thumbnails, embeddings, summaries) are sent initially and richer media are provided on demand.

Model Context Protocol (MCP): A protocol that standardizes how models interact with tools and resources in their runtime environment (Model Context Protocol, 2024).

WebArena: A realistic web-based benchmark for evaluating web agents in end-to-end tasks (Zhou et al., 2023).

ST-WebAgentBench: A benchmark emphasizing safety and trustworthiness in web agents, including measures such as CuP and Risk Ratio (ST-WebAgentBench, 2024).

References

(Lù et al., 2025) Build the web for agents, not agents for the web.

(Zhou et al., 2023) WebArena: A Realistic Web Environment for Building Autonomous Agents.

(ST-WebAgentBench, 2024) ST-WebAgentBench: A Benchmark for Evaluating Safety and Trustworthiness in Web Agents.

(Microsoft Playwright, 2025) Playwright official site and GitHub repository.

(Model Context Protocol, 2024) Model Context Protocol documentation.

(OpenAI, 2025) Introducing Deep Research.
