The landscape of artificial intelligence is rapidly evolving as researchers explore new ways to harness the collaborative power of multiple Large Language Models (LLMs). A groundbreaking paper from Ludwig Maximilian University of Munich and the Munich Center for Machine Learning introduces the Agentic Neural Network (ANN), a revolutionary framework that bridges the gap between neural network optimization principles and multi-agent LLM collaboration.

The ANN framework conceptualizes multi-agent collaboration as a layered neural network architecture, where each agent operates as a node and each layer forms a cooperative team focused on specific subtasks. This innovative approach combines the structured optimization principles of neural networks with the flexible reasoning capabilities of LLMs, enabling systems that can adapt and improve their collaborative strategies automatically.
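To make the layer-as-team analogy concrete, here is a minimal Python sketch. It assumes agents are plain text-to-text functions standing in for LLM calls, and that each layer merges its team's outputs with a single aggregation function. The names `Layer`, `forward`, and `join_lines` are hypothetical illustrations, not the authors' code.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical stand-ins: an "agent" is any function from text to text.
# In a real system each agent would wrap an LLM call with its own role prompt.
Agent = Callable[[str], str]
Aggregator = Callable[[List[str]], str]


@dataclass
class Layer:
    """One layer = one cooperative team of agents plus an aggregation function."""
    name: str
    agents: List[Agent]
    aggregate: Aggregator

    def run(self, task_input: str) -> str:
        # Every agent (node) in the team receives the same input from the previous layer.
        outputs = [agent(task_input) for agent in self.agents]
        # The aggregation function merges the team's outputs into one message.
        return self.aggregate(outputs)


def forward(layers: List[Layer], task: str) -> str:
    """Pass the task through the layers in order, like a feed-forward network."""
    x = task
    for layer in layers:
        x = layer.run(x)
    return x


def join_lines(outputs: List[str]) -> str:
    # Simplest possible aggregator: concatenate the team's outputs line by line.
    return "\n".join(outputs)


if __name__ == "__main__":
    planner = Layer("plan", [lambda t: f"plan for: {t}"], join_lines)
    solvers = Layer(
        "solve",
        [lambda t: f"solver A <- {t}", lambda t: f"solver B <- {t}"],
        join_lines,
    )
    print(forward([planner, solvers], "sort a list"))
```

Keeping agents as opaque callables means the same structure works whether a node is a real LLM call or a test stub.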

By implementing both forward and backward optimization phases, ANN demonstrates remarkable performance improvements across diverse benchmarks while maintaining the interpretability and modularity that makes multi-agent systems valuable for complex problem-solving.

Key Takeaways

- ANN introduces a novel neural network-inspired framework for organizing and optimizing multi-agent LLM collaboration

- The system employs textual backpropagation to refine agent prompts, roles, and coordination strategies automatically

- ANN outperforms existing baselines across four benchmark datasets: HumanEval (code generation), Creative Writing, MATH (mathematical reasoning), and DABench (data analysis)

- The framework achieves up to 93.9% accuracy on HumanEval using GPT-4o-mini, demonstrating cost-effective performance gains

- Dynamic team selection and momentum-based optimization enable robust adaptation to diverse task requirements

- The approach generalizes across different LLM backbones (GPT-3.5, GPT-4o-mini, GPT-4) without task-specific fine-tuning

- ANN provides a unified framework that reduces the need for manual prompt engineering and agent configuration

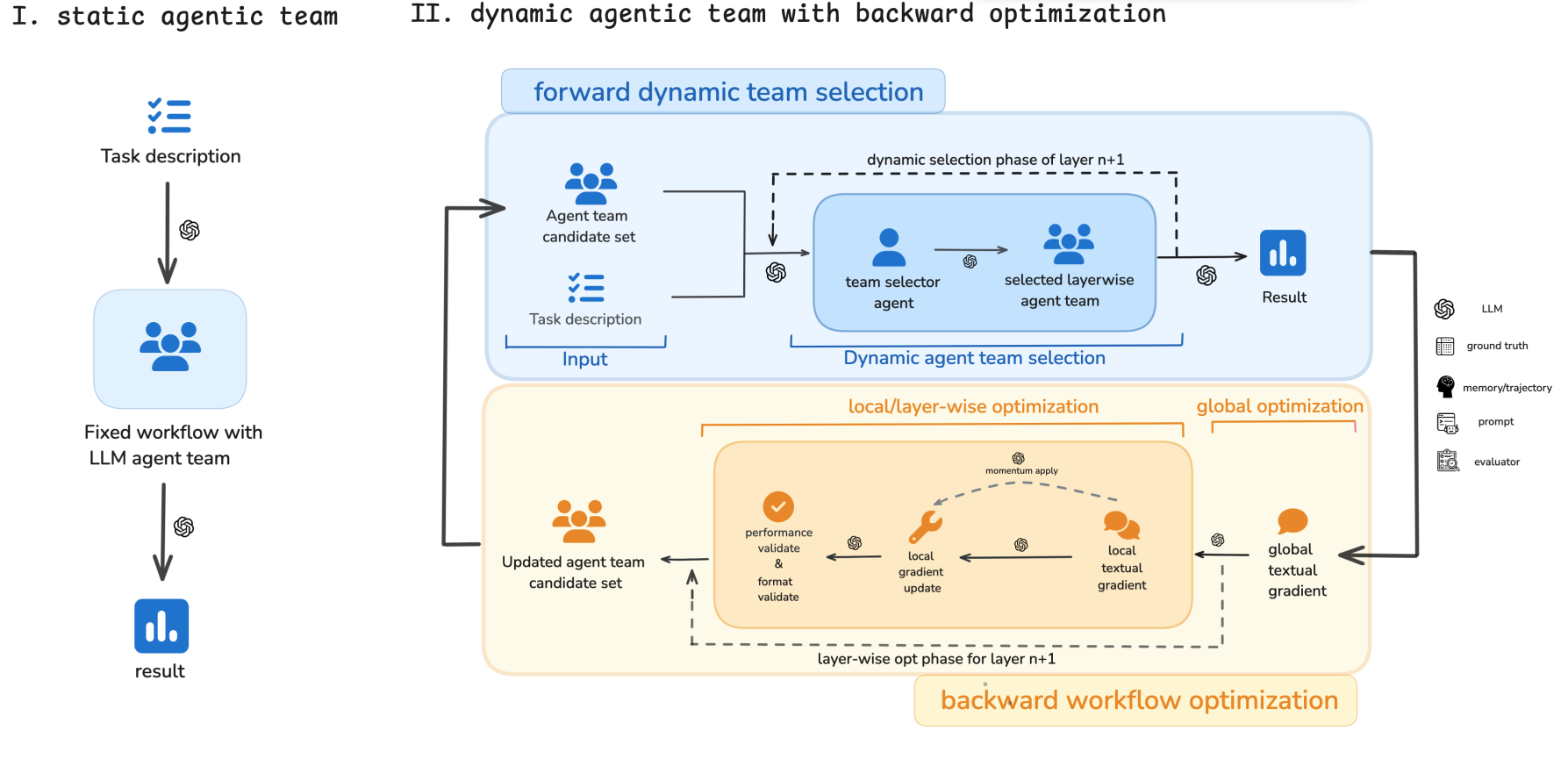

Figure 2: Difference between static agentic team and our framework. The left panel illustrates a static agentic team, where a fixed workflow is predefined for a given task without adaptability. In contrast, the right panel demonstrates our ANN framework, which dynamically selects and refines agent teams layer by layer. During the forward phase, …

Revolutionary Architecture Design

The Agentic Neural Network represents a fundamental reimagining of how multi-agent systems can be structured and optimized. Unlike traditional approaches that rely heavily on manual configuration and static workflows, ANN draws inspiration directly from neural network architecture and optimization principles. Each layer in the network consists of specialized agent teams that collaborate on specific subtasks, with information flowing seamlessly between layers through dynamic aggregation functions.

The forward phase of ANN focuses on dynamic team selection and task decomposition. During this phase, the system decomposes complex problems into structured subtasks and assigns specialized agent teams to each layer. The selection of aggregation functions is particularly sophisticated: a dynamic routing mechanism chooses each layer's aggregation function based on task complexity and execution history.

Each layer then runs its agent team on the input it receives and applies the selected aggregation function to the agents' outputs, producing the input for the next layer or, at the last layer, the final result.
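The routing idea can be illustrated with a small scoring rule. This is a hypothetical sketch, not the paper's routing mechanism: the candidate names, the hand-set `CAPACITY` values, the optimistic `prior`, and the "track record minus complexity mismatch" score are all assumptions chosen to show how task complexity and execution history could jointly drive the choice.

```python
from statistics import mean
from typing import Dict, List

# Hypothetical candidate aggregation functions with a hand-set "capacity":
# how well suited each one is to complex tasks (0 = cheap/simple, 1 = heavyweight).
CAPACITY: Dict[str, float] = {
    "concatenate": 0.0,
    "majority_vote": 0.3,
    "llm_summarize": 0.6,
    "debate_then_judge": 1.0,
}


def select_aggregator(
    complexity: float,
    history: Dict[str, List[float]],
    prior: float = 0.5,
) -> str:
    """Pick an aggregation function from past scores and estimated task complexity.

    complexity: estimated task complexity in [0, 1] (assumed to be computed elsewhere).
    history: past validation scores in [0, 1] for each candidate.
    prior: default score for candidates that have no history yet.
    """
    def score(name: str) -> float:
        past = history.get(name)
        track_record = mean(past) if past else prior
        # Penalize a mismatch between task complexity and the function's capacity.
        fit_penalty = abs(complexity - CAPACITY[name])
        return track_record - fit_penalty

    return max(CAPACITY, key=score)
```

For example, with a strong history for `concatenate`, a simple task (complexity 0.1) keeps that cheap aggregator, while a hard task (complexity 0.9) is routed to `debate_then_judge` because the mismatch penalty outweighs the track record.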

Why It's Important

The significance of ANN extends far beyond its impressive benchmark performance, representing a crucial step toward fully automated multi-agent system design. Traditional multi-agent frameworks require extensive manual engineering of prompts, role assignments, and coordination mechanisms, often involving trial-and-error approaches that are both time-consuming and suboptimal. ANN addresses this fundamental limitation by providing a data-driven, principled approach to multi-agent optimization that can automatically discover effective collaboration patterns.

The framework's importance is particularly evident in its cross-domain applicability and cost-effectiveness. By demonstrating strong performance across diverse tasks from mathematical reasoning to creative writing and code generation, ANN shows that neural network optimization principles can be successfully adapted to the discrete, textual domain of LLM collaboration.

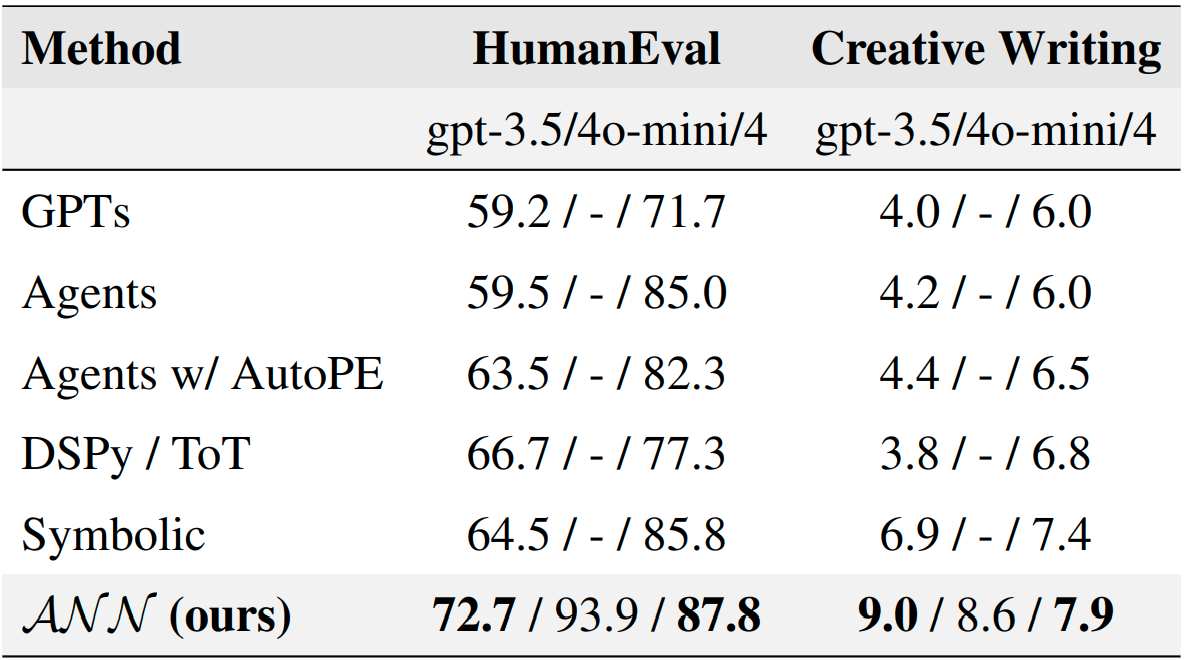

Table 1: Comparison results on HumanEval and Creative Writing benchmarks. The best results in each category are marked in bold. Credit: Ma et al.

The fact that GPT-4o-mini often achieves performance comparable to or exceeding more expensive models highlights the framework's potential for democratizing access to high-performance AI systems.

ANN's self-evolving capabilities also address a critical challenge in AI system deployment: the need for systems that can adapt to new domains and requirements without extensive reengineering.

The textual backpropagation mechanism enables continuous improvement of agent interactions and coordination strategies, potentially leading to systems that become more effective over time through experience. This adaptive capacity is essential for real-world applications where task requirements may evolve or where new challenges emerge that weren't anticipated during initial system design.

Textual Backpropagation and Mathematical Foundations

The backward optimization phase represents one of ANN's most innovative contributions, adapting the fundamental principles of neural network backpropagation to the textual domain.

When performance falls below predetermined thresholds, the system triggers a comprehensive optimization process that operates at both global and local levels.

The global optimization analyzes inter-layer coordination and refines the overall system architecture by computing a global textual gradient, that is, natural-language feedback on how well the layers work together.

Local optimization provides fine-grained adjustments to individual layers and agents. For each layer, it computes a local gradient that combines the global guidance with layer-specific feedback.
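A minimal sketch of the trigger and the two gradient levels follows, assuming a generic `critic` callable that stands in for an LLM returning natural-language feedback. The prompt wording, the function names `needs_backward`, `global_gradient`, and `local_gradients`, and the strict "below threshold" test are hypothetical; the point is only the data flow, where the global feedback is fed into every layer's local feedback request.

```python
from typing import Callable, Dict

# Hypothetical stand-in for an LLM call that returns natural-language feedback.
Critic = Callable[[str], str]


def needs_backward(score: float, threshold: float) -> bool:
    """Trigger the backward phase only when performance falls below the threshold."""
    return score < threshold


def global_gradient(critic: Critic, layer_outputs: Dict[str, str], task: str, score: float) -> str:
    """Request feedback on how the layers coordinate as a whole (the "global gradient")."""
    trace = "\n".join(f"[{name}] {out}" for name, out in layer_outputs.items())
    prompt = (
        f"Task: {task}\nScore: {score:.2f}\nExecution trace:\n{trace}\n"
        "Critique the coordination between layers and suggest structural changes."
    )
    return critic(prompt)


def local_gradients(critic: Critic, layer_outputs: Dict[str, str], global_grad: str) -> Dict[str, str]:
    """Combine the global guidance with layer-specific feedback for each layer."""
    grads = {}
    for name, out in layer_outputs.items():
        prompt = (
            f"Global feedback:\n{global_grad}\n"
            f"Output of layer '{name}':\n{out}\n"
            "Suggest concrete edits to this layer's agent prompts."
        )
        grads[name] = critic(prompt)
    return grads
```

Passing an echo function such as `lambda p: p` as the critic is a convenient way to inspect the prompts each level would send before wiring in a real model.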

To enhance stability and prevent oscillations, ANN incorporates momentum-based optimization, which uses historical gradient information to smooth successive updates rather than reacting only to the latest feedback.
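Momentum over text has no literal sum, so the following is only an analogy, not the paper's update rule. Numeric momentum keeps an exponential moving average, v_t = beta * v_{t-1} + (1 - beta) * g_t. The hypothetical `TextualMomentum` class below mimics that by decaying the weight of each past feedback string by `beta` every step and forgetting feedback whose weight drops below `min_weight`; both parameter values are assumptions.

```python
from typing import List, Tuple


class TextualMomentum:
    """Exponential-moving-average analogue for textual gradients (hypothetical sketch)."""

    def __init__(self, beta: float = 0.5, min_weight: float = 0.05) -> None:
        self.beta = beta
        self.min_weight = min_weight
        self.history: List[Tuple[str, float]] = []

    def update(self, gradient: str) -> List[Tuple[str, float]]:
        # Decay every stored gradient, then add the new one with weight (1 - beta),
        # mirroring v_t = beta * v_{t-1} + (1 - beta) * g_t.
        decayed = [(g, w * self.beta) for g, w in self.history]
        decayed.append((gradient, 1.0 - self.beta))
        # Forget feedback whose influence has become negligible.
        self.history = [(g, w) for g, w in decayed if w >= self.min_weight]
        return self.history

    def render(self) -> str:
        """Format the weighted feedback, most influential first, for the next prompt edit."""
        ordered = sorted(self.history, key=lambda item: item[1], reverse=True)
        return "\n".join(f"(weight {w:.2f}) {g}" for g, w in ordered)
```

With `beta=0.5`, feedback from three steps ago carries a quarter of the weight of the newest feedback, so a single noisy critique cannot fully overwrite a consistent earlier signal.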

This framework aims for smooth, stable optimization while retaining the flexibility needed in the textual domain. Format validation and performance validation act as filters on proposed updates, so that accepted changes keep agent communication well-formed and do not degrade measured performance.
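The two validation filters can be sketched as a simple accept/reject gate. The JSON format with `role` and `instructions` keys is purely a hypothetical stand-in for whatever communication protocol the agents actually use, and `evaluate` is an assumed callable returning a validation score; the sketch accepts a candidate only if it is well-formed and scores at least as well as the current prompt.

```python
import json
from typing import Callable


def is_valid_format(candidate: str) -> bool:
    """Hypothetical format check: a JSON object with 'role' and 'instructions' keys."""
    try:
        parsed = json.loads(candidate)
    except json.JSONDecodeError:
        return False
    return isinstance(parsed, dict) and all(k in parsed for k in ("role", "instructions"))


def accept_update(current: str, candidate: str, evaluate: Callable[[str], float]) -> str:
    """Keep the candidate only if it is well-formed and does not lower validation performance."""
    if not is_valid_format(candidate):
        return current  # format validation failed: reject
    if evaluate(candidate) >= evaluate(current):
        return candidate  # performance validation passed: accept
    return current
```

Rejecting malformed candidates before scoring also avoids spending validation calls on prompts that would break downstream agents.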

Comprehensive Experimental Validation

The experimental evaluation of ANN spans four diverse benchmark datasets, each testing different aspects of multi-agent collaboration and reasoning capabilities.

On HumanEval (Table 1 above), a standard benchmark for code generation tasks, ANN achieves remarkable performance improvements, reaching 72.7% accuracy with GPT-3.5, 93.9% with GPT-4o-mini, and 87.8% with GPT-4. These results significantly outperform previous baselines, with the GPT-4o-mini results being particularly noteworthy as they demonstrate that cost-effective models can achieve superior performance through effective collaboration frameworks.

The Creative Writing benchmark evaluates the system's ability to generate coherent, engaging passages built around four given sentences, each of which must end a paragraph. ANN's layered approach proves particularly effective for this task, achieving scores of 9.0 and 7.9 for GPT-3.5 and GPT-4 respectively.

The authors attribute this success to the structured, layer-wise approach that fosters creative synergy among specialized agents while maintaining logical consistency in narrative structure. This performance demonstrates ANN's versatility in handling both analytical and creative tasks.

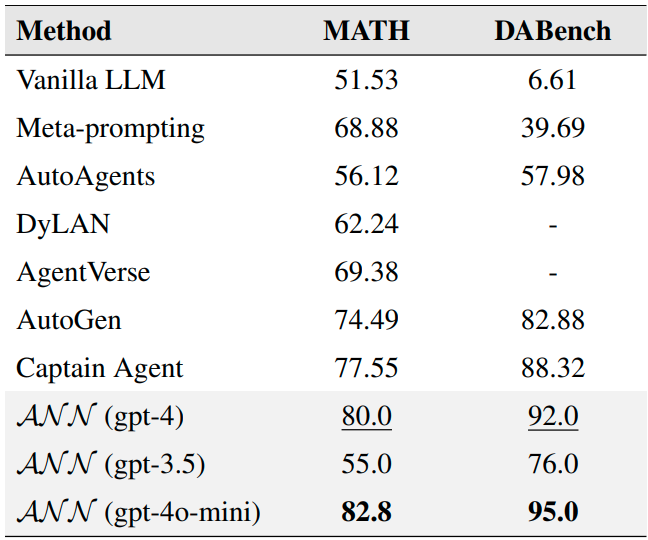

Table 2: Comparison results on the MATH and DABench datasets. The best results in each column are marked in bold, and the second-best results are underlined. All results without special annotation are based on GPT-4. Credit: Ma et al.

Mathematical reasoning capabilities are tested through the MATH dataset, which comprises challenging competition-level problems requiring multi-step symbolic reasoning. ANN demonstrates strong performance with accuracies of 55.0%, 82.5%, and 80.0% across GPT-3.5, GPT-4o-mini, and GPT-4 respectively.

The framework's ability to decompose complex mathematical problems into manageable subtasks while maintaining logical consistency across layers proves crucial for these challenging reasoning tasks.

On DABench, which focuses on data analysis tasks, ANN achieves 75.6%, 95.0%, and 88.88% across the three model variants, with GPT-4o-mini again showing exceptional performance.

Ablation Studies and Component Analysis

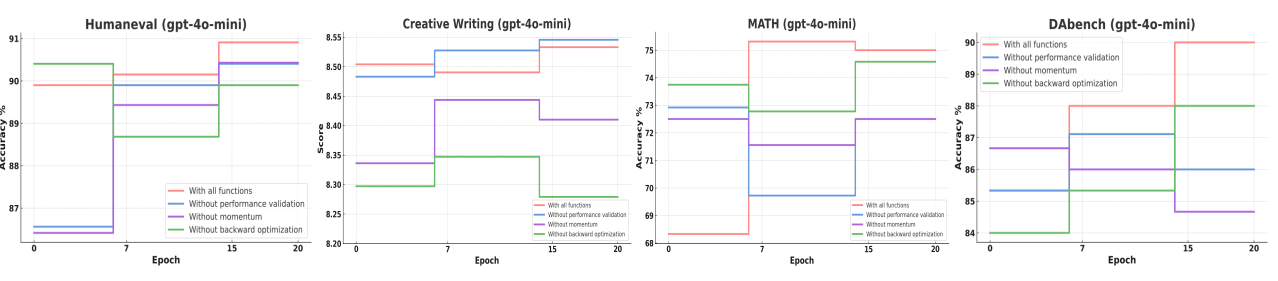

The comprehensive ablation studies conducted using GPT-4o-mini reveal the critical importance of each component within the ANN framework. The removal of momentum-based optimization shows the largest performance degradation on HumanEval, indicating that gradual accumulation of textual gradient signals is essential for code generation tasks that require precise correctness.

This finding aligns with the mathematical formulation where momentum helps smooth optimization trajectories and prevents oscillations that could destabilize the delicate balance required for accurate code generation.

Validation-based performance checks prove crucial for maintaining system reliability, particularly evident in the MATH benchmark where their removal leads to more erratic updates. Without proper validation filtering, suboptimal agent prompts can be accepted too frequently, leading to degraded performance in tasks requiring mathematical precision.

The backward optimization mechanism emerges as fundamental to the framework's success, with its removal resulting in significantly weaker improvements per training epoch across all tested datasets.

Figure 3: Ablation results on HumanEval, Creative Writing, MATH, and DABench using the gpt-4o-mini model for both training and validation. We compare the full ANN framework (red curve) against three ablated variants: w/o Validation Performance (blue curve), w/o Momentum (purple curve), and w/o Backward Optimization (green curve). Each curve shows average validation accuracy (or equivalent score) over three runs. The full ANN consistently outperforms all ablations, confirming the necessity of each component. Credit: Ma et al.

Figure 3 illustrates these ablation results across all four benchmarks, showing consistent upward trends in validation accuracy with the full ANN approach converging to the highest performance. The systematic nature of these improvements demonstrates that each component contributes synergistically to the overall framework effectiveness, validating the integrated design philosophy underlying ANN's architecture.

Computational Efficiency and Cost Analysis

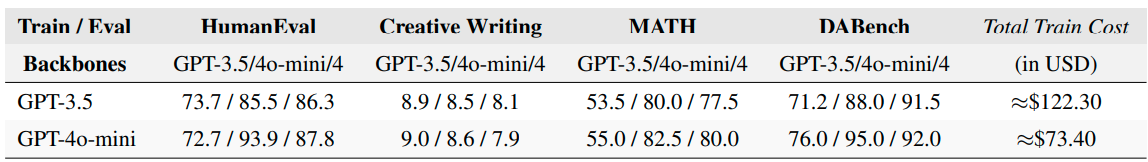

One of ANN's most compelling advantages lies in its computational efficiency and cost-effectiveness. The framework's training costs are estimated at approximately $73.40 when using GPT-4o-mini as the training backbone, compared to $122.30 for GPT-3.5, based on processing approximately 244.6 million input tokens. These costs are remarkably reasonable considering the comprehensive optimization performed across multiple datasets and the significant performance improvements achieved.
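The reported figures can be turned into an implied per-token rate with a quick calculation. The rates below are derived here by dividing the reported cost by the reported token count; they are blended averages, not published API prices, which distinguish input from output tokens and change over time.

```python
INPUT_TOKENS_M = 244.6  # millions of input tokens, as reported
reported_cost = {"gpt-4o-mini": 73.40, "gpt-3.5": 122.30}  # USD, as reported

for model, cost in reported_cost.items():
    # Implied blended price per million input tokens (derived, not stated in the paper).
    print(f"{model}: ${cost / INPUT_TOKENS_M:.2f} per 1M tokens")
# gpt-4o-mini: $0.30 per 1M tokens
# gpt-3.5: $0.50 per 1M tokens

savings = 1 - reported_cost["gpt-4o-mini"] / reported_cost["gpt-3.5"]
print(f"GPT-4o-mini training is about {savings:.0%} cheaper")
# GPT-4o-mini training is about 40% cheaper
```

In other words, training with GPT-4o-mini costs roughly 60% of the GPT-3.5 run for the same token volume.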

Table 4: Evaluation results across four benchmarks (HumanEval, Creative Writing, Math, and DABench) with two different training backbones (GPT-3.5 vs GPT-4o mini), evaluated across GPT-3.5, GPT-4o, and GPT-4. Training costs are estimated based on approximately 244.6M input tokens. Credit: Ma et al.

The efficiency gains extend beyond monetary considerations to include reduced human engineering effort and faster deployment. Traditional multi-agent system development often requires extensive manual prompt engineering and architecture design, followed by testing and refinement. ANN automates much of this process, enabling faster deployment of effective multi-agent systems in new domains with less need for specialized expertise in prompt engineering or agent coordination.

The framework's ability to generalize across different LLM backbones without retraining represents another significant efficiency advantage. Systems trained with GPT-4o-mini generalize effectively to more powerful models like GPT-4, so organizations can develop and test with cost-effective models before deploying higher-capacity alternatives in production. This flexibility reduces both development costs and deployment risks.

Future Directions and Limitations

Despite its impressive capabilities, ANN faces several limitations that present opportunities for future research and development. The framework's current reliance on manually defined initial structure candidates and node prompts limits its adaptability to completely novel domains.

The authors suggest that meta-prompt learning could address this limitation by generating initial layouts from accumulated agent experience, potentially enabling fully autonomous system initialization.

Computational overhead becomes a concern as the number of candidate teams grows, making efficient team identification increasingly challenging. Advanced pruning techniques, including periodic pruning and performance-driven filtering, could enhance efficiency while preserving the diversity necessary for effective exploration. However, such pruning must be carefully balanced to avoid homogenization that could reduce the system's adaptive capacity.
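Performance-driven filtering with a diversity floor can be sketched in a few lines. This is not something the paper implements (the authors list pruning as future work); the function name `prune_teams`, the `keep_fraction` and `min_keep` parameters, and ranking by mean validation score are all assumptions. Calling it every few epochs would correspond to periodic pruning.

```python
from statistics import mean
from typing import Dict, List


def prune_teams(
    scores: Dict[str, List[float]],
    keep_fraction: float = 0.5,
    min_keep: int = 2,
) -> List[str]:
    """Performance-driven pruning of candidate teams (hypothetical sketch).

    Keeps the best `keep_fraction` of teams by mean validation score, but never
    fewer than `min_keep`, so some diversity survives for further exploration.
    Assumes every team has at least one recorded score.
    """
    ranked = sorted(scores, key=lambda team: mean(scores[team]), reverse=True)
    n_keep = max(min_keep, int(len(ranked) * keep_fraction))
    return ranked[:n_keep]
```

The `min_keep` floor is the simple guard against homogenization: even an aggressive `keep_fraction` cannot collapse the pool to a single team.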

The static nature of agent roles once teams are instantiated presents another area for improvement. Dynamic role adjustment mechanisms that react in real-time to changing requirements would enhance adaptability for highly complex or evolving tasks. Additionally, the integration of multi-agent fine-tuning with global and local workflow optimization represents a promising direction for improving performance across diverse task categories while maintaining the framework's general applicability.

Conclusion

The Agentic Neural Network framework may successfully bridge the gap between neural network optimization principles and LLM collaboration. By introducing textual backpropagation and dynamic team selection mechanisms, ANN demonstrates that automated optimization can outperform manual engineering approaches across diverse domains. The framework's consistent performance improvements across HumanEval, Creative Writing, MATH, and DABench benchmarks validate its general applicability and effectiveness.

The research offers a pathway toward fully automated multi-agent system development that could democratize access to sophisticated AI capabilities. The cost-effectiveness demonstrated through GPT-4o-mini's strong performance suggests that high-quality multi-agent systems need not require the most expensive LLM variants, potentially making advanced AI collaboration accessible to a broader range of applications and organizations.

As AI systems become increasingly complex and multifaceted, frameworks like ANN that can automatically discover and optimize collaboration patterns will become essential tools for AI development. The integration of symbolic coordination with connectionist optimization principles pioneered by ANN may well represent the future direction of AI system architecture, enabling the development of truly self-evolving artificial intelligence systems. For researchers and practitioners interested in exploring this framework further, the authors have committed to open-sourcing the entire codebase, facilitating broader adoption and continued development of this promising approach.

Definitions

Agentic Neural Network (ANN): A framework that conceptualizes multi-agent LLM collaboration as a layered neural network architecture, where agents act as nodes and layers form cooperative teams.

Textual Backpropagation: An optimization process that uses natural language feedback from LLMs to refine agent prompts, roles, and coordination strategies, analogous to gradient-based optimization in traditional neural networks.

Dynamic Team Selection: A mechanism that automatically chooses agent configurations and aggregation functions based on task complexity and execution history, reducing the need for manual team composition.

Multi-Agent System (MAS): A computational system composed of multiple autonomous agents that interact and collaborate to solve complex problems beyond the capability of individual agents.

Large Language Model (LLM): A type of artificial intelligence model trained on vast amounts of text data, capable of understanding and generating human-like language for various tasks.

Aggregation Functions: Mathematical or logical operations that combine outputs from multiple agents within a layer to produce coherent input for subsequent layers or final results.

Momentum-based Optimization: A technique borrowed from neural network training that uses historical gradient information to smooth optimization trajectories and prevent oscillations during the learning process.

Agentic Neural Networks: Self-Evolving Multi-Agent Systems via Textual Backpropagation