Accessing different large language model providers has traditionally meant wrestling with unique APIs, compatibility issues, and the risk of getting stuck on a single platform. Mozilla.ai’s newly released Python library, any-llm, offers a unified, user-friendly interface to leading LLM providers regardless of who operates them or where they’re hosted.

What is Mozilla's any-llm?

any-llm is an open-source Python library from Mozilla.ai that gives developers one interface for calling models from many different LLM providers. Instead of writing and maintaining separate client code for each provider, you write your application against a single API and choose which provider and model to use.

At its core, any-llm is a lightweight wrapper. It calls each provider’s official SDK where possible and returns responses in a consistent, OpenAI-compatible format. It is not a standalone chat application or a hosted service, and it does not place a proxy between your code and the provider, so requests go directly to whichever provider you select.

The Importance of Provider Flexibility

When building applications with LLMs, developers must weigh not just the capabilities of each model, but also crucial factors like cost, latency, and security. The current landscape is fragmented, with options like OpenAI, Google, Mistral, Azure, AWS, and more.

any-llm reduces switching providers or models to a simple configuration change. That helps you break free from vendor lock-in and makes it easy to experiment with new options.
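As a rough illustration of what "switching by configuration" can look like, the sketch below reads a model identifier from an environment variable and splits it into a provider and a model name. The `provider/model` string format, the `LLM_MODEL` variable, and the `resolve_model` helper are all hypothetical choices made for this example, not any-llm’s documented API; check the any-llm documentation for the library’s actual model-naming convention.

```python
import os


def resolve_model(default: str = "openai/gpt-4o-mini") -> tuple[str, str]:
    """Split a 'provider/model' identifier into (provider, model).

    The identifier comes from the hypothetical LLM_MODEL environment
    variable and falls back to `default` when that variable is unset.
    """
    model_id = os.environ.get("LLM_MODEL", default)
    # Split on the first "/" only, because some model names contain slashes.
    provider, sep, model = model_id.partition("/")
    if not sep or not provider or not model:
        raise ValueError(f"expected 'provider/model', got {model_id!r}")
    return provider, model


if __name__ == "__main__":
    # Changing providers means changing this one setting, not the call sites.
    os.environ["LLM_MODEL"] = "mistral/mistral-small-latest"
    print(resolve_model())  # ('mistral', 'mistral-small-latest')
```

Because the provider choice lives in configuration, moving from one provider to another becomes a deployment setting rather than a code change.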

Key Features of any-llm

any-llm is designed for straightforward integration and broad compatibility. Wherever possible, it uses each provider’s official SDK instead of reimplementing the provider’s API, which reduces maintenance work and keeps behavior consistent with what the provider itself supports.

Setup is as simple as installing the library; there’s no need for a proxy server or external gateway. Requests go straight from your application to the provider you choose, so your data doesn’t pass through an extra intermediary and your application stays lightweight.

- Unified Python API: Interact with multiple LLMs using a single, simple interface.

- SDK-First Integration: Relies on official SDKs for seamless compatibility.

- No Extra Infrastructure: Skip proxy servers and extra gateways; just use the library.

- Active Maintenance: any-llm evolves alongside the popular any-agent project, ensuring regular updates and community support.

OpenAI-Compatible Outputs for Easy Integration

OpenAI’s API has become the de facto industry standard, but not every provider matches its interface exactly. any-llm normalizes provider responses into the OpenAI ChatCompletion format, letting you write code as if you’re using OpenAI while retaining the flexibility to switch to Anthropic, Mistral, and others without rewriting your application logic.

- Consistent Output: All providers return OpenAI-style objects for smooth integration.

- Lightweight Wrapping: Handles provider-specific quirks so you don’t have to.
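To make the normalization idea concrete, the sketch below converts a response shaped like Anthropic’s Messages API output (a list of typed content blocks plus a `stop_reason`) into a dictionary shaped like an OpenAI ChatCompletion. It handles plain dictionaries and text content only; the function name `to_chat_completion` and the stop-reason mapping are assumptions for illustration, not any-llm’s implementation, which returns typed OpenAI-style objects rather than dicts.

```python
import time
from typing import Any, Dict

# Assumed mapping from Anthropic stop reasons to OpenAI finish reasons.
_FINISH_REASONS = {
    "end_turn": "stop",
    "stop_sequence": "stop",
    "max_tokens": "length",
    "tool_use": "tool_calls",
}


def to_chat_completion(resp: Dict[str, Any]) -> Dict[str, Any]:
    """Reshape an Anthropic-style message dict into a ChatCompletion-style dict."""
    # Anthropic-style responses carry a list of content blocks; join the text ones.
    text = "".join(
        block["text"] for block in resp.get("content", []) if block.get("type") == "text"
    )
    usage = resp.get("usage", {})
    prompt_tokens = usage.get("input_tokens", 0)
    completion_tokens = usage.get("output_tokens", 0)
    return {
        "id": resp.get("id", ""),
        "object": "chat.completion",
        "created": int(time.time()),  # not present in the source response
        "model": resp.get("model", ""),
        "choices": [
            {
                "index": 0,
                "message": {"role": "assistant", "content": text},
                "finish_reason": _FINISH_REASONS.get(resp.get("stop_reason"), "stop"),
            }
        ],
        "usage": {
            "prompt_tokens": prompt_tokens,
            "completion_tokens": completion_tokens,
            "total_tokens": prompt_tokens + completion_tokens,
        },
    }


anthropic_style = {
    "id": "msg_123",
    "type": "message",
    "role": "assistant",
    "model": "claude-3-5-sonnet-latest",
    "content": [{"type": "text", "text": "Hello there!"}],
    "stop_reason": "end_turn",
    "usage": {"input_tokens": 10, "output_tokens": 4},
}

result = to_chat_completion(anthropic_style)
# Application code reads the reply the same way regardless of provider.
print(result["choices"][0]["message"]["content"])  # Hello there!
```

Once every provider’s output is reshaped like this, code such as `result["choices"][0]["message"]["content"]` works unchanged no matter which provider produced the reply.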

Supported Providers

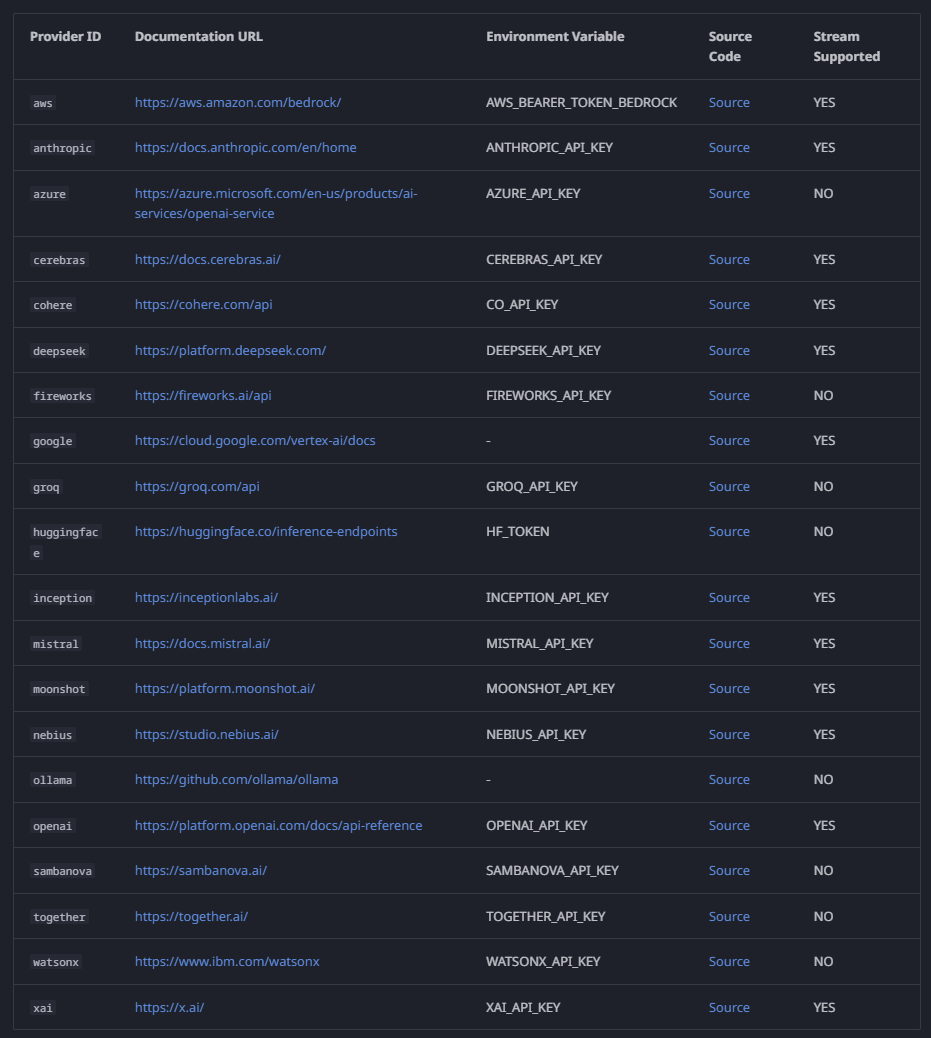

The image above shows the providers any-llm supported at the time of writing. Be sure to check the official list for the latest updates!

How any-llm Differs from Competing Solutions

Many libraries aim to unify LLM access, but each comes with trade-offs. LiteLLM supports many providers but doesn’t use official SDKs, leading to possible compatibility gaps. AISuite offers modularity but lacks strong typing and active maintenance. Framework-specific tools like Agno can be difficult to use outside their ecosystem. Proxy-based solutions such as OpenRouter and Portkey add infrastructure and dependencies that may not fit every use case.

any-llm stands out by:

- Leveraging official SDKs for robust reliability.

- Eliminating the need for extra infrastructure: just install and go.

- Delivering consistent, typed outputs that follow the OpenAI ChatCompletion format.

- Ensuring ongoing updates and tight integration with active projects.

Getting Started with any-llm

Developers are encouraged to explore any-llm by checking out the GitHub repository and reviewing the official documentation. With any-llm, you gain the flexibility to build LLM-powered apps that are portable and resilient in a fast-changing provider landscape.

Greater Freedom for AI Development

any-llm empowers developers to focus on innovation and application logic, not integration headaches. By standardizing access and outputs across providers, it makes LLM development more agile and future-proof. Try it today and experience a new level of control in your AI projects.

Source: Mozilla.ai Blog

Unlocking Flexibility: any-llm Simplifies Access to Multiple LLM Providers