Artificial intelligence has made remarkable advances in recognizing objects and sorting images, yet it struggles to truly see the world as we do. While AI can efficiently spot patterns and group images, it often misses the deeper relationships and abstract concepts humans naturally grasp. Recent research from Google DeepMind offers promising new strategies to close this gap, making AI vision more intuitive, organized, and robust.

Why AI and Human Perception Differ

Humans process visual information on multiple levels. When we look at an image, we recognize both simple features, such as color or shape, and higher-level concepts such as "animal" or "vehicle." AI vision models also organize images, placing them in an internal representation space, but their groupings frequently diverge from ours. For instance, where we would group a giraffe and a sea turtle together as animals, an AI model might focus on surface similarities such as color or background and miss the deeper connection.

This discrepancy becomes clear in the "odd-one-out" task, in which a participant sees three images and picks the one that doesn't fit. People and AI models often agree on obvious cases but differ on subtle ones. Models sometimes overlook hidden conceptual links, revealing a systematic misalignment in how they organize visual information.
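To make the task concrete, here is a minimal sketch of how a model's odd-one-out choice can be read off its embeddings. It assumes a common convention: the two items with the highest cosine similarity form the matching pair, and the remaining item is the odd one out. The three-dimensional vectors are made-up toy values, not outputs of any real model.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def odd_one_out(embeddings):
    """Return the index (0, 1, or 2) of the item that doesn't fit.

    The two most similar items are treated as the matching pair;
    the item left over is the odd one out.
    """
    assert len(embeddings) == 3
    best_pair, best_sim = None, -math.inf
    for i, j in [(0, 1), (0, 2), (1, 2)]:
        sim = cosine_similarity(embeddings[i], embeddings[j])
        if sim > best_sim:
            best_pair, best_sim = (i, j), sim
    # The odd one out is the only index not in the most similar pair.
    return ({0, 1, 2} - set(best_pair)).pop()

# Hypothetical toy embeddings (not real model outputs).
giraffe = [0.9, 0.1, 0.3]
sea_turtle = [0.8, 0.2, 0.1]
car = [0.1, 0.9, 0.2]

print(odd_one_out([giraffe, sea_turtle, car]))  # 2: the car is the odd one out
```

A model whose embeddings are dominated by surface features such as color or background would place the giraffe and sea turtle farther apart in this space, and could then pick an animal instead of the car.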

Three examples of the "odd-one-out" task, one per row, each showing three images of subjects from the natural world. The first row shows an easy case where humans and models agree. The second row shows a case where humans and AI models disagree. The third row shows a case where humans tend to agree with one another, but models make a different choice. Image Credit: Google DeepMind

Mapping AI's Vision: The Hidden Structure

To better understand this misalignment, researchers projected an AI model's internal representations onto a two-dimensional map. Before alignment, images from different categories (animals, furniture, food) were intermixed, with little meaningful structure. After the researchers applied a new alignment method, these categories became clearly separated, echoing the way humans intuitively organize concepts.

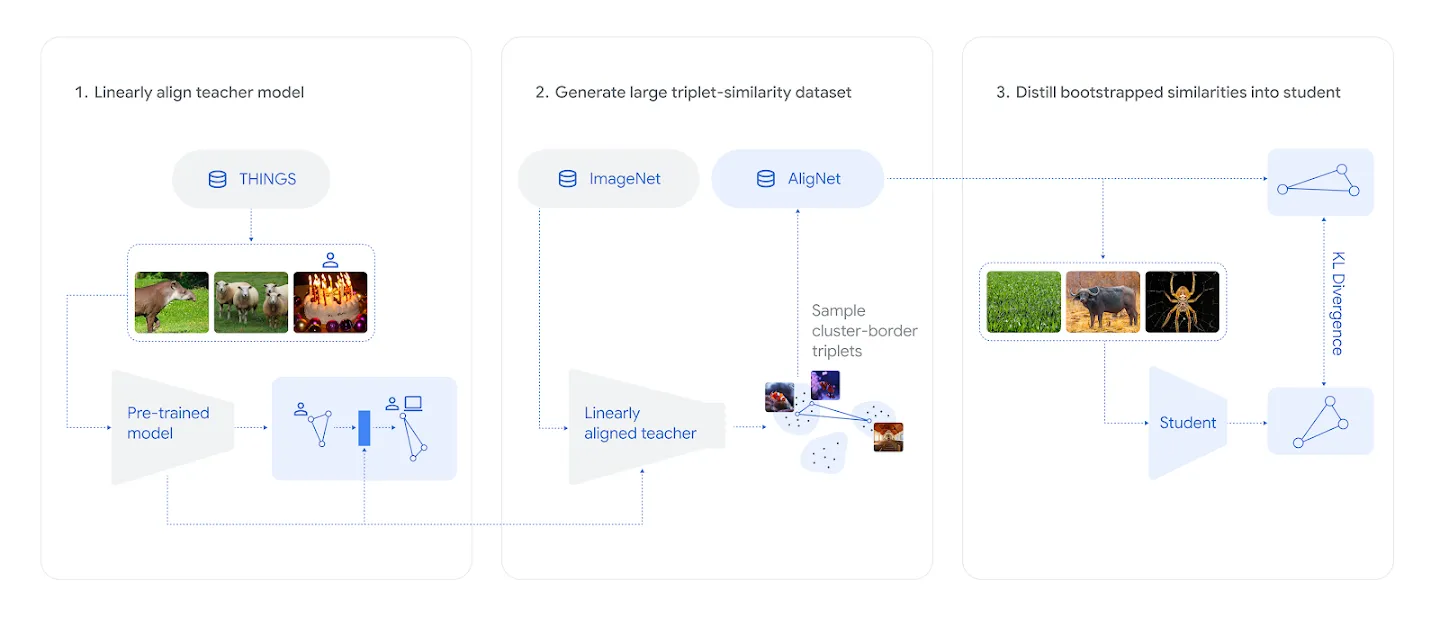

Diagram of the three-step model-alignment method. Credit: Google DeepMind

How DeepMind Brought AI Closer to Human Thinking

A Three-Step Alignment Process

Aligning AI with human perception isn't easy, especially because datasets with human judgments are limited. The DeepMind team introduced a three-step approach to overcome this challenge:

- Adapter Training: A small adapter was trained on top of a strong, pretrained vision model, using human odd-one-out judgments from the THINGS dataset. This let the model learn human-like groupings without erasing its existing knowledge.

- Teacher Model Generation: The adapted model then acted as a "teacher," generating millions of human-like odd-one-out decisions across a wider range of images. This process created the new AligNet dataset.

- Student Model Fine-Tuning: Finally, other models ("students") were fine-tuned on the AligNet dataset, learning to reorganize their own internal maps to better match human conceptual hierarchies.
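Steps 2 and 3 can be sketched as a teacher-student distillation loop over triplets. The article does not specify the exact objective, so the following is an assumption: each item's probability of being the odd one out is a softmax over the similarity of the *other* two items, and the student is scored by the KL divergence between the teacher's and its own choice distributions. The names `build_teacher_dataset` and `distillation_loss` are hypothetical, the embeddings are toy values, the adapter from step 1 is not shown, and only the loss that fine-tuning would minimize is computed. No actual training is performed.

```python
import math
import random

def dot(a, b):
    """Dot-product similarity (assumes roughly normalized embeddings)."""
    return sum(x * y for x, y in zip(a, b))

def odd_one_out_probs(embeddings, temperature=1.0):
    """Soft odd-one-out choice over a triplet of embeddings.

    Item k is the odd one out when the other two items form the most
    similar pair, so item k's score is the similarity of that pair.
    """
    other_pair = {0: (1, 2), 1: (0, 2), 2: (0, 1)}
    scores = [dot(embeddings[i], embeddings[j]) / temperature
              for i, j in (other_pair[k] for k in range(3))]
    m = max(scores)  # subtract the max for a numerically stable softmax
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))

def build_teacher_dataset(teacher_embed, images, n_triplets, seed=0):
    """Step 2 sketch: sample triplets and record the teacher's soft choices."""
    rng = random.Random(seed)
    dataset = []
    for _ in range(n_triplets):
        triplet = rng.sample(images, 3)
        probs = odd_one_out_probs([teacher_embed(x) for x in triplet])
        dataset.append((triplet, probs))
    return dataset

def distillation_loss(student_embed, dataset):
    """Step 3 sketch: mean KL between teacher and student choice distributions."""
    total = 0.0
    for triplet, teacher_probs in dataset:
        student_probs = odd_one_out_probs([student_embed(x) for x in triplet])
        total += kl_divergence(teacher_probs, student_probs)
    return total / len(dataset)

# Toy, made-up embeddings standing in for a hypothetical adapted teacher.
teacher = {
    "giraffe": [0.9, 0.1, 0.3],
    "sea turtle": [0.8, 0.2, 0.1],
    "car": [0.1, 0.9, 0.2],
    "bus": [0.2, 0.8, 0.3],
}
# A hypothetical student that ignores the animal/vehicle distinction.
student = {name: [0.5, 0.5, v[2]] for name, v in teacher.items()}

data = build_teacher_dataset(teacher.__getitem__, list(teacher), n_triplets=20)
print(distillation_loss(teacher.__getitem__, data))  # 0.0: perfect agreement
print(distillation_loss(student.__getitem__, data))  # positive: misaligned
```

Using soft probabilities rather than hard labels lets the student learn how confident the teacher was on each triplet, not just which item it picked. Minimizing this loss with respect to the student's weights would pull its internal map toward the teacher's.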

This approach allowed AI models to learn nuanced, hierarchical relationships, such as grouping animals by species or separating food from vehicles, mirroring the way we naturally structure knowledge.

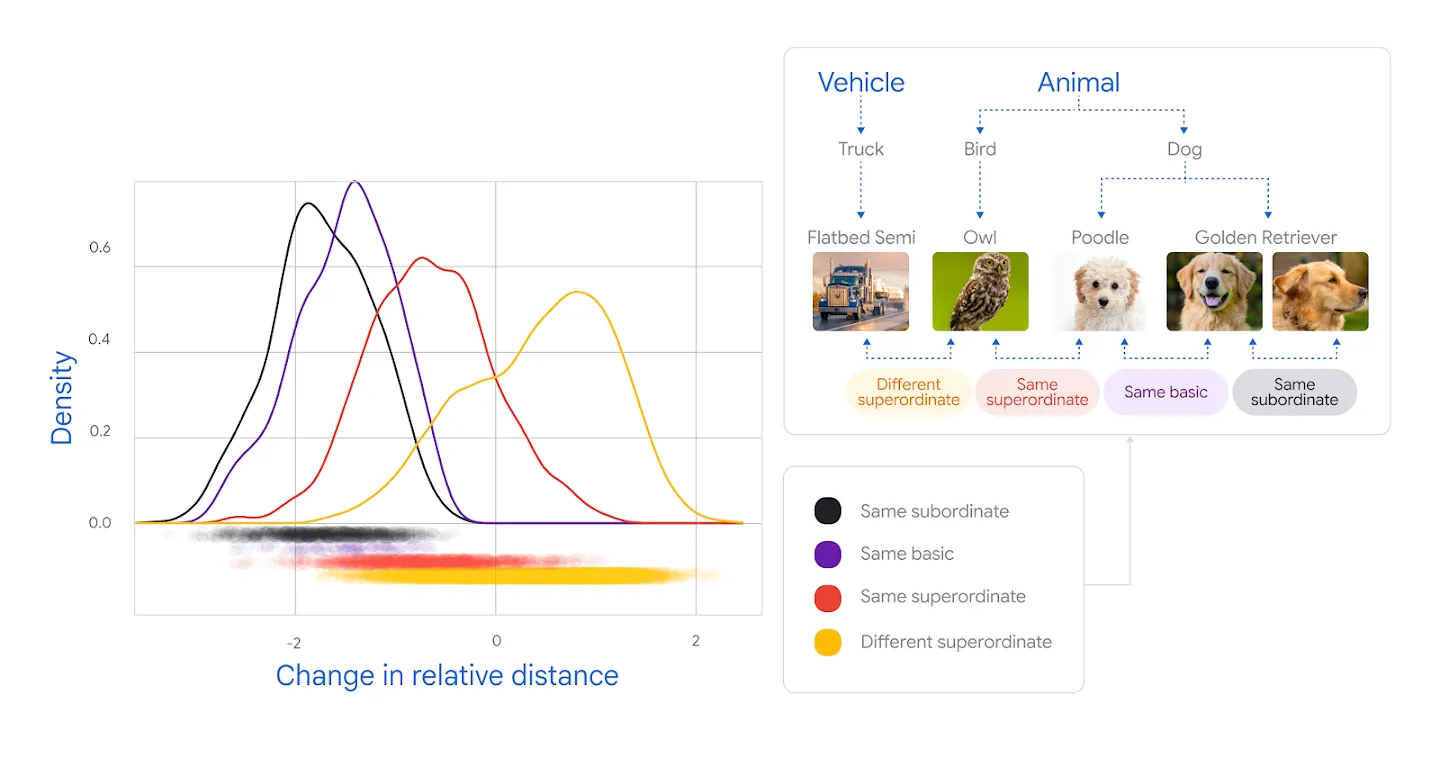

A line graph shows the change in relative distances between human and AI representations: representations of very similar categories tend to move closer together, while representations of less similar pairs of objects tend to move further apart. Image Credit: Google DeepMind

Evaluating Success: Closer to Human-Like Understanding

To test their results, researchers used a variety of cognitive science tasks, including a new dataset called Levels, which collects human similarity judgments at several levels of abstraction. The aligned models agreed with human decisions far more often and even showed human-like "uncertainty": they were least certain on ambiguous cases, the same ones on which people took longer to decide.
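The article does not say how model uncertainty was measured. One common proxy, used here purely as an assumption, is the entropy of the model's odd-one-out choice distribution: near zero when one pair clearly stands out, and at its maximum of log(3) when all three choices are equally likely. The softmax-over-pair-similarities formulation and the `temperature` value are illustrative choices, and the embeddings are toy values.

```python
import math

def odd_one_out_probs(embeddings, temperature=0.1):
    """Softmax over the similarity of the pair left when each item is removed."""
    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))
    other_pair = [(1, 2), (0, 2), (0, 1)]  # the pair left when item k is removed
    scores = [dot(embeddings[i], embeddings[j]) / temperature for i, j in other_pair]
    m = max(scores)  # numerically stable softmax
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def choice_entropy(probs):
    """Shannon entropy in nats: 0 = fully certain, log(3) = maximally unsure."""
    return -sum(p * math.log(p) for p in probs if p > 0)

clear = [[0.9, 0.1, 0.3], [0.8, 0.2, 0.1], [0.1, 0.9, 0.2]]      # two animals + a car (toy)
ambiguous = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # no pair stands out

print(choice_entropy(odd_one_out_probs(clear)))      # small: one pair clearly stands out
print(choice_entropy(odd_one_out_probs(ambiguous)))  # about 1.0986, i.e. log(3)
```

To compare with people, one would compute this entropy for each triplet and correlate it with human response times on the same triplets.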

These human-aligned models also performed better on standard AI benchmarks. They showed improved few-shot learning, recognizing new categories from only a handful of examples, and remained reliable even on unfamiliar images unlike those seen in training. This suggests that alignment brings not only more human-like judgments but also stronger generalization and robustness.

Looking Ahead: The Promise of Human-Aligned AI

Aligning AI perception with human conceptual structures is a significant step toward AI that is more intuitive, trustworthy, and useful in real-world scenarios. While complete alignment remains a challenge, this research shows that the gap between machine and human perception can be substantially narrowed. As AI continues to evolve, such advances bring us closer to systems that can genuinely understand and interact with our world.


Teaching AI to See the World Through Human Eyes: Bridging the Perception Gap