Adapting AI models for business is often a trade-off between generic tools and high-cost, complex customization. Amazon Bedrock is revolutionizing this landscape by introducing reinforcement fine-tuning, making powerful, feedback-driven model optimization accessible and practical for more developers and organizations.

The Power of Reinforcement Fine-Tuning

Traditional fine-tuning requires large labeled datasets and advanced machine learning expertise, making it expensive and slow. In contrast, reinforcement fine-tuning uses feedback and reward signals, allowing models to learn through iterative improvement without exhaustive labeling. This method leads to smarter, more tailored models and delivers notable accuracy gains, on average, a 66% improvement over base models.

How Amazon Bedrock Streamlines the Process

Bedrock automates and simplifies the reinforcement fine-tuning workflow, lowering entry barriers for developers. The process includes:

- Data Integration: Seamlessly use API logs or upload datasets, with built-in validation and automatic format conversion, even supporting OpenAI Chat Completions format.

- Reward Functions: Define success using custom Python code (executed with AWS Lambda) for objective tasks, or leverage foundation models as judges for more subjective cases.

- Parameter Flexibility: Tweak training hyperparameters like learning rate, batch size, and epochs as needed.

- Security: Data and models stay private within AWS's secure ecosystem, with Virtual Private Cloud (VPC) and AWS KMS encryption support to meet compliance needs.

Two Approaches to Reinforcement Fine-Tuning

Amazon Bedrock supports both:

- Reinforcement Learning with Verifiable Rewards (RLVR): Ideal for tasks with clear, objective outcomes such as code generation or mathematical reasoning, using rule-based graders.

- Reinforcement Learning from AI Feedback (RLAIF): Employs AI judges for subjective evaluation, perfect for instruction following or content moderation.

Step-by-Step: Building and Deploying Fine-Tuned Models

Getting started is straightforward:

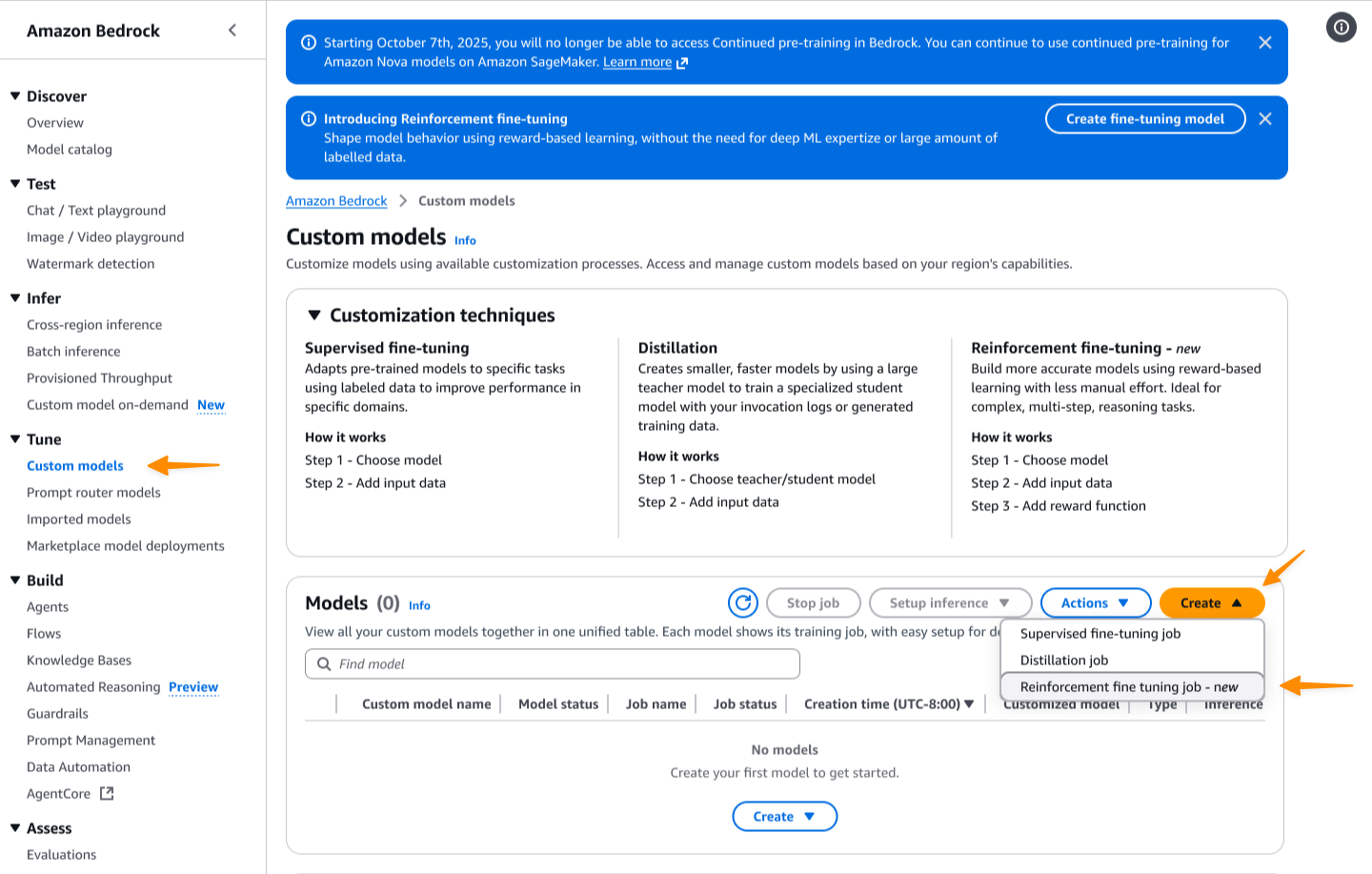

- Open the Bedrock console and navigate to "Custom models," then select "Reinforcement fine-tuning job."

- Choose a base model (beginning with Amazon Nova 2 Lite, with more options coming soon).

- Provide your training data via logs, file uploads, or Amazon S3 datasets.

- Set up your reward function,either custom Lambda code or a model-judge, depending on your scenario.

- Optionally adjust training parameters and specify security settings like VPC and KMS encryption.

- Launch the job and monitor real-time metrics: track reward scores, loss curves, and accuracy gains to ensure progress.

- Once training finishes, deploy your model with a single click and test its performance in the Bedrock playground before production integration.

Additional Features and Resources

Bedrock provides seven ready-to-use reward function templates for common use cases, accelerating setup. Security is a priority, with private data handling and encryption options throughout. Pricing is transparent on the Bedrock pricing page, and extensive documentation and interactive demos help new users get up to speed quickly.

Takeaway: Empowering Smarter AI for All

Reinforcement fine-tuning in Amazon Bedrock democratizes advanced AI customization, removing barriers of cost and complexity. By automating the workflow and offering flexible, secure tools, Bedrock enables developers to easily iterate, deploy, and optimize AI for their specific needs. Ready to elevate your AI? Dive into Bedrock's demos and documentation to begin your journey.

Let's Put Your Fine-Tuned Models to Work

Thanks for reading! Reinforcement fine-tuning opens exciting possibilities for tailoring AI to your specific needs, but the magic really happens when those models become part of a larger intelligent system. With more than 20 years of experience building data-driven solutions for organizations ranging from startups to companies like Samsung and Google, I help businesses move beyond isolated AI experiments to fully integrated automation that transforms operations.

Are you ready to put your fine-tuned models to work? Whether you need help designing evaluation pipelines, building the applications that consume AI outputs, or automating entire workflows around your customized models, I can help bridge the gap between AWS capabilities and your business goals. My software development and automation services are designed to help you get real ROI from your AI investments. I'd love to schedule a free consultation and explore what's possible together.

Source: AWS News Blog

Reinforcement Fine-Tuning: Amazon Bedrock's Breakthrough for Smarter AI Models