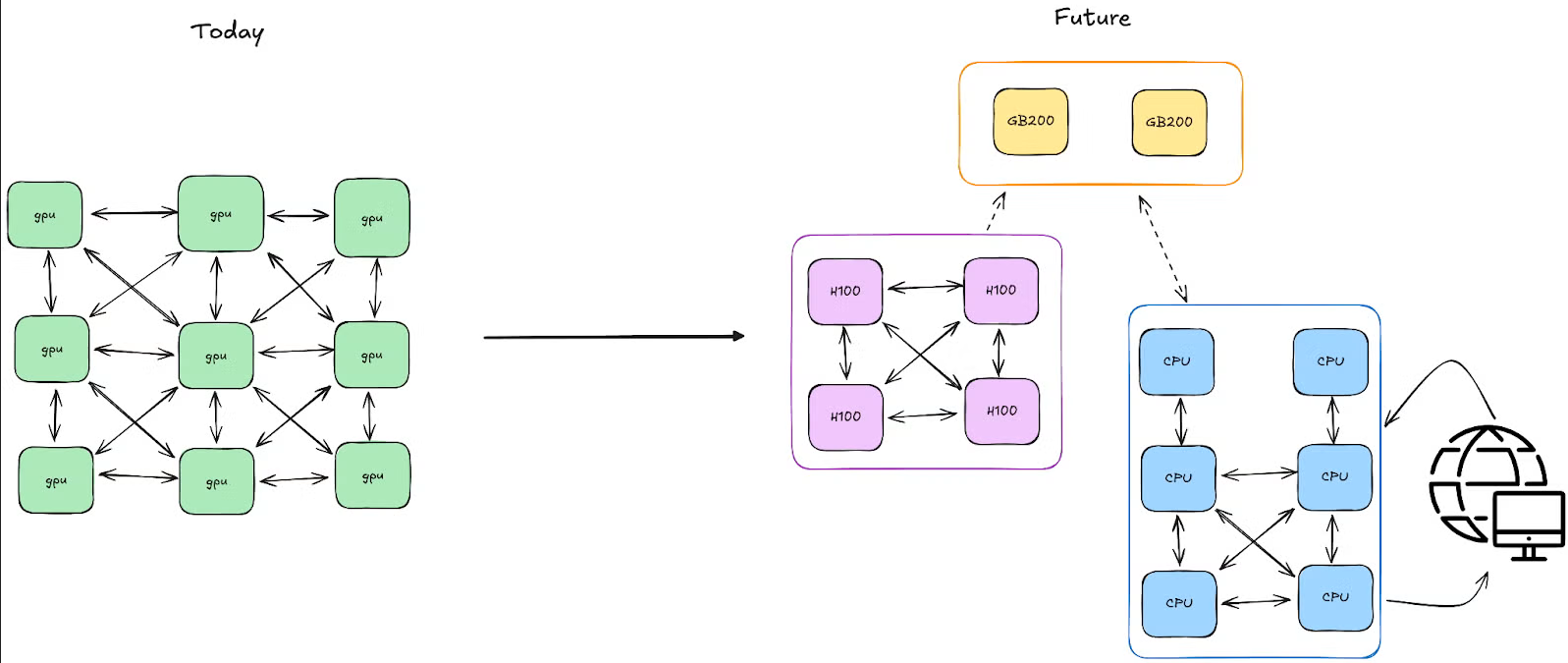

We now live in a world where ML workflows such as pre-training, post-training, and everything in between are heterogeneous, must contend with hardware failures, and are increasingly asynchronous and highly dynamic.

Traditionally, PyTorch has relied on an HPC-style multi-controller model, where multiple copies of the same script are launched across different machines, each running its own instance of the application (often referred to as SPMD, or Single Program Multiple Data).

While this approach has served the community well, ML workflows are becoming more complex: pre-training might combine advanced parallelism with asynchrony and partial failure, while RL models used in post-training require a high degree of dynamism with complex feedback loops.

The logic of these workflows may be relatively straightforward, but they are notoriously difficult to implement well in a multi-controller system, where each node must decide how to act based on only a local view of the workflow's state.

PyTorch's Answer to Distributed Computing Complexity

The PyTorch team at Meta believes that the long-term sustainable way to address this is through a single-controller programming model, in which a single script orchestrates all distributed resources, making them feel almost local. This architectural shift simplifies distributed programming: your code looks and feels like a single-machine Python program, but can scale across thousands of GPUs.

Image Credit: Meta

Key Takeaways

- Single-controller simplicity: Monarch replaces complex multi-controller coordination with a single Python script that orchestrates thousands of GPUs

- Modern ML demands: Pre-training and RL workflows now require handling asynchrony, partial failures, and dynamic feedback loops at scale

- Local-to-distributed: Write code that looks like single-machine Python but scales seamlessly across entire GPU clusters

- Four core principles: Program clusters like arrays with meshes, progressive fault handling with try-except blocks, separate control/data planes with RDMA, and distributed tensors that feel local

- Rust-powered performance: Python frontend for ease of use, Rust backend (hyperactor/hyperactor_mesh) for production-grade performance and scalability

- Proven at scale: Successfully orchestrated RL training on 2,048 GPUs, achieved 60% faster recovery than full job restarts, and scaled from 16 to 2,048 GPUs progressively

- Production-ready integrations: Works with VERL, TorchFT, TorchTitan, Lightning AI, and includes TorchForge for native RL workflows

- Interactive debugging: Drive entire GPU clusters from Jupyter notebooks with persistent compute, workspace sync, and distributed debugging capabilities

- Real-world resilience: Handles one failure every 3 hours in 16K GPU jobs with 90-second process recovery and 2.5-minute machine recovery times

The Framework That Makes Clusters Feel Like Single Machines

Monarch is a distributed programming framework that brings the simplicity of single-machine PyTorch to entire clusters. It lets you program distributed systems the way you'd program a single machine, hiding the complexity of distributed computing through four key principles:

- Program clusters like arrays. Monarch organizes hosts, processes, and actors into scalable meshes that you can manipulate directly. You can operate on entire meshes (or slices of them) with simple APIs. Monarch handles the distribution and vectorization automatically, so you can think in terms of what you want to compute and not where the code runs.

- Progressive fault handling. With Monarch, you write your code as if nothing fails. When something does fail, Monarch fails fast by default, stopping the whole program, just like an uncaught exception in a simple local script. Later, you can progressively add fine-grained fault handling exactly where you need it, catching and recovering from failures just like you'd catch exceptions.

- Separate control from data. Monarch splits the control plane (messaging) from the data plane (RDMA transfers), enabling direct GPU-to-GPU memory transfers across your cluster. Monarch lets you send commands through one path while moving data through another, optimized for what each does best.

- Distributed tensors that feel local. Monarch integrates seamlessly with PyTorch to provide tensors that are sharded across clusters of GPUs. Monarch tensor operations look local but are executed across large distributed clusters, with Monarch handling the complexity of coordinating across thousands of GPUs.

Programming with Meshes and Actors

At the heart of Monarch's programming model are two key APIs: process meshes and actor meshes. A process mesh is an array of processes spread across many hosts; an actor mesh is an array of actors, each running inside a separate process. Like array programming in NumPy or PyTorch, meshes make it simple to dispatch operations efficiently across large systems.

At launch, Monarch supports process meshes over GPU clusters, typically one process per GPU, onto which you can spawn actors into actor meshes. For local development, the same meshes can also run on a local development server, providing a consistent development experience from laptop to supercomputer.

A simple example demonstrates the elegance of this approach. You define an actor class with a method, spawn instances of that actor on eight GPUs on the local host, invoke methods across all actors simultaneously, and collect results. The controller invokes operations across the entire mesh and waits for responses. No explicit coordination code is required.
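To make the shape of that example concrete, here is a minimal, standard-library-only sketch of the pattern. It is a toy model and not Monarch's API: `ToyActor`, `ToyActorMesh`, and `call` are hypothetical names, and threads stand in for the per-GPU processes that a real mesh would manage.

```python
from concurrent.futures import ThreadPoolExecutor


class ToyActor:
    """Stand-in for an actor: holds its rank and exposes one method."""

    def __init__(self, rank: int) -> None:
        self.rank = rank

    def say_hello(self, name: str) -> str:
        return f"hello {name} from rank {self.rank}"


class ToyActorMesh:
    """Hypothetical mesh: broadcasts a method call to every actor."""

    def __init__(self, actor_cls, size: int) -> None:
        self.actors = [actor_cls(rank) for rank in range(size)]

    def call(self, method: str, *args):
        # Threads stand in for remote processes; results return in rank order.
        with ThreadPoolExecutor(max_workers=len(self.actors)) as pool:
            futures = [pool.submit(getattr(a, method), *args) for a in self.actors]
            return [f.result() for f in futures]


mesh = ToyActorMesh(ToyActor, size=8)        # "spawn" eight actors
replies = mesh.call("say_hello", "world")    # one call, eight responses
```

The controller code is two lines: create the mesh, then call a method on all of it. Everything about where and how the calls run is hidden inside the mesh object.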

Monarch expresses broadcasted communication by organizing actors into multidimensional meshes with named dimensions. For instance, a cluster might have dimensions like hosts: 32 and gpus: 8, creating a clear logical structure for your distributed resources.

You can slice meshes to target specific subsets, similar to NumPy array slicing. For example, you might have half of your actors execute one operation while the other half execute a different operation, simply by slicing the mesh along the GPU dimension. This vectorized approach to distributed computing eliminates the need for explicit loops and conditionals that check which node is executing the code.
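The slicing idea can be sketched in plain Python. This is an illustration under assumptions, not Monarch's implementation: `ToyMesh`, its `slice` method, and the dimension sizes are hypothetical, and the "operations" are ordinary local functions.

```python
from itertools import product


class ToyMesh:
    """Hypothetical mesh with named dimensions, e.g. {"hosts": 2, "gpus": 4}."""

    def __init__(self, dims: dict, points=None) -> None:
        self.dims = dims
        names = list(dims)
        # Each point is a coordinate dict such as {"hosts": 1, "gpus": 3}.
        self.points = points if points is not None else [
            dict(zip(names, coord))
            for coord in product(*(range(n) for n in dims.values()))
        ]

    def slice(self, **selectors) -> "ToyMesh":
        """Keep the points whose coordinates fall inside each given slice."""
        kept = [
            p for p in self.points
            if all(p[d] in range(*s.indices(self.dims[d])) for d, s in selectors.items())
        ]
        return ToyMesh(self.dims, kept)

    def call(self, fn):
        """Apply fn at every point; a real mesh would run this remotely."""
        return [fn(p) for p in self.points]


mesh = ToyMesh({"hosts": 2, "gpus": 4})
first_half = mesh.slice(gpus=slice(0, 2))
second_half = mesh.slice(gpus=slice(2, 4))

# Each half runs a different operation, with no "if rank < N" checks anywhere.
a = first_half.call(lambda p: ("op_a", p["hosts"], p["gpus"]))
b = second_half.call(lambda p: ("op_b", p["hosts"], p["gpus"]))
```

The selection logic lives in the slice, so the operation itself never needs to ask which node it is running on.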

Users can express distributed programs that handle errors through pythonic try-except blocks. Complex fault detection and fault recovery schemes can be built on top of these primitives. A fault recovery example showcases handling a simple runtime exception in a remote actor, where one slice of actors successfully returns results while another slice encounters an error that's cleanly caught and handled just like local Python code.
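A rough sketch of that fault recovery pattern, using only the standard library, might look like the following. The function names and the simulated fault are assumptions for illustration. In Monarch the failure would originate in a remote actor, whereas here it is raised in a worker thread and re-raised in the caller.

```python
from concurrent.futures import ThreadPoolExecutor


def work(rank: int) -> int:
    """Toy actor method: ranks 4 through 7 hit a simulated runtime fault."""
    if rank >= 4:
        raise RuntimeError(f"simulated fault on rank {rank}")
    return rank * rank


def call_slice(ranks):
    """Run work() on each rank; the first failure surfaces as an exception."""
    with ThreadPoolExecutor(max_workers=len(ranks)) as pool:
        futures = [pool.submit(work, r) for r in ranks]
        return [f.result() for f in futures]


healthy = call_slice(range(0, 4))      # this slice returns results

try:
    faulty = call_slice(range(4, 8))   # this slice raises
except RuntimeError as err:
    faulty = None
    error_message = str(err)           # handled like any local exception
```

Without the `try`/`except`, the exception would propagate and stop the script, which mirrors the fail-fast default described earlier.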

Advanced Capabilities

Monarch's tensor engine brings distributed tensors to process meshes. It lets you write PyTorch programs as if the entire cluster of GPUs were attached to the machine running the script. Operations on these distributed tensors are automatically coordinated across the cluster, with Monarch handling sharding, communication, and synchronization.

For bulk data movement, Monarch provides an RDMA buffer API, enabling direct, high-throughput transfers between processes on supported network interface cards. This separation of control and data planes ensures that large tensor transfers don't block the control messages that orchestrate your workflow.
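The separation can be illustrated with a small single-process analogy. This is not Monarch's RDMA buffer API: the `data_plane` dictionary, the `register_buffer` helper, and the message fields are hypothetical stand-ins. The point is only that the control message stays tiny while the payload is accessed directly, without being copied through the message queue.

```python
import queue

data_plane = {}                 # handle -> registered buffer (stand-in for RDMA memory)
control_plane = queue.Queue()   # carries only small command messages


def register_buffer(handle: str, buf: bytearray) -> str:
    """Make a buffer reachable by handle, as a registration step would."""
    data_plane[handle] = buf
    return handle


# Sender: register a large payload, then send a tiny command that references it.
payload = bytearray(1_000_000)
handle = register_buffer("weights-v1", payload)
control_plane.put({"op": "load_weights", "handle": handle, "nbytes": len(payload)})

# Receiver: read the command, then view the bytes in place.
command = control_plane.get()
view = memoryview(data_plane[command["handle"]])  # aliases the buffer, no copy
```

The command that travels over the control path is a few dozen bytes regardless of how large the payload grows.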

The Rust-Powered Backend

Monarch is split into a Python-based frontend and a backend implemented in Rust. Python is the lingua franca of machine learning, and the Python frontend APIs allow users to seamlessly integrate with existing code and libraries (like PyTorch) and to use Monarch with interactive computing tools like Jupyter notebooks.

The Rust-based backend provides the performance, scale, and robustness required for production deployments. The team leverages Rust's fearless concurrency throughout Monarch's implementation.

At the bottom of the stack is hyperactor, a Rust-based actor framework focused on performant message passing and robust supervision. Built on top of hyperactor is hyperactor_mesh, which combines its various components into an efficient "vectorized" actor implementation oriented toward providing actor operations cheaply over large meshes. Monarch's core Python APIs are fairly thin wrappers around hyperactor_mesh, ensuring minimal overhead.

Scalable Messaging Architecture

Everything in Monarch relies on scalable messaging: the core APIs for casting messages to large meshes of actors. Hyperactor achieves this through two key mechanisms:

- Multicast trees. To support multicasting, Hyperactor sets up multicast trees to distribute messages. When a message is cast, it is first sent to some initial nodes, which then forward copies of the message to a set of children, and so on, until the message has been fully distributed throughout the mesh. This avoids single-host bottlenecks by effectively using the whole mesh as a distributed cluster for message forwarding.

- Multipart messaging. The control plane is never in the critical path of data delivery. Monarch uses multipart messaging to avoid copying, to enable sharing data across high-fanout sends (such as those in multicast trees), and to materialize into efficient, vectorized writes managed by the operating system.
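The benefit of a multicast tree can be seen in a short simulation. The layout below is an assumption chosen for simplicity (a single root and a fixed fan-out in heap order); the source does not specify how Hyperactor shapes its trees, and the function names are hypothetical.

```python
def multicast_tree(n_nodes: int, fanout: int) -> dict:
    """Map each node index to the children it forwards to (k-ary heap layout)."""
    return {
        i: [c for c in range(fanout * i + 1, fanout * i + fanout + 1) if c < n_nodes]
        for i in range(n_nodes)
    }


def cast(tree: dict, root: int = 0) -> dict:
    """Simulate a cast and return the number of hops needed to reach each node."""
    hops = {root: 0}
    frontier = [root]
    while frontier:
        next_frontier = []
        for node in frontier:
            for child in tree[node]:
                hops[child] = hops[node] + 1
                next_frontier.append(child)
        frontier = next_frontier
    return hops


tree = multicast_tree(n_nodes=64, fanout=4)
hops = cast(tree)
max_sends_per_node = max(len(children) for children in tree.values())
```

With 64 nodes and a fan-out of 4, every node is reached within 3 hops and no node sends more than 4 copies. A direct broadcast from the root would instead require 63 sends from a single host.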

Real-World Applications: Three Case Studies

Case Study 1: Reinforcement Learning at Scale

Reinforcement learning has been critical to the current generation of frontier models. RL enables models to do deep research, perform tasks in an environment, and solve challenging problems in domains like mathematics and code generation. The complexity of RL workflows makes them an ideal test case for Monarch's capabilities.

In a typical RL training loop for reasoning models, generator processes feed prompts to a reasoning model specializing in a specific domain (e.g., code generation). The generator uses these prompts to derive a set of solutions or trajectories (executable code in this example), often interacting with the world through tools (like a compiler) and environments.

Reward pipelines evaluate these solutions and produce scores. These scores and rewards are used to train the model, whose updated weights are then transferred back to the systems that generated the prompt responses. This constitutes a single training loop, a real-time pipeline of heterogeneous computations that must be orchestrated and scaled individually.

When implementing this in Monarch, each component (generator, trainer, inference engine, reward pipeline) is represented by a mesh. The training script uses these meshes to orchestrate the overall flow: telling the generator mesh to start working from a new batch of prompts, passing the data to the training mesh when complete, and updating the inference mesh when a new model snapshot is ready. Because Monarch supports remote memory transfers (RDMA) natively, the actual data is transferred directly between members of meshes, just like copying a tensor from one GPU to another.
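The control flow described above can be sketched as ordinary sequential Python. Everything here is a toy: the three functions stand in for the generator, reward, and trainer meshes, the reward rule is arbitrary, and the "weights" are just a version number. The sketch is meant to show the shape of a single-controller loop, not Monarch's API.

```python
def generator_mesh(prompts, weights_version):
    """Toy generator: one trajectory per prompt, tagged with the policy version."""
    return [{"prompt": p, "answer": len(p), "policy": weights_version} for p in prompts]


def reward_mesh(trajectories):
    """Toy reward pipeline: score 1.0 when the answer is even, else 0.0."""
    return [dict(t, reward=1.0 if t["answer"] % 2 == 0 else 0.0) for t in trajectories]


def trainer_mesh(scored, weights_version):
    """Toy trainer: 'updates' the weights by bumping the version number."""
    mean_reward = sum(t["reward"] for t in scored) / len(scored)
    return weights_version + 1, mean_reward


def controller(batches):
    """Single controller: the whole loop reads top to bottom in one place."""
    version, history = 0, []
    for prompts in batches:
        trajectories = generator_mesh(prompts, version)   # generate with current weights
        scored = reward_mesh(trajectories)                # evaluate the solutions
        version, mean_reward = trainer_mesh(scored, version)  # train, get new weights
        history.append((version, mean_reward))
    return history


history = controller([["ab", "abc"], ["abcd", "ef"]])
```

Because one script sees the whole loop, the ordering between generation, scoring, and weight updates is explicit rather than negotiated between independent nodes.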

The team integrated Monarch with VERL (Volcano Engine Reinforcement Learning), a widely used RL framework. They post-trained the Qwen-2.5-7B math model using GRPO on a curated math dataset and evaluated it on the AIME 2024 benchmark. Training ran for 500+ steps on H200 GPUs using Megatron-LM, scaling progressively from 16 to 64 to 1,024 to 2,048 GPUs. The runs were stable and achieved numerical parity with existing options, demonstrating that Monarch can orchestrate existing RL frameworks effectively.

TorchForge represents a different approach: a PyTorch-native RL framework designed from the ground up with Monarch primitives. TorchForge's goal is to let researchers express RL algorithms as naturally as pseudocode, while Monarch handles the distributed complexity underneath. The result is code with no explicit distributed coordination and no retry logic, just RL written in Python using async/await patterns.

This clean API is possible because TorchForge builds two key abstractions on Monarch's primitives. Services wrap Monarch ActorMeshes with RL-specific patterns, leveraging Monarch's fault tolerance, resource allocation, and mailbox system while adding patterns like load-balanced routing (.route()), parallel broadcasts (.fanout()), and sticky sessions for stateful operations. TorchStore is a distributed key-value store for PyTorch tensors that handles weight synchronization between training and inference. Built on Monarch's RDMA primitives and single-controller design, it provides simple DTensor APIs while efficiently resharding weights on the fly, critical for off-policy RL where training and inference use different parallelism layouts.
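The two routing patterns named above can be approximated with `asyncio`. This is a sketch under assumptions and not TorchForge's implementation: `ToyService` and `make_replica` are hypothetical, the replicas are local coroutines, and round-robin is used as one simple form of load balancing.

```python
import asyncio
from itertools import cycle


class ToyService:
    """Hypothetical service over replicas; not TorchForge's implementation."""

    def __init__(self, replicas):
        self.replicas = replicas
        self._next = cycle(range(len(replicas)))

    async def route(self, request):
        """Send the request to one replica, chosen round-robin."""
        return await self.replicas[next(self._next)](request)

    async def fanout(self, request):
        """Send the request to every replica concurrently."""
        return await asyncio.gather(*(r(request) for r in self.replicas))


def make_replica(idx):
    async def handle(request):
        await asyncio.sleep(0)  # yield control, as a remote call would
        return f"replica{idx}:{request}"
    return handle


async def main():
    service = ToyService([make_replica(i) for i in range(3)])
    routed = [await service.route(f"req{i}") for i in range(4)]
    broadcast = await service.fanout("sync")
    return routed, broadcast


routed, broadcast = asyncio.run(main())
```

The caller picks a pattern per call: `route` when any one replica can answer, `fanout` when every replica must see the request, such as a weight update.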

Case Study 2: Fault Tolerance in Large-Scale Pre-Training

Hardware and software failures are common and frequent at scale. In the Llama 3 training runs (Dubey et al., 2024), the team experienced 419 interruptions across a 54-day training window for a 16,000-GPU training job. This averages to about one failure every 3 hours. Projected to tens of thousands of GPUs, this implies a failure every hour or more often. Restarting the entire job for each failure significantly reduces effective training time.
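The arithmetic behind these figures is easy to check. The first calculation uses only the numbers quoted above. The projection relies on an assumption that is not stated in the source: that the failure rate grows linearly with GPU count.

```python
interruptions = 419
window_days = 54
gpus = 16_000

# 54 days * 24 hours / 419 interruptions, roughly 3.09 hours between failures.
hours_between_failures = window_days * 24 / interruptions


def projected_hours_between_failures(target_gpus: int) -> float:
    """Assumption: failure rate scales linearly with the number of GPUs."""
    return hours_between_failures * gpus / target_gpus


at_48k = projected_hours_between_failures(48_000)  # three times the GPUs
```

Under that linear assumption, tripling the job to 48,000 GPUs brings the interval down to about one hour, which is consistent with the projection in the text.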

One solution is to make the numerics of the model more tolerant of asynchrony, allowing various groups to run more independently. TorchFT, released by PyTorch, provides a way to withstand GPU failures and allow training to continue.

One strategy uses Hybrid Sharded Data Parallelism that combines fault-tolerant DDP with FSDP v2 and pipeline parallelism. On failure, the system uses torchcomms to gracefully handle errors and continue training on the next batch without downtime. This isolates failures to a single "replica group," allowing training to continue with a subset of the original job.

Monarch integrates with TorchFT, centralizing the control plane into a single-controller model. Monarch uses its fault detection primitives to detect failures and, upon detection, can spin up new logical replica groups (Monarch Meshes) to join training once initialized.

TorchFT's Lighthouse server acts as a Monarch actor. Monarch provides configurable recovery strategies based on failure type. On faults, the controller first attempts fast, process-level restarts within the existing allocation and only escalates to job reallocation when necessary, while TorchFT keeps healthy replicas stepping so progress continues during recovery.
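The escalation policy described here can be sketched as a small decision function. The failure categories, the retry limit, and the callback names are assumptions made for illustration; the source does not describe how Monarch's recovery strategies are configured.

```python
from enum import Enum


class Failure(Enum):
    PROCESS = "process"   # e.g. a segfault or a killed process
    MACHINE = "machine"   # e.g. a host eviction


def recover(failure, restart_process, reallocate_job, max_restarts=2):
    """Try cheap process restarts first; escalate to reallocation if needed.

    restart_process() returns True when the restart succeeded.
    Returns the list of actions taken, in order.
    """
    actions = []
    if failure is Failure.PROCESS:
        for _ in range(max_restarts):
            actions.append("restart_process")
            if restart_process():
                return actions
    # Either the machine is gone or restarts kept failing: get new resources.
    actions.append("reallocate_job")
    reallocate_job()
    return actions


process_actions = recover(Failure.PROCESS, lambda: True, lambda: None)
machine_actions = recover(Failure.MACHINE, lambda: False, lambda: None)
stubborn_actions = recover(Failure.PROCESS, lambda: False, lambda: None)
```

The expensive path runs only when the cheap one cannot work, which is the intuition behind avoiding unnecessary job rescheduling.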

The team ran this code on a 30-node (240 H100s) CoreWeave cluster, using the SLURM scheduler to train Qwen3-32B using TorchTitan and TorchFT. They injected 100 failures every 3 minutes across multiple failure modes: segfaults, process kills, NCCL abort, host eviction, and GIL deadlock. Monarch's configurable recovery strategies proved 60% faster by avoiding unnecessary job rescheduling (relative to full SLURM job restarts). The system achieved 90-second average recovery for process failures and 2.5-minute average recovery for machine failures.

Case Study 3: Interactive Debugging with Large GPU Clusters

The actor framework is not just limited to large-scale orchestration of complex jobs. It enables seamless debugging of complex, multi-GPU computations interactively. This capability represents a fundamental shift from traditional batch-oriented debugging to real-time, exploratory problem-solving that matches the scale and complexity of contemporary AI systems.

Traditional debugging workflows break down when confronted with the realities of modern ML systems. A model that trains perfectly on a single GPU may exhibit subtle race conditions, deadlocks, memory fragmentation, or communication bottlenecks when scaled across dozens of accelerators. Monarch provides an interactive developer experience. With a local Jupyter notebook, a user can drive a cluster as a Monarch mesh.

Key features include: persistent distributed compute that allows very fast iteration without submitting new jobs; a workspace sync API that quickly syncs local conda environment code to mesh nodes; and a mesh-native distributed debugger that lets you inspect and debug actors across the cluster.

Lightning AI Integration

Monarch integrates with Lightning AI notebooks to provide a powerful development environment. Users can launch a 256-GPU training job from a single Studio notebook, powered by TorchTitan. This demonstrates seamless scaling, persistent resources, and interactive debugging all in one notebook. In this example, the traditional SPMD TorchTitan workload is encapsulated as an Actor within Monarch, allowing users to pre-train large language models (such as Llama 3 and Llama 4) interactively.

Monarch enables you to reserve and maintain compute resources directly from your local Studio Notebook. Even if your notebook session is interrupted or disconnects, your cluster allocation remains active through Multi-Machine Training (MMT). This persistence allows you to iterate, experiment, and resume work seamlessly, making the notebook a reliable control center for distributed training tasks.

Using Monarch's Actor model, you can define and launch the Titan Trainer as an Actor on a process mesh, scaling your training jobs to hundreds of GPUs from within the Studio notebook. Monarch handles the orchestration, code and file sharing, and log collection, so you can reconfigure and relaunch jobs quickly. Logs and metrics are available directly in the notebook, as well as through external tools like Litlogger and WandB.

Monarch brings interactive debugging to distributed training. You can set Python breakpoints in your Actor code, inspect running processes, and attach to specific actors for real-time troubleshooting using the notebook interface. After training, you can modify configurations or define new actors and relaunch jobs on the same resources without waiting for new allocations.

Getting Started Today

Monarch is available now on GitHub, ready for you to explore, build with, and contribute to. The Monarch repository provides comprehensive documentation for deeper technical details, and interactive Jupyter notebooks demonstrate Monarch in action. For an end-to-end example of launching large-scale training directly from your notebook, check out the Lightning.ai integration. Whether you're orchestrating massive training runs, experimenting with reinforcement learning, or interactively debugging distributed systems, Monarch gives you the tools to do it all, simply and at scale.

This general-purpose API and its native integration with PyTorch will unlock the next generation of AI applications at scale and the more complex orchestration requirements that they present. By abstracting away the complexity of distributed systems while preserving the full power of multi-GPU computing, Monarch represents a significant step forward in making large-scale machine learning more accessible and manageable.

Read the original announcement on the PyTorch blog for additional details and code examples.

PyTorch Monarch: Single-Controller Distributed Programming for ML at Scale