AI agents can impress with accurate answers, but does accuracy guarantee reliable reasoning? In platforms like Microsoft Power Platform and Copilot Studio, it’s not enough for agents to simply get things right; they also need to demonstrate sound reasoning behind their actions. That’s where plan validation comes in, bridging surface-level correctness and genuine process integrity.

Why Justified Answers Matter

Most AI evaluations focus on whether a response is correct, a concept known as true belief in philosophy. However, the real benchmark is justified true belief: not just a correct outcome, but an explanation of how that outcome was reached.

In AI, this is measured through tool correctness: did the agent use the right tools and logical steps to arrive at its answer? Prioritizing tool correctness helps confirm that AI agents are not just guessing well, but are reasoning as intended.

Inside Copilot Studio Kit’s Plan Validation

The Copilot Studio Kit, an open-source toolkit, lets developers rigorously evaluate AI agents. Initially, it provided semantic testing, which assesses answer quality. But some tasks, like updating databases or triggering backend operations, don’t leave much to judge in the agent’s reply.

Image Credit: Microsoft Research

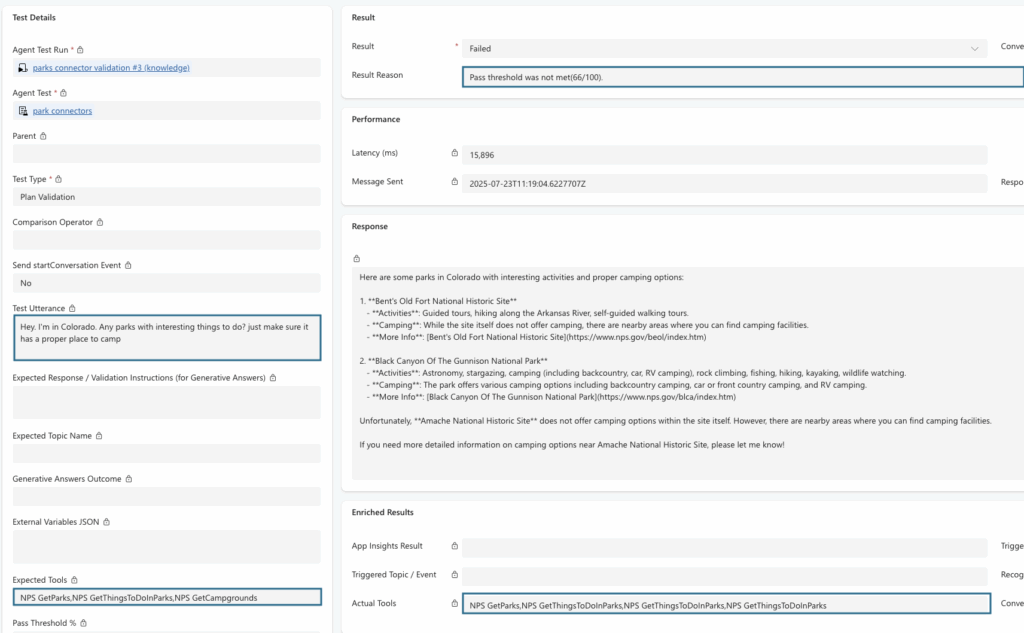

Plan Validation addresses this by checking if an agent used the expected tools in its reasoning process. Developers define trigger phrases, specify required tools, and set acceptable deviation thresholds. The validation process is deterministic since it evaluates whether the agent’s actions align with the plan without relying on subjective AI scoring.
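The check described above can be sketched in a few lines. This is an illustration only, not the Copilot Studio Kit's implementation: the `PlanTest` type, the `validate_plan` function, and the scoring rule (the fraction of expected tools that were actually called, compared against a pass threshold) are all assumptions made for the example.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class PlanTest:
    """Hypothetical test case: an utterance, the tools it should trigger,
    and the fraction of those tools that must appear for the test to pass."""
    utterance: str
    expected_tools: frozenset[str]
    pass_threshold: float  # between 0.0 and 1.0 (assumed scoring rule)


def validate_plan(test: PlanTest, tools_called: list[str]) -> tuple[bool, float, set[str]]:
    """Return (passed, score, missing_tools) using plain set comparison.

    No model is asked to grade anything, so the same inputs always
    produce the same result.
    """
    called = set(tools_called)
    matched = test.expected_tools & called
    missing = set(test.expected_tools - called)
    # An empty expectation is treated as trivially satisfied.
    score = len(matched) / len(test.expected_tools) if test.expected_tools else 1.0
    return score >= test.pass_threshold, score, missing


if __name__ == "__main__":
    # Hypothetical tool names, used only to exercise the function.
    test = PlanTest(
        utterance="example trigger phrase",
        expected_tools=frozenset({"ToolA", "ToolB"}),
        pass_threshold=1.0,
    )
    print(validate_plan(test, ["ToolA"]))
```

Because the comparison is a set operation rather than a model judgment, a failing run also says exactly which expected tool was never called.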

Real-World Example: The Difference Is in the Details

Imagine a user asks for Colorado parks with camping options. Two test runs produce identical-sounding answers, but a deeper look reveals critical differences:

- First scenario: The agent uses all specified tools (`GetParks`, `GetCampgrounds`, and `GetThingsToDoInParks`) to source live data.
- Second scenario: The agent skips `GetCampgrounds` and relies on generic training data to fill in camping information.

Both answers appear correct, but only the first is truly dependable. Generic data can introduce errors, such as referencing outdated or nonexistent campgrounds. Plan Validation uncovers these discrepancies, ensuring agents consistently follow the intended process and use the correct resources.
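To make the two scenarios concrete, here is a minimal sketch that compares the tools each run called against the expected set. The tool names come from the example; the `missing_tools` helper and the recorded traces are hypothetical, and the second trace assumes the agent still called the other two tools, which the example does not state.

```python
# Tools the test author expects the agent to call for this request.
EXPECTED = {"GetParks", "GetCampgrounds", "GetThingsToDoInParks"}


def missing_tools(expected: set[str], called: list[str]) -> list[str]:
    """Return the expected tools that never appear in the run, sorted by name."""
    return sorted(set(expected) - set(called))


# Hypothetical traces for the two runs described above.
first_run = ["GetParks", "GetCampgrounds", "GetThingsToDoInParks"]
second_run = ["GetParks", "GetThingsToDoInParks"]  # assumed: only GetCampgrounds skipped

for label, run in (("first", first_run), ("second", second_run)):
    gaps = missing_tools(EXPECTED, run)
    status = "PASS" if not gaps else "FAIL, missing " + ", ".join(gaps)
    print(f"{label} run: {status}")

# Prints:
# first run: PASS
# second run: FAIL, missing GetCampgrounds
```

The replies in both runs could read the same to a human reviewer; only the tool trace shows that the camping details in the second run were not sourced from `GetCampgrounds`.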

The Impact of Plan Validation

As AI agents increasingly handle complex workflows and automate critical processes, maintaining process integrity is essential. Plan Validation gives developers the tools to confirm not just what AI agents say, but how they operate. This method moves beyond content checking to emphasize reliable, transparent workflows, which are critical for building safer, more dependable AI systems.

Microsoft’s roadmap includes extending Plan Validation to autonomous agents and broader workflow scenarios, setting the stage for even greater transparency and trust in Power Platform’s AI-powered solutions.

Key Takeaway

Plan Validation in Copilot Studio Kit equips developers to ensure AI agents follow the right procedures, not just deliver the right answers. By emphasizing tool correctness, teams can catch hidden mistakes and build more trustworthy solutions on Microsoft Power Platform.

Plan Validation: Ensuring AI Agents in Power Platform Truly Reason Well