Local copilots powered by NVIDIA RTX AI PCs and workstations now make it possible to code with an AI assistant that responds instantly, keeps your code private, and runs entirely on your own device, ushering in a new era for experienced and aspiring developers alike.

AI Coding Assistants: Shaping Modern Development

Coding assistants are transforming the programming landscape. These tools offer features like real-time code generation, suggestions, instant explanations, debugging support, and more.

Professional developers use them to build faster, streamline repetitive tasks and explore creative solutions, while students and hobbyists benefit from on-demand code logic explanations and best practices integrated into their workflow.

Popular cloud-based coding assistants often come with drawbacks such as subscription costs, privacy concerns, and latency due to remote processing. In contrast, local AI assistants provide immediate responses and complete code privacy, but require robust hardware to deliver smooth performance.

NVIDIA RTX GPUs: The Engine Behind Local AI

NVIDIA’s GeForce RTX GPUs provide the acceleration local AI coding assistants need to run efficiently. Their Tensor Cores speed up the matrix math at the heart of large language models (LLMs), letting those models run in real time right on your PC or workstation. This makes instant, private, and cost-effective coding assistance available to anyone with an RTX-powered machine.

Top Tools for Local AI Coding

A range of accessible tools now allow developers to use AI coding assistants locally on RTX-powered machines. Leading solutions include:

- Continue.dev: An open-source VS Code extension that connects to local LLMs via Ollama and LM Studio, offering chat, autocomplete, and debugging inside your editor.

- Tabby: A secure, transparent assistant that supports multiple IDEs and provides code completion, Q&A, and inline chat, all optimized for RTX GPUs.

- Open Interpreter: An interface that combines LLMs with command-line access and agentic task execution, well suited to automation and DevOps tasks.

- LM Studio: A graphical interface to run and test local LLMs interactively before integrating them into your coding workflow.

- Ollama: A high-performance local inference engine that supports models such as Code Llama and StarCoder2 and integrates directly with tools like Continue.dev.
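Most of these tools talk to the model through a local HTTP server. As a minimal sketch, assuming Ollama is running on its default port 11434 and a Code Llama model has been pulled under the tag `codellama`, a single non-streaming request to Ollama's `/api/generate` endpoint looks like this. The helper function names are hypothetical.

```python
import json
import urllib.request

# Assumed default address of a locally running Ollama server.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> bytes:
    """Encode a non-streaming /api/generate request body as JSON bytes."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return json.dumps(payload).encode("utf-8")


def parse_generate_response(raw: bytes) -> str:
    """Extract the generated text from a non-streaming /api/generate reply."""
    return json.loads(raw)["response"]


def generate(model: str, prompt: str, url: str = OLLAMA_URL,
             timeout: float = 120.0) -> str:
    """Send one prompt to the local model and return its completion."""
    req = urllib.request.Request(
        url,
        data=build_generate_request(model, prompt),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return parse_generate_response(resp.read())


if __name__ == "__main__":
    # Assumes the model was pulled beforehand, e.g. with `ollama pull codellama`.
    print(generate("codellama", "Write a Python function that reverses a string."))
```

Because the server listens on localhost, the prompt and any code in it stay on the machine. Setting `stream` to true would instead return the answer as a sequence of newline-delimited JSON objects, which lets editor integrations display tokens as they arrive.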

These solutions build on frameworks such as Ollama and llama.cpp, which are now specifically optimized for GeForce RTX and NVIDIA RTX PRO GPUs to deliver fast local inference.

Real-World Performance and Learning Benefits

On a GeForce RTX-powered PC, developers can pair Continue.dev with a model such as Gemma 12B to explain existing code, explore search algorithms, and debug issues, all entirely on device and without sending code to external servers. Acting as a virtual teaching assistant, it provides plain-language guidance, context-aware explanations, inline comments, and suggested code improvements tailored to the user’s project.
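Under the hood, a chat-based code explanation amounts to sending the code and a question to the local model. The sketch below assumes the model is served by Ollama (one of the backends Continue.dev can connect to) via its `/api/chat` endpoint, and that Gemma weights are available under the tag `gemma3:12b`. The tag, the system prompt, and the helper names are illustrative assumptions, not Continue.dev's internals.

```python
from __future__ import annotations

import json
import urllib.request

# Assumed default endpoint of a local Ollama server's chat API.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"

# Hypothetical tutor-style instructions; adjust to taste.
SYSTEM_PROMPT = (
    "You are a patient programming tutor. Explain code in plain language, "
    "point out likely bugs, and suggest concrete improvements."
)


def build_explain_messages(code: str, question: str) -> list[dict[str, str]]:
    """Pack a question and the code it refers to into a chat history."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": f"{question}\n\nCode:\n{code}"},
    ]


def explain_code(code: str, question: str, model: str = "gemma3:12b",
                 url: str = OLLAMA_CHAT_URL) -> str:
    """Ask the local model about `code`; with the default URL it stays on localhost."""
    body = json.dumps({
        "model": model,
        "messages": build_explain_messages(code, question),
        "stream": False,
    }).encode("utf-8")
    req = urllib.request.Request(url, data=body,
                                 headers={"Content-Type": "application/json"},
                                 method="POST")
    with urllib.request.urlopen(req, timeout=300) as resp:
        # Non-streaming /api/chat replies carry the answer in message.content.
        return json.loads(resp.read())["message"]["content"]


if __name__ == "__main__":
    snippet = '''def search(xs, target):
    lo, hi = 0, len(xs)
    while lo < hi:
        mid = (lo + hi) // 2
        if xs[mid] < target:
            lo = mid + 1
        else:
            hi = mid
    return lo'''
    print(explain_code(snippet, "Explain this search algorithm and its edge cases."))
```

Keeping the system prompt separate from the user's code makes it easy to switch the assistant's tone (tutor, reviewer, debugger) without touching how the project context is packaged.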

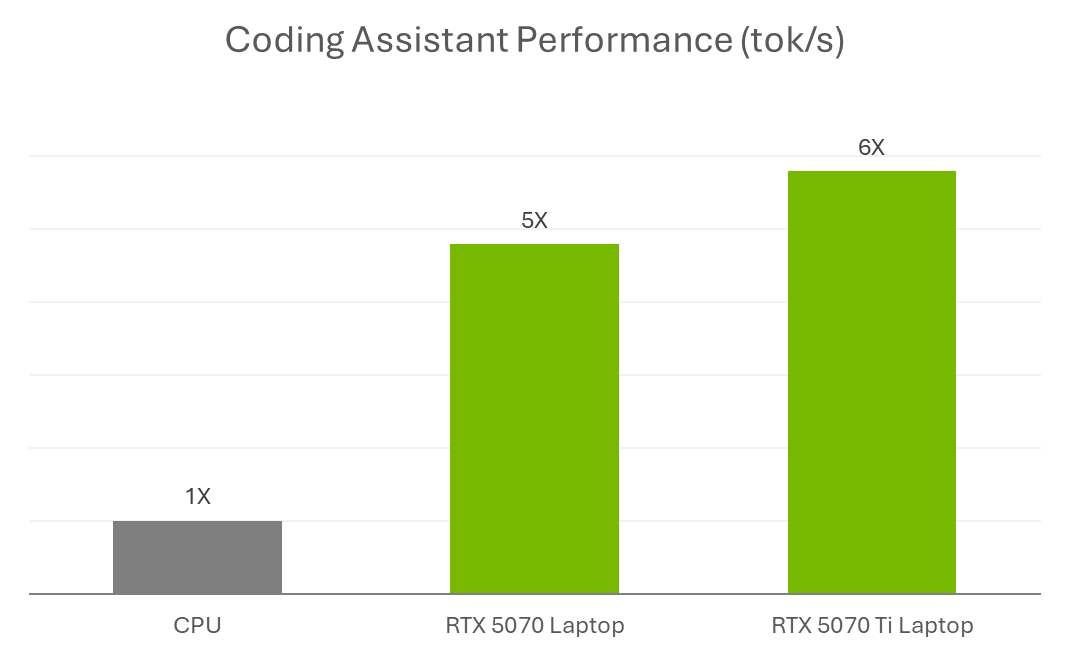

NVIDIA’s benchmark results highlight the speed advantage: running the Meta Llama 3.1 8B model on RTX laptops delivers up to 5-6x higher throughput than running it on the CPU. That headroom keeps workflows fluid even on complex projects, so the assistant can keep pace with your development process.
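To check throughput on your own machine rather than relying on published figures, you can read the timing fields Ollama returns with a non-streaming response: `eval_count` (tokens generated) and `eval_duration` (nanoseconds). The sketch below converts them to tokens per second and compares two runs, for example a GPU run and a CPU-only run. The helper names and the sample numbers are hypothetical and are not NVIDIA's benchmark data.

```python
def tokens_per_second(eval_count: int, eval_duration_ns: int) -> float:
    """Decode throughput from a token count and a duration in nanoseconds."""
    if eval_duration_ns <= 0:
        raise ValueError("eval_duration must be positive")
    return eval_count / (eval_duration_ns / 1e9)


def throughput_from_response(resp: dict) -> float:
    """Read Ollama's eval_count / eval_duration fields from a parsed reply."""
    return tokens_per_second(resp["eval_count"], resp["eval_duration"])


def speedup(fast_tps: float, slow_tps: float) -> float:
    """How many times higher the first throughput is than the second."""
    return fast_tps / slow_tps


if __name__ == "__main__":
    # Hypothetical measurements for illustration only, not real benchmark results.
    gpu_run = {"eval_count": 500, "eval_duration": 5_000_000_000}   # 500 tokens in 5 s
    cpu_run = {"eval_count": 500, "eval_duration": 28_000_000_000}  # 500 tokens in 28 s
    gpu_tps = throughput_from_response(gpu_run)
    cpu_tps = throughput_from_response(cpu_run)
    print(f"GPU: {gpu_tps:.1f} tok/s, CPU: {cpu_tps:.1f} tok/s, "
          f"speedup: {speedup(gpu_tps, cpu_tps):.1f}x")
```

Measuring only the generation phase (`eval_*`) isolates decode speed, which is what you feel while an assistant is typing out an answer; prompt processing and model loading are reported in separate fields.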

Image: NVIDIA

Getting Started and Engaging with the Community

NVIDIA invites both newcomers and seasoned developers to explore the potential of local AI on RTX-powered devices. The latest GeForce RTX 50 Series laptops feature advanced AI acceleration and support leading creative and educational applications, making them ideal for students and professionals alike.

To inspire innovation, NVIDIA is hosting the “Plug and Play: Project G-Assist Plug-In Hackathon.” This event encourages participants to develop plug-ins for Project G-Assist, an experimental AI assistant, with opportunities to win prizes and connect with a growing community of AI enthusiasts on NVIDIA’s Discord.

Takeaway: Empowering Developers with AI-Enhanced Coding

By harnessing RTX GPUs, local AI coding assistants are democratizing advanced development tools and delivering real-time, private, subscription-free support for everyone. Whether you’re developing production software, learning new concepts, or building your first app, RTX AI PCs enable you to code, experiment, and create at the speed of thought. Now is the perfect time to experience the future of AI-powered productivity.

NVIDIA RTX AI PCs Are Revolutionizing Local Coding Assistants