Mistral AI has introduced Mistral 3, a family of open-weight, multimodal, and multilingual models aimed at developers and enterprises. Every model in the family is released under the Apache 2.0 license, which permits commercial use, modification, and redistribution, so teams can adopt and adapt the models without restrictive terms.

Mistral Large 3: Flagship Performance and Flexibility

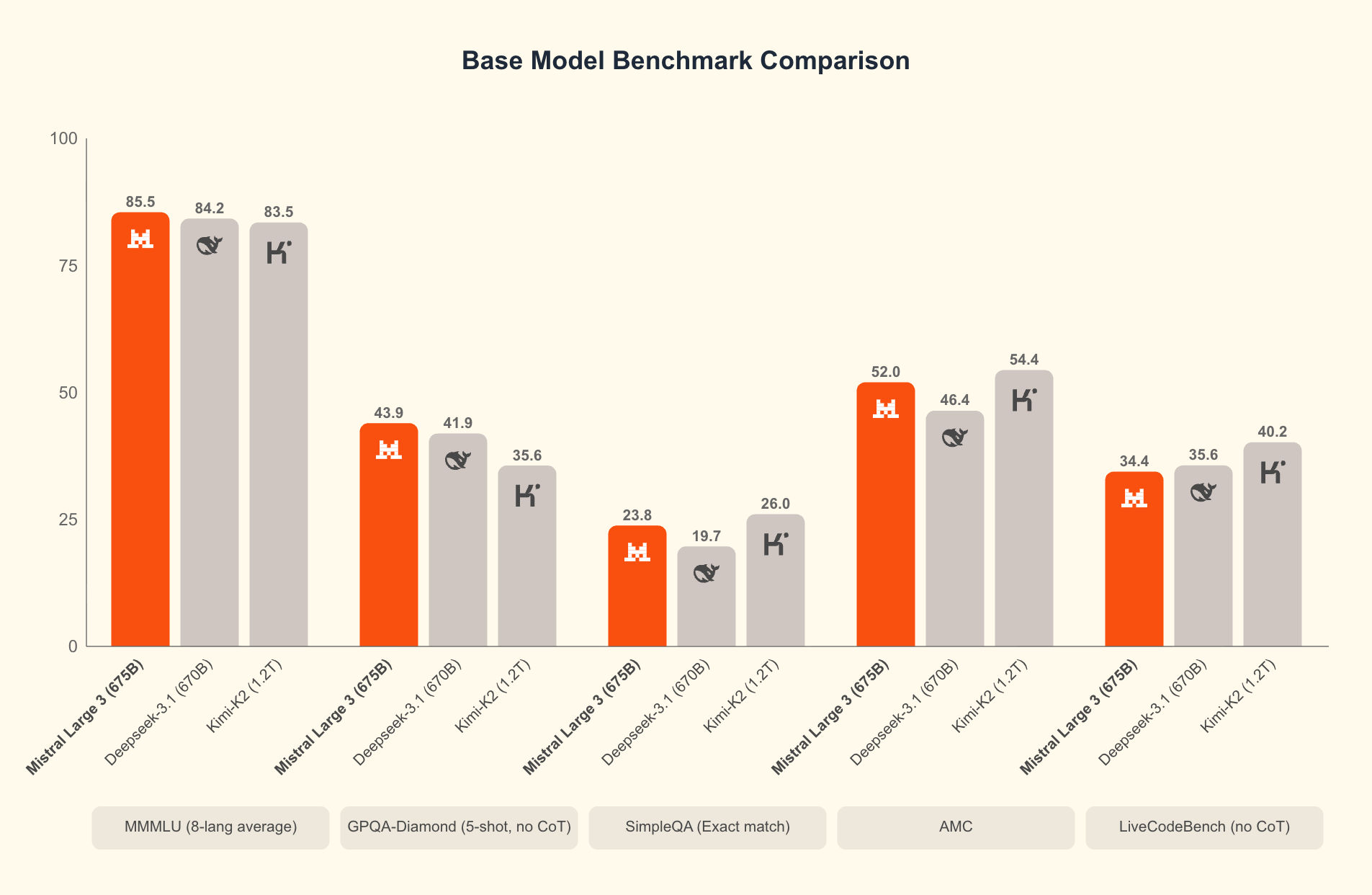

Mistral Large 3 is the flagship of this release. It uses a sparse mixture-of-experts (MoE) architecture with 675B total parameters, of which 41B are active at a time. Trained on 3,000 NVIDIA H200 GPUs, it is Mistral's most advanced open-weight model to date. The model is strong at general prompting, multilingual understanding, and image processing, and it ranks among the top open-weight models on key industry leaderboards.
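To make the "41B active and 675B total" figure concrete, here is a minimal arithmetic sketch. It only computes the share of parameters that is active, using the two numbers quoted above; the function name is hypothetical and nothing here reflects how Mistral routes tokens internally.

```python
# Parameter counts quoted in the article for Mistral Large 3.
ACTIVE_PARAMS = 41e9
TOTAL_PARAMS = 675e9


def active_fraction(active: float, total: float) -> float:
    """Return the share of a sparse MoE model's parameters that is active."""
    if total <= 0 or active < 0 or active > total:
        raise ValueError("expected 0 <= active <= total and total > 0")
    return active / total


if __name__ == "__main__":
    frac = active_fraction(ACTIVE_PARAMS, TOTAL_PARAMS)
    # Roughly 6% of the weights take part in a given forward pass.
    print(f"Active share of parameters: {frac:.1%}")
```

The practical reading is that per-token compute scales with the active parameters, while memory for the weights still scales with the total.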

Enterprises and developers can choose between base and instruction-tuned versions, with a reasoning-optimized variant coming soon. This flexibility enables a wide range of applications, from creative generation to complex analysis.

Image and results by Mistral.

Key Advantages of Mistral 3

- Frontier performance with open access: Achieve best-in-class results while maintaining transparency and control.

- Multimodal and multilingual capabilities: Handle text, images, and complex logic in over 40 languages.

- Scalable architecture: Options range from 3B to 675B parameters, covering deployments from edge devices to data-center clusters.

- Versatile and agentic: Ideal for code, creative tasks, document analysis, and integration with external tools.

Optimized Collaboration and Hardware Integration

To maximize accessibility and performance, Mistral AI collaborated with NVIDIA, vLLM, and Red Hat. The result is highly optimized deployment for modern hardware, including efficient inference on NVIDIA Blackwell systems and mainstream GPUs through vLLM.

- NVIDIA integration: inference support in TensorRT-LLM and SGLang enables efficient low-precision execution across platforms.

- MoE optimizations: Blackwell-optimized attention, tuned kernels, and speculative decoding support high-throughput, long-context workloads.

- Edge readiness: optimized deployment on DGX Spark, RTX PCs, laptops, and Jetson devices makes the models usable beyond the data center.
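As a rough illustration of the vLLM path mentioned above, the sketch below builds a request for vLLM's OpenAI-compatible chat endpoint using only the Python standard library. It assumes a vLLM server is already running locally on its default port (8000); the model identifier is a placeholder, not a confirmed checkpoint name, and the helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: a vLLM server is running locally on its default port.
BASE_URL = "http://localhost:8000/v1"
# Placeholder: replace with the checkpoint name your server was started with.
MODEL_ID = "your-org/your-mistral-3-checkpoint"


def build_chat_request(prompt: str, model: str = MODEL_ID, max_tokens: int = 256) -> dict:
    """Build an OpenAI-style chat completion payload."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": 0.2,
    }


def send_chat_request(payload: dict, base_url: str = BASE_URL) -> str:
    """POST the payload to the server and return the first reply's text."""
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    payload = build_chat_request("Summarize the Apache 2.0 license in one sentence.")
    print(json.dumps(payload, indent=2))
    # Uncomment once a server is actually running:
    # print(send_chat_request(payload))
```

Because the endpoint follows the OpenAI chat format, the same payload should also work with other OpenAI-compatible clients pointed at the local base URL.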

Ministral 3: Excellence at the Edge

For edge and local environments, the Ministral 3 models (3B, 8B, 14B) deliver strong cost-to-performance ratios. Each size is available in base, instruct, and reasoning variants, all with image understanding and multilingual capabilities. These compact models suit real-world applications where efficiency and resource constraints matter most.

- The 14B reasoning variant achieves state-of-the-art accuracy on benchmarks like AIME '25.

- Token efficiency and adaptability make them ideal for edge deployments and resource-constrained settings.
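For a sense of what "resource-constrained" means in practice, the following back-of-the-envelope sketch estimates the memory needed just to hold each Ministral 3 model's weights at a few common precisions. The parameter counts are the nominal sizes from the model names (actual checkpoints may differ somewhat), the precision table is an illustrative assumption, and the estimate ignores the KV cache, activations, and runtime overhead.

```python
# Nominal sizes taken from the model names; real checkpoints may differ.
MODEL_PARAMS = {
    "Ministral 3 3B": 3e9,
    "Ministral 3 8B": 8e9,
    "Ministral 3 14B": 14e9,
}

# Bytes per parameter for a few common storage precisions (illustrative).
BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Estimate weight-only memory in GB (1 GB = 1e9 bytes)."""
    if num_params < 0 or bytes_per_param <= 0:
        raise ValueError("expected num_params >= 0 and bytes_per_param > 0")
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    for name, params in MODEL_PARAMS.items():
        row = ", ".join(
            f"{precision}: {weight_memory_gb(params, size):.1f} GB"
            for precision, size in BYTES_PER_PARAM.items()
        )
        print(f"{name} -> {row}")
```

Treat these figures as a lower bound when sizing hardware: long contexts and batching add memory on top of the weights.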

Accessible Deployment and Tailored Solutions

Mistral 3 models are available via major platforms such as Mistral AI Studio, Amazon Bedrock, Azure Foundry, Hugging Face, IBM WatsonX, and OpenRouter. This broad accessibility ensures organizations can quickly integrate advanced AI into their workflows.
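For the hosted route, a request to Mistral's chat completions API might look like the sketch below, which combines a non-English question with an image to exercise the multilingual and multimodal capabilities. The model alias is an assumption (check the platform's model list for the name that maps to the model you want), the image URL is a placeholder, and the helper names are hypothetical. The code only builds the request so it can be inspected before sending.

```python
import json
import os

API_URL = "https://api.mistral.ai/v1/chat/completions"
# Assumption: the alias to use for the model you want; verify on the platform.
MODEL_ALIAS = "mistral-large-latest"


def build_image_question(question: str, image_url: str, model: str = MODEL_ALIAS) -> dict:
    """Build a chat payload whose user message mixes text and an image."""
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": image_url},
                ],
            }
        ],
    }


def build_headers(api_key: str) -> dict:
    """Build the bearer-token headers for the request."""
    if not api_key:
        raise ValueError("an API key is required")
    return {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
        "Accept": "application/json",
    }


if __name__ == "__main__":
    body = build_image_question(
        "Qu'y a-t-il sur cette image ?",   # French: "What is in this image?"
        "https://example.com/photo.png",   # placeholder image URL
    )
    headers = build_headers(os.environ.get("MISTRAL_API_KEY", "placeholder-key"))
    print("POST", API_URL)
    print(json.dumps(body, indent=2, ensure_ascii=False))
```

The printed body can then be sent with any HTTP client, or the same message structure can be passed to an official SDK.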

For specialized needs, Mistral AI offers custom model training services, empowering enterprises to fine-tune models for unique tasks, datasets, and business goals. This approach supports secure, scalable, and impactful AI deployments tailored to any organization.

Engagement and Community Growth

Mistral AI encourages developers, researchers, and businesses to explore comprehensive documentation, experiment with model checkpoints, and participate in the community. Platforms like Hugging Face and the Mistral AI console offer easy onboarding, while support channels enable feedback and further customization.

Final Thoughts

Mistral 3 represents a significant leap forward in open, flexible, and scalable AI technology. By prioritizing openness, efficiency, and adaptability, Mistral AI empowers users everywhere to innovate and deploy advanced solutions that meet real-world needs. This release not only raises the bar for open AI but also fosters an environment of collective progress and creativity.

Explore the model documentation:

Technical documentation for customers is available on the AI Governance Hub.

Start building with Ministral 3 and Large 3 on Hugging Face, or deploy via Mistral AI's platform for instant API access and API pricing.

Ready to Put Mistral 3 to Work for Your Business?

Thanks for reading! The Mistral 3 family represents an exciting step forward for open AI, and I'm genuinely thrilled to see this level of capability become accessible to more organizations. But having access to powerful models is just the starting point. The real value comes when you integrate them into intelligent workflows that solve specific business problems. With over 20 years of experience helping startups and enterprise clients like Samsung and Google build custom AI solutions, I specialize in turning cutting-edge technology into practical, revenue-generating applications.

Whether you're looking to deploy Mistral 3 for document analysis, build multilingual customer support automation, or create agentic workflows that leverage these multimodal capabilities, I can help you design and implement a solution that fits your exact needs. Let's discuss how my software development and automation expertise can help you move from experimentation to production-ready AI.

Curious how Mistral 3 could transform your operations? I'd love to schedule a free consultation to explore the possibilities together.

Mistral 3: Redefining Open AI with Multimodal and Multilingual Power