Organizations today face growing pressure to deliver applications that go beyond basic functionality. Modern users expect adaptive, intelligent, and conversational experiences. Microsoft Fabric, when combined with GraphQL and Model Context Protocol (MCP), offers a robust path for turning static data into dynamic, AI-powered solutions. Together, these technologies empower developers to create agentic applications that leverage real-time data and natural language interactions.

What is Microsoft Fabric?

Microsoft Fabric is a comprehensive, all-in-one analytics platform designed for enterprises and data professionals. It unifies a wide array of data tools and services into a single, integrated environment. This platform streamlines everything from data movement and processing to real-time analytics and business intelligence.

By combining new and existing components from Power BI, Azure Synapse, and Azure Data Factory, Fabric provides a seamless Software as a Service (SaaS) experience. At its core is OneLake, a unified, multi-cloud data lake that eliminates data silos and simplifies data management.

With the integration of AI-powered tools like Copilot, Microsoft Fabric empowers users to transform large and complex datasets into actionable insights, fostering a data-driven culture within organizations.

Laying the Groundwork: Data Foundations for Agentic AI

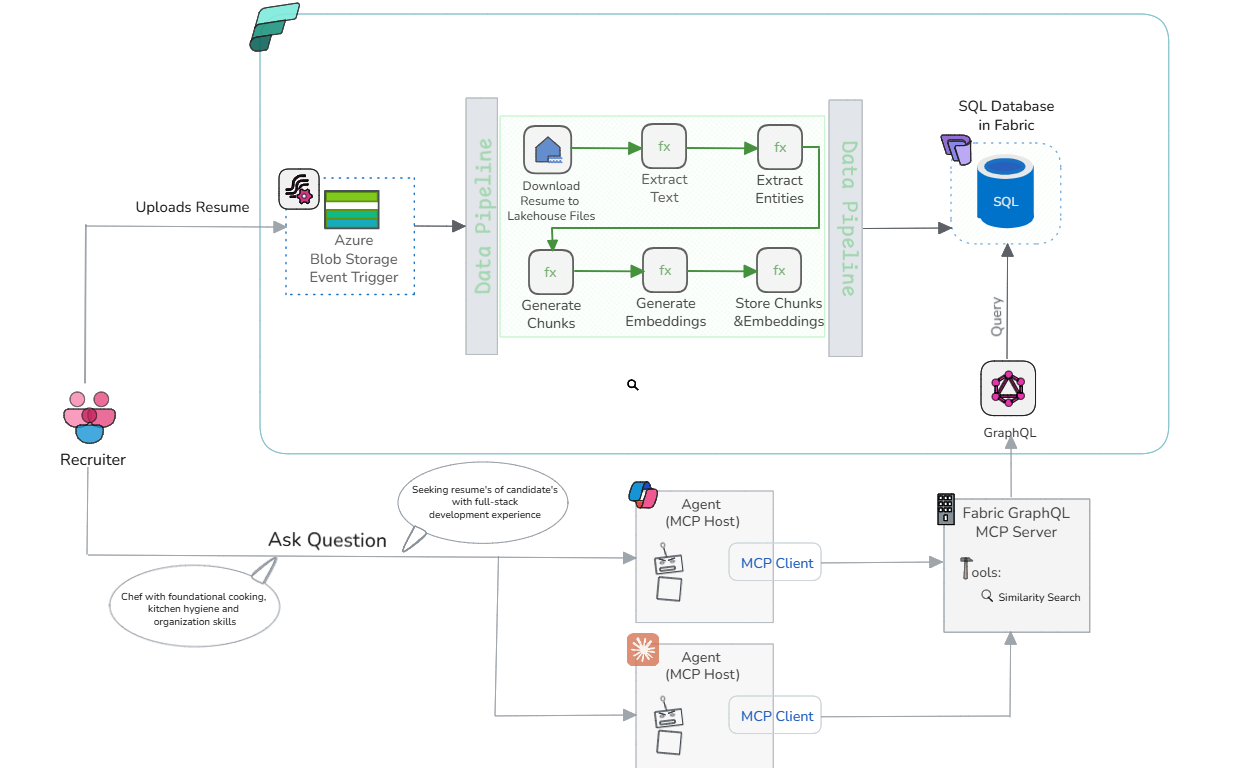

Consider a recruiting agency scenario, which illustrates how unstructured resume data is transformed, step by step, into AI-ready assets.

The journey starts when a recruiter uploads a resume to Azure Blob Storage, which triggers an automated pipeline within Microsoft Fabric. The pipeline turns each resume into structured, searchable data, making it easier for recruiters and AI agents to search and interact with candidate information.

Image Credit: Microsoft

This process includes:

- Ingestion and Transformation: Resumes are ingested into Fabric Lakehouse, where their text is extracted and divided into manageable chunks.

- Entity Extraction: Azure AI identifies critical candidate details like name, email, phone, and location.

- Embedding Generation: Azure OpenAI converts these text segments into vector embeddings, enabling deep semantic search capabilities.

- Data Storage: Extracted entities and embeddings are stored securely in a Fabric SQL Database, organizing data for AI-driven workflows.
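The chunking and semantic search ideas above can be sketched in a few lines of Python. This is a minimal illustration, not the pipeline's actual code: the word-based chunking strategy, the function names, and the toy vectors are all assumptions. In the scenario described, embeddings would come from Azure OpenAI and the similarity search would run in the database.

```python
import math


def chunk_text(text: str, max_words: int = 50, overlap: int = 10) -> list[str]:
    """Split text into word-based chunks that share `overlap` words with their neighbor.

    Assumption: the source does not say how resumes are chunked; word windows
    are used here only because they are easy to follow.
    """
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reached the end of the text
    return chunks


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_chunks(query_vec: list[float], chunk_vecs: list[list[float]]) -> list[int]:
    """Return chunk indices ordered from most to least similar to the query."""
    scores = [cosine_similarity(query_vec, vec) for vec in chunk_vecs]
    return sorted(range(len(chunk_vecs)), key=lambda i: scores[i], reverse=True)
```

The overlap keeps a sentence that straddles a chunk boundary visible in both neighboring chunks, which tends to help retrieval at the cost of some duplicated storage.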

Constructing the Intelligent Data Pipeline

To replicate this setup, organizations need to configure their own Azure resources. The required components include storage accounts, AI services, and a Fabric workspace. The implementation steps are:

- Establishing a workspace, Lakehouse, and SQL database within Fabric.

- Importing and setting up data processing notebooks and pipelines.

- Configuring triggers for immediate file processing as resumes arrive in storage.

- Automating text extraction, entity recognition, chunking, embedding, and inserting results into the database.

- Implementing error handling to provide alerts and safely halt pipelines when issues occur.
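The "alert and halt safely" behavior in the last step can be sketched as a small stage runner. This is only an illustration of the control flow in plain Python. Fabric pipelines are configured in the Fabric workspace rather than written like this, and `run_pipeline`, `PipelineResult`, and the stage names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Optional

# A stage is a (name, function) pair; each function receives the previous stage's output.
Stage = tuple[str, Callable[[Any], Any]]


@dataclass
class PipelineResult:
    succeeded: bool
    output: Any = None
    failed_stage: Optional[str] = None
    alerts: list[str] = field(default_factory=list)


def run_pipeline(payload: Any, stages: list[Stage],
                 alert: Callable[[str], None] = print) -> PipelineResult:
    """Run stages in order; on the first failure, send an alert and stop."""
    result = PipelineResult(succeeded=True, output=payload)
    for name, stage in stages:
        try:
            result.output = stage(result.output)
        except Exception as exc:
            message = f"Stage '{name}' failed: {exc}"
            alert(message)
            result.alerts.append(message)
            result.succeeded = False
            result.failed_stage = name
            break  # later stages never run; output keeps the last good value
    return result
```

For example, `run_pipeline(" Jane Doe ", [("extract", str.strip), ("chunk", str.split)])` runs both stages in order, while a stage that raises stops the run and records which stage failed.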

This modular pipeline design leverages templates for rapid deployment and includes troubleshooting strategies to help users overcome common setup obstacles.

Making Data Accessible: GraphQL APIs for Natural Language Search

After processing, the next step is to open up the data through a GraphQL API. This approach offers several advantages:

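- Flexible Querying: Both humans and AI agents can search for candidates by skill, experience, or location. GraphQL queries themselves are structured, so an AI agent translates a natural language request into a query that asks for exactly the fields it needs.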

- Automated Schema Generation: The API schema is generated automatically, including from the stored procedures that run similarity search over vector embeddings.

- Rapid Integration: Developers can expose their SQL database as a GraphQL endpoint within minutes for secure, targeted data access.

Scripts and configuration guidance help ensure that only the intended data is exposed to queries, which supports security and compliance.
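To make the API call concrete, here is a hedged sketch of how a client might build a GraphQL request using only the Python standard library. GraphQL over HTTP is commonly sent as a POST with a JSON body containing `query` and `variables`. Everything specific to the data is an assumption: the `findCandidates` field, its arguments, the returned field names, and the bearer-token header are hypothetical, and the real names come from the schema generated for your database.

```python
import json
import urllib.request

# Hypothetical operation: real field and argument names come from the generated schema.
SEARCH_QUERY = """
query FindCandidates($text: String!, $top: Int!) {
  findCandidates(searchText: $text, top: $top) {
    candidateName
    location
    score
  }
}
"""


def build_graphql_request(endpoint: str, token: str, text: str,
                          top: int = 5) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying a GraphQL query and variables."""
    body = json.dumps({
        "query": SEARCH_QUERY,
        "variables": {"text": text, "top": top},
    }).encode("utf-8")
    return urllib.request.Request(
        endpoint,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",  # assumed auth scheme
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would send it. The sketch stops short of that because the endpoint and token depend on your own workspace.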

Enabling Conversational AI with MCP

MCP takes data access to the next level by enabling interactive, multi-turn dialogues between users and AI agents. Key features include:

- Conversational Context: An MCP server exposes the GraphQL endpoint as a tool that agents can call, allowing them to clarify requirements and reason about the data in real time.

- Multi-Agent Compatibility: Popular agent hosts like Claude and GitHub Copilot in VS Code can access the data, letting users interact through natural language prompts.

- Realistic Prompts: Examples provided demonstrate how recruiters can find specialized candidates, filter by criteria, and gain insights in seconds.

This approach empowers end-users to extract value from their data quickly, supporting smarter, faster decision-making.
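The idea of exposing a search endpoint as a tool can be sketched as follows. MCP messages are JSON-RPC 2.0, and agents discover and invoke tools through the `tools/list` and `tools/call` methods. The handler below is a simplified illustration rather than a complete MCP server: it skips initialization and transport, the `search_candidates` tool name and its schema are hypothetical, and the `search` callable stands in for whatever code would query the GraphQL endpoint.

```python
import json
from typing import Callable

# Hypothetical tool definition; a real server would describe its own tools.
TOOLS = {
    "search_candidates": {
        "description": "Search candidate resumes by free-text criteria.",
        "inputSchema": {
            "type": "object",
            "properties": {"text": {"type": "string"}},
            "required": ["text"],
        },
    }
}


def _error(request_id, code: int, message: str) -> dict:
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}


def handle_request(request: dict, search: Callable[[str], list]) -> dict:
    """Answer a tools/list or tools/call JSON-RPC request; reject anything else."""
    request_id = request.get("id")
    method = request.get("method")
    if method == "tools/list":
        result = {"tools": [{"name": name, **spec} for name, spec in TOOLS.items()]}
    elif method == "tools/call":
        params = request.get("params", {})
        if params.get("name") not in TOOLS:
            return _error(request_id, -32602, "Unknown tool")
        rows = search(params.get("arguments", {}).get("text", ""))
        # Tool output is returned to the agent as text content.
        result = {"content": [{"type": "text", "text": json.dumps(rows)}], "isError": False}
    else:
        return _error(request_id, -32601, "Method not found")
    return {"jsonrpc": "2.0", "id": request_id, "result": result}
```

Keeping the search function injectable means the same handler can be exercised with a stub during development and pointed at the real endpoint later.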

Best Practices and Troubleshooting Tips

The blog emphasizes the importance of managing Python dependencies, setting up stored procedures correctly, and configuring pipeline parameters. It provides practical advice and step-by-step solutions for common issues, ensuring a smooth deployment and reliable operation.

Accelerating AI-Readiness for Modern Applications

By combining Microsoft Fabric with GraphQL and MCP, organizations can move beyond traditional data management. Automated ingestion, semantic search, and conversational AI agents create a new class of intelligent, responsive applications. Readers are encouraged to experiment with these tools, contribute feedback, and help define the future of agentic AI solutions.
