Google is bringing you an AI collaborator that not only writes code but also critiques its own output before you see it. Google Developers has unveiled a critic capability for Jules, its AI coding agent.

This advancement is designed to boost the reliability, security, and efficiency of AI-generated code, redefining what developers can expect from automated tools.

Critic-Augmented Generation: A Smarter Workflow

At the core of Jules is critic-augmented generation. Each time Jules writes code, its critic component launches an adversarial review challenging every edit before it’s finalized. Think of this as a seasoned peer reviewer who is deeply versed in coding best practices, ensuring no risky patterns or subtle errors slip through.

The critic currently reviews each candidate change in a single pass; future updates aim to make the process more interactive and iterative. Even now, this built-in scrutiny means the code you receive has already passed an internal review before delivery.

What Sets the Critic Apart

Traditional linters and test suites check code against predefined rules and assertions. Jules’ critic goes further, evaluating code with an understanding of context and intent. Its focus isn’t just on finding errors, but also on identifying incomplete, illogical, or inefficient solutions that rule-based tools might miss.

- Logic errors: Code that passes its tests but fails on edge cases.

- Incomplete updates: Changes such as modifying a function’s signature without updating every call site.

- Inefficiency: Solutions that work but are unnecessarily complex or slow.
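To make the first category concrete, here is a minimal Python sketch of code that passes its test yet fails on an edge case. The function names `average` and `average_safe` are invented for illustration and are not taken from Jules or its critic; the point is only the kind of gap a context-aware review might flag.

```python
def average(values):
    """Naive version: passes the happy-path test but crashes on an empty list."""
    return sum(values) / len(values)


def average_safe(values, default=0.0):
    """Revised version: handles the empty-input edge case explicitly."""
    if not values:
        return default
    return sum(values) / len(values)


# The happy-path test passes for both versions.
assert average([2, 4, 6]) == 4
assert average_safe([2, 4, 6]) == 4

# Only the naive version fails on the edge case a test suite may never exercise.
try:
    average([])
    naive_survived = True
except ZeroDivisionError:
    naive_survived = False
```

A linter sees nothing wrong with `average`, and a test suite that only covers non-empty input stays green. Spotting the gap requires reasoning about what the function is meant to do.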

This cycle of generation, critique, and revision can repeat until the critic is satisfied, resulting in code that is more robust and production-ready.

Judgment Over Rules

Unlike tools that check code against fixed standards, the critic uses reference-free evaluation, meaning it needs no gold-standard solution or expected output to compare against. It judges code based on intent and resilience, drawing on recent advances in LLM-as-a-judge research. This means Jules can catch nuanced issues and provide substantive feedback rather than a bare pass/fail result, helping developers start with higher-quality code.

By weaving this judgment into the code generation process, Google turns review into a dynamic, iterative feedback loop, catching more subtle issues early.
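One way to picture reference-free evaluation is a judge prompt that contains only the task and the proposed patch, with no reference solution. The sketch below is an assumption for illustration: the template wording, the `build_critic_prompt` and `parse_verdict` helpers, and the JSON reply format are hypothetical and do not describe Jules’ actual prompts or interfaces.

```python
import json

# Hypothetical judge prompt: note that it carries no reference solution.
CRITIC_TEMPLATE = """You are reviewing a code change. No reference solution is provided.
Task description:
{task}

Proposed patch:
{patch}

Judge whether the patch fulfils the task's intent and holds up on edge cases.
Reply with JSON: {{"verdict": "approve" or "revise", "issues": [list of strings]}}"""


def build_critic_prompt(task: str, patch: str) -> str:
    """Fill the template with the task and the candidate patch."""
    return CRITIC_TEMPLATE.format(task=task, patch=patch)


def parse_verdict(raw_reply: str) -> dict:
    """Parse the judge's JSON reply and reject unknown verdict values."""
    data = json.loads(raw_reply)
    if data.get("verdict") not in {"approve", "revise"}:
        raise ValueError(f"unexpected verdict: {data.get('verdict')!r}")
    return {"verdict": data["verdict"], "issues": list(data.get("issues", []))}
```

The `issues` list is what separates this from a pass/fail check: the generator receives concrete points to address rather than a single bit.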

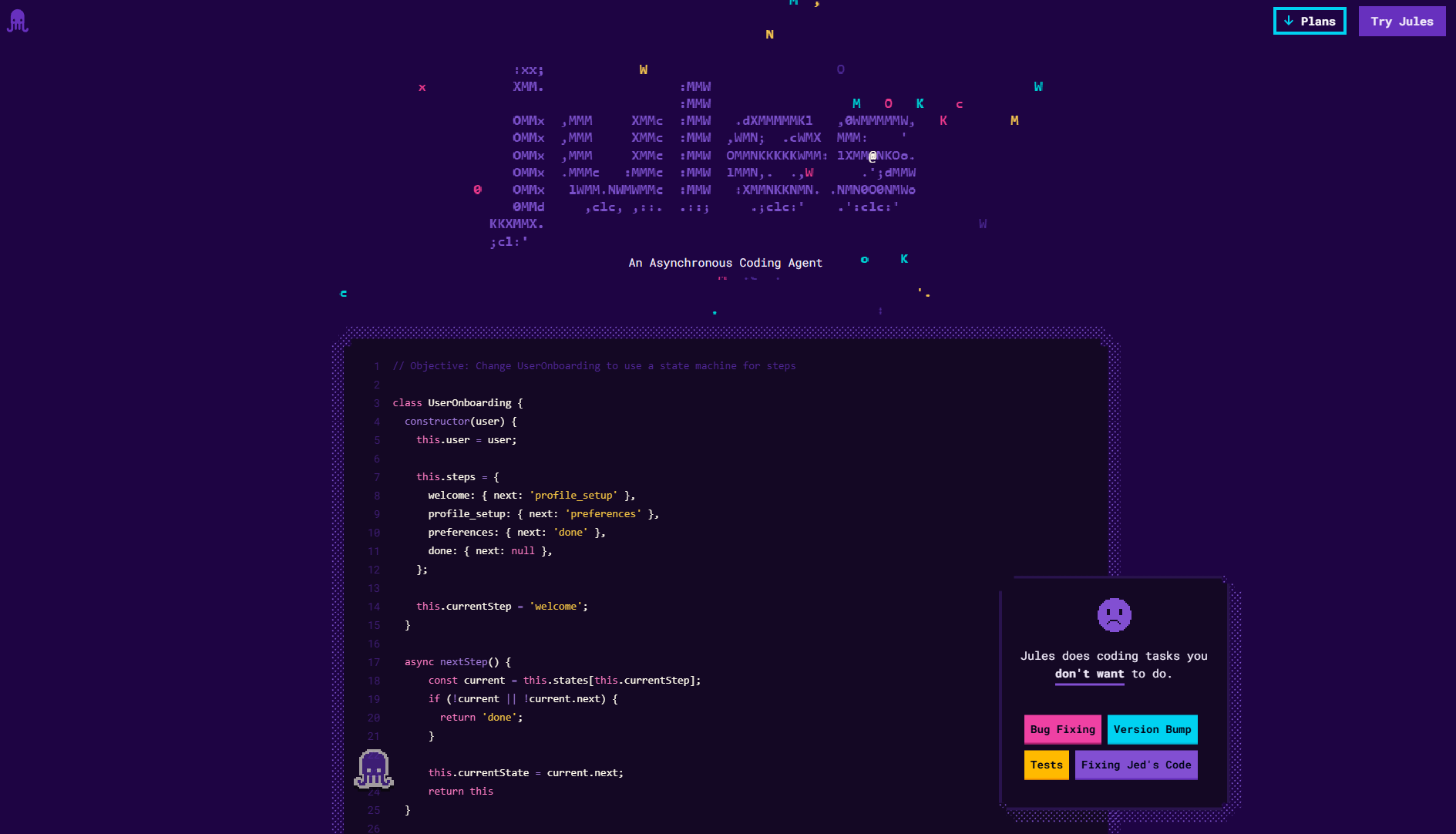

The Jules Workflow

- Step 1: You prompt Jules with a coding task.

- Step 2: Jules drafts a candidate change, and the critic reviews the proposed code and its rationale, providing a high-level assessment.

- Step 3: Jules revises the code in response, and the critic re-evaluates as needed.

- Step 4: You receive code that has already been internally reviewed.

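This approach takes inspiration from the actor-critic model in reinforcement learning: Jules acts as the actor and proposes solutions, and the critic evaluates them. Unlike in reinforcement learning, the feedback does not update any model parameters; it shapes the next revision of the current task, leading to smarter, safer code with no retraining required.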
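The workflow above can be sketched as a small loop. This is a minimal illustration under stated assumptions, not Jules’ implementation: `Review`, `critic_loop`, `toy_generate`, `toy_critique`, and the `max_rounds` cap are all hypothetical names, and the two toy functions are stand-ins for the model calls.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Review:
    """Hypothetical critic verdict: an empty issue list means approval."""
    issues: List[str] = field(default_factory=list)

    @property
    def approved(self) -> bool:
        return not self.issues


def critic_loop(task, generate, critique, max_rounds=3):
    """Generate a patch, review it, and revise until approved or out of rounds."""
    feedback: List[str] = []
    for round_number in range(1, max_rounds + 1):
        patch = generate(task, feedback)   # actor proposes (or revises)
        review = critique(task, patch)     # critic evaluates the proposal
        if review.approved:
            break
        feedback = review.issues           # feedback steers the next revision
    return patch, review, round_number


def toy_generate(task, feedback):
    # Stand-in for the model: adds an empty-input guard only after being told to.
    if any("empty" in issue for issue in feedback):
        return "def average(xs):\n    return sum(xs) / len(xs) if xs else 0.0"
    return "def average(xs):\n    return sum(xs) / len(xs)"


def toy_critique(task, patch):
    # Stand-in for the critic: flags a missing empty-input guard.
    if "if xs" not in patch:
        return Review(issues=["fails on empty input"])
    return Review()


patch, review, rounds = critic_loop("write average()", toy_generate, toy_critique)
```

Nothing in the loop updates a model. The critic’s feedback is passed back as input to the next generation call, which is the sense in which no retraining is required. The `max_rounds` cap is a design choice of this sketch that keeps the loop from running indefinitely if the critic never approves.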

Impact for Developers

With software development moving faster than ever, early detection of issues is crucial. Jules’ critic brings review further upstream, helping developers catch mistakes before they escalate. While human oversight remains essential, this system means AI-generated code has already been through a round of review before a human sees it.

Ultimately, strong code is built on a foundation of questioning and critique. By equipping Jules with this capability, Google is pushing the boundaries of automated code generation, ensuring that AI is not just a coder but a vigilant reviewer as well.

Jules: Google’s AI Code Reviewer Setting a New Standard for Quality