As artificial intelligence powers increasingly complex workflows, closed-domain hallucination becomes a critical challenge. In these environments, AI models may introduce content that is not supported by the source material they were given.

Traditional methods that check only the final output fall short here: a multi-step workflow produces many intermediate results, and an error can enter at any of them. Addressing this complexity requires not just detection but also traceability from source to output, so that users can pinpoint where inaccuracies arise.

VeriTrail: A Next-Generation Solution

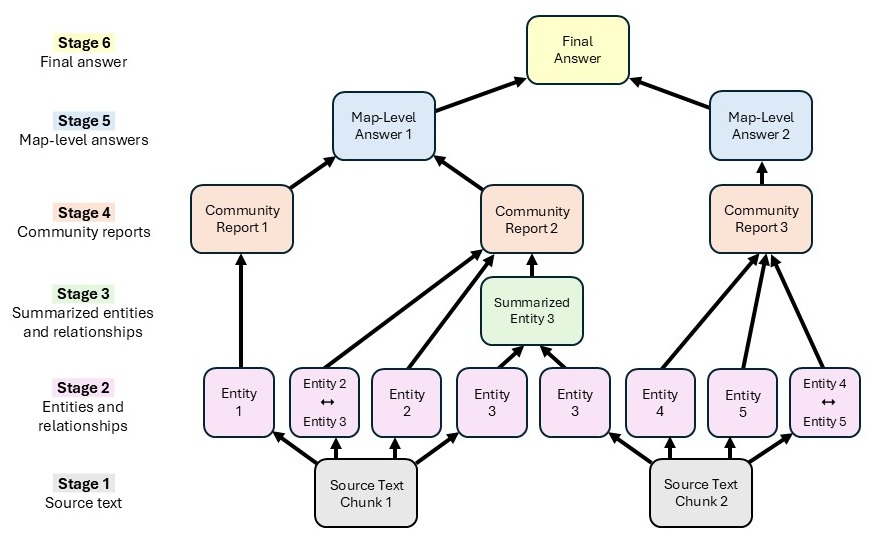

VeriTrail is a new approach designed specifically for these intricate workflows. It models the generative process as a directed acyclic graph (DAG) in which each node is a text chunk (original source data, an intermediate result, or the final output) and edges represent the flow of data between nodes. This structure enables VeriTrail to deliver:

- Provenance: Tracing the lineage of claims from output back to source material.

- Error Localization: Identifying exactly which step introduces unsupported or hallucinatory content.

Image Credit: Microsoft
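The DAG model above can be sketched as a small data structure. This is a minimal illustration under stated assumptions, not VeriTrail's actual code: the names `Node`, `WorkflowDAG`, `add_node`, `sources_of`, and `is_root` are hypothetical, and the node texts are toy examples.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    """A text chunk: original source data, an intermediate result, or the final output."""
    node_id: str
    text: str
    # IDs of the nodes whose text flowed into this one (incoming data-flow edges).
    inputs: list[str] = field(default_factory=list)


class WorkflowDAG:
    """Hypothetical container for a multi-step workflow modeled as a DAG."""

    def __init__(self) -> None:
        self.nodes: dict[str, Node] = {}

    def add_node(self, node_id: str, text: str, inputs: list[str] | None = None) -> None:
        inputs = list(inputs or [])
        if node_id in self.nodes:
            raise ValueError(f"duplicate node id: {node_id}")
        # Requiring inputs to exist already means every edge points from an older
        # node to a newer one, so no cycle can ever be created.
        missing = [i for i in inputs if i not in self.nodes]
        if missing:
            raise KeyError(f"unknown input nodes: {missing}")
        self.nodes[node_id] = Node(node_id, text, inputs)

    def sources_of(self, node_id: str) -> list[Node]:
        """One step backward along the data flow: the nodes that fed node_id."""
        return [self.nodes[i] for i in self.nodes[node_id].inputs]

    def is_root(self, node_id: str) -> bool:
        """Root nodes have no inputs, i.e. they are original source material."""
        return not self.nodes[node_id].inputs


# Usage with toy text: two source documents -> one summary -> final output.
dag = WorkflowDAG()
dag.add_node("doc1", "Congress passed a bill capping insulin costs.")
dag.add_node("doc2", "The bill takes effect next year.")
dag.add_node("summary1", "A new law caps insulin costs from next year.", inputs=["doc1", "doc2"])
dag.add_node("final", "Insulin costs will be capped.", inputs=["summary1"])
print([n.node_id for n in dag.sources_of("summary1")])
```

Tracing a claim "backward" then amounts to repeatedly calling something like `sources_of` until only root nodes remain.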

How the System Works

VeriTrail begins by extracting distinct claims from the final output using a tool called Claimify. Each claim is then traced backward through the workflow’s DAG:

- Evidence Selection: At every node, sentences are chosen as potential evidence for or against each claim.

- Verdict Generation: An AI model evaluates the claim, categorizing it as “Fully Supported,” “Not Fully Supported,” or “Inconclusive” based on the available evidence.
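To make the two steps concrete, here is a rough sketch. In VeriTrail both steps are performed by a language model; the keyword-overlap functions below are purely hypothetical stand-ins so the example runs on its own. The sentence-ID scheme (`"node:index"`) and the thresholds `min_overlap` and `min_coverage` are assumptions for illustration.

```python
import re
from enum import Enum


class Verdict(Enum):
    FULLY_SUPPORTED = "Fully Supported"
    NOT_FULLY_SUPPORTED = "Not Fully Supported"
    INCONCLUSIVE = "Inconclusive"


def split_sentences(node_id: str, text: str) -> dict[str, str]:
    """Give each sentence of a node an ID such as 'summary1:0' (hypothetical scheme)."""
    parts = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    return {f"{node_id}:{i}": s for i, s in enumerate(parts)}


def _words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def select_evidence(claim: str, sentences: dict[str, str], min_overlap: int = 2) -> list[str]:
    """Stand-in for LLM evidence selection: IDs of sentences sharing
    at least `min_overlap` words with the claim."""
    claim_words = _words(claim)
    return [sid for sid, s in sentences.items() if len(claim_words & _words(s)) >= min_overlap]


def generate_verdict(claim: str, evidence: list[str], min_coverage: float = 0.6) -> Verdict:
    """Stand-in for LLM verdict generation, using crude word coverage of the claim."""
    if not evidence:
        return Verdict.NOT_FULLY_SUPPORTED
    claim_words = _words(claim)
    covered = claim_words & set().union(*(_words(e) for e in evidence))
    coverage = len(covered) / max(len(claim_words), 1)
    if coverage >= min_coverage:
        return Verdict.FULLY_SUPPORTED
    if coverage >= min_coverage / 2:
        return Verdict.INCONCLUSIVE
    return Verdict.NOT_FULLY_SUPPORTED


# Usage with toy text.
sents = split_sentences("doc1", "Insulin costs were capped. Weather was mild.")
ids = select_evidence("insulin costs capped", sents)
print(ids, generate_verdict("insulin costs capped", [sents[i] for i in ids]))
```

A real system would replace both heuristics with model calls; the point here is only the shape of the interface: sentences in, IDs out, then a three-way label.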

This claim verification proceeds step by step until support is found in the original material or the claim is flagged as unsupported. The entire evidence trail is logged, ensuring transparency for each decision.
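The backward traversal can be sketched as a loop over "frontiers" of nodes. This is a simplified reading of the description above, not VeriTrail's published algorithm: the expansion policy (follow only evidence-bearing nodes after a supportive verdict, widen to all checked nodes after a "Not Fully Supported" one), the parameter name `q` for the consecutive-verdict threshold, and the plain-dict graph format are all assumptions. The `select` and `judge` callables stand in for the model-based steps.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable

FULLY = "Fully Supported"
NOT_FULLY = "Not Fully Supported"

SelectFn = Callable[[str, dict[str, str]], list[str]]  # (claim, {id: text}) -> chosen IDs
JudgeFn = Callable[[str, list[str]], str]              # (claim, evidence texts) -> verdict


@dataclass
class Step:
    nodes_checked: list[str]
    evidence_ids: list[str]
    verdict: str


def verify_claim(claim: str, final_id: str,
                 inputs: dict[str, list[str]],          # node ID -> its input node IDs
                 sentences: dict[str, dict[str, str]],  # node ID -> {sentence ID: text}
                 select: SelectFn, judge: JudgeFn,
                 q: int = 2) -> tuple[str, list[Step]]:
    """Trace one claim backward from the final output (sentence IDs assumed globally unique)."""
    frontier = sorted(inputs[final_id])
    trail: list[Step] = []  # every verdict and its evidence is logged
    consecutive_nfs = 0
    while frontier:
        candidates = {sid: t for n in frontier for sid, t in sentences[n].items()}
        # Discard any ID the selector returns that was never offered.
        evidence = [sid for sid in select(claim, candidates) if sid in candidates]
        verdict = judge(claim, [candidates[sid] for sid in evidence])
        trail.append(Step(list(frontier), evidence, verdict))
        if verdict == NOT_FULLY:
            consecutive_nfs += 1
            if consecutive_nfs >= q:
                return NOT_FULLY, trail  # flag the claim as likely hallucinated
            expand_from = frontier  # no evidence to follow: widen to all checked nodes
        else:
            consecutive_nfs = 0
            # Follow only the nodes that actually supplied evidence.
            expand_from = [n for n in frontier if any(sid in sentences[n] for sid in evidence)]
        frontier = sorted({src for n in expand_from for src in inputs[n]})
    # Reached the original source material: the last verdict stands.
    return (trail[-1].verdict if trail else NOT_FULLY), trail


# Usage with a toy chain doc1 -> summary -> final.
graph = {"final": ["summary"], "summary": ["doc1"], "doc1": []}
texts = {"final": {}, "summary": {"summary:0": "insulin cost cap"},
         "doc1": {"doc1:0": "insulin cost cap passed"}}
pick = lambda claim, cands: [sid for sid, s in cands.items() if "insulin" in s]
rule = lambda claim, ev: FULLY if ev else NOT_FULLY
verdict, steps = verify_claim("insulin costs are capped", "final", graph, texts, pick, rule)
print(verdict, [s.nodes_checked for s in steps])
```

Because the counter resets after any supportive verdict, a larger `q` makes the system more reluctant to flag a claim, at the cost of more verification rounds.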

Real-World Examples: Supported vs. Unsupported Claims

Consider two cases that showcase VeriTrail’s capabilities:

- Fully Supported Claim: For a claim about legislative action on diabetes supply costs, VeriTrail traced support through multiple summaries, confirming its accuracy at every step.

- Not Fully Supported Claim: When assessing a claim linking electric vehicle battery repairs to slow auto sales in China, VeriTrail found little to no supporting evidence across nodes. After two “Not Fully Supported” verdicts (a configurable threshold), the system correctly flagged the claim as likely hallucinated and localized the issue to the final synthesis step.

Actionable Traceability for Users

VeriTrail’s output is more than a simple yes/no verdict. For every claim, the system delivers:

- All verdicts generated during the verification process

- A detailed evidence trail, including text snippets, node locations, and concise summaries
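A per-claim result of this kind could be represented as below. The field names (`final_verdict`, `evidence_trail`, `summary`) and the `nodes_to_review` helper are hypothetical; they simply mirror the items listed above and are not VeriTrail's actual output schema.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass, field


@dataclass
class EvidenceItem:
    sentence_id: str   # ID of the selected sentence (hypothetical "node:index" scheme)
    node_id: str       # where in the DAG the snippet was found
    text: str          # the verbatim snippet
    summary: str = ""  # concise summary of what the evidence says


@dataclass
class ClaimReport:
    claim: str
    final_verdict: str
    verdicts: list[str] = field(default_factory=list)  # every verdict, in order
    evidence_trail: list[EvidenceItem] = field(default_factory=list)

    def nodes_to_review(self) -> list[str]:
        """Distinct nodes a user should inspect, in the order they were reached."""
        return list(dict.fromkeys(item.node_id for item in self.evidence_trail))

    def to_json(self) -> str:
        # asdict recurses into the nested EvidenceItem dataclasses.
        return json.dumps(asdict(self), indent=2)


# Usage with toy values.
report = ClaimReport(
    claim="Insulin costs will be capped.",
    final_verdict="Fully Supported",
    verdicts=["Fully Supported", "Fully Supported"],
    evidence_trail=[
        EvidenceItem("summary1:0", "summary1", "A new law caps insulin costs.", "Law caps costs"),
        EvidenceItem("doc1:0", "doc1", "Congress passed a bill capping insulin costs.", "Bill passed"),
    ],
)
print(report.nodes_to_review())
```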

This granular traceability lets users review only the relevant portions of the workflow, verify outputs quickly, and debug errors efficiently, even in workflows with thousands of steps.

Scalable and Reliable by Design

VeriTrail is built to handle real-world scale and complexity. Key features include:

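- Evidence Integrity: Sentence IDs returned during evidence selection are cross-checked against the input text, so only sentences that actually exist there are considered as evidence, never hallucinated ones.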

- Efficiency: The system narrows its focus to relevant nodes at each step, optimizing resource use as workflows grow.

- Configurability: Users can choose how many consecutive unsupported verdicts trigger a final flag, balancing accuracy and efficiency.

- Scalability: The approach manages arbitrarily large graphs, batching and rerunning evidence selection as needed.
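Two of the features above, ID cross-checking and batching, can be sketched together. Everything here is an assumption for illustration: token counts are approximated by whitespace word counts, `select` is any evidence selector (a language model in VeriTrail), and the rerun rule (reselect over the already selected sentences until they fit the budget, for at most `max_rounds` rounds) is one plausible reading of "rerunning evidence selection as needed".

```python
from __future__ import annotations

from typing import Callable

SelectFn = Callable[[str, dict[str, str]], list[str]]  # (claim, {id: text}) -> IDs


def count_tokens(text: str) -> int:
    return len(text.split())  # crude stand-in for a real tokenizer


def validate_ids(returned: list[str], offered: dict[str, str]) -> list[str]:
    """Keep only IDs that were actually offered; drop duplicates, keep order."""
    return list(dict.fromkeys(sid for sid in returned if sid in offered))


def batch_sentences(sentences: dict[str, str], max_tokens: int) -> list[dict[str, str]]:
    """Greedily pack sentences into batches that fit the budget
    (a single oversized sentence still gets its own batch)."""
    batches: list[dict[str, str]] = []
    current: dict[str, str] = {}
    used = 0
    for sid, text in sentences.items():
        cost = count_tokens(text)
        if current and used + cost > max_tokens:
            batches.append(current)
            current, used = {}, 0
        current[sid] = text
        used += cost
    if current:
        batches.append(current)
    return batches


def select_at_scale(claim: str, sentences: dict[str, str], select: SelectFn,
                    max_tokens: int, max_rounds: int = 3) -> list[str]:
    pool = dict(sentences)
    for _ in range(max_rounds):
        selected: list[str] = []
        for batch in batch_sentences(pool, max_tokens):
            selected.extend(validate_ids(select(claim, batch), batch))
        chosen = {sid: pool[sid] for sid in dict.fromkeys(selected)}
        fits = sum(count_tokens(t) for t in chosen.values()) <= max_tokens
        if fits or len(chosen) == len(pool):  # done, or no further progress possible
            return list(chosen)
        pool = chosen  # rerun selection over the smaller pool
    return list(pool)


# Usage: a selector that also returns an ID it was never shown.
sents = {"a:0": "insulin costs capped", "a:1": "weather", "a:2": "insulin bill"}
noisy = lambda claim, batch: [s for s, t in batch.items() if "insulin" in t] + ["fake:99"]
print(select_at_scale("insulin", sents, noisy, max_tokens=3))
```

The invented `fake:99` ID never survives `validate_ids`, which is the property the "Evidence Integrity" item describes.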

Outperforming Alternatives in Real-World Settings

VeriTrail has been evaluated on large datasets for hierarchical summarization and GraphRAG-based question answering, each with over 100,000 tokens of source content. It outperformed leading baselines, including natural language inference models and retrieval-augmented generation methods, on both accuracy and traceability. Critically, its stepwise approach not only detects hallucinations but also shows exactly where the AI strayed from its source.

Building Trust Through Transparency

By enabling rigorous traceability across complex, multi-step workflows, VeriTrail represents a major step forward in trustworthy AI. Users gain the ability to understand, verify, and debug generative processes with precision, making it easier to detect hallucinations, correct problems, and ultimately build confidence in AI systems. To explore VeriTrail’s technical details further, refer to the original research paper.

Source: Microsoft Research Blog

How VeriTrail Transforms Hallucination Detection in Multi-Step AI Workflows