Developing advanced AI applications often means wrestling with asynchronous code and specialized data handling, especially for real-time, multimodal experiences. GenAI Processors, a new open-source Python library from Google DeepMind, addresses these challenges with a unified interface for orchestrating AI workflows, reducing both complexity and development time.

The Processor Abstraction: Modular and Streamlined

Central to GenAI Processors is the Processor abstraction, a modular unit that takes a stream of data as input and produces a stream of results. Data moves through these pipelines as asynchronous streams of “ProcessorParts,” which can represent text, audio, images, or other types.

Each part carries metadata, simplifying the construction of advanced, multimodal pipelines. By leveraging Python’s asyncio, GenAI Processors connect low-level data manipulation with high-level model calls, creating efficient and readable workflows.
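To make the idea concrete, here is a minimal sketch of the pattern using only Python's standard library. It does not use the GenAI Processors API: the `Part` class, `stream_of`, `uppercase_text`, and `collect` are hypothetical names invented for illustration, standing in for a part that carries data plus metadata and a processor that maps one asynchronous stream to another.

```python
import asyncio
from dataclasses import dataclass, field
from typing import Any, AsyncIterator


@dataclass
class Part:
    """Hypothetical stand-in for a ProcessorPart: a payload plus metadata."""
    data: Any
    mimetype: str = "text/plain"
    metadata: dict = field(default_factory=dict)


async def stream_of(*parts: Part) -> AsyncIterator[Part]:
    """Turn a fixed set of parts into an asynchronous stream."""
    for part in parts:
        yield part


async def uppercase_text(parts: AsyncIterator[Part]) -> AsyncIterator[Part]:
    """A processor in miniature: consume a stream of parts, yield a stream of parts."""
    async for part in parts:
        if part.mimetype == "text/plain":
            # Transform the payload and carry the metadata along unchanged.
            yield Part(part.data.upper(), part.mimetype, dict(part.metadata))
        else:
            # Non-text parts (audio, images, ...) pass through untouched.
            yield part


async def collect(parts: AsyncIterator[Part]) -> list:
    """Drain a stream into a list so the result can be inspected."""
    return [part async for part in parts]


def run_uppercase_demo() -> list:
    source = stream_of(
        Part("hello", metadata={"turn": 1}),
        Part(b"\x00\x01", mimetype="audio/wav"),
    )
    return asyncio.run(collect(uppercase_text(source)))
```

Calling `run_uppercase_demo()` returns two parts: the text part with its payload upper-cased and its metadata intact, and the audio part exactly as it went in. Because every part declares its own type, a single stream can mix modalities and each processor can act only on the parts it understands.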

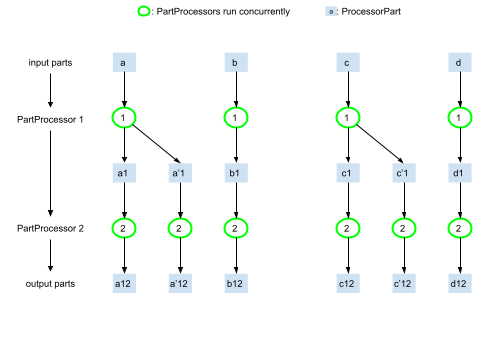

The GenAI Processors library is designed to optimize the concurrent execution of a Processor. In this example execution flow, any part can be generated as soon as all of its ancestors in the graph have been computed, so `c'12` can be generated concurrently with `a'1`, for example. The flow preserves the ordering of the output stream with respect to the input stream and is scheduled to minimize Time To First Token (preferring `a12` to `d12` whenever possible). This concurrency optimization happens under the hood: applying a Processor to a stream of input automatically triggers concurrent execution whenever possible.

Concurrency for Responsiveness

GenAI Processors are engineered for automatic concurrent execution, processing parts of the pipeline in parallel to reduce latency and improve response times. This means developers can focus on application logic without needing to manage threads or manual synchronization. Whether you’re creating a live conversational agent or a turn-based research tool, this architecture ensures fast, robust performance as user interactions unfold in real time.
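The core scheduling idea, starting work on each part as soon as it arrives while still emitting results in input order, can be sketched with plain `asyncio`. This is an illustration of the technique under simple assumptions, not the library's actual scheduler; `ordered_concurrent_map` and the demo helpers are hypothetical names.

```python
import asyncio
from typing import AsyncIterator, Awaitable, Callable


async def ordered_concurrent_map(
    parts: AsyncIterator[str],
    fn: Callable[[str], Awaitable[str]],
) -> AsyncIterator[str]:
    """Start fn on each part as it arrives; yield results in input order."""
    pending: asyncio.Queue = asyncio.Queue()

    async def schedule() -> None:
        async for part in parts:
            # create_task starts the work right away, without awaiting it.
            await pending.put(asyncio.create_task(fn(part)))
        await pending.put(None)  # sentinel: the input stream is exhausted

    scheduler = asyncio.create_task(schedule())
    while (task := await pending.get()) is not None:
        yield await task  # tasks are awaited in arrival order
    await scheduler


def run_ordering_demo():
    finished = []  # records the order in which the work actually completes

    async def source():
        for word in ("slow", "fast"):
            yield word

    async def work(word: str) -> str:
        await asyncio.sleep(0.05 if word == "slow" else 0.0)
        finished.append(word)
        return word.upper()

    async def main():
        return [r async for r in ordered_concurrent_map(source(), work)]

    return asyncio.run(main()), finished
```

In `run_ordering_demo()`, the second part finishes its work before the first, yet the results still come out in input order. The caller never touches a thread or a lock; the ordering guarantee comes from awaiting the tasks in the order they were created.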

Key Features and Developer Benefits

- Modular Design: Pipelines are composed of reusable, testable Processor units, making maintenance and code clarity much easier.

- Native Async Support: Built-in compatibility with Python’s asynchronous capabilities delivers efficient I/O and computation.

- Effortless Gemini API Access: Dedicated processors simplify integrations with both standard and Live Gemini APIs, minimizing boilerplate code.

- Extensible Architecture: Developers can extend functionality by subclassing base processors or using decorators, enabling custom logic or third-party API connections.

- Unified Multimodal Data Handling: The ProcessorPart abstraction gives consistent, flexible handling of diverse data types, supporting sophisticated multimodal applications.

- Stream Utility Tools: Built-in utilities allow advanced manipulation of data streams, such as splitting, merging, and concatenation.
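As a rough illustration of what stream utilities of this kind do, the sketch below implements concatenation and merging for asynchronous streams with the standard library alone. The function names `concat`, `merge`, and `ticker` are hypothetical and chosen for this example; they are not taken from the library, and splitting is left out for brevity.

```python
import asyncio
from typing import AsyncIterator

_DONE = object()  # sentinel marking the end of one input stream


async def concat(*streams: AsyncIterator) -> AsyncIterator:
    """Yield every item of the first stream, then the second, and so on."""
    for stream in streams:
        async for item in stream:
            yield item


async def merge(*streams: AsyncIterator) -> AsyncIterator:
    """Yield items from all streams as they become available."""
    queue: asyncio.Queue = asyncio.Queue()

    async def drain(stream: AsyncIterator) -> None:
        async for item in stream:
            await queue.put(item)
        await queue.put(_DONE)

    # Keep references to the tasks so they are not garbage collected.
    tasks = [asyncio.create_task(drain(s)) for s in streams]
    remaining = len(tasks)
    while remaining:
        item = await queue.get()
        if item is _DONE:
            remaining -= 1
        else:
            yield item


async def ticker(label: str, count: int, delay: float = 0.0) -> AsyncIterator[str]:
    """Small test source: emits label0, label1, ... with a pause before each."""
    for i in range(count):
        await asyncio.sleep(delay)
        yield f"{label}{i}"


def run_stream_demo():
    async def main():
        joined = [x async for x in concat(ticker("a", 2), ticker("b", 2))]
        mixed = [x async for x in merge(ticker("a", 2), ticker("b", 2))]
        return joined, mixed

    return asyncio.run(main())
```

`concat` preserves the order of its arguments, which suits turn-based flows, while `merge` interleaves items by arrival time, which suits combining live sources such as audio and video. The interleaving produced by `merge` depends on timing, so only the set of items it yields is guaranteed, not their relative order across streams.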

Real-World Application Scenarios

The blog highlights how developers can create a “Live Agent” that combines video, audio, and Gemini Live API calls with minimal code. By chaining processors with the `+` operator, data flows remain clear and maintainable, allowing quick prototyping of interactive, multimodal apps. Other use cases include agents that transcribe speech, generate text responses, and convert them to audio in real time, demonstrating both the flexibility and practical power of the framework.
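How a `+` operator can express a pipeline is easy to show in plain Python. The sketch below is an assumption about the general mechanism (overloading `__add__` to compose stream transforms), not the library's implementation; `Chainable` and the three placeholder stages are hypothetical and only format strings where a real agent would call speech and language models.

```python
import asyncio
from typing import AsyncIterator, Callable

Stream = AsyncIterator[str]


class Chainable:
    """Hypothetical wrapper that lets stream transforms be joined with +."""

    def __init__(self, fn: Callable[[Stream], Stream]) -> None:
        self._fn = fn

    def __call__(self, stream: Stream) -> Stream:
        return self._fn(stream)

    def __add__(self, other: "Chainable") -> "Chainable":
        # (a + b)(stream) feeds a's output stream into b.
        return Chainable(lambda stream: other(self(stream)))


@Chainable
async def transcribe(stream: Stream) -> Stream:
    async for chunk in stream:
        yield f"text({chunk})"  # placeholder for speech-to-text


@Chainable
async def respond(stream: Stream) -> Stream:
    async for text in stream:
        yield f"reply({text})"  # placeholder for a model call


@Chainable
async def speak(stream: Stream) -> Stream:
    async for reply in stream:
        yield f"audio({reply})"  # placeholder for text-to-speech


def run_chain_demo() -> list:
    pipeline = transcribe + respond + speak

    async def microphone() -> Stream:
        yield "mic-chunk"

    async def main() -> list:
        return [out async for out in pipeline(microphone())]

    return asyncio.run(main())
```

The composed `pipeline` is itself a `Chainable`, so it can be reused as a stage in a larger chain. Each stage stays independently testable, and the wiring between stages reads left to right in the order the data flows.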

Getting Started and Community Engagement

Installation is as straightforward as running `pip install genai-processors`. Google DeepMind provides Colab notebooks and scripts to help new users grasp the essentials and begin building immediately. While the library currently supports only Python, its open-source nature encourages community-driven expansion, particularly for new use cases or advanced features.

What Lies Ahead

Though still maturing, GenAI Processors lay the groundwork for more maintainable and responsive AI applications. Google DeepMind actively encourages developers to contribute, experiment, and explore new possibilities in building Gemini-based and generative AI solutions.

Takeaway

GenAI Processors deliver a unified, concurrent, and extensible framework for building sophisticated multimodal AI apps. By simplifying workflow orchestration and integration with Gemini APIs, the library empowers developers to focus on innovation and user experience, rather than underlying technical complexity.

GenAI Processors Simplify Multimodal AI App Development