Google’s latest advance in time-series forecasting is a model that adapts to new forecasting tasks at inference time, given only a handful of related examples. For industries such as retail, energy, and finance, this means moving away from laborious per-task retraining toward flexible, accurate forecasting that needs minimal data and effort.

The Rise of Few-Shot Learning in Forecasting

Traditionally, accurate forecasting demanded custom-trained models for each new scenario. Google’s foundation model, TimesFM, initially introduced zero-shot capabilities, making predictions without any task-specific training.

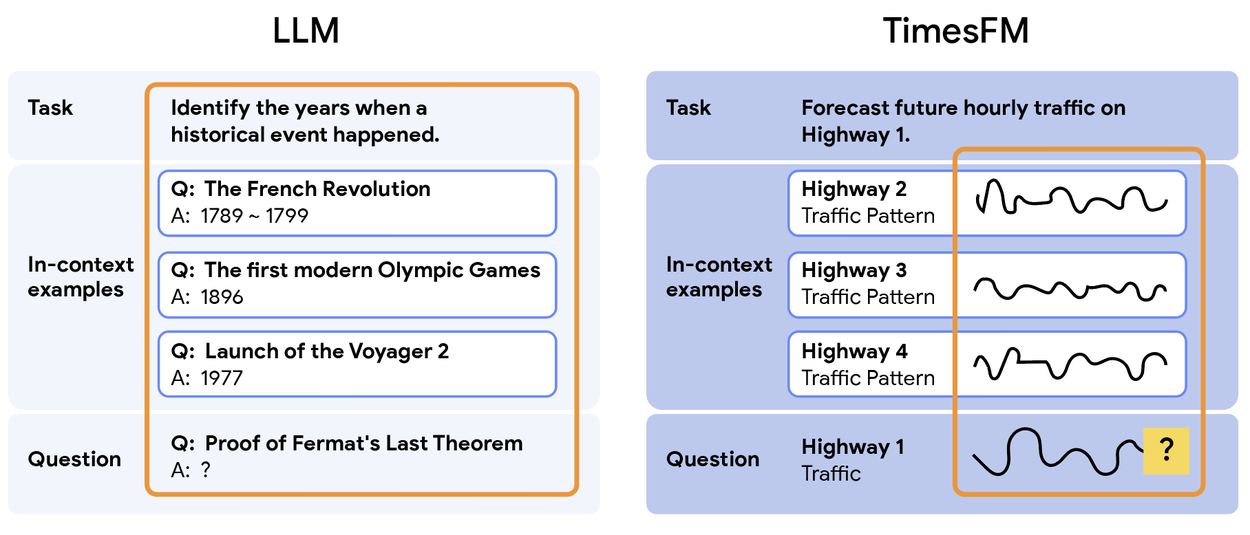

Yet real-world accuracy often depends on context, such as recent trends or similar historical series. Enter In-Context Fine-Tuning (ICF): a method that lets TimesFM learn from a few relevant examples supplied at inference time, turning it into a true few-shot learner.

Technical Innovations: Separator Tokens & Smarter Training

To enable in-context learning, Google’s researchers enhanced TimesFM’s architecture. As before, the time series is split into tokens and processed by a stack of transformer layers; the key addition is a learnable separator token. This token marks the boundaries between the in-context examples and the history of the series being forecast, so the model can draw on the examples without mistaking them for part of the target series.
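To make the layout concrete, here is a minimal sketch of how such an input could be assembled, assuming one separator after each in-context example and the target history placed last. Everything named here (`SEP`, `PATCH_LEN`, `patchify`, `build_context`) is hypothetical: in the real model the separator is a learned embedding vector rather than a string, patches are much longer, and implementations typically also pass a padding mask instead of relying on zero padding alone.

```python
from typing import List, Union

# Hypothetical stand-ins: the real separator is a learned embedding vector and
# real patches are longer; these values only illustrate the sequence layout.
SEP = "<SEP>"
PATCH_LEN = 4

Token = Union[List[float], str]


def patchify(series: List[float], patch_len: int = PATCH_LEN) -> List[List[float]]:
    """Split a series into fixed-length patches, left-padding with zeros to a multiple of patch_len."""
    pad = (-len(series)) % patch_len
    padded = [0.0] * pad + [float(v) for v in series]
    return [padded[i:i + patch_len] for i in range(0, len(padded), patch_len)]


def build_context(examples: List[List[float]], target_history: List[float]) -> List[Token]:
    """Lay out in-context examples, each followed by a separator, then the target history last."""
    tokens: List[Token] = []
    for example in examples:
        tokens.extend(patchify(example))
        tokens.append(SEP)  # marks where this example ends
    # The target series comes last; the model forecasts its continuation.
    tokens.extend(patchify(target_history))
    return tokens


context = build_context([[1, 2, 3, 4, 5, 6, 7, 8], [1, 1, 1]], [9, 9, 9, 9, 9])
print(len(context))  # 7
```

Keeping the target history at the end mirrors few-shot prompting of an LLM, where the examples come first and the query comes last.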

The team then continued pre-training the model on data that interleaves in-context examples with these separator tokens, across a variety of in-context setups. This training teaches TimesFM to pick out patterns in contextually similar series and apply the relevant ones to the current forecasting task.
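The article does not spell out how these training sequences were built. The sketch below shows one plausible scheme, offered only as an illustration: pick a target series from a group of related series, draw a random number of the others (possibly zero) as in-context examples, and hold out the target’s last `horizon` points as the forecasting target. The function name, the grouping of "related" series, and the sampling choices are all assumptions.

```python
import random
from typing import List, Tuple

Series = List[float]


def make_icf_sample(
    related: List[Series],
    horizon: int,
    max_examples: int,
    rng: random.Random,
) -> Tuple[List[Series], Series, Series]:
    """Build one hypothetical training sample: (in-context examples, target history, target future)."""
    target_idx = rng.randrange(len(related))
    target = related[target_idx]
    # Candidate examples are the other related series, so the target never appears as its own example.
    others = [s for i, s in enumerate(related) if i != target_idx]
    # Vary the number of examples (including zero) so the model sees many context sizes.
    k = rng.randint(0, min(max_examples, len(others)))
    examples = rng.sample(others, k)
    history, future = target[:-horizon], target[-horizon:]
    return examples, history, future


rng = random.Random(0)
related = [[float(i + t) for t in range(10)] for i in range(4)]  # toy series, not real data
examples, history, future = make_icf_sample(related, horizon=3, max_examples=2, rng=rng)
print(len(examples), len(history), len(future))
```

Including zero-example samples keeps the model usable in plain zero-shot mode, which is one reason a mix of context sizes is a sensible design choice.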

Similar to few-shot prompting of an LLM (left), a time-series foundation model should support few-shot prompting with an arbitrary number of related in-context time series examples (right). The orange box encloses the inputs to the models. Credit: Google Research

Benchmarking the Advances: How Does TimesFM-ICF Perform?

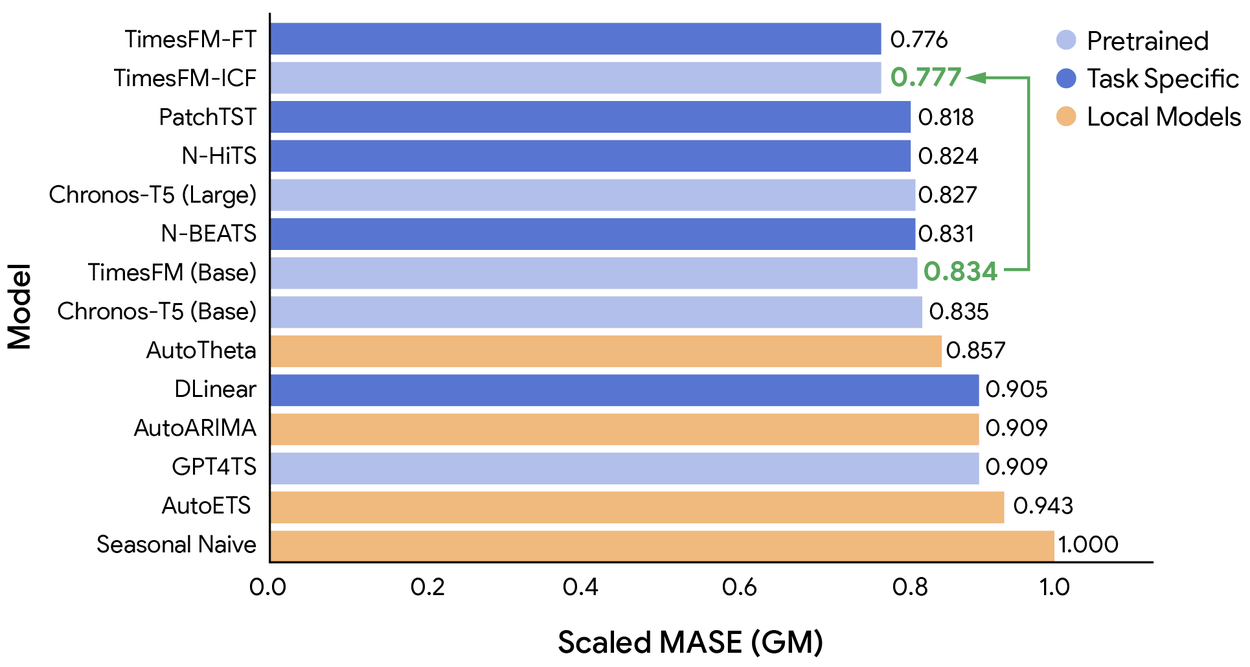

The newly enhanced TimesFM-ICF was tested across 23 diverse, previously unseen datasets. Three models were compared:

- TimesFM (Base): The original foundation model.

- TimesFM-FT: The base model, fine-tuned for each task with supervised learning.

- TimesFM-ICF: The upgraded few-shot model using in-context learning.

TimesFM-ICF improves on the performance of TimesFM (Base) relative to many task-specific models and matches the performance of TimesFM-FT, a version of TimesFM fine-tuned separately for each dataset. Credit: Google Research

The results were compelling: TimesFM-ICF was 6.8% more accurate than the base model and matched the performance of the supervised fine-tuned variant. In practice, organizations can reach fine-tuned accuracy without the resource-heavy process of building and fine-tuning a new model for every situation.
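The article does not say which error metric underlies the 6.8% figure. As an illustration only, the sketch below assumes a scale-free error such as MASE (mean absolute scaled error) per dataset, aggregated across datasets with a geometric mean, and then expresses the gain as a fractional error reduction. The per-dataset scores in the usage lines are made-up toy numbers, not results from the study.

```python
import math
from typing import Sequence


def mase(actual: Sequence[float], forecast: Sequence[float],
         history: Sequence[float], season: int = 1) -> float:
    """Mean absolute scaled error: forecast MAE divided by the in-sample seasonal-naive MAE."""
    mae = sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)
    naive_errors = [abs(history[i] - history[i - season]) for i in range(season, len(history))]
    return mae / (sum(naive_errors) / len(naive_errors))


def geometric_mean(values: Sequence[float]) -> float:
    """Aggregate positive per-dataset scores; less dominated by one hard dataset than an arithmetic mean."""
    return math.exp(sum(math.log(v) for v in values) / len(values))


def relative_improvement(base_error: float, new_error: float) -> float:
    """Fractional error reduction versus a baseline; 0.068 would read as '6.8% better'."""
    return (base_error - new_error) / base_error


print(mase(actual=[5, 6], forecast=[5, 8], history=[1, 2, 3, 4]))  # 1.0
base = geometric_mean([1.0, 4.0])   # toy per-dataset scores for a baseline model
new = geometric_mean([0.75, 3.0])   # toy per-dataset scores for an improved model
print(round(relative_improvement(base, new), 3))  # 0.25
```

Scaling each dataset's error by a naive baseline is what makes averaging across 23 very different datasets meaningful at all.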

Business Impact: Flexibility, Efficiency, and Accessibility

TimesFM-ICF offers several key advantages:

- Seamless integration of additional in-context examples: accuracy improves as more examples are supplied, though inference time increases somewhat as the input grows.

- Better use of contextual information: TimesFM-ICF outperforms approaches that simply feed the model a longer input window without explicit in-context handling.
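The inference-time trade-off follows from sequence length: each extra example adds its patches plus one separator, and transformer cost grows with the number of tokens. The sketch below counts tokens under assumed values (patch length 32, series of 512 points, a hypothetical 512-token budget); none of these numbers come from the article, and actual wall-clock cost depends on the model and hardware.

```python
import math


def context_tokens(num_examples: int, example_len: int, history_len: int, patch_len: int) -> int:
    """Token count for an ICF-style context: each example's patches plus one separator, then the history."""
    per_example = math.ceil(example_len / patch_len) + 1  # +1 for the separator token
    return num_examples * per_example + math.ceil(history_len / patch_len)


def max_examples_within(budget: int, example_len: int, history_len: int, patch_len: int) -> int:
    """How many equal-length examples fit alongside the target history in a token budget."""
    per_example = math.ceil(example_len / patch_len) + 1
    remaining = budget - math.ceil(history_len / patch_len)
    return max(0, remaining // per_example)


for n in (0, 1, 2, 4):
    print(n, context_tokens(n, 512, 512, 32))  # 16, 33, 50, 84 tokens
print(max_examples_within(512, 512, 512, 32))  # 29
```

Token count grows linearly with the number of examples, while self-attention work grows faster than linearly in sequence length, which is why adding examples is not free.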

For businesses, this means deploying a single, versatile forecasting model for a variety of tasks. A new product launch or market entry no longer requires starting from scratch; a few well-chosen examples suffice to generate robust forecasts. This cuts operational costs, accelerates innovation, and democratizes access to advanced AI-driven insights.

Future Directions: Toward Automated Context Selection

The research team is now working on automating the selection of the most relevant in-context examples. Automating this step would further simplify the user experience and amplify the value of foundation models in time-series forecasting. By making data-driven decision-making more accessible, these advances could transform industries and empower organizations of all sizes.
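The article does not describe how Google intends to automate selection. One simple baseline, swapped in here purely for illustration, is nearest-neighbor retrieval: z-normalize the recent window of the target and of each candidate series, then keep the `k` candidates whose shapes are closest in Euclidean distance. The function names and the `window` parameter are hypothetical, and candidates are assumed to be at least `window` points long.

```python
import math
from typing import List, Sequence


def znorm(x: Sequence[float]) -> List[float]:
    """Z-normalize so the comparison reflects shape rather than level or scale."""
    mean = sum(x) / len(x)
    std = math.sqrt(sum((v - mean) ** 2 for v in x) / len(x))
    if std == 0:
        return [0.0] * len(x)  # a flat series has no shape beyond its level
    return [(v - mean) / std for v in x]


def select_examples(target_history: Sequence[float], candidates: List[List[float]],
                    k: int, window: int) -> List[List[float]]:
    """Return the k candidates whose last `window` points are closest in shape to the target's."""
    query = znorm(target_history[-window:])

    def distance(candidate: List[float]) -> float:
        ref = znorm(candidate[-window:])
        return math.sqrt(sum((a - b) ** 2 for a, b in zip(query, ref)))

    return sorted(candidates, key=distance)[:k]


candidates = [[4, 3, 2, 1], [5, 5, 5, 5], [10, 20, 30, 40]]
print(select_examples([1, 2, 3, 4], candidates, k=2, window=4))  # [[10, 20, 30, 40], [5, 5, 5, 5]]
```

Z-normalization lets a series with the same trend but a different scale (here, `[10, 20, 30, 40]`) rank as the best match, which is usually what matters when borrowing patterns for a forecast.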

Conclusion

With TimesFM-ICF, Google demonstrates that foundation models can be both general-purpose and context-aware. The marriage of few-shot learning with robust, pretrained models marks a significant leap forward in AI forecasting, setting a new benchmark for accuracy, flexibility, and ease of use.

Source: Google Research Blog

Few-Shot Learning Revolutionizes Time-Series Forecasting: Inside Google's TimesFM-ICF