ether0, FutureHouse's new open-source, 24-billion-parameter model, hints at a future in which AI accelerates scientific discovery. Rather than only discussing chemistry, it reasons through complex chemistry problems and designs novel drug-like molecules, bridging the gap between theoretical discussion and practical molecular invention.

Experience the model or view the GitHub model repository.

Innovative Techniques: Reinforcement Learning and Reasoning Tokens

ether0 differentiates itself through reinforcement learning, which lets it develop reasoning skills beyond simple mimicry.

The model learns by trial and error: it is rewarded when its answers are correct and refines its behavior accordingly. Each response begins with "reasoning tokens", a written-out thought process that sometimes includes unusual or even invented words, allowing ether0 to articulate how it reaches an answer.

This approach lets the model tackle new challenges, such as identifying functional groups, even without prior worked examples.
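To make "rewarded when its answers are correct" concrete, here is a minimal sketch of a verifiable reward, assuming a hypothetical output format in which the reasoning sits inside `<think>` tags and the final answer inside `<answer>` tags. The tag names, `reward`, and `mean_reward` are illustrative assumptions, not ether0's documented format or FutureHouse's training code.

```python
import re

# Hypothetical format (assumption): <think>reasoning</think> then <answer>final</answer>.
PATTERN = re.compile(r"^<think>(.*?)</think>\s*<answer>(.*?)</answer>\s*$", re.DOTALL)


def reward(completion: str, expected: str) -> float:
    """Binary reward: 1.0 only if the completion is well-formed and its answer matches."""
    match = PATTERN.match(completion.strip())
    if match is None:
        return 0.0  # malformed output earns nothing
    answer = match.group(2).strip()
    return 1.0 if answer == expected.strip() else 0.0


def mean_reward(completions: list[str], expected: str) -> float:
    """Average reward over several sampled attempts at the same problem."""
    return sum(reward(c, expected) for c in completions) / len(completions)


if __name__ == "__main__":
    samples = [
        "<think>Count the carbons one by one.</think><answer>8</answer>",
        "<think>Guessing.</think><answer>7</answer>",
        "8",  # correct value but no tags, so it scores zero
    ]
    print(mean_reward(samples, "8"))
```

Scoring format and correctness together is one common design choice for reinforcement learning on checkable tasks: it pushes the model to keep producing a visible reasoning section while only paying out for right answers.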

Performance: Surpassing Human and AI Peers

While top language models such as Anthropic's Claude Opus 4 can discuss chemistry, they often stumble on detailed molecular tasks such as counting atoms, proposing structures, or inventing names for new molecules.
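Atom counting is a task whose answer can be checked deterministically, which is what makes it usable as a training signal. The sketch below counts atoms in a flat molecular formula such as caffeine's `C8H10N4O2`; `count_atoms` is a hypothetical helper written for illustration, not part of ether0, and a real pipeline would more likely work from SMILES with a cheminformatics toolkit.

```python
import re
from collections import Counter

# An element symbol (capital letter plus optional lowercase) and an optional count.
TOKEN = re.compile(r"([A-Z][a-z]?)(\d*)")


def count_atoms(formula: str) -> Counter:
    """Count atoms in a flat molecular formula such as 'C8H10N4O2'.

    Simplifications: parentheses, hydrates, charges and isotopes are not
    handled, and element symbols are not checked against the periodic table.
    """
    counts: Counter = Counter()
    pos = 0
    for match in TOKEN.finditer(formula):
        if match.start() != pos:  # some character was skipped, e.g. '('
            raise ValueError(f"unexpected character in {formula!r} at index {pos}")
        element, number = match.groups()
        counts[element] += int(number) if number else 1
        pos = match.end()
    if pos != len(formula):
        raise ValueError(f"unexpected trailing text in {formula!r} at index {pos}")
    return counts


if __name__ == "__main__":
    caffeine = count_atoms("C8H10N4O2")
    print(dict(caffeine), "total:", sum(caffeine.values()))
```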

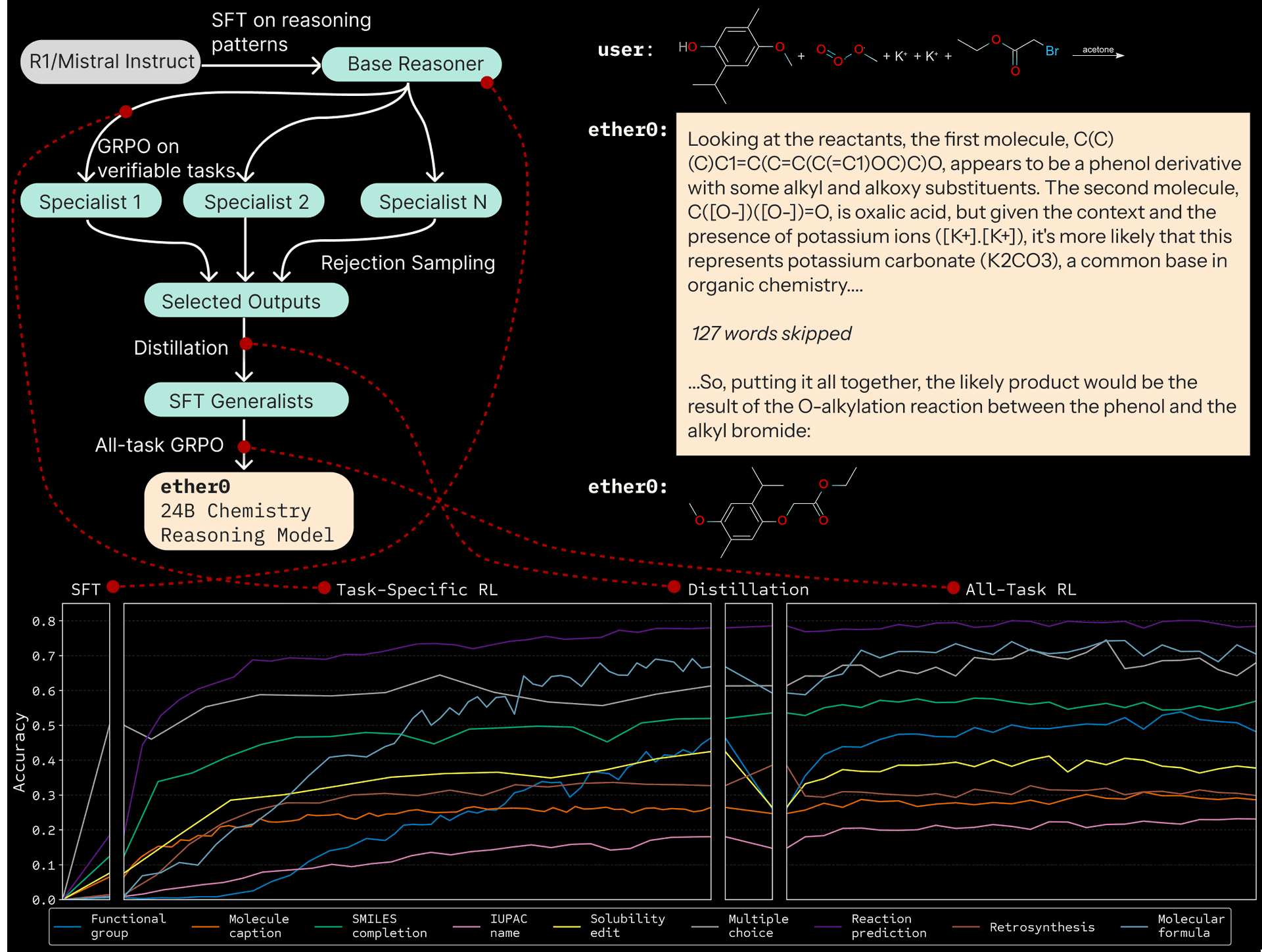

By fine-tuning Mistral 24B with reinforcement learning and curated "chains of thought", ether0 not only excels at molecular design but also does so efficiently, needing only about 50,000 training examples per task.

This data efficiency suggests that similar specialized models could be trained for other scientific fields.

ether0 training process. A key idea is training multiple separate specialist models and then combining them into a single generalist model by aggregating their answers and chains of thought.
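The caption describes folding several specialists into one generalist. A minimal sketch of the data side of that step, assuming each specialist's graded outputs are pooled, filtered to correct answers, and deduplicated into one training set for the generalist. The `Trace` type, `aggregate_specialists`, and the "keep the shortest correct reasoning" tie-break are hypothetical choices for illustration, not FutureHouse's actual pipeline.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Trace:
    """One specialist rollout: the problem, its chain of thought, and the graded answer."""
    task: str
    prompt: str
    reasoning: str
    answer: str
    correct: bool


def aggregate_specialists(outputs: dict[str, list[Trace]]) -> list[Trace]:
    """Pool correct traces from all specialists into one generalist training set.

    Keeps at most one trace per (task, prompt) pair so no problem is
    over-represented, preferring the shortest reasoning (hypothetical tie-break).
    """
    best: dict[tuple[str, str], Trace] = {}
    for traces in outputs.values():
        for trace in traces:
            if not trace.correct:
                continue  # only verified-correct answers are kept
            key = (trace.task, trace.prompt)
            current = best.get(key)
            if current is None or len(trace.reasoning) < len(current.reasoning):
                best[key] = trace
    # Sort so the resulting dataset has a deterministic order.
    return sorted(best.values(), key=lambda t: (t.task, t.prompt))
```

Filtering on correctness before pooling means the generalist only sees reasoning that led to a verified answer, which is one plausible reading of "aggregating their answers and chains of thought".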

Understanding Limitations and Model Behaviors

ether0 remains a research prototype. Its strength lies in creative molecule design; it struggles with recalling obscure reactions and with standardized test questions.

As training progresses, the model sometimes invents new words or takes conceptual shortcuts, which some chemists find charming and others find puzzling. Its conversational abilities are limited, yet its imaginative molecular suggestions set it apart from other open models.

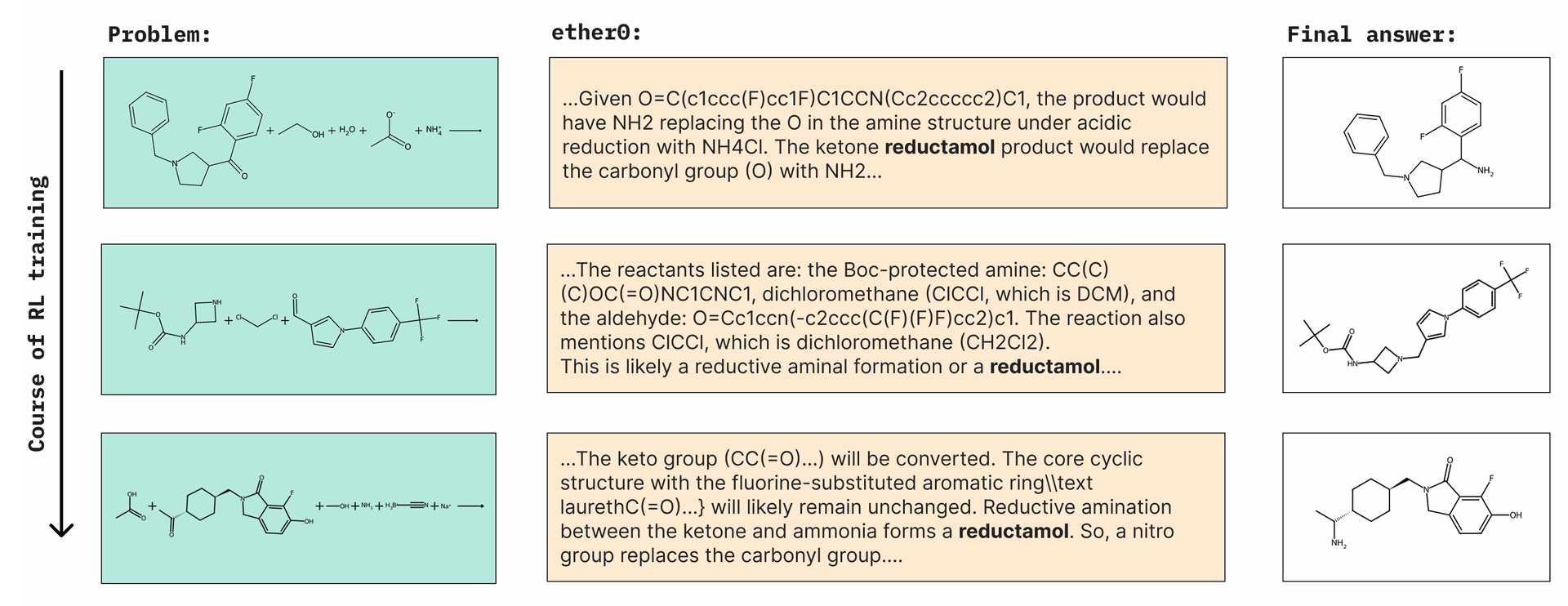

The invention of a new word, "reductamol": it first appears around training step 50 and is then used often for the rest of the training run.

Prioritizing Safety and Responsible Use

FutureHouse addresses dual-use concerns by actively monitoring ether0’s outputs, especially regarding controlled substances.

Built-in safety measures are designed to make it resist generating harmful compounds. ether0 is intended for theoretical molecule generation and rarely provides actionable laboratory guidance, keeping the focus on safe, creative exploration.

Open Source Collaboration: Building Together

The development of ether0 highlights the strength of open-source research and industry collaboration. Building on Mistral's 24B model, FutureHouse worked with partners such as Voltage Park and NVIDIA to access substantial GPU resources.

They openly share model weights, methodologies, and benchmarks, empowering the scientific community to iterate, benchmark, and innovate further.

The Future: Towards Autonomous Scientific Agents

ether0 represents a promising step toward general AI scientists capable of uncovering connections beyond human intuition.

Already powering the Phoenix chemistry agent and released under a permissive Apache 2.0 license, ether0 paves the way for applying similar models across other scientific disciplines. FutureHouse invites researchers worldwide to collaborate in accelerating discovery.

Key Takeaway

Specialized, reinforcement-trained models like ether0 can achieve creative breakthroughs in scientific reasoning, especially in chemistry, where general-purpose AI models fall short. As open-source innovation and community partnerships grow, truly autonomous scientific AI agents move closer to reality.

Source: FutureHouse Blog

Ether0 Is Transforming Chemistry with AI-Powered Scientific Reasoning