Apple is redefining generative AI by embedding advanced language models directly into its apps, all while maintaining a steadfast commitment to user privacy.

This work, introduced at the 2025 Worldwide Developers Conference, brings a new generation of foundation models powering Apple Intelligence, along with a new Foundation Models framework that gives developers direct access to the on-device model.

Revolutionary Model Design and Performance

Apple’s approach features both on-device and server-based models, each tailored for specific needs. The on-device model, optimized for Apple silicon, harnesses around 3 billion parameters for swift, efficient processing with minimal power use.

Meanwhile, the server model uses a new Parallel-Track Mixture-of-Experts (PT-MoE) transformer, which combines track parallelism, sparse mixture-of-experts computation, and interleaved global and local attention to deliver high quality at competitive cost on Private Cloud Compute.
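The "sparse computation" ingredient of a mixture-of-experts layer is easiest to see in isolation: a router scores every expert, but only the top-k experts actually run for a given token. The Python sketch below shows generic top-k routing only. It does not model PT-MoE's track parallelism or attention scheme, and the experts and router weights are hypothetical toy values.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(x: Vector, router: List[Vector], experts: List[Expert], k: int = 2) -> Tuple[Vector, List[int]]:
    """Route one token to its top-k experts and mix their outputs by gate weight."""
    # One router logit per expert: dot product of the token with that expert's routing vector.
    logits = [sum(r_i * x_i for r_i, x_i in zip(r, x)) for r in router]
    # Keep only the k highest-scoring experts; the others are never evaluated (sparse compute).
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    gates = softmax([logits[i] for i in top])
    out = [0.0] * len(x)
    for gate, i in zip(gates, top):
        y = experts[i](x)
        out = [o + gate * y_j for o, y_j in zip(out, y)]
    return out, top


# Toy usage: four hypothetical experts; expert i multiplies its input by (i + 1).
experts = [lambda v, s=i + 1: [s * v_j for v_j in v] for i in range(4)]
router = [[1.0, 0.0], [0.5, 0.0], [-1.0, 0.0], [2.0, 0.0]]
out, top = moe_layer([1.0, 0.0], router, experts, k=2)
print(top, round(out[0], 3))  # [3, 0] 3.193
```

Per-token compute scales with k rather than with the total number of experts, which is how MoE models add capacity without a matching increase in inference cost.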

- Efficiency Redefined: The on-device model is split into two blocks. The second block reuses the key-value (KV) cache of the first instead of storing its own, which cuts KV cache memory by 37.5% and shortens time-to-first-token.

- Advanced Multimodal Abilities: Both models handle text and images, using custom vision encoders to grasp both local and global context.

- Broader Language Support: With support for 15 languages, Apple Intelligence serves a global audience.
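A back-of-the-envelope calculation shows how much memory KV cache sharing can save. The model dimensions below are hypothetical and are not Apple's published configuration. The sketch assumes a 5:3 layer split in which the second block stores no keys or values of its own.

```python
from dataclasses import dataclass


@dataclass
class KVConfig:
    n_layers: int        # total transformer layers (hypothetical value below)
    n_kv_heads: int      # key/value heads per layer
    head_dim: int        # dimension of each head
    seq_len: int         # tokens held in the cache
    bytes_per_elem: int  # 2 for fp16/bf16 caches


def kv_bytes_per_layer(c: KVConfig) -> int:
    # Factor 2: each layer stores one key tensor and one value tensor.
    return 2 * c.n_kv_heads * c.head_dim * c.seq_len * c.bytes_per_elem


def kv_cache_bytes(c: KVConfig, layers_with_cache: int) -> int:
    # Only layers that write their own keys/values need cache memory.
    return layers_with_cache * kv_bytes_per_layer(c)


cfg = KVConfig(n_layers=32, n_kv_heads=8, head_dim=128, seq_len=4096, bytes_per_elem=2)
block1_layers = cfg.n_layers * 5 // 8         # 20 layers keep their own KV cache
baseline = kv_cache_bytes(cfg, cfg.n_layers)  # every layer caches K and V
shared = kv_cache_bytes(cfg, block1_layers)   # block 2 reuses block 1's cache
mib = 2 ** 20
print(baseline // mib, shared // mib, 1 - shared / baseline)  # 512 320 0.375
```

The saving equals the fraction of layers that no longer write a cache, so it is independent of sequence length, head count, and precision.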

Ethical Data Use and Training Integrity

Privacy isn’t just a feature; it’s built into Apple’s data practices. The foundation models are trained on licensed data, publicly available web data collected by Applebot, and synthetic data, never on users’ private personal data or user interactions. Filtering removes personally identifiable information, profanity, and unsafe material, and web publishers can opt out of having their content used for training through robots.txt rules for Applebot.

- Diverse Text Sources: Priority is given to high-quality web data and domain-specific documents, extracted with advanced crawling strategies.

- Vast Visual Data: Over 10 billion quality image-text pairs and millions of interleaved documents, enhanced by synthetic captions, power rich visual comprehension.
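Publishers express this opt-out through standard robots.txt rules. Apple’s Applebot documentation describes an `Applebot-Extended` user-agent token that publishers can disallow to keep their content out of model training while still allowing `Applebot` to crawl. The sketch below checks such a policy with Python’s standard `urllib.robotparser`. The policy itself is a hypothetical example, and Applebot’s own parser may resolve user-agent groups differently.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical publisher policy: allow Applebot to crawl (e.g. for search),
# but disallow the Applebot-Extended token that governs training use.
# The Applebot-Extended group comes first because Python's parser matches
# user agents by substring, so an "Applebot" group listed first would also
# match "Applebot-Extended".
ROBOTS_TXT = """\
User-agent: Applebot-Extended
Disallow: /

User-agent: Applebot
Allow: /
"""


def allowed(robots_txt: str, agent: str, url: str) -> bool:
    """Return whether `agent` may use `url` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


url = "https://example.com/articles/post.html"
for agent in ("Applebot", "Applebot-Extended"):
    print(agent, allowed(ROBOTS_TXT, agent, url))
# Applebot True
# Applebot-Extended False
```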

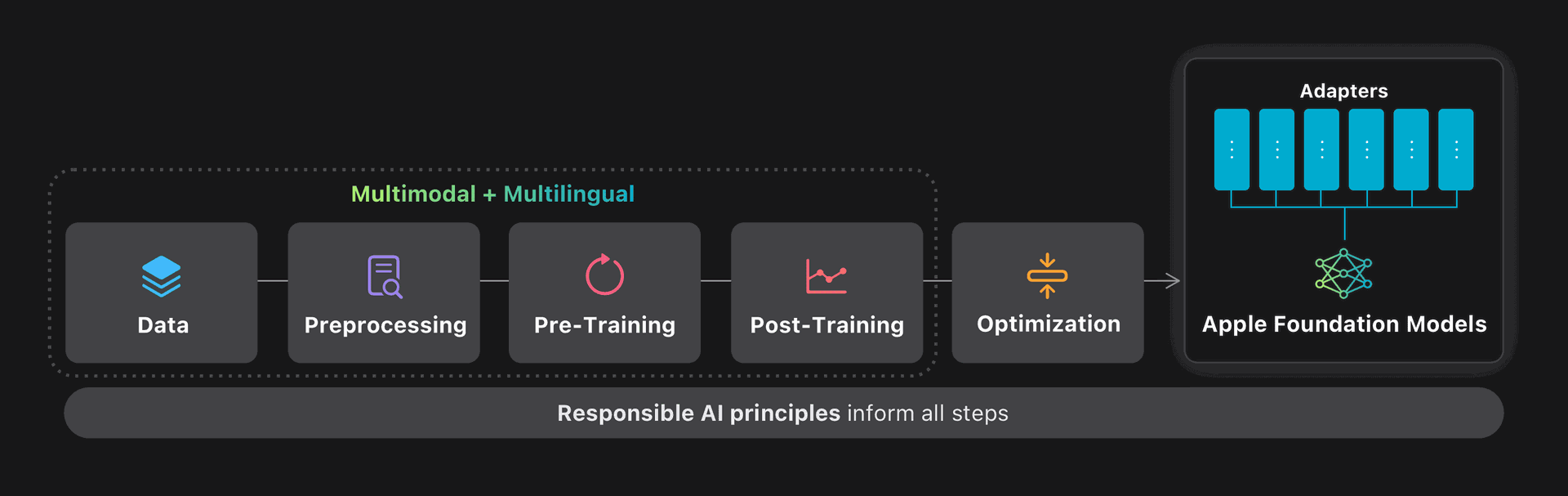

Scalable Training and Optimization

Apple’s pre-training pipeline now covers more languages and modalities, using staged training that starts with text and then integrates vision. The server model is trained on 14 trillion tokens of text, while sparse upcycling reduces the compute needed to train the on-device model.
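Sparse upcycling, as generally described, initializes a mixture-of-experts model from a trained dense checkpoint rather than from random weights. The minimal sketch below assumes the standard form of the technique: every expert is a copy of the dense feed-forward block, and only the router is new. The dimensions and router initialization are hypothetical, and this illustrates the general technique, not Apple’s training recipe.

```python
import copy
import math
import random
from typing import List, Tuple

Matrix = List[List[float]]


def matvec(m: Matrix, v: List[float]) -> List[float]:
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def dense_ffn(x: List[float], w_in: Matrix, w_out: Matrix) -> List[float]:
    """Two-layer feed-forward block: w_out @ relu(w_in @ x)."""
    hidden = [max(0.0, h) for h in matvec(w_in, x)]
    return matvec(w_out, hidden)


def upcycle(w_in: Matrix, w_out: Matrix, n_experts: int, seed: int = 0) -> Tuple[List[Tuple[Matrix, Matrix]], Matrix]:
    """Sparse upcycling: each expert starts as a copy of the trained dense FFN.
    Only the router is new; its init scale (0.02) is a hypothetical choice."""
    rng = random.Random(seed)
    experts = [(copy.deepcopy(w_in), copy.deepcopy(w_out)) for _ in range(n_experts)]
    d_model = len(w_in[0])
    router = [[rng.gauss(0.0, 0.02) for _ in range(d_model)] for _ in range(n_experts)]
    return experts, router


def moe_ffn(x: List[float], experts: List[Tuple[Matrix, Matrix]], router: Matrix, k: int = 2) -> List[float]:
    """Top-k routed mixture of the experts' FFN outputs."""
    logits = matvec(router, x)
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    m = max(logits[i] for i in top)
    weights = [math.exp(logits[i] - m) for i in top]
    total = sum(weights)
    outputs = [dense_ffn(x, *experts[i]) for i in top]
    return [sum(w / total * y[j] for w, y in zip(weights, outputs)) for j in range(len(outputs[0]))]


w_in = [[0.5, -0.2], [0.1, 0.3], [-0.4, 0.6]]  # d_ff = 3, d_model = 2
w_out = [[1.0, 0.5, -0.5], [0.2, -0.1, 0.4]]   # d_model = 2, d_ff = 3
x = [1.0, 2.0]
experts, router = upcycle(w_in, w_out, n_experts=8)
# At initialization every expert is identical and the gates sum to 1,
# so the upcycled layer reproduces the dense layer's output (up to rounding).
print(dense_ffn(x, w_in, w_out))
print(moe_ffn(x, experts, router))
```

Because the upcycled layer starts out functionally identical to the dense one, training begins from the dense checkpoint’s quality instead of from scratch, and the experts specialize as training continues.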

- Superior Compression: The on-device model is compressed to 2 bits per weight using quantization-aware training, with a quantized embedding table. The server model is compressed with Adaptive Scalable Texture Compression (ASTC), a block-based texture format that Apple GPUs can decompress in hardware.

- Quality Assurance: Low-rank adapters trained after compression recover much of the quality lost to quantization, so regressions stay small and some tasks even improve slightly.
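At 2 bits, each weight is stored as one of only four levels. The minimal sketch below shows plain post-training min-max quantization with a hypothetical group size of 4. Apple’s on-device model learns its 2-bit weights during training (quantization-aware training), which this sketch does not model.

```python
from typing import List, Tuple

LEVELS = 3  # a 2-bit code takes values 0..3, i.e. 3 steps above the group minimum


def quantize_2bit(weights: List[float], group_size: int = 4) -> Tuple[List[int], List[Tuple[float, float]]]:
    """Min-max quantize each group of weights to 2-bit codes plus (offset, scale)."""
    codes: List[int] = []
    params: List[Tuple[float, float]] = []
    for start in range(0, len(weights), group_size):
        group = weights[start:start + group_size]
        lo, hi = min(group), max(group)
        scale = (hi - lo) / LEVELS or 1.0  # constant group: any nonzero scale works
        params.append((lo, scale))
        codes.extend(min(LEVELS, max(0, round((w - lo) / scale))) for w in group)
    return codes, params


def dequantize_2bit(codes: List[int], params: List[Tuple[float, float]], group_size: int = 4) -> List[float]:
    """Reconstruct approximate weights: offset + code * scale, per group."""
    return [params[i // group_size][0] + c * params[i // group_size][1] for i, c in enumerate(codes)]


def pack(codes: List[int]) -> bytes:
    """Store four 2-bit codes in each byte."""
    out = bytearray()
    for start in range(0, len(codes), 4):
        byte = 0
        for j, c in enumerate(codes[start:start + 4]):
            byte |= c << (2 * j)
        out.append(byte)
    return bytes(out)


weights = [0.9, -0.3, 0.1, -0.6, 0.05, 0.4, -0.2, 0.25]
codes, params = quantize_2bit(weights)
restored = dequantize_2bit(codes, params)
packed = pack(codes)
print(len(weights), "weights ->", len(packed), "bytes")  # 8 weights -> 2 bytes
```

The per-group offset and scale add storage overhead, so the effective bits per weight depend on the group size. Each restored weight is within half a quantization step of the original.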

Empowering Developers with Foundation Models Framework

The new Foundation Models framework gives developers direct access to the roughly 3B-parameter on-device model, enabling production-quality features such as summarization, extraction, and creative content generation, all processed on device for privacy. Native Swift integration and macros make guided generation straightforward and type-safe.
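The article does not detail how the framework enforces output constraints. A common technique for guided generation is constrained decoding: at each step, tokens that would break the required format are masked out before the next token is chosen. The minimal Python sketch below uses a hypothetical toy scorer in place of the model and single characters in place of tokens. It is not the framework’s Swift API.

```python
from typing import Callable, Dict, List

END = "<end>"


def constrained_decode(score: Callable[[str], Dict[str, float]], allowed: List[str]) -> str:
    """Greedy decoding restricted so the output is always a prefix of an allowed string."""
    out = ""
    while True:
        # Tokens (here: single characters) that keep `out` on the way to some allowed value.
        valid = set()
        for option in allowed:
            if option == out:
                valid.add(END)
            elif option.startswith(out):
                valid.add(option[len(out)])
        scores = score(out)
        # Mask: tokens outside `valid` are never considered, however high the model scores them.
        best = max(sorted(valid), key=lambda t: scores.get(t, float("-inf")))
        if best == END:
            return out
        out += best


# Hypothetical "model" that strongly prefers the invalid character "x".
prefs = {"x": 9.0, "n": 3.0, "u": 2.0, "g": 1.0, END: 0.5}
label = constrained_decode(lambda prefix: prefs, ["positive", "negative", "neutral"])
print(label)  # neutral
```

Even though the toy scorer ranks "x" highest, the mask guarantees the result is one of the allowed values. This is the kind of structural guarantee constrained decoding provides.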

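- Guided Generation: Developers annotate their Swift types with the @Generable macro, and the framework constrains decoding so the model’s output matches that type. The model is post-trained on specialized datasets to follow these formats.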

- Tool Calling: Models can connect to custom data sources or services, expanding app capabilities.

- Extensibility: A comprehensive Python toolkit lets developers train adapters for advanced, domain-specific tasks.
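Conceptually, tool calling is a loop. The model emits a structured request that names a tool and its arguments, the app runs that tool, and the result is fed back into the model’s context. In the Foundation Models framework this is done in Swift. The Python sketch below is a framework-agnostic illustration that uses a hypothetical JSON call format and a hypothetical `find_contacts` tool.

```python
import json
from typing import Any, Callable, Dict

TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so the model can call it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def find_contacts(city: str) -> list:
    # Hypothetical stand-in for an app's own data source.
    directory = {"Cupertino": ["Ana", "Ben"], "Austin": ["Chen"]}
    return directory.get(city, [])


def dispatch(model_output: str) -> str:
    """Run the tool call the model emitted and return a JSON result to feed back."""
    call = json.loads(model_output)
    fn = TOOLS.get(call["name"])
    if fn is None:
        return json.dumps({"error": f"unknown tool {call['name']}"})
    result = fn(**call["arguments"])
    return json.dumps({"name": call["name"], "result": result})


print(dispatch('{"name": "find_contacts", "arguments": {"city": "Cupertino"}}'))
# {"name": "find_contacts", "result": ["Ana", "Ben"]}
```

Keeping tools in an explicit registry means the model can only trigger functions the app has chosen to expose.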

Comprehensive Evaluation and Competitive Results

Apple’s models have undergone stringent human and automated evaluation across languages and benchmarks. The on-device model outperforms Qwen-2.5-3B and is competitive with larger models in English. The server model compares favorably with Llama-4-Scout, though larger models such as the proprietary GPT-4o still lead in some areas. On image understanding, both models are competitive with comparable vision-language models, and in some comparisons they outperform larger ones.

Commitment to Responsible AI

Apple places responsibility at the center of its AI strategy, with clear principles guiding data use, development, and deployment. Core priorities include empowering users, respecting global diversity, and safeguarding privacy through on-device and Private Cloud Compute processing. Multilingual evaluation and safety guardrails reduce risks, while feedback loops ensure ongoing improvement and contextual relevance.

Looking Ahead: The Future of Private, Capable AI

Apple’s foundation models mark a major step forward for privacy-first, efficient AI. By equipping developers with powerful, accessible tools while maintaining a strong ethical stance, Apple is shaping a future in which advanced AI features are widely available without compromising privacy or responsibility.

Apple Foundation Models 2025: A Leap Forward in Private, Powerful AI