This research introduces LLMVoX, a lightweight, autoregressive streaming Text-to-Speech (TTS) model designed to generate high-quality speech with minimal latency. The model preserves original large language model (LLM) capabilities by fully decoupling speech synthesis from text generation.

https://arxiv.org/abs/2503.04724

Key Takeaways

- LLMVoX is a lightweight (30M parameters), autoregressive, streaming text-to-speech (TTS) framework.

- LLMVoX achieves a much lower Word Error Rate (WER) than existing speech-enabled LLMs while keeping latency low.

- It reaches a WER of 3.70% and a UTMOS of 4.05, indicating accurate and natural-sounding speech.

- Its latency (475 ms) is far lower than that of cascaded TTS systems such as XTTS (4200 ms).

- It maintains the linguistic capabilities of the base LLM without fine-tuning.

- The framework generalizes to new languages with minimal dataset adjustments.

- It has been integrated into multimodal pipelines that combine speech, text, and vision.

- Potential applications include real-time voice assistants and dialogue systems, interactive multimodal AI systems, and multilingual speech interfaces.

- Its low latency supports real-time interaction, which matters for user engagement and application responsiveness.

Overview

The field of conversational AI has been transformed by Large Language Models (LLMs), initially developed for text-based interactions. Recently, efforts have been made to expand these interactions into speech-to-speech dialogue systems, allowing more natural human-machine communication.

However, current speech-enabled LLMs often involve extensive fine-tuning with speech data, negatively impacting the original linguistic capabilities of the LLMs and significantly increasing computational and data demands.

To address these issues, the authors propose LLMVoX, an LLM-agnostic, lightweight, autoregressive streaming TTS model designed to operate independently from the underlying LLM, thereby preserving its original performance.

LLMVoX employs a multi-queue token streaming system, allowing speech generation to occur concurrently with text generation from the LLM, significantly reducing latency.

The system leverages a lightweight Transformer architecture (30 million parameters) optimized for discrete acoustic token prediction, offering a streamlined integration that can be adapted to various tasks and languages.

This architecture takes embeddings from a ByT5-based Grapheme-to-Phoneme (G2P) model, which brings phonetic information into the speech synthesis pipeline without an explicit phoneme prediction step. Skipping that step reduces computation while helping pronunciation stay accurate across multiple languages.
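To make "byte-level" concrete, here is a minimal sketch of how text can be turned into byte IDs before an embedding lookup. It assumes the ByT5 convention of reserving IDs 0 to 2 for special tokens (padding, end-of-sequence, unknown), so each UTF-8 byte is shifted by 3. The helper name `byte_token_ids` is hypothetical, and this is an illustration rather than LLMVoX's actual code.

```python
# Illustrative sketch: byte-level tokenization in the style of ByT5.
# Assumption: IDs 0-2 are reserved for special tokens (pad, eos, unk),
# so every UTF-8 byte value is shifted by 3.
SPECIAL_TOKEN_OFFSET = 3
EOS_ID = 1


def byte_token_ids(text: str, add_eos: bool = True) -> list:
    """Map text to byte-level token IDs (hypothetical helper)."""
    ids = [b + SPECIAL_TOKEN_OFFSET for b in text.encode("utf-8")]
    if add_eos:
        ids.append(EOS_ID)
    return ids


english = byte_token_ids("hi")
arabic = byte_token_ids("مرحبا", add_eos=False)
print(english)      # [107, 108, 1]
print(len(arabic))  # 10: each of the five Arabic letters is two bytes
```

Because the vocabulary is just the set of possible byte values, the same tokenizer covers English and Arabic without a language-specific word list, which is one plausible reason a byte-level front end suits multilingual extension.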

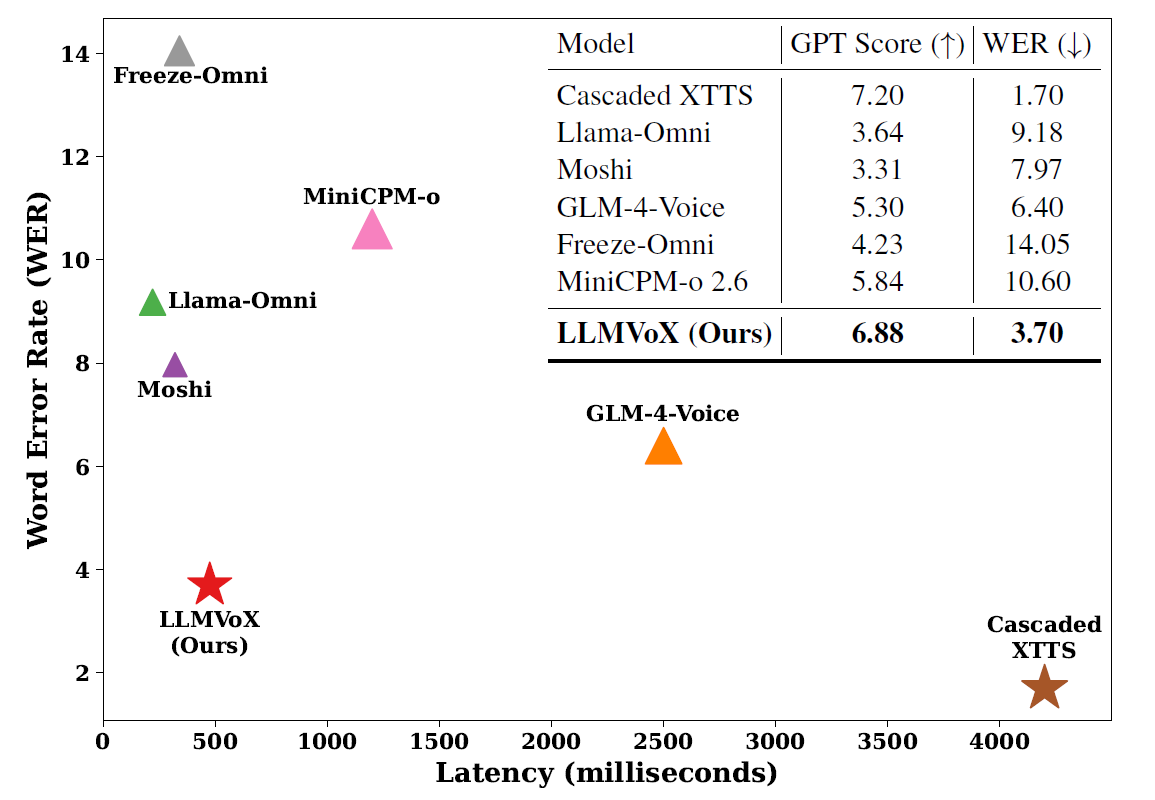

Figure 1: Speech quality (WER) vs latency (milliseconds) comparison of recent speech-enabled LLMs. Our LLMVoX is LLM-agnostic streaming TTS that generates high-quality speech (lower WER) comparable to XTTS (Casanova et al., 2024) while operating 10× faster. In the plot, △ represents LLM-dependent methods, and ⋆ denotes LLM-agnostic methods. The size of each symbol is proportional to the GPT score, indicating overall response quality. All methods are evaluated under similar settings and use similarly sized base LLMs.

Introducing LLMVoX

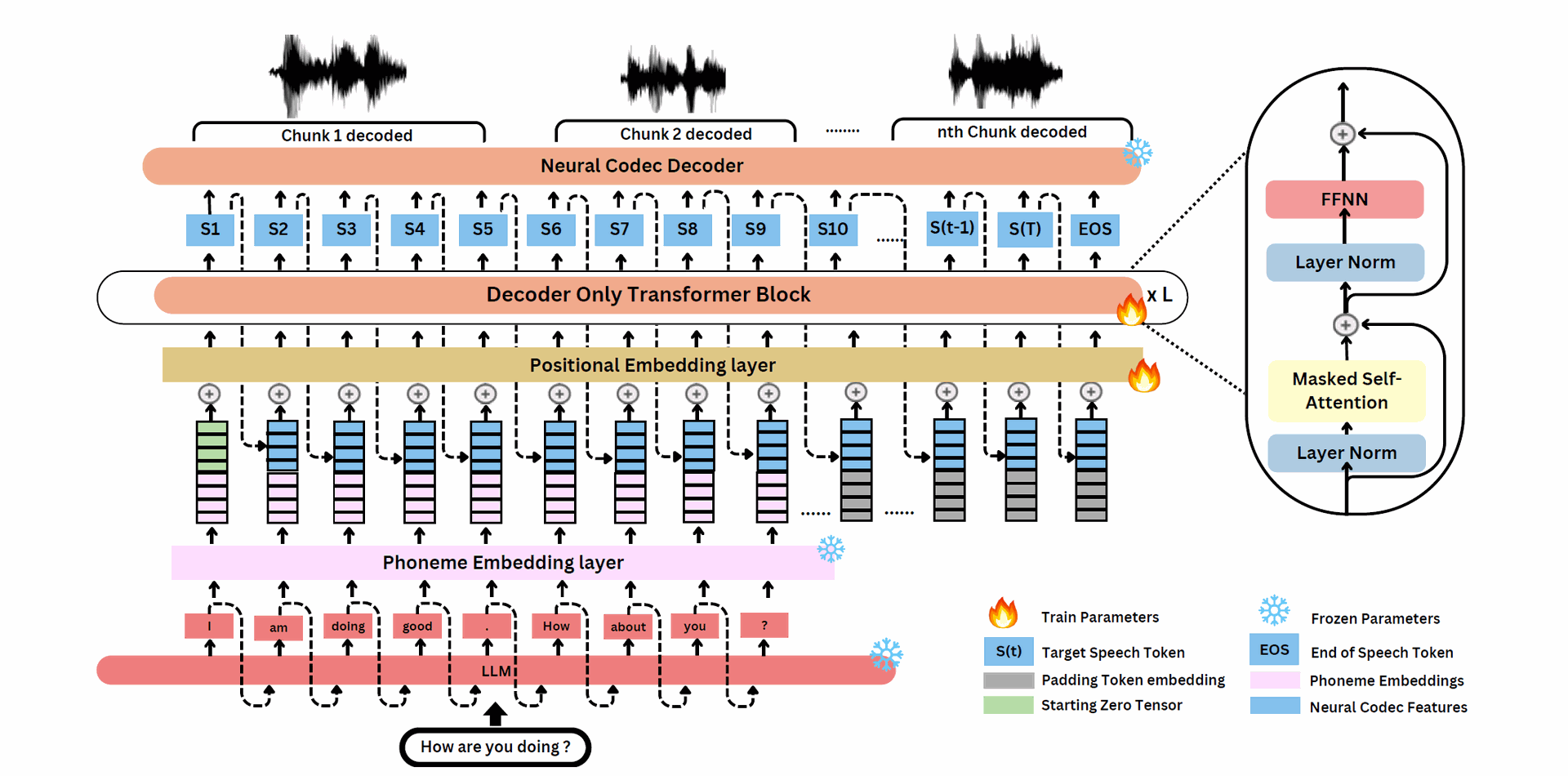

The LLMVoX architecture utilizes a Transformer-based autoregressive model, designed for real-time streaming TTS. At its core, LLMVoX predicts speech tokens sequentially based on phoneme-aware embeddings derived from a Byte-Level Grapheme-to-Phoneme (G2P) model.

By combining these phonetic embeddings with acoustic features from previously generated speech tokens, the system produces more natural-sounding audio. LLMVoX pairs discrete neural audio tokenization with an autoregressive Transformer decoder, enabling efficient, high-quality speech synthesis.

Figure 2: Overview of the proposed architecture. Text from the LLM is tokenized via a ByT5-based Grapheme-to-Phoneme (G2P) model, producing byte-level phoneme embeddings (teal). These are concatenated with the previous speech token’s feature vector (blue), L2-normalized, and fed into a decoder-only Transformer to generate the next token. A neural codec (WavTokenizer) decoder (orange) reconstructs speech every n speech tokens predicted.
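The data flow described in Figure 2 can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not the authors' implementation: `predict_next` stands in for the decoder-only Transformer, `feature_of` stands in for the codec's token-to-feature lookup, the all-zero start feature is assumed, and the loop assumes one input position per predicted speech token.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit Euclidean length (zero vectors pass through)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def build_decoder_input(phoneme_emb, prev_speech_feat):
    """Concatenate the phoneme embedding with the previous speech token's
    feature vector, then L2-normalize the combined vector."""
    return l2_normalize(list(phoneme_emb) + list(prev_speech_feat))


def stream_speech_tokens(phoneme_embs, predict_next, feature_of,
                         feat_dim, chunk_size):
    """Predict speech tokens one at a time and yield them in chunks.

    Each yielded chunk marks a point where a real system would call the
    neural codec decoder to reconstruct a short piece of audio.
    """
    prev_feat = [0.0] * feat_dim  # assumed start-of-speech feature
    chunk = []
    for emb in phoneme_embs:
        token = predict_next(build_decoder_input(emb, prev_feat))
        prev_feat = feature_of(token)
        chunk.append(token)
        if len(chunk) == chunk_size:
            yield chunk
            chunk = []
    if chunk:  # flush the final partial chunk
        yield chunk


# Toy stand-ins (hypothetical): a real model and codec would replace these.
def toy_predict(x):
    return int(abs(sum(x)) * 1000) % 16


def toy_feature(token):
    return [float(token), 1.0]


embs = [[0.1 * i, 0.2] for i in range(1, 6)]
chunks = list(stream_speech_tokens(embs, toy_predict, toy_feature,
                                   feat_dim=2, chunk_size=2))
print([len(c) for c in chunks])  # [2, 2, 1]
```

Yielding a chunk every `chunk_size` tokens is what lets audio start playing before the full sentence has been synthesized; a smaller chunk lowers the time to first audio at the cost of more codec calls.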

The multi-queue streaming design plays a critical role, allowing parallel text-to-speech processing and continuous speech output with minimal latency. Two speech synthesis modules alternate between sentences, decoding text from separate FIFO (First In First Out) queues, significantly improving the fluency and responsiveness of speech generation.
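A minimal sketch of the alternating two-queue idea, using Python's standard `queue` and `threading` modules, is shown below. The sentence splitter, the `synthesize` callback, and the function names are all assumptions made for illustration; the actual system's queue layout and chunking policy may differ.

```python
import queue
import threading

SENTENCE_END = (".", "!", "?")
STOP = None  # sentinel that tells a worker to exit


def split_sentences(text_pieces):
    """Group streamed text pieces into sentences at end punctuation."""
    buf = ""
    for piece in text_pieces:
        buf += piece
        if buf.rstrip().endswith(SENTENCE_END):
            yield buf.strip()
            buf = ""
    if buf.strip():
        yield buf.strip()


def tts_worker(in_q, out_q, synthesize):
    """Consume sentences from one FIFO queue and emit audio to another."""
    while True:
        sentence = in_q.get()
        if sentence is STOP:
            break
        out_q.put(synthesize(sentence))


def run_pipeline(text_pieces, synthesize):
    """Dispatch sentences alternately to two TTS workers, then read the
    results back in the same alternating order to preserve sequence."""
    in_queues = [queue.Queue(), queue.Queue()]
    out_queues = [queue.Queue(), queue.Queue()]
    workers = [
        threading.Thread(target=tts_worker,
                         args=(in_queues[i], out_queues[i], synthesize))
        for i in range(2)
    ]
    for w in workers:
        w.start()
    n = 0
    for sentence in split_sentences(text_pieces):
        in_queues[n % 2].put(sentence)  # sentence 0 -> worker 0, 1 -> worker 1, ...
        n += 1
    for q in in_queues:
        q.put(STOP)
    # Playback order mirrors dispatch order, so no sorting is needed.
    audio = [out_queues[i % 2].get() for i in range(n)]
    for w in workers:
        w.join()
    return audio


pieces = ["Hello", " world.", " How", " are", " you?", " Fine", "."]
result = run_pipeline(pieces, lambda s: f"<audio:{s}>")
print(result)
```

While one worker is still synthesizing its sentence, the other can already start on the next one, so playback of sentence k can overlap with synthesis of sentence k+1. For brevity this sketch collects audio after dispatch finishes; a real-time system would run playback concurrently with dispatch.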

For training, the authors used the VoiceAssistant-400K dataset, comprising over 2,200 hours of single-speaker English speech, plus 1,500 hours of synthesized Arabic speech for multilingual support. The model itself is a decoder-only Transformer with 4 layers, paired with a neural audio codec that discretizes continuous audio waveforms for efficient tokenization and reconstruction.

Why it's Important

LLMVoX's design fundamentally changes how speech synthesis integrates with conversational AI systems by maintaining linguistic accuracy without compromising real-time performance.

Its capability to adapt to various languages and multimodal contexts without extensive retraining has substantial implications for global accessibility and usability.

In practical terms, LLMVoX can significantly enhance user interactions with AI across voice assistant technologies, accessibility technologies, interactive educational tools, and multilingual applications, where clear communication and responsiveness are essential.

Moreover, the system's independence from LLM modifications safeguards the core reasoning and linguistic richness of existing LLMs. This preservation is essential for maintaining the fidelity of conversational AI applications, particularly those involving critical communication, such as healthcare and customer support.

Enhanced User Experience: By enabling real-time, natural-sounding speech interactions, LLMVoX improves the accessibility and usability of AI systems, making them more intuitive and engaging for users.

Scalability and Flexibility: The LLM-agnostic and plug-and-play nature of LLMVoX allows it to be integrated with various LLMs without extensive retraining, facilitating rapid deployment across different applications and platforms.

Multilingual and Multimodal Capabilities: LLMVoX's ability to adapt to multiple languages and integrate with vision-language models expands its applicability in global and diverse contexts, supporting a wide range of use cases from multilingual customer support to interactive educational tools.

Resource Efficiency: The lightweight architecture of LLMVoX reduces computational overhead, making it suitable for deployment in environments with limited resources, such as mobile devices or edge computing scenarios.

Summary of Results and Experiments

The researchers evaluated LLMVoX across several tasks, including general question answering, knowledge retention, speech quality, and alignment between synthesized speech and generated text. Comparisons with prominent models such as XTTS, Moshi, GLM-4-Voice, and Freeze-Omni showed that LLMVoX delivers superior or competitive performance, with a particularly strong balance between speech quality and low latency.

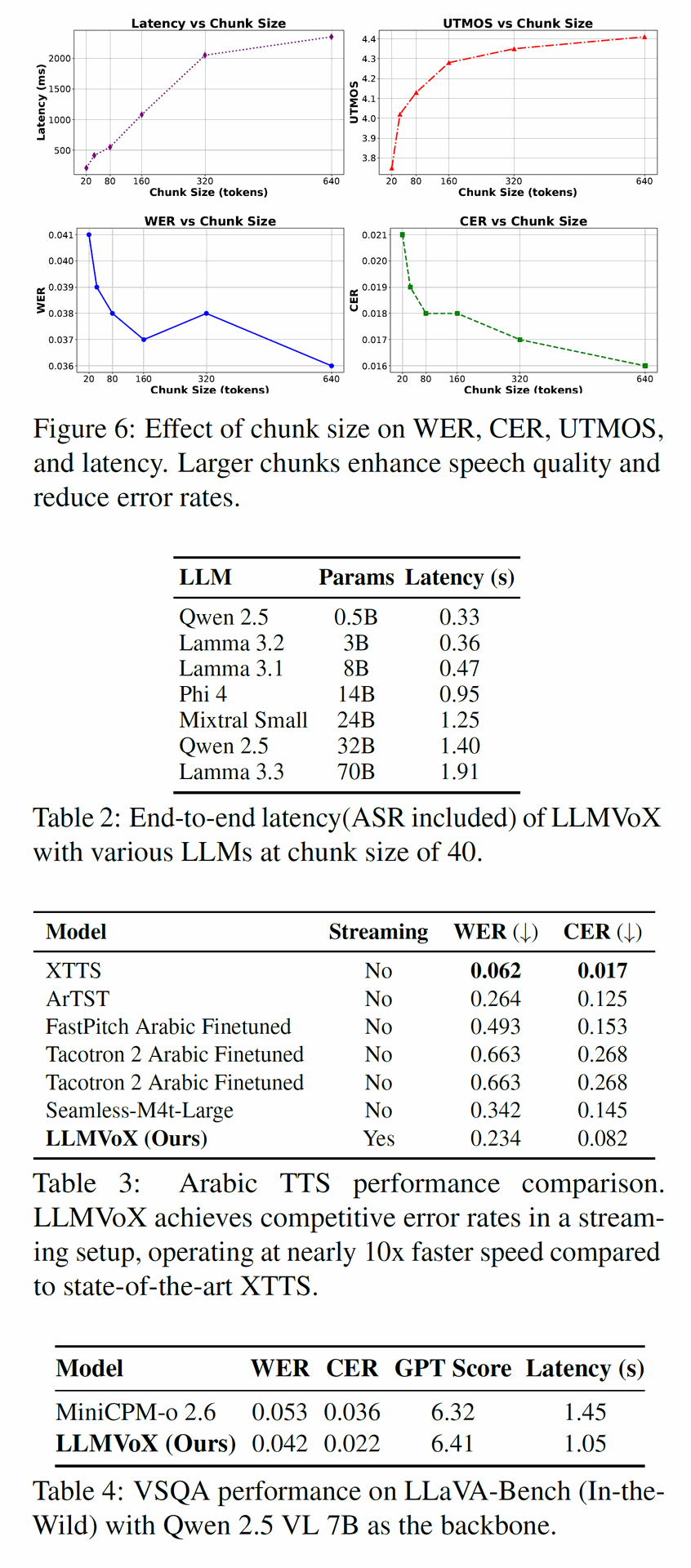

Results indicate that LLMVoX achieves a remarkably low WER of 3.70%, compared to much higher rates from competitors like Freeze-Omni (14.05%) and Llama-Omni (9.18%). Its latency was impressively low (475 ms), starkly contrasting with cascaded TTS methods, such as XTTS (4200 ms). Additionally, its UTMOS, a measure of speech naturalness, was high at 4.05, reinforcing its excellent speech quality.
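For readers unfamiliar with the metric, WER is the word-level edit distance between a reference text and a hypothesis, divided by the number of reference words. The sketch below is a generic implementation, not the paper's evaluation script; it assumes the hypothesis is a transcript of the synthesized speech, and the lowercasing and whitespace splitting are simplifying choices. The same edit distance over characters gives the Character Error Rate (CER).

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (words or characters)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def word_error_rate(reference, hypothesis):
    """WER = word-level edit distance / number of reference words."""
    ref_words = reference.lower().split()
    hyp_words = hypothesis.lower().split()
    if not ref_words:
        raise ValueError("reference must contain at least one word")
    return edit_distance(ref_words, hyp_words) / len(ref_words)


def char_error_rate(reference, hypothesis):
    """CER = character-level edit distance / number of reference characters."""
    if not reference:
        raise ValueError("reference must not be empty")
    return edit_distance(list(reference), list(hypothesis)) / len(reference)


# One dropped word out of six reference words.
wer = word_error_rate("the cat sat on the mat", "the cat sat on mat")
print(f"WER: {wer:.2%}")  # WER: 16.67%
```

On this scale, a WER of 3.70% corresponds to roughly one word error for every 27 reference words.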

Experiments demonstrated adaptability across languages, specifically highlighted by robust Arabic speech generation, where LLMVoX achieved a Character Error Rate (CER) of approximately 8.2%, competitive with non-streaming Arabic TTS models, yet significantly faster.

Further experimentation explored its adaptability to Visual Speech Question Answering (VSQA), where LLMVoX outperformed MiniCPM-o 2.6 by showing lower WER and comparable GPT scores, emphasizing its applicability to multimodal tasks.

Conclusion

LLMVoX represents a significant advancement in streaming speech synthesis, demonstrating superior performance in terms of latency, speech quality, and multilingual capabilities. Its plug-and-play nature, coupled with minimal computational demands, positions it ideally for widespread adoption across diverse AI-powered conversational applications, paving the way for a more accessible and interactive AI future.
