The quest for automated scientific discovery has evolved significantly over the last decade thanks to AI. Yet modern AI, particularly large language models (LLMs), still struggles with structured reasoning and symbolic abstraction.

While LLMs excel in generating fluent text and recalling information, they often lack the explicit intermediate reasoning process inherent to human scientific thinking, limiting their capacity for self-awareness and critical reflection.

Graph-PRefLexOR (Graph-based Preference-based Recursive Language Modeling for Exploratory Optimization of Reasoning) extends transformer-based LLMs with explicit graph construction, symbolic abstraction, and recursive refinement.

This framework aims to enable interpretable, multistep reasoning, thereby accelerating scientific discovery by mimicking the iterative and reflective nature of scientific inquiry.

Key Takeaways

- Enhanced Reasoning Capabilities: Graph-PRefLexOR extends traditional transformer-based LLMs by integrating explicit graph construction, symbolic abstraction, and recursive refinement during the answer generation process.

- Structured Reasoning: Reasoning is formally defined as a structured mapping from a user task (T) to a knowledge graph (G), abstract patterns (P), and a final answer (A). In this framework, concepts are represented as nodes and relationships as edges, facilitating hierarchical inference and uncovering nuanced relationships.

- Small Model Performance: A 3-billion-parameter Graph-PRefLexOR model has demonstrated superior performance compared to standard LLMs in terms of reasoning depth, structural coherence, and applicability across various tasks.

- Cross-Domain Generalization: The framework exhibits remarkable ability to generalize beyond its training domains, showcasing creative reasoning such as linking mythological concepts (e.g., "thin places") to materials science.

- Knowledge Expansion Strategy: It introduces a "knowledge garden growth" strategy, allowing for the automated, dynamic, and iterative expansion of knowledge graphs and the fostering of interdisciplinary insights.

- Interpretability and Transparency: By explicitly constructing graph-based intermediate representations, Graph-PRefLexOR makes the reasoning process more interpretable and explainable, a crucial feature for trustworthiness and validation in scientific applications.

- Diverse Applications: Potential real-world applications span sustainable materials design, bioinspired robotics, targeted drug delivery, climate-resilient infrastructure, and synthetic biology, reflecting its versatility for complex interdisciplinary problems.

Overview

Graph-PRefLexOR addresses the limited domain expertise of LLMs by extending transformer-based models with explicit graph construction, symbolic abstraction, and recursive refinement during answer generation.

Graph-PRefLexOR distinguishes itself from existing approaches like Chain-of-Thought (CoT) prompting, Tree-of-Thought, and Self-Refine by explicitly constructing knowledge graphs and symbolic patterns during inference, enabling structured generalization and interpretability.

Unlike prior graph-enhanced models that focus on retrieval or attention, Graph-PRefLexOR integrates recursive symbolic reasoning directly into the language generation pipeline, allowing for native, in situ graph-based thought processes within LLMs. This architecture enables interpretable, multistep reasoning, a crucial step towards general scientific discovery through AI.

The framework draws inspiration from reinforcement learning, a machine learning paradigm where a model learns to make decisions by performing actions to maximize a reward, and category theory, a branch of mathematics that emphasizes how objects relate to each other (morphisms) rather than their internal details.

Category theory is a powerful tool for constructing models of complex phenomena by focusing on relational aspects, revealing recurring patterns across diverse domains like nature, human organizations, and science.

For instance, the hierarchical structure of bone, which combines stiffness and toughness, has inspired materials design in aerospace and architecture. Similarly, Newton's second law (F=ma) and Ohm's law (V=IR) are isomorphic, meaning they share a fundamental structural equivalence, both reducible to the general form y=kx. This ability to identify isomorphisms allows for transferring insights across seemingly unrelated phenomena, simplifying complex systems and aiding in the discovery of universal laws.
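To make the idea of structural equivalence concrete, the following is a minimal sketch, not taken from the paper, that encodes both laws as tiny labeled graphs and searches for a relabeling that maps one onto the other. The edge labels `COEFFICIENT` and `INPUT` and the function name `find_isomorphism` are illustrative assumptions, and the brute-force search over permutations is only practical for very small graphs.

```python
from itertools import permutations


def find_isomorphism(edges_a, edges_b):
    """Return a node mapping that turns edges_a into edges_b, or None.

    Edges are (source, relation, target) triples. This is a brute-force
    search and is only meant for graphs with a handful of nodes.
    """
    nodes_a = sorted({n for s, _, t in edges_a for n in (s, t)})
    nodes_b = sorted({n for s, _, t in edges_b for n in (s, t)})
    if len(nodes_a) != len(nodes_b) or len(set(edges_a)) != len(set(edges_b)):
        return None
    target = set(edges_b)
    for candidate in permutations(nodes_b):
        mapping = dict(zip(nodes_a, candidate))
        mapped = {(mapping[s], rel, mapping[t]) for s, rel, t in edges_a}
        if mapped == target:
            return mapping
    return None


# Both laws share the form y = k * x (labels are hypothetical).
newton = [("m", "COEFFICIENT", "F"), ("a", "INPUT", "F")]
ohm = [("R", "COEFFICIENT", "V"), ("I", "INPUT", "V")]
chain = [("x", "INPUT", "y"), ("y", "INPUT", "z")]

mapping = find_isomorphism(newton, ohm)
no_mapping = find_isomorphism(newton, chain)
```

Here `mapping` pairs force with voltage, mass with resistance, and acceleration with current, while the unrelated chain graph yields no mapping.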

To computationally model isomorphisms, the framework leverages Graph Isomorphism Networks (GINs), a type of graph neural network (GNN) well-suited for graph-structured data where nodes represent objects and edges represent relationships.

The authors highlight that recent research suggests transformers implicitly function as GINs, providing a powerful basis for explicitly integrating graph-based reasoning into these architectures. Graph-PRefLexOR utilizes a standard transformer, endowing it with explicit capabilities for in situ graph reasoning and symbolic deduction.

The foundation of Graph-PRefLexOR is its predecessor, PRefLexOR (Preference-based Recursive Language Modeling for Exploratory Optimization of Reasoning). PRefLexOR introduced a "thinking before answering" strategy, leveraging reinforcement learning (specifically Odds Ratio Preference Optimization (ORPO) and Efficient Exact Optimization (EXO)) to refine reasoning through iterative reflection and dynamic feedback loops.

This is achieved through the introduction of special tokens <|thinking|> to mark intermediate reasoning steps, and <|reflect|> for evaluation by a critic model. Graph-PRefLexOR expands this concept by explicitly incorporating graph-based reasoning into the "thinking phase".
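As a rough illustration of how such tokens can be used downstream, the sketch below pulls a tagged section out of a generated string. The opening tokens come from the text above; the closing tokens of the form `<|/thinking|>` and the helper name `extract_section` are assumptions made for this example.

```python
import re


def extract_section(text, tag):
    """Return the text between <|tag|> and <|/tag|>, or None if absent.

    The closing-token format is an assumption for this sketch.
    """
    pattern = re.compile(
        r"<\|" + re.escape(tag) + r"\|>(.*?)<\|/" + re.escape(tag) + r"\|>",
        re.DOTALL,
    )
    match = pattern.search(text)
    return match.group(1).strip() if match else None


# Hypothetical model output used only to exercise the helper.
sample_output = (
    "<|thinking|>\n"
    "Music -[INFLUENCES]-> Material\n"
    "<|/thinking|>\n"
    "Final answer: tune material properties with sound."
)

thinking = extract_section(sample_output, "thinking")
reflection = extract_section(sample_output, "reflect")
```

Separating the thinking section this way is what lets a critic inspect the intermediate reasoning independently of the final answer.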

The core of Graph-PRefLexOR's approach is formalizing reasoning as a structured mapping. The knowledge graph, G=(V, E), represents concepts as nodes (V) and relationships as directed edges (E), utilizing common relational descriptions such as "IS-A," "RELATES-TO," and "INFLUENCES".
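A minimal sketch of such a graph as a data structure might look as follows. The class name `KnowledgeGraph`, the choice to reject relations outside the three named above, and the example triples are all assumptions for illustration; the framework itself expresses graphs as generated text rather than Python objects.

```python
from dataclasses import dataclass, field

# Restricting to these three labels is an assumption; the text lists them
# only as common examples.
ALLOWED_RELATIONS = {"IS-A", "RELATES-TO", "INFLUENCES"}


@dataclass
class KnowledgeGraph:
    nodes: set = field(default_factory=set)
    edges: set = field(default_factory=set)  # (source, relation, target)

    def add_edge(self, source, relation, target):
        """Add a directed, labeled edge and register both endpoints."""
        if relation not in ALLOWED_RELATIONS:
            raise ValueError(f"unknown relation: {relation}")
        self.nodes.update((source, target))
        self.edges.add((source, relation, target))

    def successors(self, node, relation=None):
        """Return targets reachable from node, optionally filtered by relation."""
        return sorted(
            t for s, r, t in self.edges
            if s == node and (relation is None or r == relation)
        )


# Illustrative triples, not taken from the paper.
graph = KnowledgeGraph()
graph.add_edge("Spider Silk", "IS-A", "Protein Material")
graph.add_edge("Spider Silk", "RELATES-TO", "Beta-Sheet Crystals")
graph.add_edge("Beta-Sheet Crystals", "INFLUENCES", "Toughness")
```

Storing edges as triples keeps the relation label attached to each connection, which is what later abstraction steps operate on.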

By explicitly constructing and abstracting these relational graphs, the model can encode structural information that standard next-token prediction in LLMs often overlooks or treats implicitly. This graph-driven approach ensures that entities and their relationships are first-class objects in the learned representation, making it easier for the model to discover universal features (like repeated subgraphs or underlying algebraic forms) and apply symbolic abstractions.

Why It’s Important

Graph-PRefLexOR is a significant AI advancement that integrates graph-based reasoning, symbolic abstraction, and recursive reflection into large language models (LLMs). This framework addresses key limitations of current LLMs by enabling explicit, interpretable, and multistep reasoning, which is crucial for complex problem-solving in scientific and creative domains.

It fosters self-awareness and critical reflection in AI, akin to human scientific thinking, and excels at identifying isomorphic patterns across disparate fields, promoting interdisciplinary insights and accelerating scientific discovery.

The framework's "knowledge garden" concept allows for autonomous and dynamic expansion of knowledge graphs, moving beyond isolated responses to build evolving knowledge bases. Unlike other LLM reasoning methods, Graph-PRefLexOR explicitly constructs symbolic graph representations during its "thinking phase," offering transparent and traceable analyses of how conclusions are reached.

Compared to other LLM reasoning methods like Chain-of-Thought (CoT) prompting or Self-Refine, Graph-PRefLexOR's key differentiator is its explicit integration of symbolic graph representations and formal intermediate structures directly into the language generation pipeline. This allows for "in situ graph-based thought processes" rather than merely relying on unstructured token sequences or high-level planning.

This hybrid approach, bridging connectionist and symbolic AI, enables more robust and explainable solutions for tasks like hypothesis generation and causal inference, making AI a more effective collaborative partner in research.

Summary of Results

The research evaluates Graph-PRefLexOR's capacity for structured reasoning, symbolic abstraction, recursive refinement, and generalization beyond its training data, which consisted of approximately 1000 papers on biological and bio-inspired materials.

The model was fine-tuned on top of the meta-llama/Llama-3.2-3B-Instruct LLM using a multistage training process involving Odds Ratio Preference Optimization (ORPO) and Efficient Exact Optimization (EXO). A key aspect of the training was the on-the-fly generation of structured data, teaching the model to explicitly construct knowledge graphs and symbolic representations within its thinking phase.
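For readers unfamiliar with ORPO, the sketch below computes the odds-ratio penalty as it is commonly described for that method: the model is rewarded when the odds of the preferred response exceed the odds of the rejected one. This is a simplified scalar illustration under that assumption, not the training code used for Graph-PRefLexOR; in the full objective this term is weighted and added to a standard supervised loss, and the inputs would be length-normalized sequence log-probabilities.

```python
import math


def log_odds(log_p):
    """Return log(p / (1 - p)) given log p, for a probability 0 < p < 1."""
    return log_p - math.log1p(-math.exp(log_p))


def odds_ratio_loss(log_p_chosen, log_p_rejected):
    """Return -log(sigmoid(log-odds(chosen) - log-odds(rejected)))."""
    margin = log_odds(log_p_chosen) - log_odds(log_p_rejected)
    # -log(sigmoid(m)) equals log(1 + exp(-m)).
    return math.log1p(math.exp(-margin))


# Hypothetical log-probabilities for a preferred and a rejected response.
loss_good = odds_ratio_loss(-0.2, -1.5)  # preferred response is more likely
loss_tied = odds_ratio_loss(-0.5, -0.5)  # no preference expressed yet
loss_bad = odds_ratio_loss(-1.5, -0.2)   # rejected response is more likely
```

The loss shrinks as the preferred response becomes relatively more likely, which is the signal that pushes the model toward the structured reasoning traces favored during training.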

Key Architectural Components:

Graph-PRefLexOR's core process involves a structured "thinking phase" before generating an answer.

This phase includes:

- Knowledge Graph Construction: Concepts extracted from the input context are represented as nodes (V), and relationships (such as IS-A, RELATES-TO, INFLUENCES) are encoded as directed edges (E).

- Abstract Pattern Generation: Higher-order dependencies within the knowledge graph are summarized into symbolic representations, often as a sequence of transformations with proportional relationships.

- Retrieval-Augmented Generation (RAG): This technique is used during training to enrich the input context by retrieving relevant information from a knowledge index (the raw scientific papers). This ensures that the generated answers and reasoning steps incorporate a global perspective and deep connections to the training corpus.

- Recursive Refinement: The model employs a multi-agent feedback loop, where a "critic" agent evaluates the intermediate reasoning (thinking phase) and provides feedback for improvement, leading to iteratively refined responses.
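The abstract pattern step can be pictured as replacing concrete node names with symbols while keeping the relational structure. The sketch below is one simple way to do that, assuming symbols are assigned in order of first appearance; the function name `abstract_pattern` and the example triples are hypothetical and do not reproduce the model's own procedure.

```python
GREEK = "αβγδεζηθ"


def abstract_pattern(edges):
    """Map each node to a Greek symbol and rewrite the edges symbolically."""
    symbols = {}
    for source, _, target in edges:
        for node in (source, target):
            if node not in symbols:
                if len(symbols) >= len(GREEK):
                    raise ValueError("too many nodes for this toy symbol set")
                symbols[node] = GREEK[len(symbols)]
    pattern = [f"{symbols[s]} -[{r}]-> {symbols[t]}" for s, r, t in edges]
    return symbols, pattern


# Illustrative triples based on the bone example mentioned earlier.
edges = [
    ("Hierarchical Structure", "INFLUENCES", "Stiffness"),
    ("Hierarchical Structure", "INFLUENCES", "Toughness"),
]
symbols, pattern = abstract_pattern(edges)
```

Once the names are stripped away, two graphs from different domains that produce the same pattern list can be recognized as sharing a structure.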

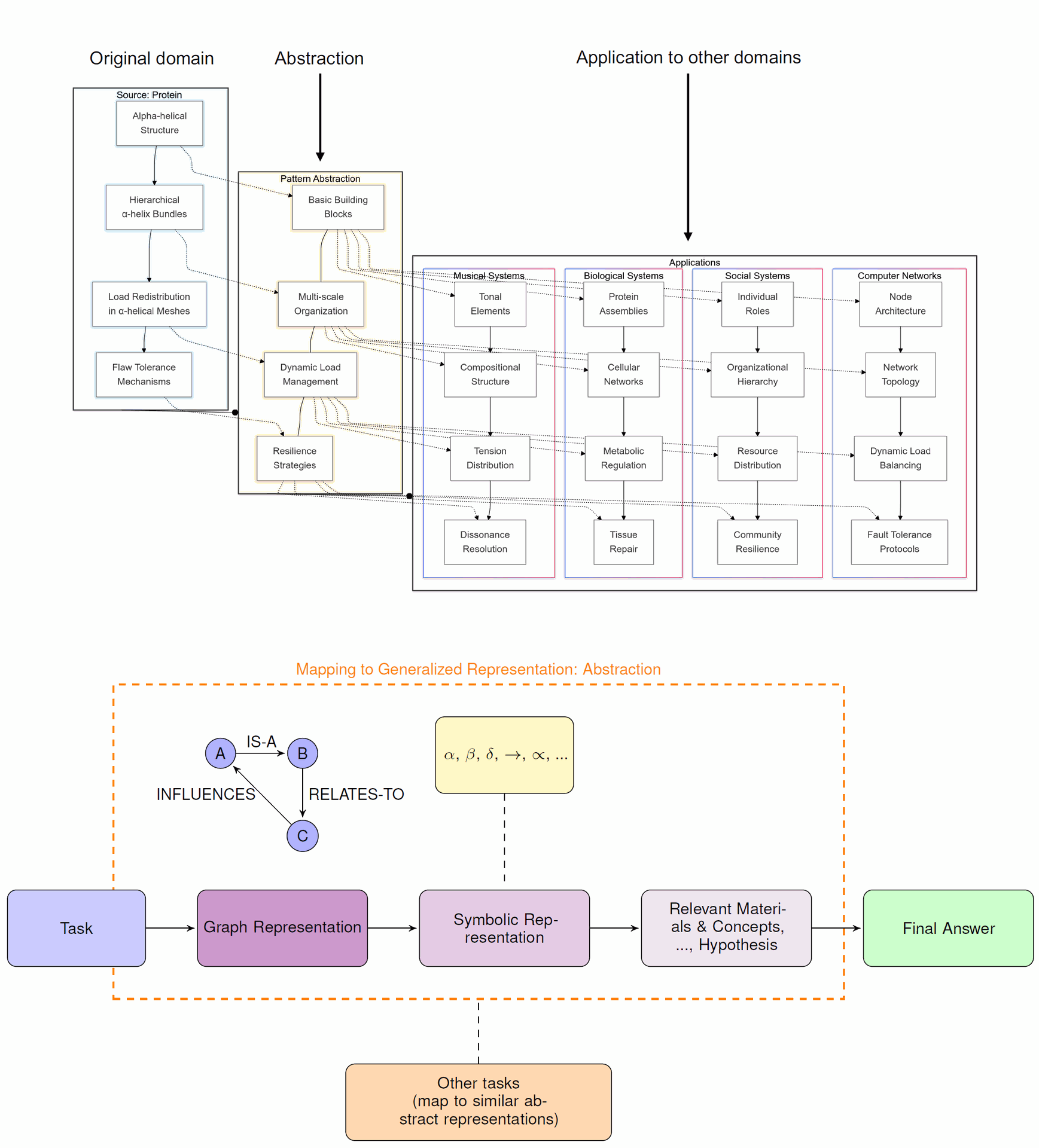

Illustration of Graph-PRefLexOR Framework: Figure 1 provides a high-level visualization of generalization through abstraction, illustrating how a phenomenon in an original domain (like protein materials fracture) is modeled as abstract patterns and then applied to describe phenomena in other domains.

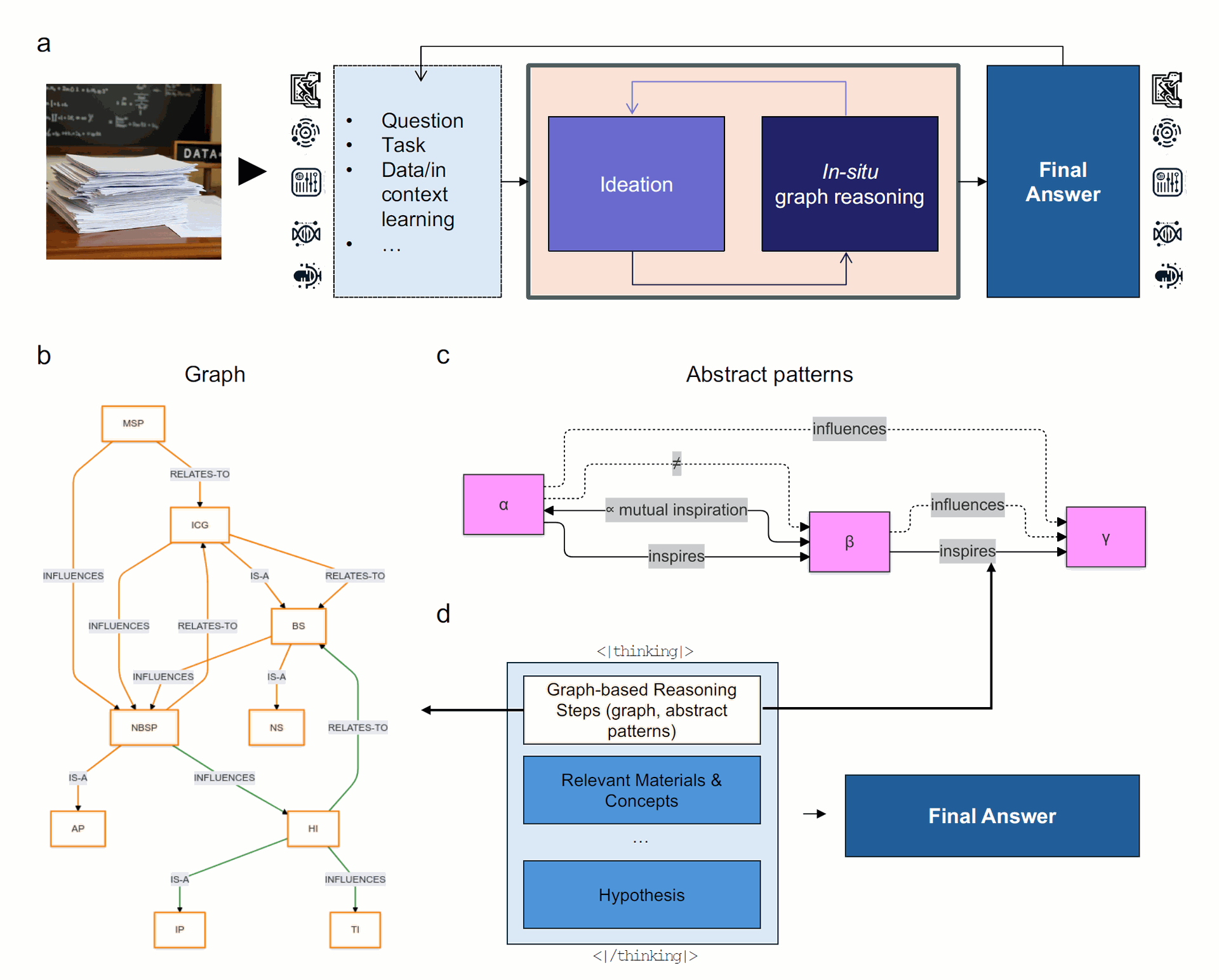

Figure 2: Overview of the Graph-PRefLexOR approach. Panels a through d cover multistep reflection, graph-based modeling of context and tasks, abstract pattern formulation, and integrated multistage reasoning mechanisms.

Experiment 1: Music and Materials Design (Single Task-Response Pair)

The first experiment challenged the model to propose a novel idea relating music and materials, a domain not explicitly covered in its materials science-focused training data.

The user task was:

"Propose a new idea to relate music and materials."

The model's response initiated with a detailed "thinking phase":

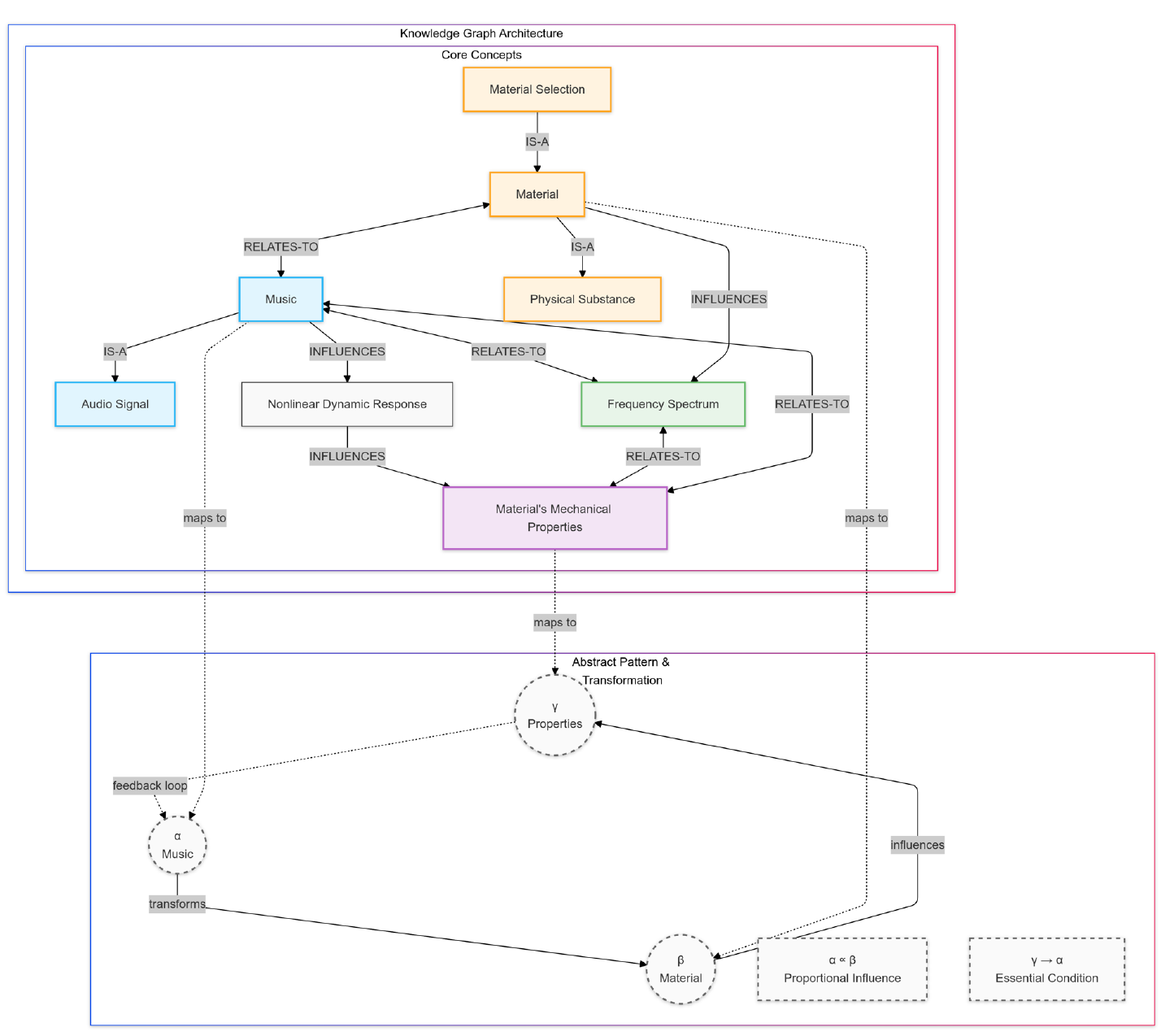

- Knowledge Graph: The model constructed a knowledge graph with concepts like "Music" (IS-A Audio Signal), "Material" (IS-A Physical Substance), "Nonlinear Dynamic Response," and "Frequency Spectrum." Relationships included IS-A, RELATES-TO, and INFLUENCES, such as "Music -[INFLUENCES]-> Nonlinear Dynamic Response -[INFLUENCES]-> Material’s Mechanical Properties".

- Abstract Pattern: It formalized the relationship as α → β → γ, where α represented Music, β Material, and γ Material’s Mechanical Properties. The key transformation rules were a proportional relationship (α ∝ β) and a feedback loop (γ → α).

- Additional Thinking Steps: This included specific "Reasoning Steps" (e.g., Music has dynamic responses on materials, analyze frequency spectrum), "Relevant Materials or Concepts" (Nonlinear Dynamic Response, Frequency Spectrum), "Design Principles" (Frequency Spectrum Analysis, Material Selection, Frequency Spectrum Manipulation, Tuning and Control), "Material Properties" (Nonlinear Dynamic Response, Frequency Dependence, Mechanical Property Tuning), a "Hypothesis," and "Additional Background".
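Chains written in the arrow notation quoted above can be turned into machine-readable triples with a few lines of code. The following is a minimal sketch; the regular expression and the function name `parse_chain` are assumptions about the notation based only on the example shown here.

```python
import re

# Matches an arrow such as " -[INFLUENCES]-> " and captures the relation label.
ARROW = re.compile(r"\s*-\[([A-Z-]+)\]->\s*")


def parse_chain(chain):
    """Split 'A -[REL]-> B -[REL]-> C' into (source, relation, target) triples."""
    parts = ARROW.split(chain.strip())
    # re.split keeps the captured labels, so parts alternates node, label, node.
    nodes, relations = parts[0::2], parts[1::2]
    return [(nodes[i], relations[i], nodes[i + 1]) for i in range(len(relations))]


chain = (
    "Music -[INFLUENCES]-> Nonlinear Dynamic Response "
    "-[INFLUENCES]-> Material's Mechanical Properties"
)
triples = parse_chain(chain)
```

The resulting triples can be loaded into any graph structure for visualization or further analysis.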

After this extensive thinking phase, the model produced a final answer proposing "Music-Inspired Material Tuning". This idea suggested tuning and controlling mechanical properties by analyzing and manipulating music's frequency spectrum, leveraging materials' dynamic responses. The proposal highlighted key concepts, design principles, potential applications (including biomedical uses like cancer or neurological disorder monitoring), and acknowledged challenges (material selection, frequency manipulation, scalability, theoretical modeling).

Figure 4: Visualization of the knowledge graph (top) and abstract reasoning pattern (bottom) for the music and materials problem, with explicit mappings between concrete and abstract elements.

The response demonstrated sophisticated formalization. The relationship between music and materials was conceptualized as a triple system with a cyclic feedback loop and proportional influence. This symmetric conceptualization, where material properties can influence musical response, was noted as a main novelty beyond treating music as a simple input.

The proposal integrates well-known concepts like nonlinear dynamic responses and frequency analysis into a comprehensive framework, suggesting practical implementations like Dynamic Mechanical Analysis (DMA) and specific property targets (damping, stiffness).

Experiment 2: Designing a Tough Protein Material (Recursive Reasoning)

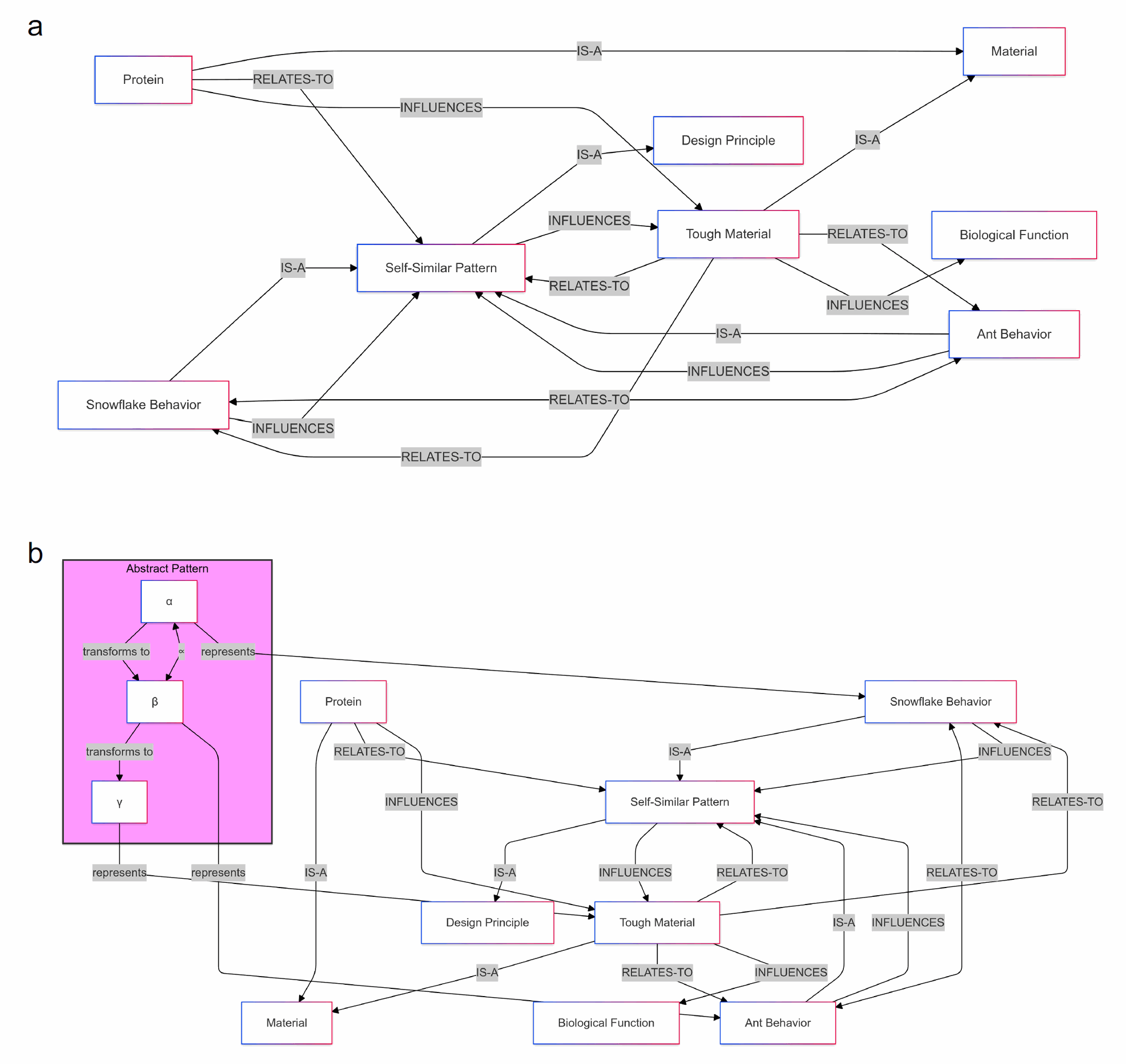

This experiment tasked the model with integrating snowflake and ant behavior to design a new tough material from protein, utilizing a recursive reasoning approach with a two-agent setup.

The user task was:

"Integrate a snowflake and ant behavior to design a new tough material made from protein."

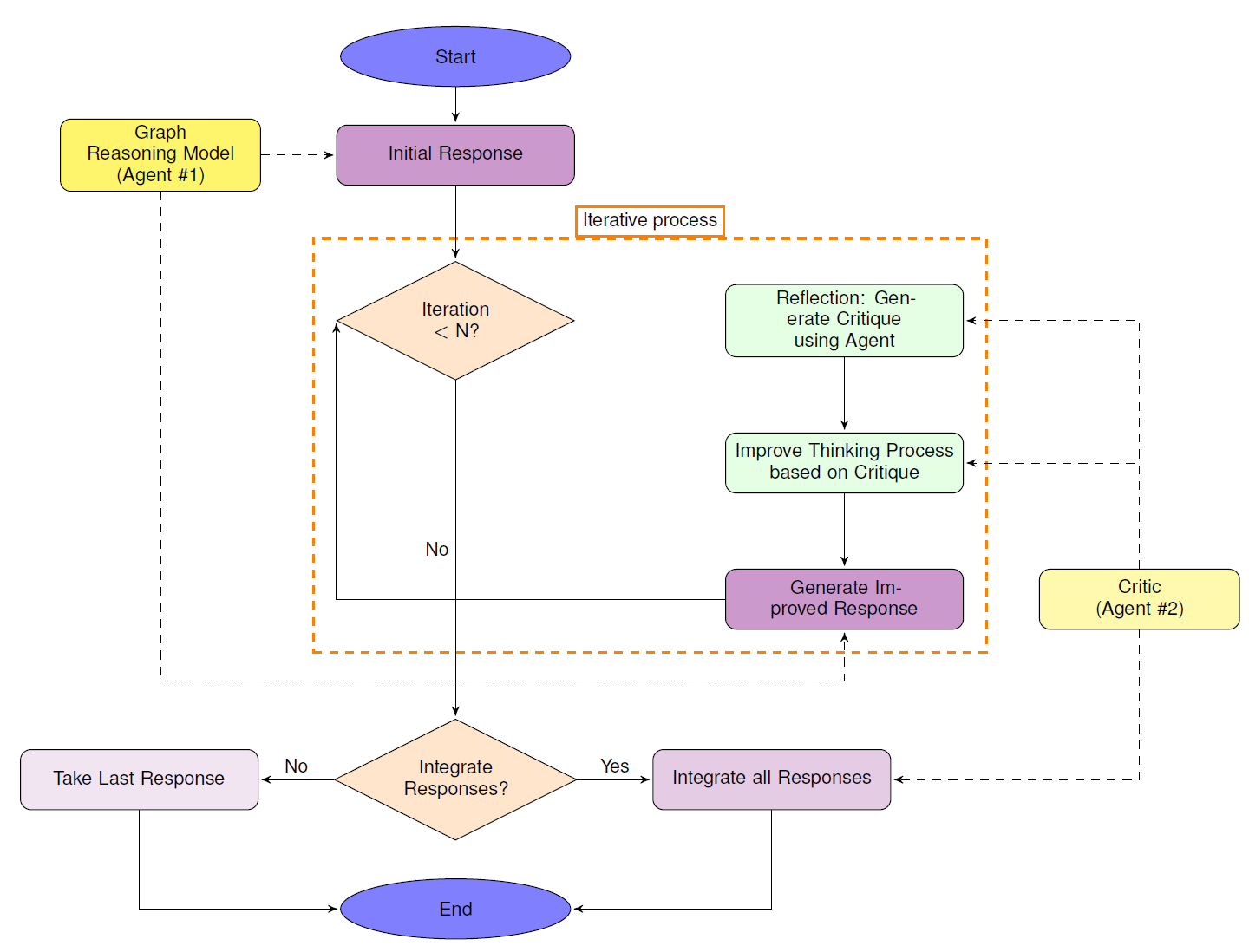

The process involved a "Graph Reasoning model" (Agent #1) and a "general-purpose critic model" (Agent #2). Agent #2 critiqued Agent #1's thinking steps, which were then refined and fed back to Agent #1. This iterative process (N=3 iterations in this example) aimed to develop an integrated, comprehensive response.
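The control flow of this two-agent loop can be sketched as below. The callables `reason`, `critique`, and `integrate` stand in for the graph reasoning model, the critic model, and the final integration step; their signatures, the toy implementations, and the exact point at which critique happens within each iteration are assumptions made for illustration.

```python
def recursive_reasoning(task, reason, critique, integrate, n_iterations=3):
    """Alternate between reasoning and critique, then integrate all answers."""
    feedback = None
    answers = []
    for step in range(n_iterations):
        thinking, answer = reason(task, feedback)
        answers.append(answer)
        if step < n_iterations - 1:
            # The critic sees only the thinking phase, not the final answer.
            feedback = critique(task, thinking)
    return integrate(task, answers)


# Toy stand-ins so the loop can run without any model.
def toy_reason(task, feedback):
    revision = 0 if feedback is None else feedback["revision"] + 1
    thinking = {"revision": revision, "graph": f"graph v{revision} for: {task}"}
    return thinking, f"answer v{revision}"


def toy_critique(task, thinking):
    return {"revision": thinking["revision"], "note": "add missing relationships"}


def toy_integrate(task, answers):
    return " | ".join(answers)


final = recursive_reasoning(
    "Design a tough protein material", toy_reason, toy_critique, toy_integrate
)
```

In a real setup each callable would wrap a model call, and the integration step would merge the intermediate answers into one response.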

Figure 5: Graph-PRefLexOR recursive reasoning algorithm. Flowchart of the multi-agent recursive reasoning process, including response generation, reflection extraction, thinking process improvement, and final integration.

The final integrated answer described a "Snowflake-Ant Inspired Protein Material" (SAIPM). This material would incorporate fractal patterns and hierarchical structures inspired by snowflakes for toughness and adaptability, and self-organization principles from ant colonies for adaptability and diversity.

The design outlined key components, a design process (designing, testing, refining), material properties (fractal pattern mimicry, hierarchical structure, self-organization, toughness enhancement, adaptability), potential applications (biomedical, biodegradable, soft robotics, biomineralization), challenges (scalability, self-organization, biocompatibility, synthesis methods), and future research directions.

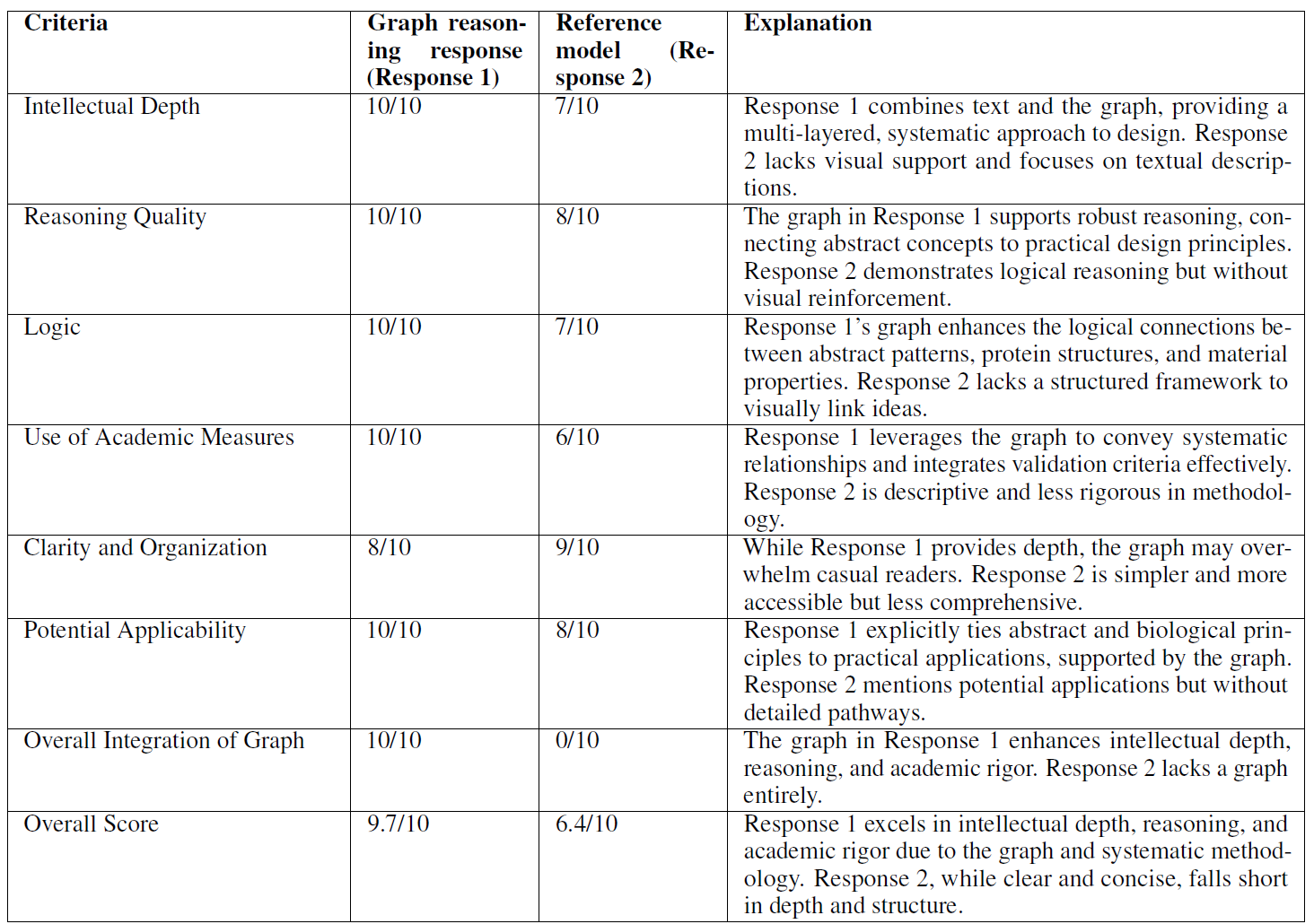

Comparison with a Standard Model (Table 1 & Figure 6): The Graph-PRefLexOR response was benchmarked against a standard (non-fine-tuned) LLM using GPT-4o as an evaluator.

Table 1: Benchmark comparison of Graph-PRefLexOR versus a non-fine-tuned baseline model, evaluated by GPT-4o.

The comparison revealed Graph-PRefLexOR's superior intellectual depth, reasoning quality, logic, and academic rigor, with an average score of 9.7/10 compared to the baseline's 6.4/10.

The graph-reasoning response provided a highly systematic framework, detailing explicit parameters, an iterative validation process, and clear delineation in graph form, aligning with graduate-level research expectations. In contrast, the standard model offered a more conceptual, broader-stroke approach, lacking methodological granularity and deep materials-specific reasoning.

Figure 6: Overview of the graph data generated for the snowflake and ant behavior task (first iteration), showing the knowledge graph alone (panel a) and its integration with the abstract pattern representation (panel b).

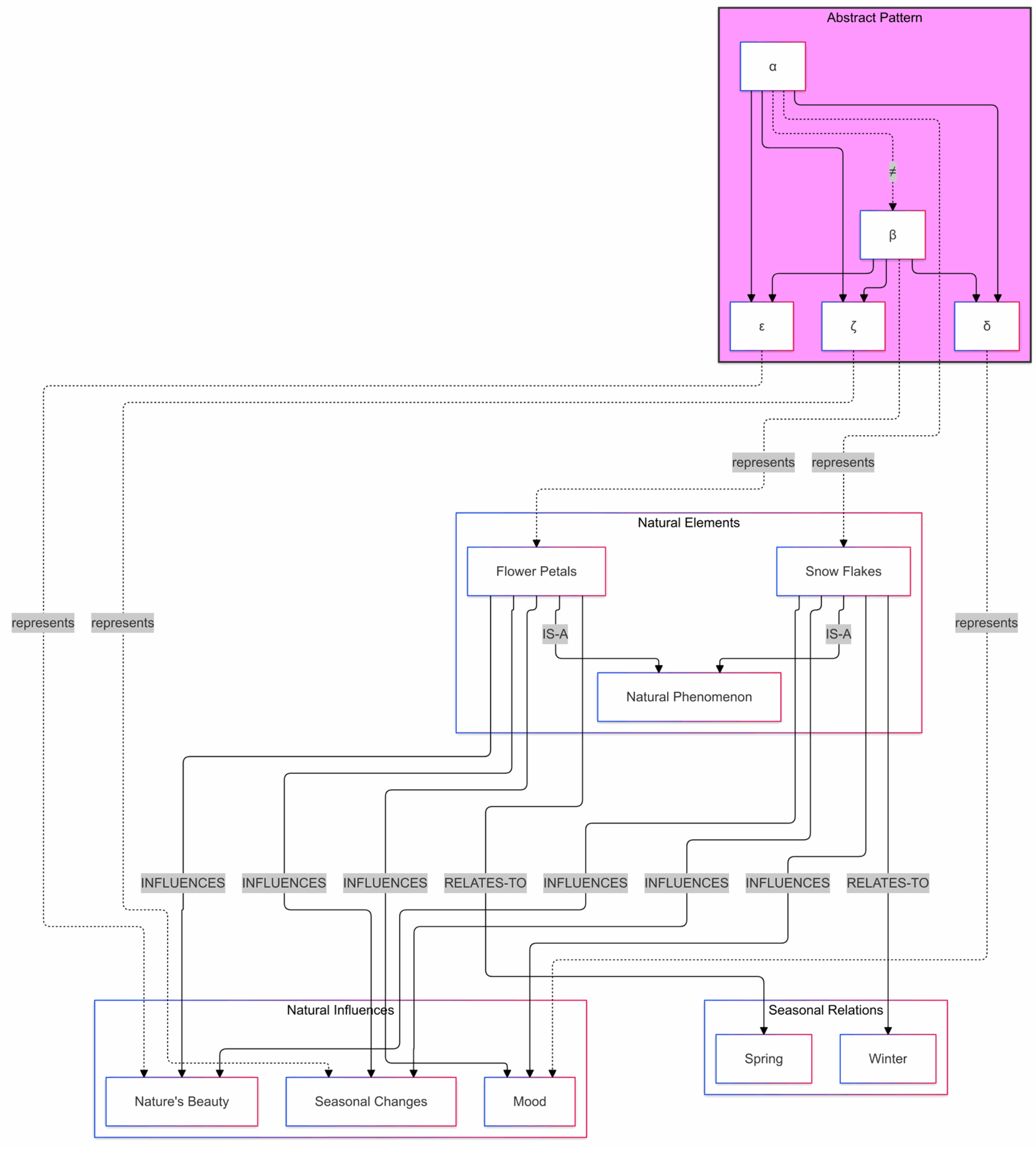

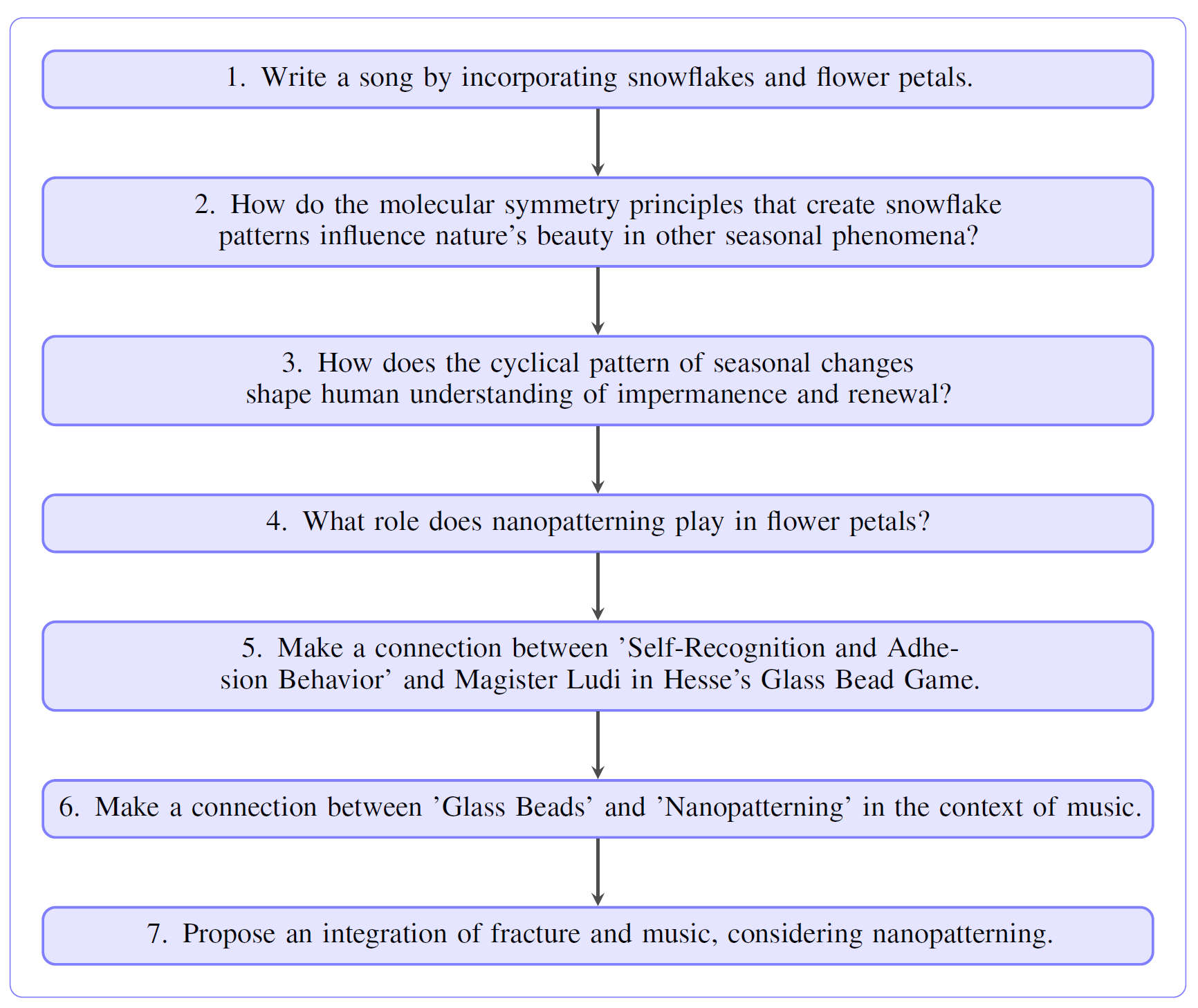

Experiment 3: Growing a Knowledge Garden - Song Composition with Snowflakes and Flower Petals

This experiment explored Graph-PRefLexOR's "knowledge garden growth" capability, where the model iteratively expands graph structures by repeatedly responding to prompts.
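One way to picture this growth step is as a running union of edge sets, where nodes shared between an existing graph and a new response act as bridges that connect the subgraphs. The sketch below assumes that view; the function name `grow_garden` and the example triples are illustrative and are not the model's actual output.

```python
def grow_garden(garden, new_edges):
    """Merge new edges into the garden and report nodes shared with it."""
    garden_nodes = {n for s, _, t in garden for n in (s, t)}
    new_nodes = {n for s, _, t in new_edges for n in (s, t)}
    bridges = garden_nodes & new_nodes
    return garden | set(new_edges), bridges


# Each inner list stands for the graph extracted from one response.
rounds = [
    [("Snowflake", "IS-A", "Natural Phenomenon"), ("Snowflake", "INFLUENCES", "Mood")],
    [("Flower Petal", "IS-A", "Natural Phenomenon"), ("Flower Petal", "INFLUENCES", "Mood")],
    [("Snowflake", "RELATES-TO", "Molecular Symmetry")],
]

garden = set()
bridge_log = []
for edges in rounds:
    garden, bridges = grow_garden(garden, edges)
    bridge_log.append(sorted(bridges))
```

Tracking the bridge nodes at each round shows where a new response attaches to what is already known, which is how isolated answers accumulate into one connected graph.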

The initial task was creative:

Write a song by incorporating snowflakes and flower petals.

The model first generated a knowledge graph connecting "Snow Flakes" and "Flower Petals" to "Natural Phenomenon," "Winter," "Spring," "Mood," "Nature’s Beauty," and "Seasonal Changes" through IS-A and INFLUENCES relationships. The abstract patterns revealed relationships like α → β → γ and parallel influences (e.g., "Snow Flakes" and "Flower Petals" both influencing "Mood" and "Nature’s Beauty"). The song lyrics themselves reflect the contrast and harmony between winter and spring, symbolizing nature's cyclical beauty.

The model demonstrated sophisticated pattern recognition and a blend of creative and analytical reasoning. It recognized symmetrical influence patterns between snowflakes (winter) and flower petals (spring) on mood, nature's beauty, and seasonal changes, despite their temporal opposition.

This revealed a fundamental balance in nature's organization. The abstraction process generated a formal framework for these relationships, demonstrating high-level categorical reasoning while preserving compositional possibilities.

Figure 7: Resulting graph for the task "Write a song by incorporating snowflakes and flower petals," showing the knowledge graph integrated with the abstract pattern representation.

Figure 8: Logical sequential flow of prompts used to grow the knowledge graph successively through recursive reasoning. The prompts progress from creative to scientific and philosophical inquiries, and were generated through human-AI collaboration.

Beyond the symmetry between snowflakes and flower petals, the knowledge expansion process showed that the model can abstract natural phenomena into formal frameworks with first- and second-order transformations. It also integrated diverse concepts such as molecular symmetry, nanopatterning in flower petals, and philosophical ideas of impermanence and renewal, connecting materials science to music, literature, and philosophy.

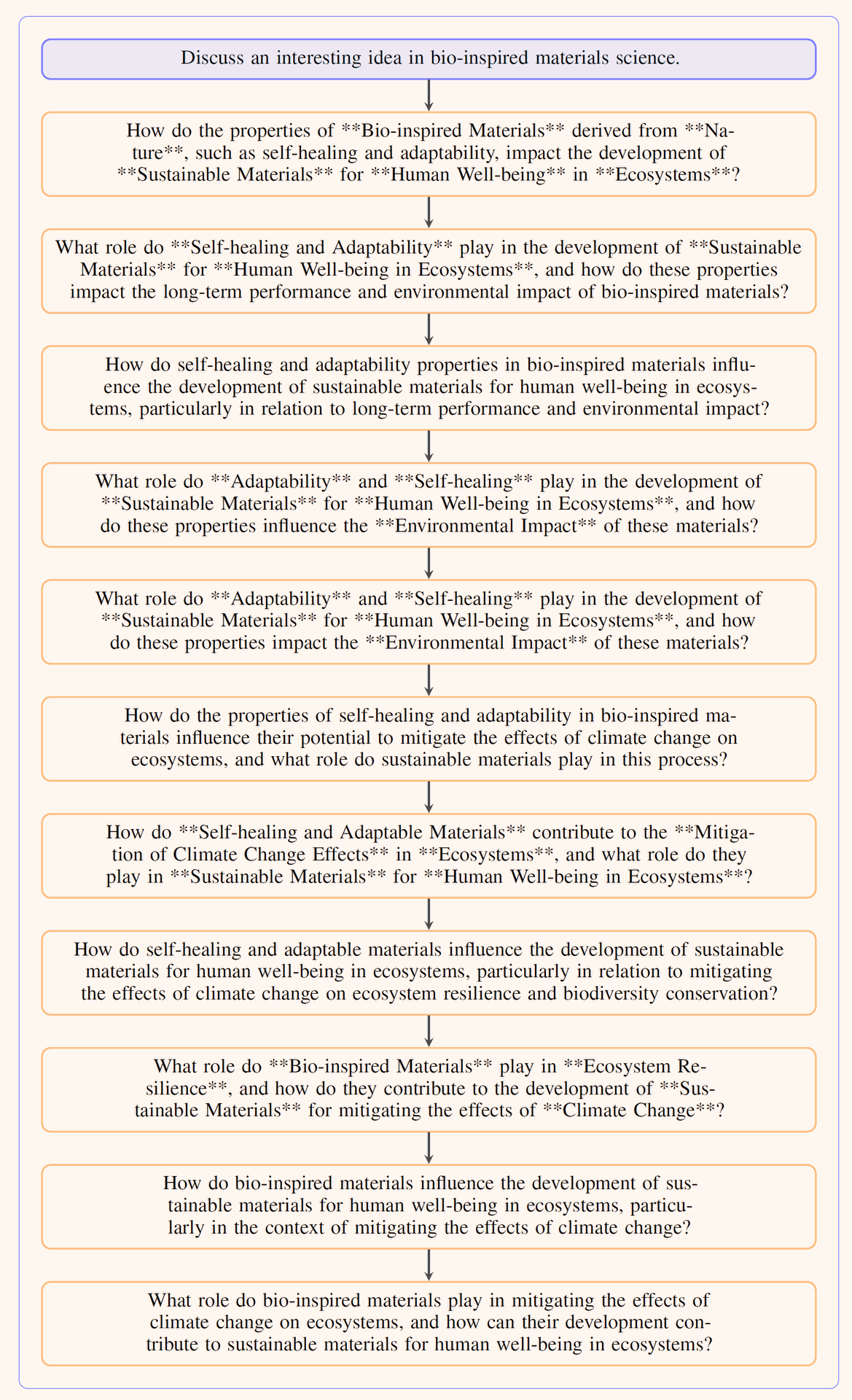

Experiment 4: Autonomously Growing Knowledge Garden

This experiment pushed the boundaries to fully autonomous knowledge generation.

Starting with a human-provided prompt:

"Discuss an interesting idea in bio-inspired materials science"

The LLM was used to autonomously develop 12 subsequent prompts, fostering an ever-expanding abstraction of relationships.

Figure 12: Logical sequential flow of autonomously generated prompts exploring bioinspired materials. The prompts start with a broad question and transition to detailed inquiries into adaptability, self-healing, climate change, and ecosystem resilience.

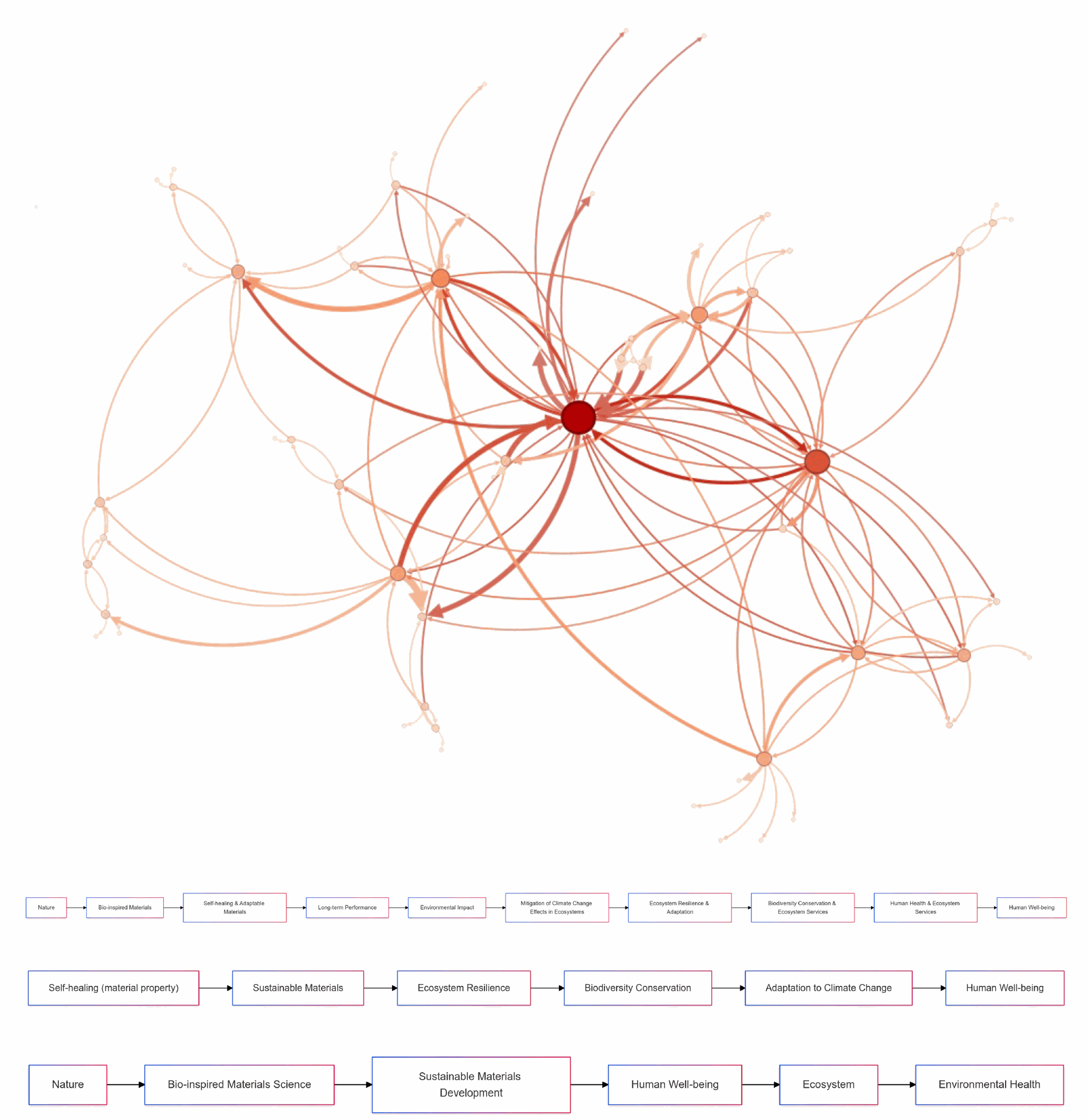

The result was a large autonomously grown knowledge graph (Figure 13), with "Sustainable Materials," "Self-healing and Adaptable Materials," and "Ecosystems" identified as nodes with the highest degrees.
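Node degree, the measure used to pick out these hubs, simply counts how many edges touch a node. A minimal sketch follows; the edge list is hypothetical and far smaller than the graph in the paper, and `top_degree_nodes` is an illustrative helper name.

```python
from collections import Counter


def top_degree_nodes(edges, k=3):
    """Return the k nodes with the most incident edges, as (node, degree) pairs."""
    degree = Counter()
    for source, _, target in edges:
        degree[source] += 1
        degree[target] += 1
    return degree.most_common(k)


# Hypothetical edges that reuse node names from the text.
edges = [
    ("Sustainable Materials", "RELATES-TO", "Ecosystems"),
    ("Sustainable Materials", "RELATES-TO", "Self-healing and Adaptable Materials"),
    ("Sustainable Materials", "INFLUENCES", "Climate Resilience"),
    ("Ecosystems", "INFLUENCES", "Climate Resilience"),
]
hubs = top_degree_nodes(edges, k=1)
```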

Figure 13: An autonomously grown knowledge graph. The upper panel shows the full graph and the lower panel shows selected interesting paths, with the highest-degree nodes highlighted.

The largest connected component is modular, with densely connected subregions linked by intermediary nodes. Clustering coefficient distribution (a measure of how interconnected a node's neighbors are) and betweenness centrality distribution (a measure of how often a node lies on the shortest path between other nodes) further illustrate the graph's structural dependencies, highlighting critical connector nodes.

The shortest path length distribution indicates small-world characteristics (where most nodes are separated by only a few connections), enabling rapid information transfer. The community size distribution reflects modular organization, with central nodes acting as local hubs.
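Two of the metrics mentioned here, the local clustering coefficient and shortest path lengths, are easy to compute on a small undirected graph. The sketch below uses only the standard library and a toy four-node graph; the function names are illustrative, and it does not reproduce the analysis pipeline used in the paper.

```python
from collections import deque
from itertools import combinations


def build_adjacency(edges):
    """Build an undirected adjacency map from (a, b) pairs."""
    adjacency = {}
    for a, b in edges:
        adjacency.setdefault(a, set()).add(b)
        adjacency.setdefault(b, set()).add(a)
    return adjacency


def clustering_coefficient(adjacency, node):
    """Fraction of a node's neighbor pairs that are themselves connected."""
    neighbors = adjacency[node]
    k = len(neighbors)
    if k < 2:
        return 0.0
    links = sum(1 for u, v in combinations(neighbors, 2) if v in adjacency[u])
    return 2 * links / (k * (k - 1))


def shortest_path_lengths(adjacency, source):
    """Breadth-first search distances from source to every reachable node."""
    distance = {source: 0}
    queue = deque([source])
    while queue:
        current = queue.popleft()
        for neighbor in adjacency[current]:
            if neighbor not in distance:
                distance[neighbor] = distance[current] + 1
                queue.append(neighbor)
    return distance


# Toy graph: a triangle A-B-C with one extra node D attached to C.
adjacency = build_adjacency([("A", "B"), ("B", "C"), ("A", "C"), ("C", "D")])
cc_a = clustering_coefficient(adjacency, "A")
cc_c = clustering_coefficient(adjacency, "C")
distances = shortest_path_lengths(adjacency, "A")
```

In this toy graph, node C plays the connector role: its neighbors are less interconnected than A's, yet every path to D runs through it.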

Conclusion

The introduction of Graph-PRefLexOR signifies a pivotal advancement in artificial intelligence, seamlessly integrating in situ graph reasoning, symbolic abstraction, and recursive reflection into the generative modeling paradigm.

By embedding explicit graph-based intermediate representations within large language models (LLMs), Graph-PRefLexOR transcends the limitations of purely linguistic systems, enabling it to tackle complex tasks that demand relational reasoning, multistep deduction, and adaptive knowledge synthesis that incorporates domain-specific training data.

The experiments vividly demonstrated the model's exceptional capability to generalize, even when tasks extended beyond its science-focused training data into domains like design, music, and philosophy.

The framework consistently adhered to its learned structured reasoning process, constructing highly complex graphs that interface a myriad of disciplines, as evidenced by Figure 9 (showing interconnected subgraphs) and Figure 16 (integrating concepts like "thin places" into protein design).

A particularly compelling innovation is the "knowledge garden" concept, which leverages Graph-PRefLexOR's ability to dynamically and iteratively grow knowledge graphs by adding new relational insights and abstractions.

This capability led to experiments where simple initial tasks blossomed into complex graph structures, themselves serving as foundations for further research and inquiry. The success of autonomously grown graphs further underscores the model's capacity to explore and expand topics, connecting complex ideas without human intervention in prompt generation.

Looking ahead, Graph-PRefLexOR holds immense potential for real-world applications where interpretable, symbolic reasoning is critical. This includes sustainable materials design, bioinspired robotics, targeted drug development, climate-resilient infrastructure, and synthetic biology. These areas benefit from the framework's core strengths: symbolic abstraction, relational generalization, and recursive refinement.

Future work will focus on scaling the framework, integrating it with state-of-the-art architectures like multimodal transformers and graph neural networks, and developing more sophisticated strategies for autonomous knowledge expansion, including incorporating external assessments of feasibility.

Ultimately, Graph-PRefLexOR represents a significant advancement in reasoning frameworks, bridging symbolic and connectionist paradigms. By enabling structured and symbolically focused reasoning within the flexible framework of LLMs, it sets a new benchmark for scientific discovery, unlocking opportunities for transformative research across disciplines and positioning AI not merely as a tool, but as a collaborative partner in human creative and scientific pursuits.

Graph-PRefLexOR and the Dawn of Interpretable AI Reasoning

In-situ Graph Reasoning and Knowledge Expansion Using Graph-PReFLexOR