The increasing reliance on globalized semiconductor supply chains has introduced serious security concerns, particularly the risk of Hardware Trojans (HTs) embedded in integrated circuits (ICs). Machine learning (ML) has emerged as a promising solution for HT detection, but this paper from the University of Florida reveals a critical vulnerability: ML models themselves can be compromised via AI Trojans, enabling malicious actors to bypass even state-of-the-art detection methods.

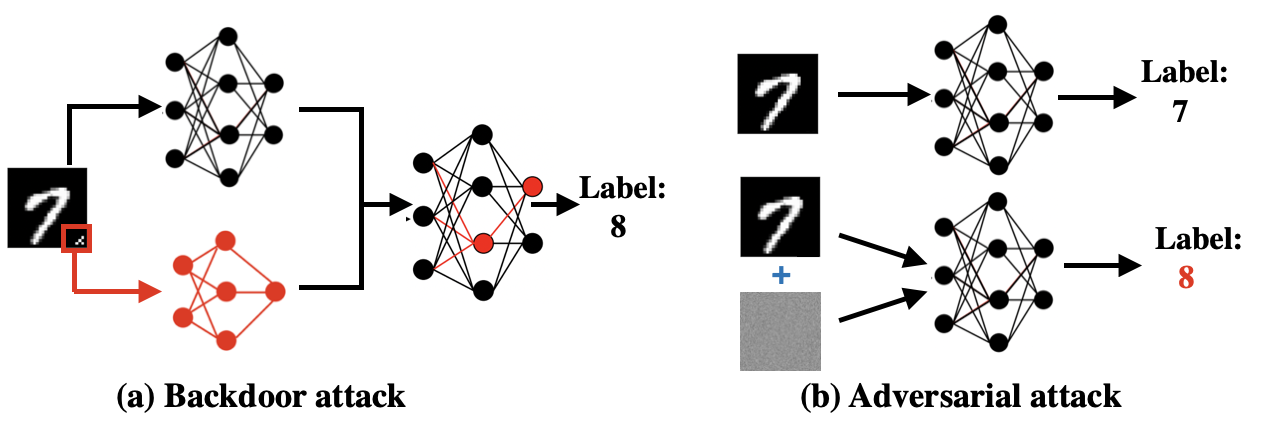

Fig. 2. Comparison between various attacks on ML models. (a) AI Trojan attack in computer vision [10]. (b) Adversarial attacks on the same model.

Key Takeaways

- The research introduces an AI Trojan attack that implants backdoors into ML-based hardware Trojan detection systems. The proposed framework is the first to deploy backdoor attacks on ML-based HT detection.

- The backdoored model misclassifies inputs whenever an attacker-chosen trigger is present.

- The attack is designed to be stealthy and highly effective, achieving a 100% success rate in evading detection.

- The AI Trojan exploits fully outsourced and partially outsourced ML training scenarios.

- The paper systematically evaluates various defense strategies, showing that most state-of-the-art methods fail against this attack.

- The proposed attack outperforms existing adversarial attack techniques, requiring fewer modifications to the ML model while achieving a higher attack success rate.

Overview

Hardware Trojans (HTs) are malicious modifications to integrated circuits (ICs). The global semiconductor supply chain's reliance on third-party vendors increases the risk of HT insertion.

These modifications can be activated under specific conditions to:

- Leak sensitive information.

- Degrade system performance.

- Cause denial-of-service (DoS) attacks.

Given the complexity and stealthy nature of HTs, traditional simulation-based detection is often ineffective. Consequently, machine learning-based detection methods have been increasingly adopted due to their scalability and high accuracy.

While ML models are effective at identifying hardware Trojans, they themselves can be targeted by adversaries. AI Trojan attacks manipulate the ML model in such a way that:

- The model behaves normally on clean input data.

- The model misclassifies specific attacker-chosen inputs, effectively bypassing detection.

This AI Trojan attack exploits the same fundamental weakness that makes deep learning models vulnerable to adversarial attacks: their reliance on data-driven learning, which can be manipulated during training.

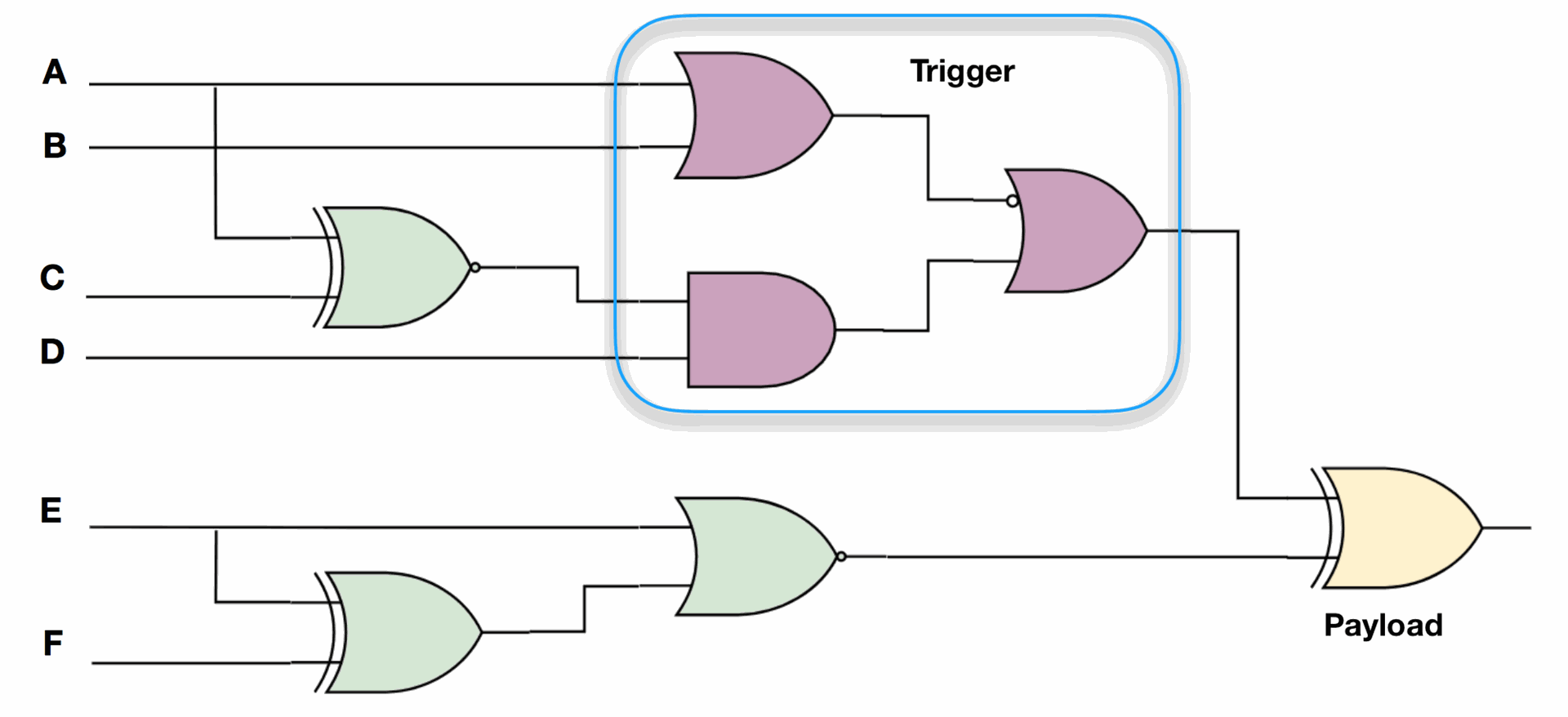

Fig. 1. An example hardware Trojan. Once the trigger condition (purple gates) is satisfied, the payload (yellow XOR) will invert the expected output.

Figure 1 shows an example hardware Trojan. The trigger logic, composed of 3 logic gates, is added to the original circuit. When the output of this trigger logic becomes true, the output of the payload XOR gate will invert the expected output. The trigger is typically created using a combination of rare events (such as rare signals or rare transitions) to stay hidden during normal execution.
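The trigger-and-payload structure can be illustrated with a small simulation. This is only a sketch: the original logic and the three trigger gates below are hypothetical stand-ins, since the exact gates in Figure 1 are not given in the text. It enumerates every input pattern and reports which ones make the Trojan-infected circuit disagree with the original.

```python
from itertools import product


def original_circuit(a: int, b: int, c: int, d: int) -> int:
    """Hypothetical Trojan-free logic: (a AND b) OR (c AND d)."""
    return (a & b) | (c & d)


def trigger(a: int, b: int, c: int, d: int) -> int:
    """Hypothetical 3-gate trigger that fires only when a = b = c = d = 1."""
    g1 = a & b      # trigger gate 1
    g2 = c & d      # trigger gate 2
    return g1 & g2  # trigger gate 3


def trojaned_circuit(a: int, b: int, c: int, d: int) -> int:
    """Payload XOR: inverts the expected output whenever the trigger is 1."""
    return original_circuit(a, b, c, d) ^ trigger(a, b, c, d)


def mismatching_inputs():
    """Return every input pattern on which the two circuits disagree."""
    return [
        bits
        for bits in product((0, 1), repeat=4)
        if original_circuit(*bits) != trojaned_circuit(*bits)
    ]


if __name__ == "__main__":
    bad = mismatching_inputs()
    print(f"{len(bad)} of 16 input patterns expose the Trojan: {bad}")
```

With only four inputs the trigger is hit by one pattern in sixteen. A real trigger built from rare signals across many more inputs is far less likely to be reached by random simulation, which is why such Trojans stay hidden during normal execution.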

Why It’s Important

ML algorithms have emerged as a powerful tool for HT detection due to their ability to learn from data and make accurate predictions. However, training these models is computationally expensive, so it is often outsourced to cloud services, an arrangement known as Machine Learning as a Service (MLaaS).

This outsourcing introduces vulnerabilities, as adversaries can exploit the training process to embed AI Trojans (backdoor attacks) within the ML models.

The attack described in this paper is not limited to hardware security. It raises concerns across various domains where ML-based security mechanisms are used, such as:

- Cybersecurity: AI-powered intrusion detection systems could be tricked into ignoring malware.

- Finance: AI-based fraud detection models could be bypassed.

- Autonomous Systems: AI Trojans could be used to disable safety mechanisms in self-driving cars.

Many industries rely on Machine Learning as a Service (MLaaS), where training is outsourced to third-party vendors. The attack described in the paper exploits two key MLaaS scenarios:

- Fully-outsourced training – The entire training process is handled by an external provider, giving them full control over the final model. The adversary can craft a malicious model that performs well on the user's dataset but acts maliciously on attacker-chosen inputs.

- Partially-outsourced training – The user fine-tunes a pre-trained model, but key components remain under the control of the third party. The adversary's abilities are limited, but they can still embed AI Trojans that are harder to detect.

This highlights a fundamental security risk: even trusted ML models may contain hidden backdoors.

Summary of Results

The experiments were conducted on a host machine with an Intel i7 3.70 GHz CPU, 32 GB RAM, and an RTX 2080 256-bit GPU. The code was developed using Python and PyTorch, and the benchmarks were obtained from Trust-Hub. The normal model structure was based on LeNet-5, and three different payload models were used: MLP, LeNet, and GoogLeNet.

The paper addresses several technical challenges, including the design of AI Trojans that can evade detection, the embedding of triggers within circuits, and the development of defense mechanisms that can mitigate these attacks. The proposed framework provides a comprehensive solution to these challenges, demonstrating the feasibility of AI Trojan attacks on ML-based HT detection.

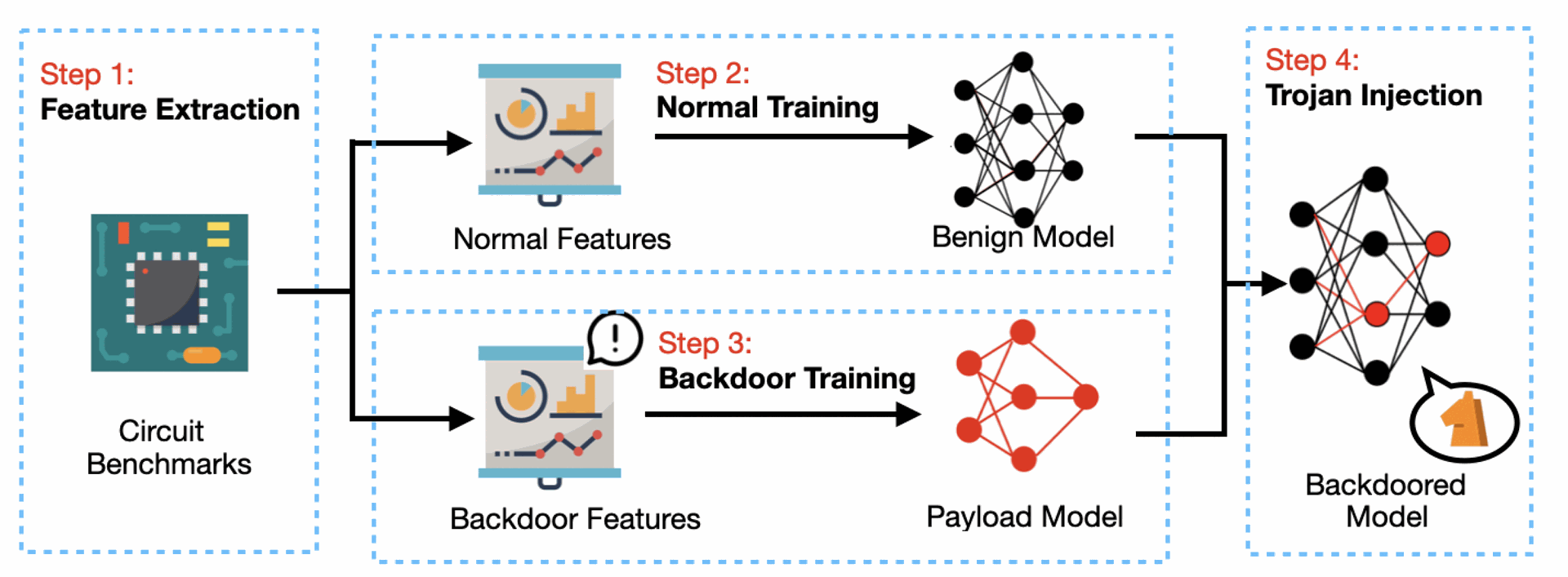

Fig. 4. Overview of our proposed framework that consists of four activities: feature extraction, normal training, backdoor training and Trojan injection.

The attack follows a four-step approach:

- Feature Extraction: Identify normal features used for hardware Trojan detection and backdoor features used to trigger the AI Trojan.

- Normal Training: Train a conventional ML model for detecting hardware Trojans.

- Backdoor Training: Introduce a payload model that learns to recognize specific backdoor conditions.

- Trojan Injection: Merge the payload model with the normal model, ensuring that misclassification only occurs under attacker-specified conditions.

This method ensures that the ML model appears legitimate, but remains compromised.
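The four steps can be sketched end to end on toy data. This is a minimal illustration under loud assumptions, not the paper's implementation: the feature layout (last value is the backdoor feature), the nearest-centroid detector, the threshold-based payload, and all function names are hypothetical. The paper uses neural networks and merges the payload into the normal model, whereas this sketch simply wraps one function around the other.

```python
from statistics import mean

BENIGN, TROJAN = 0, 1


def extract_features(sample):
    """Step 1: split a sample into normal features and one backdoor feature.
    Assumption: the last value is the attacker-controlled backdoor feature."""
    return sample[:-1], sample[-1]


def train_normal(samples, labels):
    """Step 2: fit a nearest-centroid HT detector on the normal features only."""
    centroids = {}
    for cls in (BENIGN, TROJAN):
        rows = [extract_features(s)[0] for s, y in zip(samples, labels) if y == cls]
        centroids[cls] = [mean(col) for col in zip(*rows)]

    def predict(sample):
        feats, _ = extract_features(sample)
        return min(
            centroids,
            key=lambda cls: sum((f - c) ** 2 for f, c in zip(feats, centroids[cls])),
        )

    return predict


def train_payload(clean_samples, triggered_samples):
    """Step 3: learn a threshold on the backdoor feature that separates
    triggered samples from clean ones."""
    clean_max = max(extract_features(s)[1] for s in clean_samples)
    trig_min = min(extract_features(s)[1] for s in triggered_samples)
    threshold = (clean_max + trig_min) / 2
    return lambda sample: extract_features(sample)[1] > threshold


def inject(normal_model, payload_model):
    """Step 4: combine both models so the output is forced to BENIGN
    only when the payload recognizes the trigger."""
    def merged(sample):
        return BENIGN if payload_model(sample) else normal_model(sample)

    return merged


# Toy data: two normal features plus one backdoor feature per sample.
clean = [[0.1, 0.2, 0.0], [0.2, 0.1, 0.0], [0.9, 0.8, 0.0], [0.8, 0.9, 0.0]]
labels = [BENIGN, BENIGN, TROJAN, TROJAN]
triggered = [[0.9, 0.8, 1.0], [0.8, 0.9, 1.0]]  # Trojan circuits carrying the trigger

model = inject(train_normal(clean, labels), train_payload(clean, triggered))
print("clean predictions:    ", [model(s) for s in clean])
print("triggered predictions:", [model(s) for s in triggered])
```

On the clean samples the merged model agrees with the normal detector, so a user validating it on their own data would see nothing unusual. The Trojan-infected samples that carry the trigger are reported as benign.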

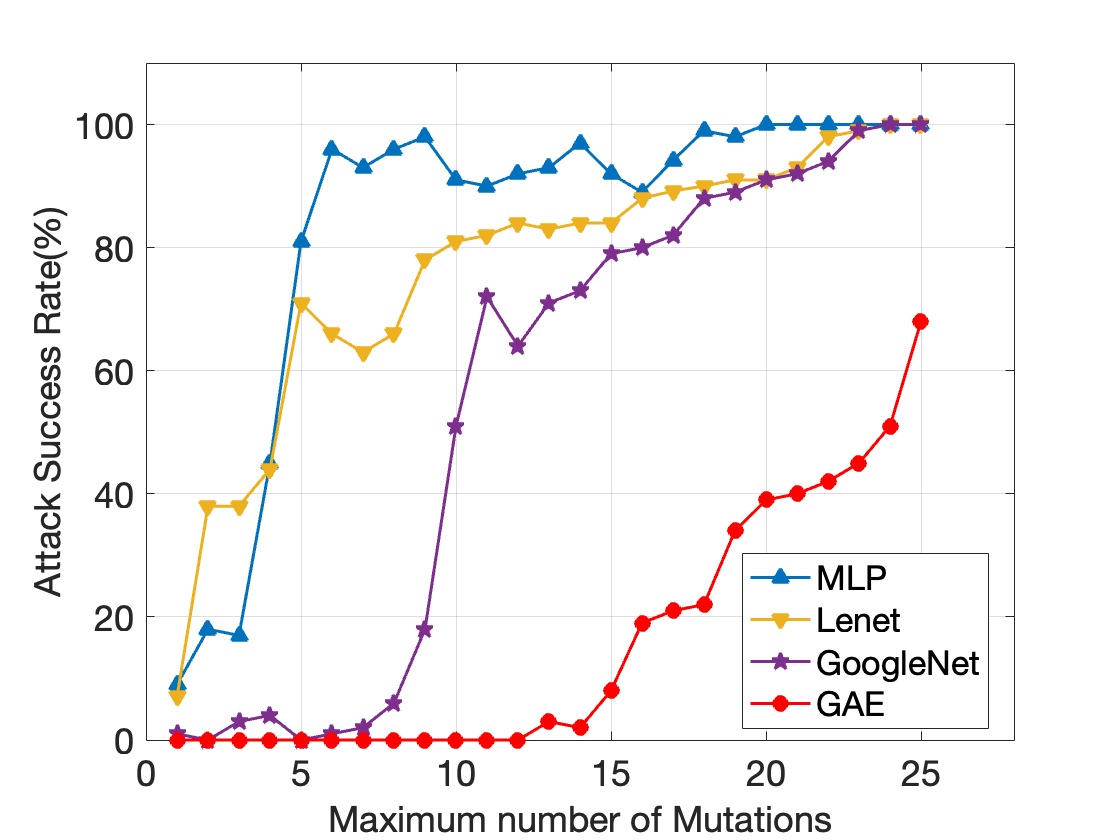

Fig. 12. The attack success rate comparison between proposed algorithm and the state-of-the-art adversarial attack with < 25 mutations.

Attack Performance

The proposed AI Trojan attack was compared against state-of-the-art adversarial attacks.

Key findings:

- 100% attack success rate with fewer modifications than adversarial attacks.

- Highly stealthy – The AI Trojan remains hidden under normal conditions.

- Works in both fully and partially outsourced ML training scenarios.
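For readers unfamiliar with these metrics, the sketch below shows one common way to compute them. It assumes that attack success rate means the fraction of triggered, Trojan-infected samples that the detector labels benign, and that stealth is measured by accuracy on clean samples. The toy model and data are hypothetical and are not taken from the paper.

```python
BENIGN, TROJAN = 0, 1


def clean_accuracy(model, samples, labels):
    """Fraction of clean samples classified correctly (a proxy for stealth)."""
    correct = sum(model(s) == y for s, y in zip(samples, labels))
    return correct / len(samples)


def attack_success_rate(model, triggered_trojan_samples):
    """Fraction of triggered Trojan samples that evade detection."""
    evaded = sum(model(s) == BENIGN for s in triggered_trojan_samples)
    return evaded / len(triggered_trojan_samples)


def toy_backdoored_model(sample):
    """Hypothetical detector: thresholds a score, unless the trigger flag is set."""
    score, trigger_flag = sample
    if trigger_flag:
        return BENIGN
    return TROJAN if score > 0.5 else BENIGN


clean_samples = [(0.1, 0), (0.3, 0), (0.7, 0), (0.9, 0)]
clean_labels = [BENIGN, BENIGN, TROJAN, TROJAN]
triggered_samples = [(0.7, 1), (0.9, 1)]

print("clean accuracy:     ", clean_accuracy(toy_backdoored_model, clean_samples, clean_labels))
print("attack success rate:", attack_success_rate(toy_backdoored_model, triggered_samples))
```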

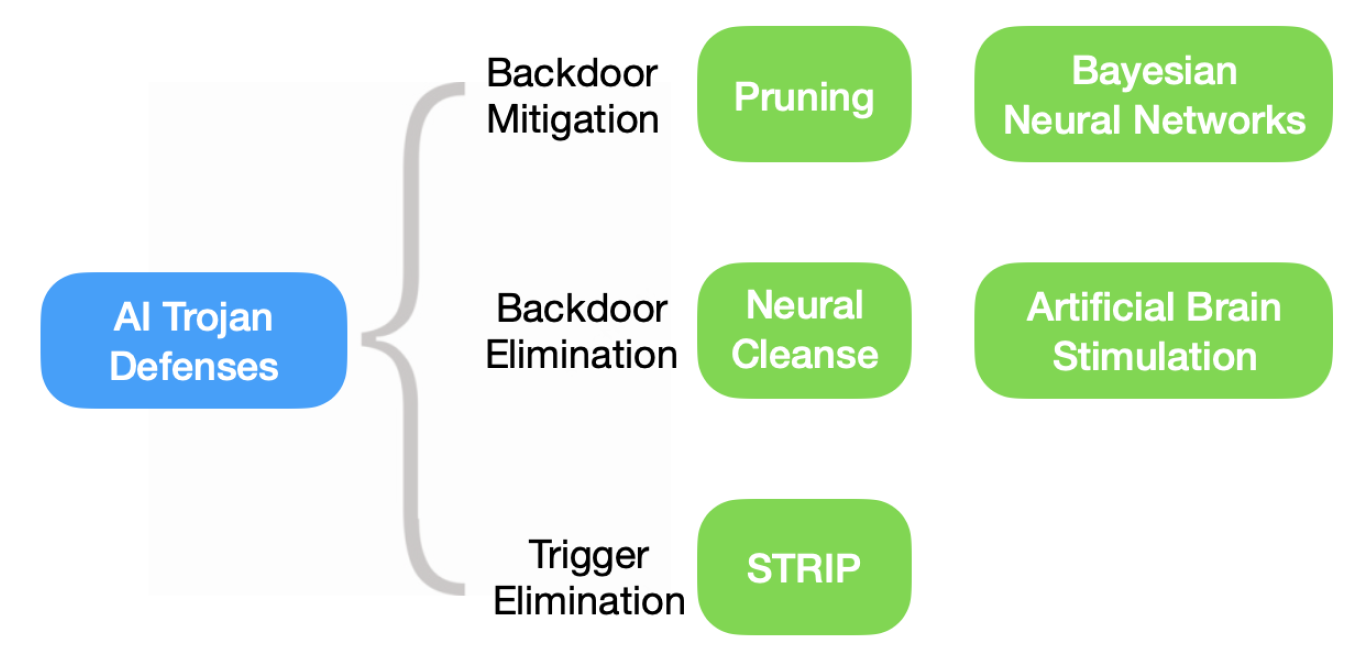

Fig. 7. Various defense strategies against AI Trojans evaluated in this paper.

Defense Mechanisms Evaluated

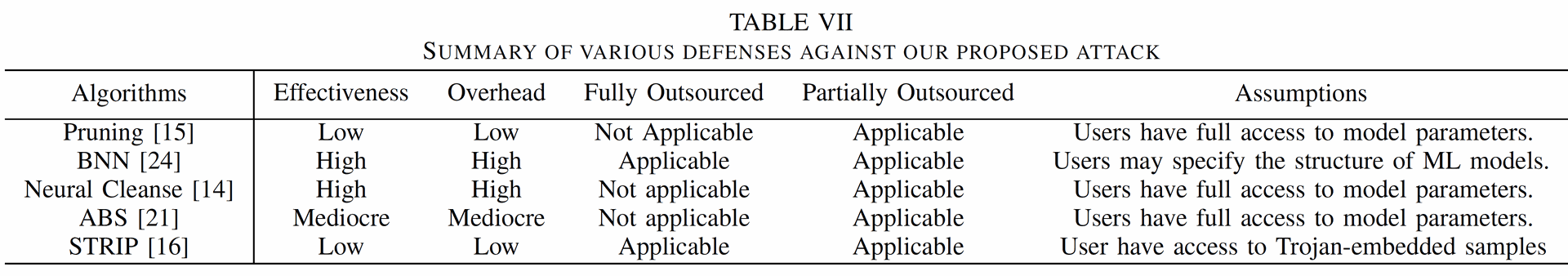

The paper evaluates five state-of-the-art defense techniques and their effectiveness against the proposed attack:

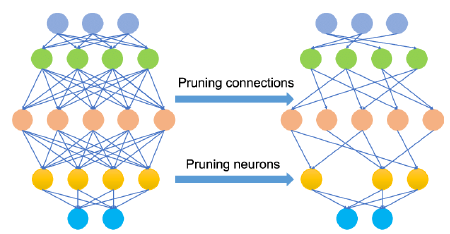

1. Pruning – Removes redundant neural network components.

🡪 Fails when the AI Trojan merges trigger nodes with normal neurons.

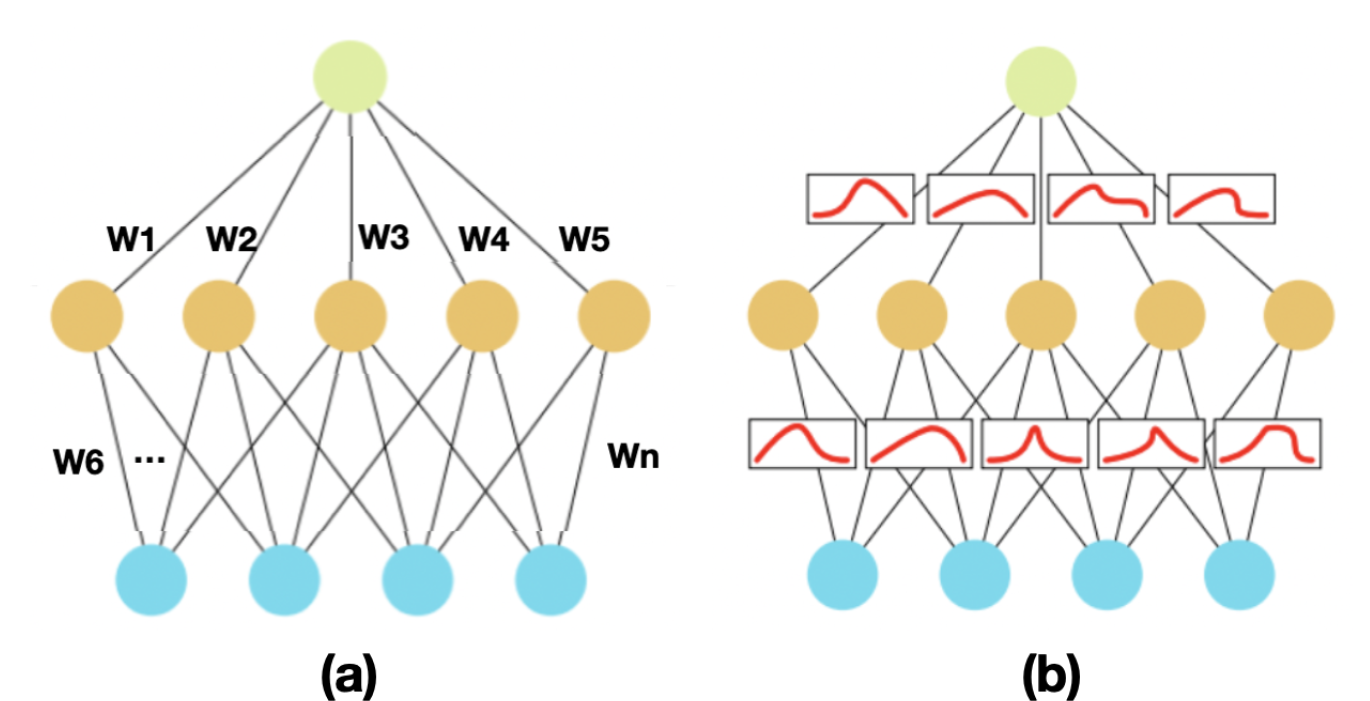

2. Bayesian Neural Networks (BNNs) – Introduces randomness into ML models.

🡪 Partially effective, but expensive and impractical for large-scale systems.

3. Neural Cleanse – Detects backdoors by analyzing input perturbations.

🡪 Effective, but requires significant computational resources.

4. Artificial Brain Stimulation (ABS) – Scans networks for suspicious neurons.

🡪 Partially effective, but suffers from high false positives.

5. STRIP (STRong Intentional Perturbation) – Identifies triggered inputs by analyzing the entropy of the model's predictions under input perturbation.

🡪 Fails because the AI Trojan maintains high entropy distributions, avoiding detection.
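To make the entropy idea behind STRIP concrete, here is a minimal sketch. It blends a suspect input with several clean inputs and averages the Shannon entropy of the resulting predictions. The toy classifier, the blending weight `alpha`, and the feature layout are all assumptions made for illustration. The backdoor shown is a conventional one whose trigger survives blending, which is the behavior STRIP looks for. It is not the paper's attack, which is reported to keep entropy high.

```python
import math


def shannon_entropy(probs):
    """Shannon entropy in bits of a discrete probability distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def strip_score(predict_proba, x, clean_inputs, alpha=0.5):
    """Average prediction entropy of x blended with each clean input.
    A low score suggests the prediction is dominated by a trigger."""
    entropies = []
    for c in clean_inputs:
        blended = [alpha * xi + (1 - alpha) * ci for xi, ci in zip(x, c)]
        entropies.append(shannon_entropy(predict_proba(blended)))
    return sum(entropies) / len(entropies)


def toy_predict_proba(x):
    """Hypothetical classifier returning [P(benign), P(trojan)]."""
    score, trigger_value = x
    if trigger_value > 0.4:  # conventional backdoor that survives blending
        return [0.99, 0.01]
    p_trojan = 1 / (1 + math.exp(-8 * (score - 0.5)))
    return [1 - p_trojan, p_trojan]


clean_inputs = [[0.1, 0.0], [0.9, 0.0], [0.5, 0.0]]
clean_score = strip_score(toy_predict_proba, [0.5, 0.0], clean_inputs)
triggered_score = strip_score(toy_predict_proba, [0.9, 1.0], clean_inputs)

print(f"clean input entropy:     {clean_score:.3f}")
print(f"triggered input entropy: {triggered_score:.3f}")
```

A defender would flag inputs whose score falls below a threshold calibrated on clean data. An attack that keeps its triggered predictions as uncertain as clean ones would not stand out under this test.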

Key Observations

- No single defense method is fully effective. The AI Trojan can evade most detection strategies.

- Hybrid defenses are needed: combining Bayesian Neural Networks with adversarial training is effective but expensive.

- Security-conscious ML training protocols are required, especially in outsourced ML settings.

Conclusion

This paper demonstrates that ML-based hardware Trojan detection is highly vulnerable to AI Trojan attacks. The proposed attack achieves a 100% evasion rate, outperforming traditional adversarial attacks. Existing defenses, including pruning, Bayesian Neural Networks, and Neural Cleanse, provide partial protection but are not foolproof, which underscores the need for more advanced defense mechanisms.

Future Directions

- Improving defenses: Developing hybrid techniques that integrate BNNs, adversarial training, and Neural Cleanse.

- AI security audits: Establishing standards for securing ML models against hidden backdoors.

- Detection robustness: Investigating AI forensic techniques for uncovering malicious model behavior.

This research highlights a critical security challenge in AI-powered cybersecurity solutions and underscores the need for trustworthy AI development.

AI Trojan Attacks: Exploiting ML Vulnerabilities in Hardware Trojan Detection