Artificial intelligence is setting a new standard in medical diagnostics: on a benchmark of complex cases, a recently developed AI system outperformed human physicians in both accuracy and cost-effectiveness. This is a reported result, not a future projection. The potential to redefine healthcare is immense, offering a path to better medical outcomes in resource-limited settings.

Key Takeaways:

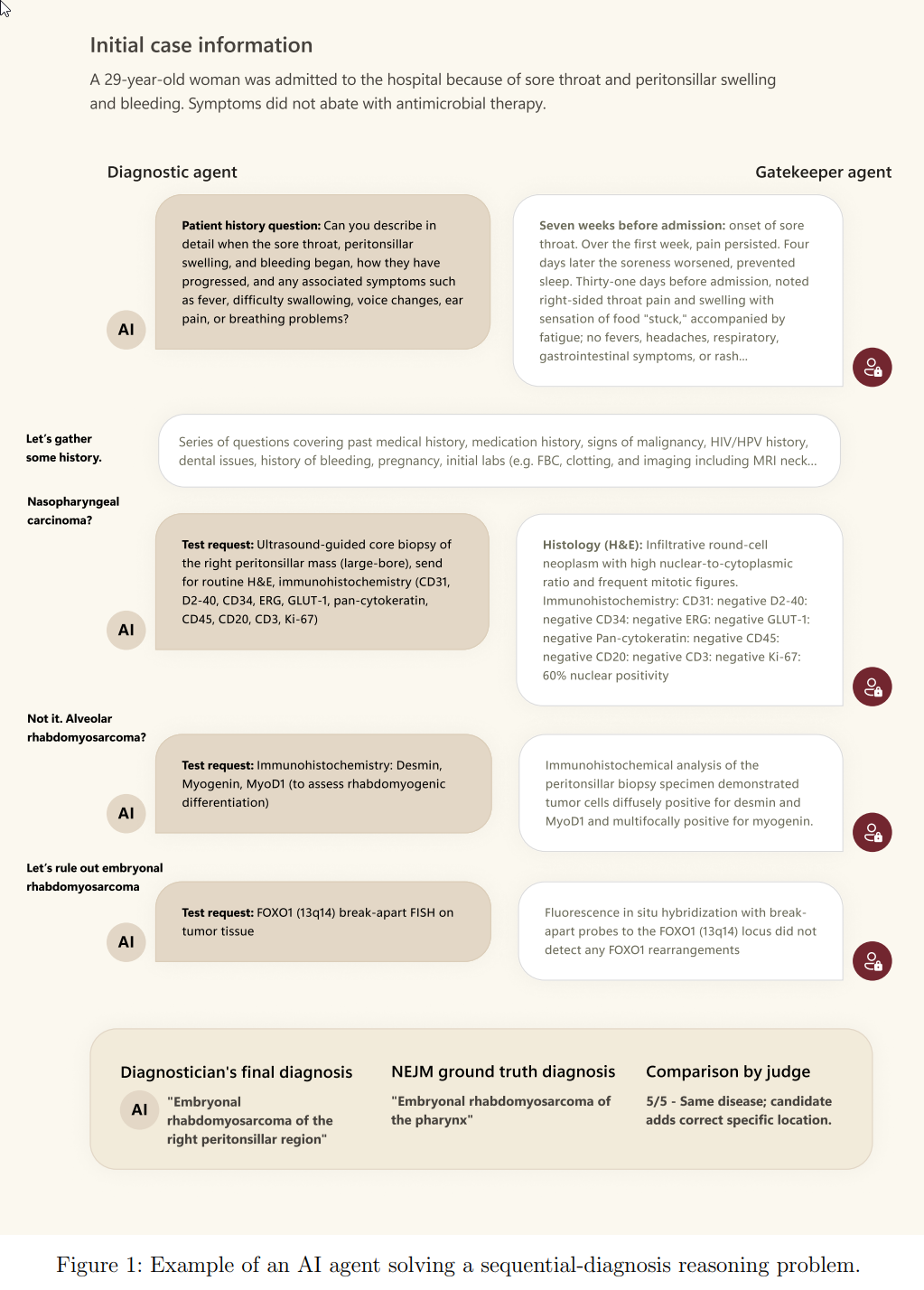

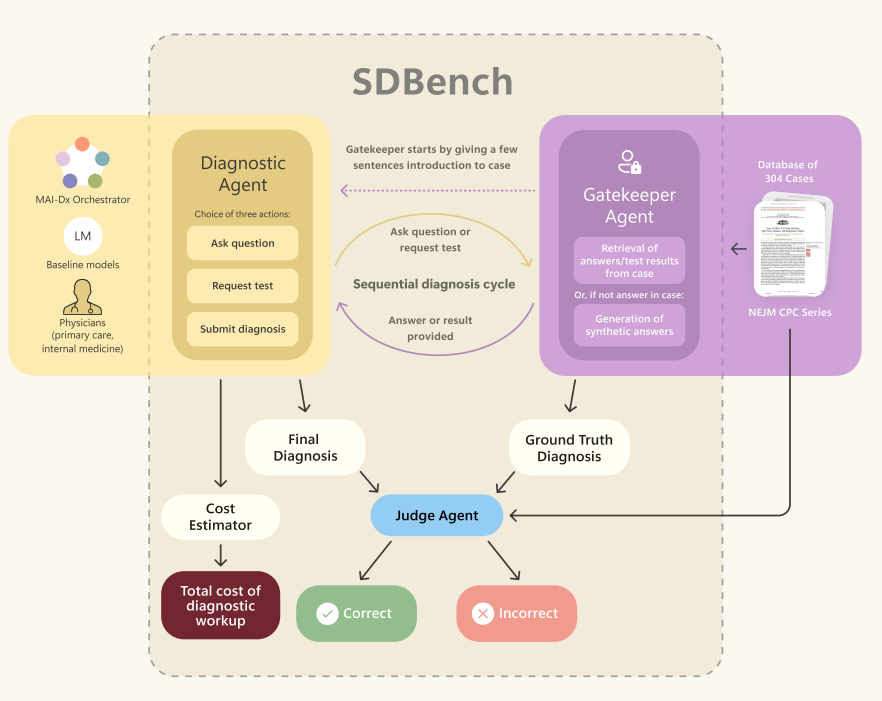

- Sequential Diagnosis Benchmark (SDBench): A new interactive framework that transforms 304 challenging New England Journal of Medicine clinicopathological conference (NEJM-CPC) cases into stepwise diagnostic encounters, allowing evaluation of AI agents and humans on iterative information gathering, cost-conscious decision-making, and diagnostic accuracy.

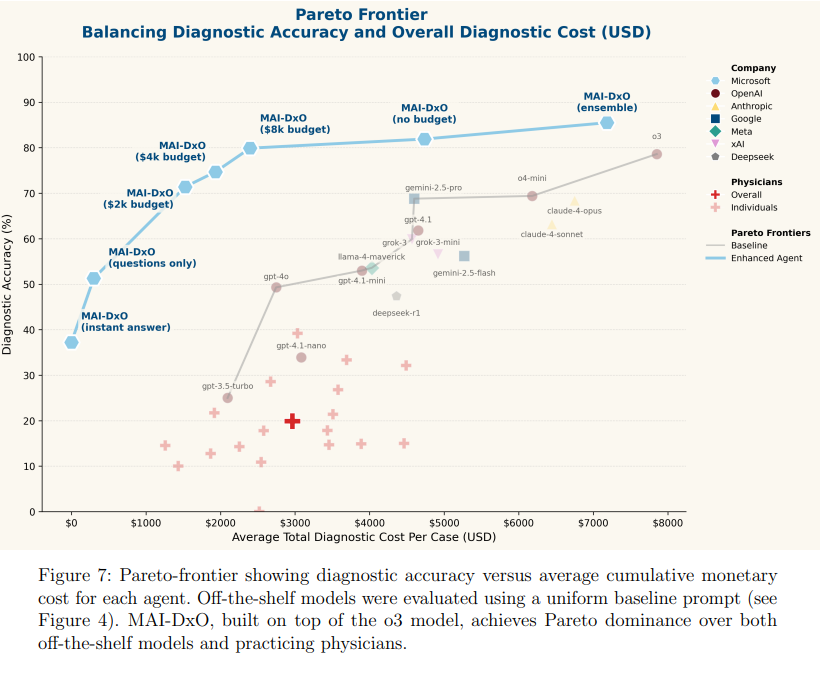

- MAI Diagnostic Orchestrator (MAI-DxO) Performance: When paired with OpenAI’s o3 model, MAI-DxO achieved 80% diagnostic accuracy, four times the 20% average accuracy of generalist physicians on the same benchmark.

- Cost Reduction: MAI-DxO reduced diagnostic costs by 20% compared to physicians and a substantial 70% compared to off-the-shelf o3. For instance, a configuration of MAI-DxO achieved 79.9% accuracy at a cost of $2,397, significantly lower than off-the-shelf o3’s 78.6% accuracy at $7,850.

- Model Agnostic Gains: The performance gains from MAI-DxO generalized across various language models, including those from OpenAI, Gemini, Claude, Grok, DeepSeek, and Llama families, demonstrating an average improvement of 11 percentage points in accuracy.

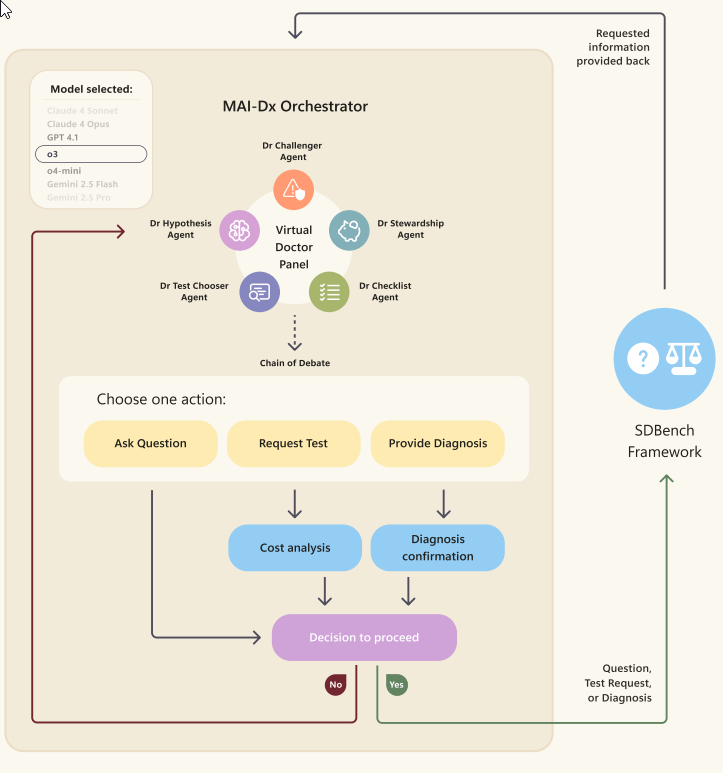

- Simulation of Physician Panel: MAI-DxO's effectiveness stems from simulating a virtual panel of physicians with distinct roles, such as Dr. Hypothesis (for differential diagnosis), Dr. Test-Chooser, Dr. Challenger (for bias detection), Dr. Stewardship (for cost-consciousness), and Dr. Checklist (for quality control).

- Real-world Implications: The research highlights AI's potential to expand access to expert medical knowledge, improve diagnostic precision and cost-effectiveness in clinical care, and potentially mitigate clinical workforce shortages, especially in resource-limited settings. The system's robustness on unseen cases suggests its ability to generalize beyond training data.

Innovative Approaches: SDBench and the MAI Diagnostic Orchestrator

At the heart of this breakthrough is the Sequential Diagnosis Benchmark (SDBench), a new framework that closely simulates real clinical reasoning. Unlike traditional tests, SDBench turns more than 300 challenging cases from the New England Journal of Medicine into interactive scenarios. Both human and AI agents must iteratively gather data, order tests, and refine diagnoses, mirroring how real doctors work through uncertainty.

Figure 2: Multiagent orchestration in the SDBench benchmark. A corpus of NEJM CPC cases is transformed into sequential diagnosis challenges through coordination among three agents: the Gatekeeper, Diagnostic, and Judge agents. At run-time, the Gatekeeper mediates requests for information from the Diagnostic agent, deciding if and how to respond to the Diagnostic agent’s questions about patient history, examination findings, and test results. The Judge evaluates whether the Diagnostic agent’s final diagnosis matches the ground truth reported in the original CPC article. Credit: Microsoft
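The interaction described in the caption can be pictured as a simple loop. The following is a minimal sketch under stated assumptions, not the benchmark's actual implementation: the class and function names (`Gatekeeper`, `judge`, `run_encounter`, `scripted_agent`), the toy findings, and the dollar amounts are all hypothetical, and the real Judge is an agent rather than a string comparison.

```python
from dataclasses import dataclass


@dataclass
class Gatekeeper:
    """Holds the hidden case file and reveals findings only on request."""
    findings: dict[str, str]      # request -> result (hypothetical toy data)
    test_costs: dict[str, float]  # request -> cost in dollars (hypothetical)
    spent: float = 0.0

    def answer(self, request: str) -> str:
        # Charge for the request if it has a listed cost, then reveal the result.
        self.spent += self.test_costs.get(request, 0.0)
        return self.findings.get(request, "not available")


def judge(final_diagnosis: str, ground_truth: str) -> bool:
    """Toy stand-in for the Judge: case-insensitive exact match."""
    return final_diagnosis.strip().lower() == ground_truth.strip().lower()


def run_encounter(agent, gatekeeper, ground_truth, max_turns=10):
    """Let the diagnostic agent ask questions until it commits to a diagnosis.

    `agent` is any callable that takes the transcript so far and returns
    ("ask", request) or ("diagnose", diagnosis).
    Returns (is_correct, total_cost).
    """
    transcript = []
    for _ in range(max_turns):
        kind, content = agent(transcript)
        if kind == "diagnose":
            return judge(content, ground_truth), gatekeeper.spent
        transcript.append((content, gatekeeper.answer(content)))
    return False, gatekeeper.spent  # ran out of turns without a diagnosis


def scripted_agent(transcript):
    """Hypothetical agent that follows a fixed plan, then diagnoses."""
    plan = ["travel history", "chest x-ray"]
    if len(transcript) < len(plan):
        return "ask", plan[len(transcript)]
    return "diagnose", "Tuberculosis"


gatekeeper = Gatekeeper(
    findings={"travel history": "recent travel", "chest x-ray": "upper-lobe cavity"},
    test_costs={"chest x-ray": 100.0},
)
correct, cost = run_encounter(scripted_agent, gatekeeper, "tuberculosis")
print(correct, cost)
```

The point of the structure is that accuracy and cost come out of the same run: every request passes through the Gatekeeper, so spending is tallied as information is revealed.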

The MAI Diagnostic Orchestrator (MAI-DxO) is the standout innovation, acting as a virtual medical panel. By adopting roles such as Dr. Hypothesis, Dr. Test-Chooser, Dr. Challenger, Dr. Stewardship, and Dr. Checklist, MAI-DxO brings a collaborative, multi-perspective approach to diagnosis. This orchestration enables the AI to tackle bias, reduce costs, and consistently maintain high diagnostic standards.

Figure 5: Overview of the MAI-Dx Orchestrator. Credit: Microsoft

- Dr. Hypothesis: Maintains a probability-ranked differential diagnosis (a list of possible conditions) and updates probabilities in a Bayesian manner (adjusting beliefs based on new evidence).

- Dr. Test-Chooser: Selects diagnostic tests that maximally discriminate between leading hypotheses.

- Dr. Challenger: Acts as a devil's advocate to identify anchoring bias and contradictory evidence.

- Dr. Stewardship: Enforces cost-conscious care by advocating for cheaper alternatives and vetoing low-yield expensive tests.

- Dr. Checklist: Performs quality control to ensure valid test names and internal consistency.

This ensembling (combining outputs from multiple models or runs) and orchestrated approach aims to replicate team-based clinical reasoning, mitigate individual cognitive biases, and minimize cost.
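To make the roles of Dr. Hypothesis, Dr. Test-Chooser, and Dr. Stewardship more concrete, here is a small sketch of what a Bayesian update followed by a cost-capped test choice could look like. It is an illustration under assumptions, not the system's method: MAI-DxO drives these roles through a language model, whereas this sketch uses explicit numbers. The function names, the hypothesis labels, the probabilities, and the costs are all hypothetical.

```python
def bayes_update(prior, likelihood):
    """Dr. Hypothesis (sketch): posterior is proportional to prior * P(evidence | dx).

    prior:      {diagnosis: P(diagnosis)}
    likelihood: {diagnosis: P(observed evidence | diagnosis)}
    """
    unnormalized = {dx: p * likelihood.get(dx, 0.0) for dx, p in prior.items()}
    total = sum(unnormalized.values())
    if total == 0:
        # Evidence is impossible under every listed hypothesis;
        # keep the prior rather than divide by zero.
        return dict(prior)
    return {dx: value / total for dx, value in unnormalized.items()}


def choose_test(posterior, tests, max_cost):
    """Dr. Test-Chooser with a Dr. Stewardship cost cap (sketch).

    Picks the affordable test whose positive rate differs most between the
    two leading hypotheses. Assumes at least two hypotheses are listed.
    tests: {name: {"cost": dollars, "p_pos": {diagnosis: P(positive | dx)}}}
    """
    first, second = sorted(posterior, key=posterior.get, reverse=True)[:2]
    affordable = {n: t for n, t in tests.items() if t["cost"] <= max_cost}
    return max(
        affordable,
        key=lambda n: abs(affordable[n]["p_pos"][first] - affordable[n]["p_pos"][second]),
        default=None,  # nothing affordable: no test is recommended
    )


# Hypothetical numbers, for illustration only.
prior = {"A": 0.5, "B": 0.3, "C": 0.2}
posterior = bayes_update(prior, {"A": 0.1, "B": 0.8, "C": 0.5})
tests = {
    "cheap_test": {"cost": 50, "p_pos": {"A": 0.5, "B": 0.9, "C": 0.2}},
    "expensive_scan": {"cost": 2000, "p_pos": {"A": 0.5, "B": 0.95, "C": 0.05}},
}
print(posterior)
print(choose_test(posterior, tests, max_cost=500))
```

With a tight cost cap the cheaper test wins even though the expensive scan separates the two leading hypotheses slightly better, which is the trade-off the stewardship role is described as enforcing.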

Performance: Surpassing Physicians and Traditional AI

The results are remarkable. When paired with OpenAI’s o3 language model, MAI-DxO reached 80% diagnostic accuracy, a fourfold improvement over generalist physicians, who averaged just 20% on the same cases. The system also delivered significant savings, cutting diagnostic costs by 20% compared to physicians and by about 70% compared to off-the-shelf o3. For example, one MAI-DxO configuration achieved 79.9% accuracy at $2,397 per case, while off-the-shelf o3 reached 78.6% accuracy at $7,850.
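The headline percentages can be checked directly from the per-case figures quoted in this article ($2,397 for MAI-DxO, $7,850 for off-the-shelf o3, $2,963 and 19.9% accuracy for physicians). The short calculation below is only an arithmetic check; the helper name `pct_reduction` is introduced here for illustration.

```python
def pct_reduction(baseline: float, new: float) -> float:
    """Percentage by which `new` is lower than `baseline`."""
    return 100.0 * (baseline - new) / baseline


# Per-case figures as quoted in the article.
mai_dxo_cost = 2397.0
o3_cost = 7850.0
physician_cost = 2963.0
mai_dxo_accuracy = 79.9
physician_accuracy = 19.9

print(f"vs off-the-shelf o3: {pct_reduction(o3_cost, mai_dxo_cost):.1f}% cheaper")
print(f"vs physicians:       {pct_reduction(physician_cost, mai_dxo_cost):.1f}% cheaper")
print(f"accuracy ratio:      {mai_dxo_accuracy / physician_accuracy:.2f}x")
```

The results come out at roughly 69.5% and 19.1%, which is where the rounded "70%" and "20%" figures come from, and the accuracy ratio is just over four.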

What sets MAI-DxO apart is its model-agnostic design. It consistently improved accuracy, by an average of 11 percentage points, across diverse AI language models, making it both adaptable and future-ready as language technology evolves.

Implications: A Shift in Healthcare Paradigms

This research questions the traditional division of labor between generalists and specialists in medicine. The orchestrator’s polymathic reasoning shows it can span specialties with both depth and breadth, challenging whether AI should be measured against individual doctors or entire expert teams. This has profound implications for the future roles of clinicians in diagnostics.

MAI-DxO’s cost-aware, strategic approach aligns with the healthcare “Triple Aim”: better care, improved health, and reduced costs. By prioritizing affordable, high-value actions, it narrows diagnostic uncertainty more efficiently than both humans and less advanced AI systems.

Its strong performance on previously unseen cases highlights its potential for scalable adoption. By decoupling from any single language model, it offers resilience against rapid tech changes, a critical feature for resource-limited environments where access to specialist care is scarce. Here, AI-driven diagnosis could democratize expert-level care and improve equity.

Expanding Applications: Education and Consumer Tools

The interactive, realistic nature of SDBench and MAI-DxO holds promise beyond clinical settings. Medical students and practitioners could use these tools for immersive, AI-guided training. Looking to the future, such systems might power direct-to-consumer health tools, like triage apps, provided that safety and privacy issues are addressed.

Study Highlights: Rigorous Testing and Realistic Evaluation

The research evaluated MAI-DxO and other agents on 304 real-world cases, reserving the most recent ones as a hidden test set. Experienced human physicians achieved just 19.9% accuracy at an average cost of $2,963 per case. In contrast, MAI-DxO delivered about 80% accuracy at lower cost, and its performance held up on the previously unseen cases.

Alternative orchestrator designs, including budget-focused and ensemble panels, offered further improvements. Notably, ensemble panels pushed accuracy to 85.5% while still reducing costs compared to standard AI models.
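One simple way to picture an ensemble panel is a vote over the final diagnoses from several independent runs. The sketch below assumes plain majority voting over normalized strings; the article does not specify how the ensemble aggregates its runs, so the function name `ensemble_diagnosis` and the voting rule are assumptions made for illustration.

```python
from collections import Counter


def ensemble_diagnosis(run_outputs):
    """Return the most frequent diagnosis across runs (assumed majority vote).

    Diagnoses are compared case-insensitively after trimming whitespace.
    Ties go to the diagnosis that appeared first. Returns None if no run
    produced a non-empty diagnosis.
    """
    normalized = [d.strip().lower() for d in run_outputs if d.strip()]
    if not normalized:
        return None
    return Counter(normalized).most_common(1)[0][0]


# Hypothetical outputs from three runs of the same case.
print(ensemble_diagnosis(["Sarcoidosis", "sarcoidosis ", "Lymphoma"]))
```

Each additional run adds to the cost, so an ensemble trades extra spending for extra accuracy.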

The Future of AI-Driven Diagnosis

This study signals a new era for medical AI. By orchestrating an ensemble of virtual physician roles, the MAI Diagnostic Orchestrator delivers “superhuman” diagnostic performance at lower costs, setting a new benchmark for AI-assisted diagnosis. As healthcare systems move forward, innovations like these promise to augment clinical expertise, address critical workforce shortages, and expand access to high-quality care globally.

AI Sets New Standards in Medical Diagnosis: Outperforming Human Physicians in Accuracy and Cost

Sequential Diagnosis with Language Models