Harness the full potential of AI agents that act autonomously and use real-world tools directly on your PC, with Model Context Protocol (MCP) agents running on AMD Ryzen AI™ PCs powered by the lightweight, open-source Lemonade Server.

This integration brings cutting-edge local large language models (LLMs) and tool-calling capabilities to your desktop, opening doors for private, efficient, and versatile AI solutions.

What Makes Lemonade Server Special?

Lemonade Server acts as a local docking station for LLMs, allowing you to run powerful AI models natively, with no external servers or cloud APIs required. It’s designed for seamless integration with modern AI applications, including those built on the OpenAI standard, and connects with open-source frontends like Open WebUI. With its latest update, Lemonade now supports MCP, unlocking dynamic tool-use features for LLMs on AMD hardware.
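As a rough illustration of what "built on the OpenAI standard" means in practice, the sketch below builds a chat request for a locally running Lemonade Server using only the Python standard library. The base URL and model name come from the agent configuration used later in this article. The `v1/chat/completions` route and the response shape are assumptions based on the usual OpenAI layout, and the function names are made up for this example.

```python
import json
import urllib.request

# Base URL taken from the agent configuration in this article.
BASE_URL = "http://localhost:8000/api/"
# Assumption: the chat route follows the usual OpenAI layout under the base URL.
CHAT_PATH = "v1/chat/completions"


def build_chat_request(prompt: str, model: str = "Qwen3-8B-GGUF") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=BASE_URL + CHAT_PATH,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request; this only works while Lemonade Server is running."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

With the server running, `send(build_chat_request("Say hello."))` should return a JSON object. If the response follows the OpenAI shape, the reply text would sit under `choices[0].message.content`.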

The Power of Tiny Agents and MCP

Tiny Agents, a project from Hugging Face, leverages MCP to let LLMs autonomously interact with external tools such as web search, memory storage, and filesystem access. By connecting these tools via MCP, Tiny Agents enables the LLM to loop between conversation and tool usage until a task is complete, all on your local machine. This process not only enhances autonomy but also makes applications more context-aware and interactive.

- Autonomous Task Completion: LLMs can reason, fetch information, and act using local resources.

- Minimal Setup: Lemonade and Tiny Agents streamline the integration of advanced tool-calling, lowering barriers for developers and tinkerers alike.

- Flexible Model Support: Use your preferred models, including those accelerated by Vulkan for rapid, efficient inference on AMD GPUs.
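The loop between conversation and tool usage described above can be sketched in a few lines of Python. This is not the Tiny Agents implementation and it does not speak MCP. It is a simplified stand-in in which a scripted function plays the model and a local SQLite query plays the tool. All names here (`run_agent`, `scripted_model`, `make_query_tool`, the `products` table) are hypothetical.

```python
import json
import sqlite3
from typing import Callable


def make_query_tool(conn: sqlite3.Connection) -> Callable[[dict], str]:
    """Hypothetical tool: run a SQL statement and return the rows as JSON text."""
    def query(arguments: dict) -> str:
        rows = conn.execute(arguments["sql"]).fetchall()
        return json.dumps(rows)
    return query


def run_agent(model, tools, user_message, max_turns=5):
    """Alternate between model replies and tool calls until the model stops asking."""
    messages = [{"role": "user", "content": user_message}]
    for _ in range(max_turns):
        reply = model(messages)
        messages.append(reply)
        tool_calls = reply.get("tool_calls") or []
        if not tool_calls:
            # No tool requested: the model considers the task complete.
            return reply["content"]
        for call in tool_calls:
            result = tools[call["name"]](call["arguments"])
            messages.append({"role": "tool", "name": call["name"], "content": result})
    raise RuntimeError("agent did not finish within max_turns")


def scripted_model(messages):
    """Stand-in for the LLM: request one tool call, then answer from its result."""
    last = messages[-1]
    if last["role"] == "tool":
        count = json.loads(last["content"])[0][0]
        return {"role": "assistant", "content": f"There are {count} products."}
    return {
        "role": "assistant",
        "content": "",
        "tool_calls": [
            {"name": "query", "arguments": {"sql": "SELECT COUNT(*) FROM products"}}
        ],
    }
```

In a real setup the scripted function would be replaced by calls to the local LLM, and the tool dictionary by the tools that MCP servers advertise. The `max_turns` cap is a design choice in this sketch to keep a confused model from looping forever.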

How to Get Started

Launching a local Tiny Agent is straightforward. Here’s the basic workflow:

- Install Lemonade Server 7.0.2 or later (for example, via the installer), and launch it on your PC.

- Set up Node.js.

- Install the latest huggingface_hub with MCP support.

> lemonade-server serve

> pip install "huggingface_hub[mcp]>=0.32.4"

- Save a sample SQLite database (link) in your current folder, and save the agent configuration (agent.json) provided below.

# Agent.json

    {
      "model": "Qwen3-8B-GGUF",
      "endpointUrl": "http://localhost:8000/api/",
      "servers": [
        {
          "type": "stdio",
          "config": {
            "command": "C:\\Program Files\\nodejs\\npx.cmd",
            "args": [
              "-y",
              "mcp-server-sqlite-npx",
              "test.db"
            ]
          }
        }
      ]
    }

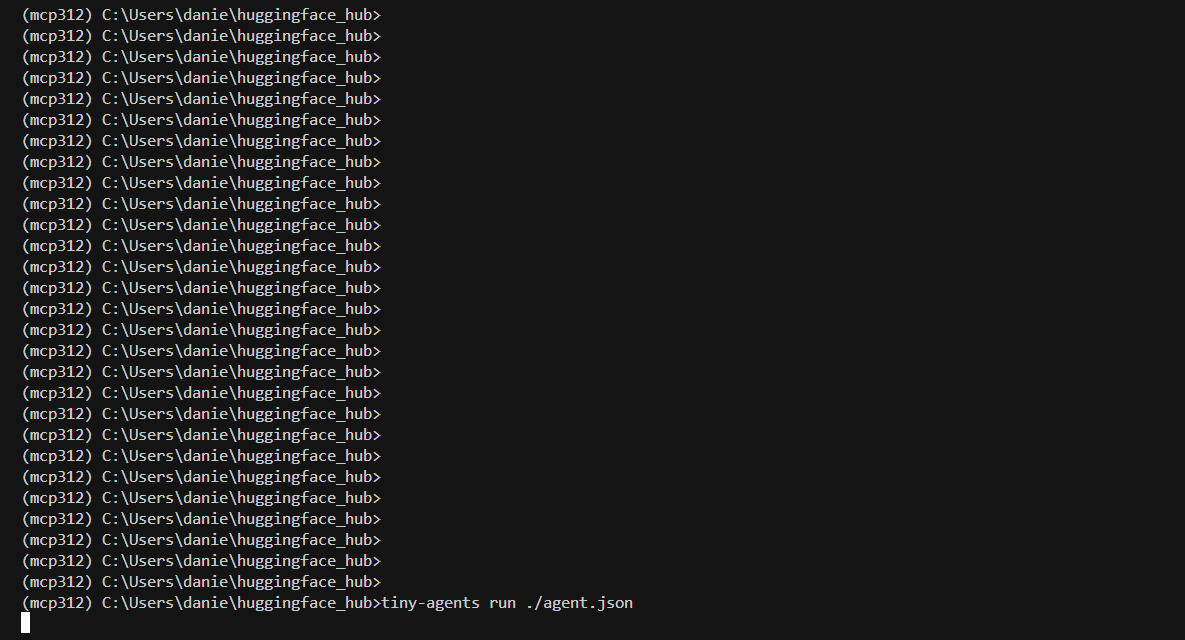

- Run your agent using the Tiny Agents CLI, enabling LLMs to interact with your SQLite database through MCP tool calls.

> tiny-agents run ./agent.json

In this setup, models like Qwen3-8B-GGUF, accelerated with Vulkan, demonstrate how LLMs can deliver fast, responsive inference while autonomously handling data queries and edits, all locally, without sacrificing privacy or incurring cloud costs.
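If you plan to try several models or databases, the agent.json from the steps above could be generated from a small script rather than edited by hand. The sketch below reproduces the same fields and values shown in this article. The helper names `build_agent_config` and `write_agent_config` are hypothetical, and the Node.js path is the one from the example, so it may differ on your machine.

```python
import json
from pathlib import Path


def build_agent_config(model: str, endpoint_url: str, npx_command: str, db_file: str) -> dict:
    """Return a config dict with the same structure as the agent.json in this article."""
    return {
        "model": model,
        "endpointUrl": endpoint_url,
        "servers": [
            {
                "type": "stdio",
                "config": {
                    "command": npx_command,
                    "args": ["-y", "mcp-server-sqlite-npx", db_file],
                },
            }
        ],
    }


def write_agent_config(path: Path, config: dict) -> None:
    """Serialize the config; json.dumps handles the backslash escaping in Windows paths."""
    path.write_text(json.dumps(config, indent=2), encoding="utf-8")


config = build_agent_config(
    model="Qwen3-8B-GGUF",
    endpoint_url="http://localhost:8000/api/",
    # Path copied from the example above; adjust it to your Node.js install.
    npx_command=r"C:\Program Files\nodejs\npx.cmd",
    db_file="test.db",
)
```

Calling `write_agent_config(Path("agent.json"), config)` would then produce a file that can be passed to `tiny-agents run ./agent.json`. Building the dict in code avoids hand-escaping the backslashes in the Windows path, which is an easy place to introduce invalid JSON.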

Scaling Up: Advanced MCP Integrations

The possibilities extend far beyond simple database queries. The MCP ecosystem boasts hundreds of available servers, from calculators to image editing frameworks. Resources like awesome-mcp-servers and mcpservers.org make it easy to discover or build custom MCP setups tailored to your workflow.

For developers seeking maximum performance, Lemonade supports hybrid models that leverage both NPU and iGPU acceleration, ideal for the latest AMD Ryzen AI 300 series. These hybrid models, such as Llama-xLAM-2-8b-fc-r-Hybrid, are fine-tuned for tool-calling and deliver exceptionally snappy response times.

Why Local MCP Agents Matter

Running Tiny Agents and MCP-powered tools locally on Lemonade Server redefines what’s possible with AI on personal devices:

- Privacy: All data and processing remain on your device, a crucial advantage for sensitive information.

- No Ongoing Costs: Local execution eliminates API usage fees, making it sustainable for experimentation and production workloads.

- Extensibility: The vast MCP ecosystem lets you augment your agents with a wide array of tools and services as your needs evolve.

This approach empowers developers and researchers to build truly autonomous, practical LLM applications that act, not just respond, ushering in a new era of on-device AI intelligence.

Ready to Build?

Setting up your first local agent is just the beginning. The Lemonade community encourages exploration, feedback, and contributions. Dive into the documentation, experiment with different models and tools, and share your results or ideas via GitHub or by emailing the Lemonade team. With every new agent, you’re bringing the future of private, practical AI closer to reality.

Unlocking Local AI Autonomy: MCP Agents on Ryzen AI with Lemonade Server