Artificial intelligence is advancing at a remarkable pace, but its true leap lies in enhancing reasoning skills. Microsoft Research is at the forefront, developing innovative approaches that empower both small and large language models to reason more logically, solve complex math problems, and generalize across different domains. These advances promise to increase AI's reliability and versatility in fields like education, science, and healthcare.

Empowering Small Language Models to Reason

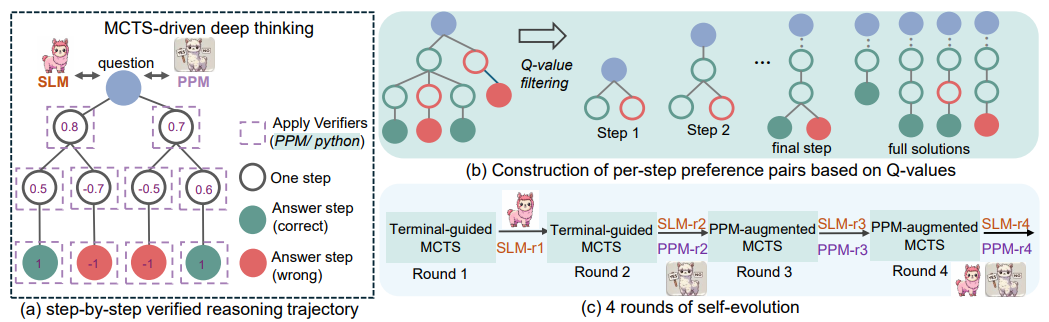

Small language models often fall short on deep reasoning because of their limited capacity and heavy reliance on pattern recognition. To address this, Microsoft introduced rStar-Math, a method that uses Monte Carlo Tree Search (MCTS) to carry out mathematical reasoning step by step.

Figure 1. The rStar-Math framework

rStar-Math stands on three core pillars:

- Problem decomposition: Breaking down challenging problems into manageable steps.

- Process preference modeling (PPM): Training a model to score the quality of each intermediate reasoning step, so that promising steps can be favored over weak ones.

- Iterative refinement: Repeatedly improving both the policy model, which proposes reasoning steps, and the PPM, which evaluates them, through rounds of self-improvement.
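The search behind these pillars is Monte Carlo Tree Search over partial solutions. The following is a generic MCTS sketch, not rStar-Math's implementation: it assumes a toy task (reach the number 11 from 2 with at most four operations), treats each operation as a "reasoning step", and replaces both the policy model and the PPM with hand-written, hypothetical stand-ins (`ACTIONS` and `step_score`).

```python
import math
import random
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

# Hypothetical toy task: reach TARGET from START using at most MAX_DEPTH steps.
START, TARGET, MAX_DEPTH = 2, 11, 4
ACTIONS = ("+3", "*2", "-1")  # stand-ins for model-proposed reasoning steps


def apply_step(state: int, action: str) -> int:
    """Apply one candidate step to the current partial solution."""
    if action == "+3":
        return state + 3
    if action == "*2":
        return state * 2
    return state - 1


def step_score(state: int) -> float:
    """Stand-in for a learned step scorer: 1.0 at the target, lower when farther away."""
    return 1.0 / (1.0 + abs(TARGET - state))


@dataclass(eq=False)
class Node:
    state: int
    depth: int
    path: Tuple[str, ...] = ()
    parent: Optional["Node"] = None
    children: Dict[str, "Node"] = field(default_factory=dict)
    visits: int = 0
    value: float = 0.0

    def is_terminal(self) -> bool:
        return self.depth >= MAX_DEPTH or self.state == TARGET

    def uct(self, c: float = 1.4) -> float:
        """Upper confidence bound: mean value plus an exploration bonus."""
        if self.visits == 0:
            return float("inf")
        bonus = c * math.sqrt(math.log(self.parent.visits) / self.visits)
        return self.value / self.visits + bonus


def mcts(iterations: int = 500, seed: int = 0) -> Tuple[Tuple[str, ...], int]:
    rng = random.Random(seed)
    root = Node(START, 0)
    for _ in range(iterations):
        node = root
        # 1. Selection: follow the highest-UCT child while the node is fully expanded.
        while not node.is_terminal() and len(node.children) == len(ACTIONS):
            node = max(node.children.values(), key=lambda n: n.uct())
        # 2. Expansion: add one untried step below a non-terminal node.
        if not node.is_terminal():
            action = next(a for a in ACTIONS if a not in node.children)
            child = Node(apply_step(node.state, action), node.depth + 1,
                         node.path + (action,), node)
            node.children[action] = child
            node = child
        # 3. Simulation: random steps to the depth limit, keeping the best score seen.
        state, depth, reward = node.state, node.depth, step_score(node.state)
        while depth < MAX_DEPTH and state != TARGET:
            state = apply_step(state, rng.choice(ACTIONS))
            depth += 1
            reward = max(reward, step_score(state))
        # 4. Backpropagation: update statistics on the path back to the root.
        while node is not None:
            node.visits += 1
            node.value += reward
            node = node.parent
    # Read out a solution by following the most-visited child at each level.
    node = root
    while node.children:
        node = max(node.children.values(), key=lambda n: n.visits)
    return node.path, node.state
```

Calling `mcts()` returns the chosen step sequence and the state it reaches. In a real system a language model would propose the candidate steps and a trained process reward model would score them; swapping those in changes the two stand-in functions but not the select, expand, simulate, and backpropagate loop.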

With this approach, small models (1.5B–7B parameters) reach scores in competitive math settings comparable to those of the top 20% of U.S. high school students.

The Logic-RL framework complements this work with reinforcement learning driven by carefully structured prompts and rule-based rewards. Models earn rewards only for correct, logically sound solutions, which enforces a disciplined reasoning process and yields accuracy improvements of up to 125% over the baseline model.
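Because the reward is rule-based rather than learned, it can be written as an ordinary function. A minimal sketch, assuming completions must place reasoning inside `<think>` tags and the final answer inside `<answer>` tags, with an exact string match against the reference answer; the tag names and all score values are illustrative assumptions, not Logic-RL's published settings.

```python
import re

# Illustrative score values (assumptions, not Logic-RL's published settings).
FORMAT_OK, FORMAT_BAD = 1.0, -1.0
ANSWER_OK, ANSWER_WRONG, ANSWER_MISSING = 2.0, -1.5, -2.0

# Assumed output format: reasoning in <think> tags, then the answer in <answer> tags.
RESPONSE = re.compile(r"<think>(.+?)</think>\s*<answer>(.+?)</answer>", re.DOTALL)


def rule_based_reward(completion: str, gold_answer: str) -> float:
    """Score a completion using only fixed rules: format first, then exact-match correctness."""
    match = RESPONSE.fullmatch(completion.strip())
    if match is None:
        # Unparseable output earns the format penalty and no credit for any answer.
        return FORMAT_BAD + ANSWER_MISSING
    answer = match.group(2).strip()
    if answer == gold_answer.strip():
        return FORMAT_OK + ANSWER_OK
    return FORMAT_OK + ANSWER_WRONG


print(rule_based_reward("<think>A lies, so B is a knight.</think><answer>B</answer>", "B"))  # 3.0
```

With these values, a well-formed but wrong answer still scores below zero (-0.5), so the policy gains nothing from producing tidy-looking guesses.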

Achieving Mathematical Rigor with Formal Methods

For AI to excel in mathematics, it must marry pattern recognition with formal precision. The LIPS system (LLM-based Inequality Prover with Symbolic Reasoning) does just that, blending large language models with symbolic solvers.

LIPS decides when to apply symbolic computation and when to rely on natural-language reasoning, achieving state-of-the-art results on Olympiad-level inequality problems without any additional training data.

Figure 2. An overview of LIPS
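One ingredient of such a hybrid loop is a cheap symbolic-side check that rejects false candidate goals before any effort is spent on them. The sketch below is an illustrative assumption rather than LIPS's procedure: candidate rewrites (as a language model might propose them) are written as Python predicates over positive reals `a`, `b`, `c`, and random sampling searches for a counterexample. Surviving the search only means a goal was not refuted; it is not a proof.

```python
import random
from typing import Callable, Dict, Optional, Tuple

Goal = Callable[[float, float, float], bool]
Point = Tuple[float, float, float]


def find_counterexample(goal: Goal, trials: int = 2000, seed: int = 0) -> Optional[Point]:
    """Return a point with positive coordinates that violates the goal, or None."""
    rng = random.Random(seed)
    for _ in range(trials):
        a, b, c = (rng.uniform(0.01, 10.0) for _ in range(3))
        if not goal(a, b, c):
            return (a, b, c)
    return None


def filter_candidates(candidates: Dict[str, Goal]) -> Dict[str, Goal]:
    """Keep only the candidate goals that no sampled point refutes."""
    return {name: goal for name, goal in candidates.items()
            if find_counterexample(goal) is None}


# Hypothetical LLM-proposed goals; the small tolerance absorbs floating-point rounding.
candidates: Dict[str, Goal] = {
    "two_term_am_gm": lambda a, b, c: a * a + b * b >= 2 * a * b - 1e-9,
    "sum_of_squares": lambda a, b, c: a * a + b * b + c * c >= a * b + b * c + c * a - 1e-9,
    "bogus_ordering": lambda a, b, c: a >= b,
}
print(sorted(filter_candidates(candidates)))  # ['sum_of_squares', 'two_term_am_gm']
```

The two true inequalities survive, while `a >= b` is refuted almost immediately. A filter like this is cheap enough to run on every proposal, leaving the expensive steps (full symbolic proof or further LLM reasoning) for goals that are still plausible.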

Translating real-world math problems into formal, machine-readable language is challenging. Microsoft’s new framework evaluates candidate translations using two signals: symbolic equivalence (whether candidates are logically identical) and semantic consistency (measured by embedding similarity). This method improves formalization accuracy by up to 1.35 times across diverse datasets.
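The two signals can be combined into a single ranking over several candidate formalizations of one problem. A minimal sketch under stated assumptions: equivalence is approximated by comparing expressions at random points (a real system would use a prover), a bag-of-words vector stands in for a learned embedding, each candidate is assumed to come with a back-translation into English, and the equal 0.5 weighting is arbitrary. All function names are hypothetical.

```python
import math
import random
from collections import Counter
from typing import Callable, List, Tuple

Expr = Callable[[float], float]


def equivalent(f: Expr, g: Expr, trials: int = 200, seed: int = 0) -> bool:
    """Stand-in for a prover-based equivalence check: compare values at random points."""
    rng = random.Random(seed)
    for _ in range(trials):
        x = rng.uniform(-10.0, 10.0)
        if not math.isclose(f(x), g(x), rel_tol=1e-9, abs_tol=1e-9):
            return False
    return True


def embed(text: str) -> Counter:
    """Stand-in for a sentence embedding: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(u: Counter, v: Counter) -> float:
    dot = sum(count * v[word] for word, count in u.items())
    norm = math.sqrt(sum(c * c for c in u.values())) * math.sqrt(sum(c * c for c in v.values()))
    return dot / norm if norm else 0.0


def rank_formalizations(problem: str, candidates: List[Tuple[Expr, str]],
                        weight: float = 0.5) -> List[int]:
    """Rank (formal_expr, back_translation) pairs by a blend of both signals, best first."""
    scores = []
    for i, (expr, back_translation) in enumerate(candidates):
        others = [e for j, (e, _) in enumerate(candidates) if j != i]
        # Symbolic signal: share of the other candidates that are equivalent to this one.
        symbolic = sum(equivalent(expr, e) for e in others) / len(others) if others else 1.0
        # Semantic signal: similarity between the original problem and the back-translation.
        semantic = cosine(embed(problem), embed(back_translation))
        scores.append(weight * symbolic + (1.0 - weight) * semantic)
    return sorted(range(len(candidates)), key=lambda i: scores[i], reverse=True)


problem = "twice a number plus three"
candidates = [
    (lambda x: 2 * x + 3, "two times x plus three"),
    (lambda x: x * 2 + 3, "twice a number plus three"),
    (lambda x: 2 * (x + 3), "twice the sum of a number and three"),
]
print(rank_formalizations(problem, candidates))  # [1, 0, 2]
```

Candidate 1 ranks first because it agrees symbolically with another candidate and its back-translation matches the problem wording; candidate 2 reads plausibly but disagrees with both others.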

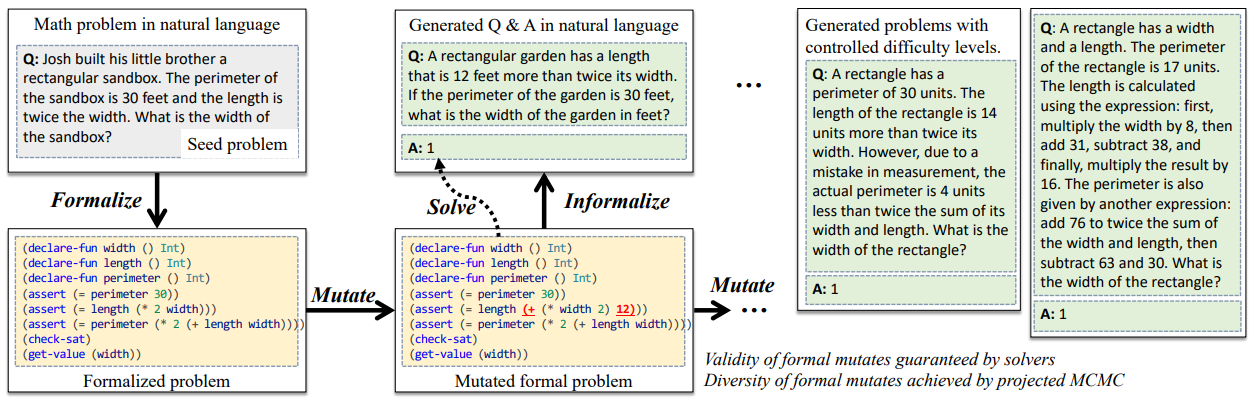

Figure 3. An overview of the neuro-symbolic data generation framework

To combat data scarcity, Microsoft’s neuro-symbolic framework automatically generates high-quality math problems. Symbolic solvers create structured questions, while language models transform them into natural language, enriching training and evaluation resources.
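The division of labor can be sketched in a few lines: the symbolic side constructs a problem together with an exactly verified answer, and a separate step turns it into prose. In this sketch the problem family (one-variable linear equations) is an arbitrary choice, and `verbalize` is a fixed string template standing in for the language model; every name here is hypothetical.

```python
import random
from dataclasses import dataclass
from fractions import Fraction
from typing import List, Tuple


@dataclass(frozen=True)
class LinearProblem:
    a: int
    b: int
    c: int
    solution: Fraction


def generate_symbolic_problem(rng: random.Random) -> LinearProblem:
    """Symbolic side: build a*x + b = c around a chosen integer x, then solve it exactly."""
    a = rng.choice([n for n in range(-9, 10) if n != 0])
    x = rng.randint(-20, 20)
    b = rng.randint(-50, 50)
    c = a * x + b
    solution = Fraction(c - b, a)  # derived from the equation itself, in exact arithmetic
    if a * solution + b != c:
        raise ValueError("generated problem failed verification")
    return LinearProblem(a, b, c, solution)


def verbalize(p: LinearProblem) -> str:
    """Stand-in for the language-model step: a fixed template instead of an LLM rewrite."""
    return (f"A number is multiplied by {p.a}, and then {p.b} is added to the product. "
            f"The result is {p.c}. What is the number?")


def build_dataset(n: int, seed: int = 0) -> List[Tuple[str, Fraction]]:
    rng = random.Random(seed)
    return [(verbalize(p), p.solution) for p in (generate_symbolic_problem(rng) for _ in range(n))]


for question, answer in build_dataset(2):
    print(question, "->", answer)
```

Because the answer is derived and checked symbolically before verbalization, a faulty rewrite can at worst garble the wording, not the label; checking that the prose still matches the equation would be a separate step.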

Generalizing Reasoning Skills Across Fields

One of AI’s greatest challenges is generalizing skills across domains. Microsoft researchers found that training models on mathematical problems also improves their performance on coding and science tasks.

The Chain-of-Reasoning (CoR) approach unifies reasoning across natural language, code, and symbolic forms, adapting dynamically to different problem types and delivering strong results on major math benchmarks.
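To make "one chain, several paradigms" concrete, the toy chain below mixes a natural-language plan, an executed code step, and a symbolic closed form, and commits to an answer only when the code and symbolic results agree. It is a hand-written illustration with hypothetical names; in CoR the chain is generated by a trained model rather than fixed in code.

```python
from dataclasses import dataclass
from fractions import Fraction
from typing import List, Optional


@dataclass
class Step:
    paradigm: str                    # "language", "code", or "symbolic"
    content: str
    result: Optional[Fraction] = None


def chain_of_reasoning(n: int) -> List[Step]:
    """Hypothetical mixed-paradigm chain for the question 'What is 1 + 2 + ... + n?'"""
    chain = [Step("language", f"Add the integers 1 through {n}, then confirm with a closed form.")]
    # Code step: compute the total by actually executing a loop.
    total = Fraction(sum(range(1, n + 1)))
    chain.append(Step("code", f"sum(range(1, {n} + 1))", total))
    # Symbolic step: evaluate the closed form n(n + 1)/2 exactly.
    closed_form = Fraction(n * (n + 1), 2)
    chain.append(Step("symbolic", "n(n + 1) / 2", closed_form))
    # Final language step: commit to an answer only if the paradigms agree.
    if total == closed_form:
        chain.append(Step("language", f"Both methods give {total}.", total))
    else:
        chain.append(Step("language", "The methods disagree; revisit the reasoning."))
    return chain


for step in chain_of_reasoning(100):
    print(f"[{step.paradigm}] {step.content}")
```

The cross-check is the point of mixing paradigms: the executed loop and the symbolic formula fail in different ways, so agreement between them is stronger evidence than either result alone.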

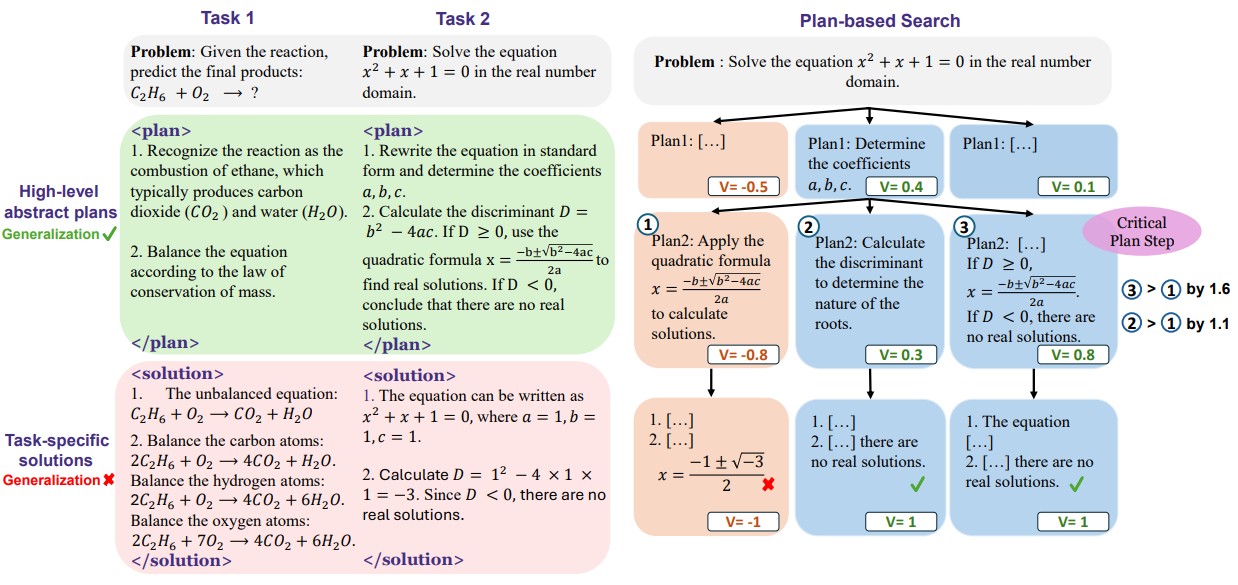

Traditional models often get stuck in domain-specific thinking. The Critical Plan Step Learning (CPL) method counters this by teaching models to approach problems as experts do: breaking down tasks, identifying key knowledge, and making strategic decisions.

CPL combines two key components: plan-based MCTS, which searches multi-step solution paths and constructs planning trees, and Step-APO (step-level advantage preference optimization), which learns preferences for strong intermediate steps while filtering out weak ones. Together, they strengthen reasoning and improve generalization across tasks, moving AI systems closer to the flexible thinking that characterizes human intelligence.

Figure 4. Overview of the CPL framework
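The step-level preference half of CPL can be sketched as follows, assuming plan-based MCTS has already attached a value estimate to each candidate step and that log-probabilities under the trained and reference models are available. Sibling steps become (chosen, rejected) pairs, near-ties are filtered out, and a standard DPO-style loss is applied. This is a stand-in: Step-APO is described as folding MCTS advantage estimates into the DPO objective itself, which this sketch does not reproduce, and the `min_gap` and `beta` defaults are arbitrary.

```python
import math
from dataclasses import dataclass
from itertools import combinations
from typing import List, Tuple


@dataclass
class StepCandidate:
    text: str
    value: float        # search-based value estimate for this step (assumed given)
    logp_policy: float  # log-probability of the step under the model being trained
    logp_ref: float     # log-probability under a frozen reference model


Pair = Tuple[StepCandidate, StepCandidate]


def build_pairs(siblings: List[StepCandidate], min_gap: float = 0.1) -> List[Pair]:
    """Pair sibling steps (same parent) into (chosen, rejected), skipping near-ties.

    Siblings share a parent, so the gap in their advantages equals the gap in values.
    """
    pairs = []
    for x, y in combinations(siblings, 2):
        if abs(x.value - y.value) >= min_gap:
            pairs.append((x, y) if x.value > y.value else (y, x))
    return pairs


def neg_log_sigmoid(m: float) -> float:
    """Numerically stable -log(sigmoid(m))."""
    return math.log1p(math.exp(-m)) if m >= 0 else -m + math.log1p(math.exp(m))


def step_preference_loss(pairs: List[Pair], beta: float = 0.1) -> float:
    """Mean DPO-style loss over step pairs."""
    losses = []
    for chosen, rejected in pairs:
        margin = beta * ((chosen.logp_policy - chosen.logp_ref)
                         - (rejected.logp_policy - rejected.logp_ref))
        losses.append(neg_log_sigmoid(margin))
    return sum(losses) / len(losses) if losses else 0.0


siblings = [
    StepCandidate("factor the quadratic", 0.90, -1.0, -1.2),
    StepCandidate("guess and check", 0.40, -1.5, -1.3),
    StepCandidate("complete the square", 0.85, -1.1, -1.1),
]
pairs = build_pairs(siblings)
print([(c.text, r.text) for c, r in pairs])
print(step_preference_loss(pairs))
```

The two strong steps are never paired against each other because their values differ by less than `min_gap`; only the clearly weaker "guess and check" step serves as the rejected side. Minimizing the loss raises the trained model's relative probability of preferred steps.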

Building Trustworthy, High-Performing AI

These innovations signal a shift toward more trustworthy and capable AI. By integrating symbolic, formal, and multi-paradigm methods, Microsoft edges language models closer to human-like problem-solving.

The journey isn’t over. Challenges such as ensuring accuracy and preventing hallucinations remain, especially in high-stakes fields like healthcare. Ongoing research with tools such as AutoVerus (automated proof generation for code) and Alchemy (neural theorem proving) aims to address these issues.

As these reasoning methods mature, they lay the groundwork for AI systems that can support critical decisions in research, education, and beyond with greater confidence and reliability.

Revolutionizing AI Reasoning: Microsoft Research's Breakthroughs for Language Models