The Model Context Protocol (MCP) is changing how AI models connect to external tools and data. As organizations connect more of their tools to models, building MCP servers that scale and perform well has become a central concern for developers.

Vercel and the MCP Ecosystem

Vercel, a cloud platform for frontend development, offers a streamlined environment for deploying AI server integrations. Its "Frontend Cloud" provides the tools and infrastructure to build, scale, and secure modern web applications, including those built on the Model Context Protocol.

Two pieces matter most here. Vercel's Fluid compute reduces the overhead of keeping persistent connections open, and its MCP adapter, built for hosting on Vercel Functions, lets servers adopt newer transports such as Streamable HTTP. Together they make it straightforward to deploy high-performing MCP servers that a wide range of tools and clients can connect to.

The Importance of MCP and Its Expanding Ecosystem

MCP offers a standardized framework for connecting AI models to tools, simplifying interoperability. Companies including Zapier, Composio, Vapi, and Solana have used the MCP adapter to deploy their own servers on Vercel, contributing to the ecosystem's rapid growth. Clients such as Cursor, Claude, and Windsurf now support connecting to MCP servers, which encourages broader adoption and compatibility across the industry.

Advancing Transport Protocols: Moving Beyond SSE

Initially, the MCP specification provided two transports: standard input/output (stdio) for local interactions, and HTTP with Server-Sent Events (SSE) for remote connections. While SSE enabled remote hosting, it required keeping a connection open for every client, even when idle, which led to significant server overhead at scale. Vercel’s Fluid compute eased some of this burden, but the underlying inefficiency of SSE remained.
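Under that original HTTP+SSE transport, the client opened a long-lived GET request, the server's first event named the URL to POST messages to, and every response then came back on the still-open stream. A minimal TypeScript sketch of reading such a stream, assuming the `endpoint` event name from the 2024-11-05 specification; `parseSseEvents`, `fieldValue`, and the session URL are hypothetical, and the parser handles only the `event` and `data` fields.

```typescript
// One Server-Sent Events message: its event type and its (joined) data lines.
interface SseEvent {
  event: string;
  data: string;
}

// Strip the field name, the colon, and the single optional space after it.
function fieldValue(line: string, name: string): string {
  const value = line.slice(name.length + 1);
  return value.startsWith(" ") ? value.slice(1) : value;
}

// Minimal SSE parser: messages are separated by a blank line, and only the
// `event` and `data` fields are handled (no `id`, `retry`, or comments).
function parseSseEvents(text: string): SseEvent[] {
  const events: SseEvent[] = [];
  for (const block of text.split(/\r?\n\r?\n/)) {
    let event = "message"; // default SSE event type
    const data: string[] = [];
    for (const line of block.split(/\r?\n/)) {
      if (line.startsWith("event:")) event = fieldValue(line, "event");
      else if (line.startsWith("data:")) data.push(fieldValue(line, "data"));
    }
    if (data.length > 0) events.push({ event, data: data.join("\n") });
  }
  return events;
}

// Hypothetical bytes from a legacy server: first the `endpoint` event telling
// the client where to POST, later a JSON-RPC response on the same open stream.
const legacyStream =
  "event: endpoint\ndata: /messages?sessionId=abc123\n\n" +
  'event: message\ndata: {"jsonrpc":"2.0","id":1,"result":{}}\n\n';

for (const { event, data } of parseSseEvents(legacyStream)) {
  console.log(`${event}: ${data}`);
}
```

Because responses to later POSTs arrive on this stream, the GET request has to stay open for the whole session, even when nothing is happening.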

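To address this, the March 2025 revision of the MCP specification introduced Streamable HTTP. Clients send each message as an ordinary HTTP POST to a single endpoint, and the server answers with either a plain JSON response or, when it needs to stream, an SSE stream scoped to that request. Because no connection has to stay open between messages, the transport is well suited to scalable, cloud-hosted MCP servers.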
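Concretely, each client-to-server message becomes one self-contained request whose `Accept` header advertises both response types the server may choose. A minimal sketch of building that request as a plain object compatible with `fetch`, assuming the `2025-03-26` protocol version string; the `buildMcpPost` helper, the client name, and the `/mcp` URL are illustrative placeholders.

```typescript
// A JSON-RPC 2.0 request, the message format MCP uses on every transport.
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: Record<string, unknown>;
}

// A plain object that can be passed to fetch() as its init argument.
interface McpPost {
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Hypothetical helper: wrap one JSON-RPC message in a Streamable HTTP POST.
function buildMcpPost(message: JsonRpcRequest, sessionId?: string): McpPost {
  const headers: Record<string, string> = {
    "Content-Type": "application/json",
    // The server may answer with a single JSON body or an SSE stream.
    Accept: "application/json, text/event-stream",
  };
  // A stateful server returns a session ID on initialize; echo it afterwards.
  if (sessionId !== undefined) headers["Mcp-Session-Id"] = sessionId;
  return { method: "POST", headers, body: JSON.stringify(message) };
}

const init = buildMcpPost({
  jsonrpc: "2.0",
  id: 1,
  method: "initialize",
  params: {
    protocolVersion: "2025-03-26",
    capabilities: {},
    clientInfo: { name: "example-client", version: "0.1.0" },
  },
});

// Against a live server (placeholder URL), each message is one ordinary request:
// const res = await fetch("https://example.com/mcp", init);
console.log(init.headers.Accept);
```

Between calls the client holds nothing open on the server, which is the property that removes the idle-connection cost.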

Streamable HTTP accommodates both stateless and stateful architectures, providing greater flexibility for deployments.
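In the specification, the stateful option hinges on the `Mcp-Session-Id` header: a server may assign a session ID when it answers `initialize`, and the client then sends it on every later request. A minimal sketch of the server-side decision under both models, assuming a single in-memory store; the `SessionGate` class and the ID format are hypothetical, while the 400 and 404 status codes follow the specification's guidance for missing and unknown sessions.

```typescript
// Which state model the server runs in.
type Mode = "stateless" | "stateful";

// Outcome for one incoming POST: HTTP status plus the session ID to return, if any.
interface Admission {
  status: number;
  sessionId?: string;
}

// Hypothetical gatekeeper illustrating the two models; not the adapter's internals.
class SessionGate {
  private readonly mode: Mode;
  private readonly sessions = new Set<string>();
  private counter = 0;

  constructor(mode: Mode) {
    this.mode = mode;
  }

  admit(method: string, sessionHeader?: string): Admission {
    // Stateless: every request stands alone, so any instance can serve it.
    if (this.mode === "stateless") return { status: 200 };

    // Stateful: `initialize` mints an ID for the Mcp-Session-Id response header.
    if (method === "initialize") {
      const id = `session-${++this.counter}`;
      this.sessions.add(id);
      return { status: 200, sessionId: id };
    }
    if (sessionHeader === undefined) return { status: 400 }; // missing session ID
    if (!this.sessions.has(sessionHeader)) return { status: 404 }; // unknown or expired
    return { status: 200, sessionId: sessionHeader };
  }
}

const gate = new SessionGate("stateful");
const first = gate.admit("initialize"); // { status: 200, sessionId: "session-1" }
console.log(gate.admit("tools/list").status); // 400
console.log(gate.admit("tools/list", first.sessionId).status); // 200
```

An in-memory set only works when one instance handles every request; a multi-instance deployment would need shared session storage, which is why the stateless model is the simpler fit for serverless hosting.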

Advantages of Streamable HTTP

- Reduces server resource consumption by eliminating idle persistent connections

- Enhances scalability for cloud-hosted MCP servers

- Supports both stateful and stateless models with ease

Maintaining Compatibility: Bridging Old and New

Despite its advantages, Streamable HTTP adoption has been gradual, mainly due to the prevalence of legacy clients that only support stdio and SSE.

To facilitate a smoother transition, the Vercel MCP adapter provides support for both Streamable HTTP and SSE, allowing developers to migrate at their own pace.
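On the client side, the MCP specification's backwards-compatibility guidance describes how to talk to both generations of servers: POST an `initialize` request to the server URL first, and if that fails with a 4xx status, fall back to the legacy flow of opening a GET stream and waiting for its `endpoint` event. A minimal sketch of that probe, with `fetch` injected so it can run offline; `detectTransport` and `FetchLike` are hypothetical names, not part of the adapter or any SDK.

```typescript
// Transport a client settles on after probing the server.
type Transport = "streamable-http" | "sse";

// Minimal fetch-like signature so the sketch can be exercised with a stub.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body?: string },
) => Promise<{ status: number }>;

// POST an InitializeRequest; 2xx means Streamable HTTP, 4xx suggests a legacy server.
async function detectTransport(url: string, fetchFn: FetchLike): Promise<Transport> {
  const res = await fetchFn(url, {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Accept: "application/json, text/event-stream",
    },
    body: JSON.stringify({
      jsonrpc: "2.0",
      id: 1,
      method: "initialize",
      params: {
        protocolVersion: "2025-03-26",
        capabilities: {},
        clientInfo: { name: "example-client", version: "0.1.0" },
      },
    }),
  });
  if (res.status >= 200 && res.status < 300) return "streamable-http";
  // Next step for a legacy server: GET the URL and wait for the `endpoint` event.
  if (res.status >= 400 && res.status < 500) return "sse";
  throw new Error(`Unexpected status ${res.status} from ${url}`);
}

// Offline usage with stubbed responses (placeholder URLs).
detectTransport("https://example.com/mcp", async () => ({ status: 200 })).then(console.log); // resolves to "streamable-http"
detectTransport("https://example.com/sse", async () => ({ status: 405 })).then(console.log); // resolves to "sse"
```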

The mcp-remote package further bridges this gap by acting as a proxy, enabling stdio clients to communicate with Streamable HTTP servers with minimal changes.

How mcp-remote Supports Migration

- Stdio-only clients can reach a Streamable HTTP server through mcp-remote, with no changes to the server

- This compatibility layer can be phased out as more clients add support for Streamable HTTP
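In practice, a stdio-only client is pointed at mcp-remote rather than at the server: the client spawns `npx mcp-remote <url>` as if it were a local server, and mcp-remote relays the traffic over HTTP. A sketch of producing such an entry, assuming a Claude Desktop-style `mcpServers` map with `command` and `args` fields; the server name, the deployment URL, the `/mcp` path, and the `viaMcpRemote` helper are placeholders.

```typescript
// Shape of one stdio server entry in a client config: the command to spawn.
interface StdioServerEntry {
  command: string;
  args: string[];
}

// Hypothetical helper: launch mcp-remote as the "local" server, pointed at a
// remote Streamable HTTP endpoint.
function viaMcpRemote(serverUrl: string): StdioServerEntry {
  return { command: "npx", args: ["mcp-remote", serverUrl] };
}

const config = {
  mcpServers: {
    "dice-server": viaMcpRemote("https://your-app.vercel.app/mcp"),
  },
};

// Print the JSON to paste into the client's config file.
console.log(JSON.stringify(config, null, 2));
```

When a client gains native Streamable HTTP support, this entry can be swapped for a direct URL and the proxy retired.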

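```ts
import { createMcpHandler } from '@vercel/mcp-adapter';
import { z } from 'zod';

// Build a request handler and register the server's tools on it.
const handler = createMcpHandler(server => {
  server.tool(
    'roll_dice',                        // tool name
    'Rolls an N-sided die',             // description shown to clients
    { sides: z.number().int().min(2) }, // input schema, validated with zod
    async ({ sides }) => {
      // Uniform integer in 1..sides
      const value = 1 + Math.floor(Math.random() * sides);
      return { content: [{ type: 'text', text: `🎲 You rolled a ${value}!` }] };
    }
  );
});

// The same handler serves every HTTP method the transports use.
export { handler as GET, handler as POST, handler as DELETE };
```

An example MCP server with a single tool call.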
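Once the server is deployed, a client invokes `roll_dice` by sending a JSON-RPC `tools/call` request over whichever transport it negotiated. A small sketch of that message, plus the handler's dice formula pulled into a function with an injectable random source so its 1..sides range can be checked; `callTool` and `rollDie` are hypothetical helpers, not exports of the adapter.

```typescript
// JSON-RPC request a client sends to invoke a tool by name.
function callTool(id: number, name: string, args: Record<string, unknown>) {
  return {
    jsonrpc: "2.0" as const,
    id,
    method: "tools/call",
    params: { name, arguments: args },
  };
}

// Same formula as the roll_dice handler, with the random source injectable
// so the bounds can be checked deterministically.
function rollDie(sides: number, random: () => number = Math.random): number {
  return 1 + Math.floor(random() * sides);
}

const callRequest = callTool(1, "roll_dice", { sides: 6 });
console.log(JSON.stringify(callRequest));
// {"jsonrpc":"2.0","id":1,"method":"tools/call","params":{"name":"roll_dice","arguments":{"sides":6}}}

// Math.random() returns values in [0, 1), so results span 1..sides inclusive.
console.log(rollDie(6, () => 0), rollDie(6, () => 0.999999)); // 1 6
```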

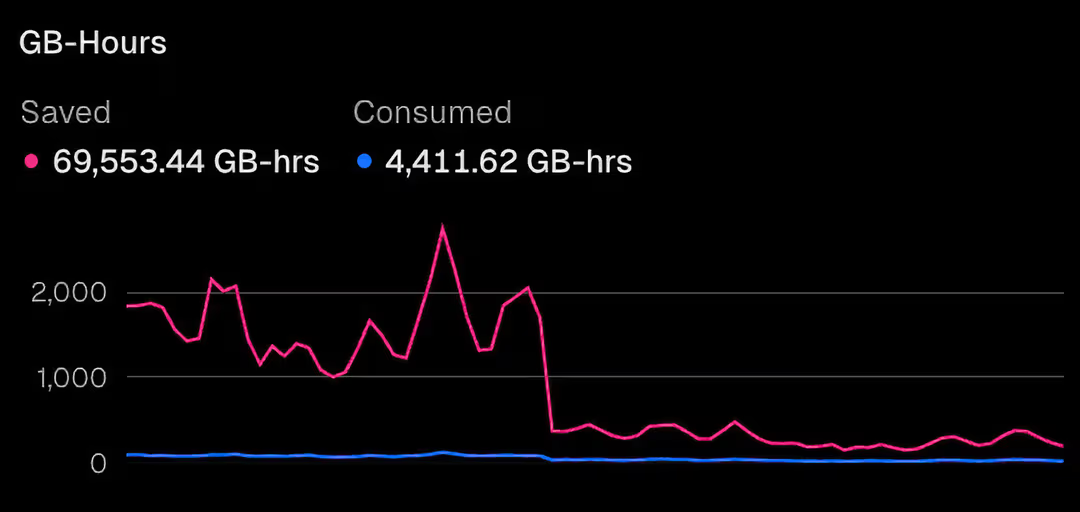

Measurable Gains: Efficiency in Action

The real-world benefits of Streamable HTTP are already evident. One MCP server running on Vercel that fully transitioned to Streamable HTTP recorded a more than 50% reduction in CPU usage, while serving even more users. These results underscore the significant performance and cost advantages of adopting the latest MCP standards.

Migrating to Streamable HTTP reduced CPU consumption by over 50%

Next Steps: Simplifying Modern MCP Server Development

The MCP ecosystem is rapidly advancing. With Vercel’s Fluid compute and the MCP adapter, developers can build servers that are both future-proof and high-performing. The adapter’s multi-transport support and robust templates for frameworks like Next.js streamline the development and deployment process.

- Quickly launch a Next.js-based MCP server using provided templates

- Deploy effortlessly on Vercel’s scalable cloud infrastructure

- Leverage enterprise-grade resources to scale MCP solutions effectively

Takeaway: Embracing the Future of AI Integrations

Developers now have the tools to create efficient, scalable MCP servers more easily than ever. By adopting Streamable HTTP and utilizing solutions like the Vercel MCP adapter, the path to robust, future-ready AI integrations is clear. As the MCP ecosystem grows, these strategies will be key for ensuring high performance and streamlined operations, both now and in the years to come.

New Streamable HTTP Dramatically Improves MCP Server Performance