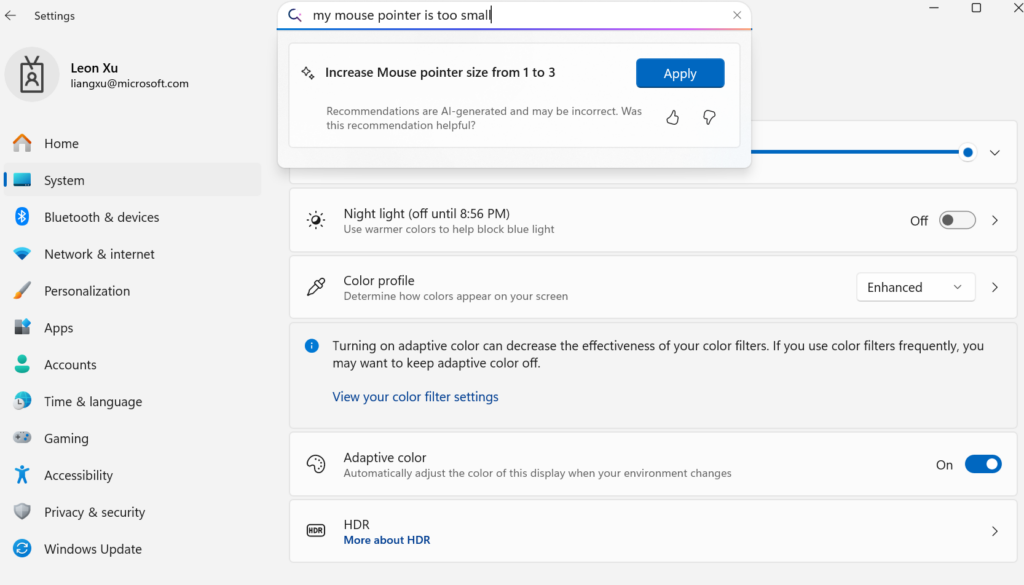

Microsoft’s Mu language model, which runs on Copilot+ PCs, lets you change Windows settings by describing what you want in plain language. Mu drives the AI agent in Windows Settings, translating natural-language requests into direct system actions with low latency and a small memory footprint.

Engineered for Fast, Efficient On-Device AI

Mu is a small language model purpose-built for the Neural Processing Units (NPUs) in Copilot+ PCs. It uses an encoder–decoder architecture to reduce latency and memory consumption. Unlike larger cloud-based models, Mu runs entirely on-device, so it responds in real time without needing an internet connection.

Key Technical Innovations

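- Encoder–Decoder Architecture: The encoder converts the user's input into a latent representation once, and the decoder generates the output while attending to that fixed representation. Separating input from output in this way reduces compute and memory overhead.

- Hardware Optimization: Layer dimensions and parameter shapes are tuned to the parallelism and memory limits of NPUs, keeping matrix operations efficient.

- Weight Sharing: Mu ties its input and output token embeddings, reusing one matrix for both. This saves memory and keeps the input and output token representations consistent.

- Exclusive Use of NPU-Optimized Operations: The model uses only operations that the target NPUs accelerate well, so every computation runs at full speed on supported hardware.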
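To make the first and third points concrete, here is a toy sketch, not Mu's actual architecture: the class name `ToyTiedEncoderDecoder`, its averaging "encoder", and its subtraction-based "decoder step" are hypothetical stand-ins. It shows two structural ideas only: the input is encoded exactly once and reused at every decoding step, and a single embedding table `E` serves both as the input lookup and, transposed, as the output projection, so no separate vocab_size × d_model output matrix is stored.

```python
from typing import List


class ToyTiedEncoderDecoder:
    """Toy encoder-decoder with tied embeddings; layers and weights are hypothetical, not Mu's."""

    def __init__(self, embeddings: List[List[float]], eos: int = 0):
        # One shared table E (vocab_size x d_model): it embeds input tokens AND,
        # transposed, projects decoder states back to vocabulary logits.
        self.E = embeddings
        self.eos = eos
        self.encoder_calls = 0

    def embed(self, token: int) -> List[float]:
        return self.E[token]

    def encode(self, tokens: List[int]) -> List[float]:
        """Toy encoder: average the input embeddings into one latent vector."""
        self.encoder_calls += 1
        d = len(self.E[0])
        return [sum(self.embed(t)[i] for t in tokens) / len(tokens) for i in range(d)]

    def decoder_step(self, memory: List[float], prev_token: int) -> List[float]:
        """Toy decoder step: combine the cached encoder memory with the previous token."""
        return [m - p for m, p in zip(memory, self.embed(prev_token))]

    def logits(self, hidden: List[float]) -> List[float]:
        # Tied output projection: the logit for token v is the dot product E[v] . hidden.
        return [sum(e * h for e, h in zip(row, hidden)) for row in self.E]

    def generate(self, input_tokens: List[int], start_token: int, max_new: int = 5) -> List[int]:
        memory = self.encode(input_tokens)  # the input is encoded exactly once
        out, prev = [], start_token
        for _ in range(max_new):
            scores = self.logits(self.decoder_step(memory, prev))
            nxt = max(range(len(scores)), key=scores.__getitem__)  # greedy choice
            out.append(nxt)
            if nxt == self.eos:
                break
            prev = nxt
        return out


if __name__ == "__main__":
    model = ToyTiedEncoderDecoder([[1.0, 0.0], [0.0, 1.0], [0.7, 0.7], [-1.0, 0.5]])
    print(model.generate([2, 3], start_token=1))  # [3, 0]
    print(model.encoder_calls)                    # 1
```

However many tokens the decoder emits, `encoder_calls` stays at 1, which is the property the encoder–decoder design relies on.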

Big Performance in a Tiny Package

Despite its compact size of 330 million parameters, Mu uses several modern transformer techniques:

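- Dual LayerNorm: Applying layer normalization both before and after each sublayer stabilizes training at negligible processing cost.

- Rotary Positional Embeddings (RoPE): Encoding position by rotating query and key vectors makes attention depend on the relative distance between tokens, which helps the model handle longer contexts.

- Grouped-Query Attention (GQA): Groups of query heads share a single key/value head, reducing memory usage and latency while preserving much of the head diversity of standard multi-head attention.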
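The RoPE and GQA ideas can be sketched in a few lines. This is a generic illustration of the two techniques, not Mu's code: the base of 10000 is the value commonly used for RoPE, and the layer, head, and sequence sizes in the example are hypothetical. The sketch checks RoPE's key property (the query-key dot product depends only on the position offset) and shows how GQA maps query heads to shared key/value heads and shrinks the key/value cache.

```python
import math
from typing import List


def rope(x: List[float], pos: int, base: float = 10000.0) -> List[float]:
    """Rotary positional embedding: rotate each (even, odd) pair by pos * theta_i."""
    d = len(x)
    assert d % 2 == 0, "RoPE needs an even dimension"
    out = [0.0] * d
    for i in range(d // 2):
        theta = base ** (-2 * i / d)
        c, s = math.cos(pos * theta), math.sin(pos * theta)
        a, b = x[2 * i], x[2 * i + 1]
        out[2 * i] = a * c - b * s
        out[2 * i + 1] = a * s + b * c
    return out


def dot(u: List[float], v: List[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def kv_group(query_head: int, n_query_heads: int, n_kv_heads: int) -> int:
    """GQA: consecutive query heads share one key/value head."""
    assert n_query_heads % n_kv_heads == 0
    return query_head // (n_query_heads // n_kv_heads)


def kv_cache_bytes(layers: int, n_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_elem: int = 2) -> int:
    # Factor 2: one cached key tensor plus one cached value tensor per layer.
    return 2 * layers * n_kv_heads * head_dim * seq_len * bytes_per_elem


if __name__ == "__main__":
    q, k = [0.3, -1.2, 0.8, 0.5], [1.0, 0.4, -0.6, 0.9]
    # Same offset (2) at different absolute positions gives the same score.
    print(dot(rope(q, 5), rope(k, 3)), dot(rope(q, 2), rope(k, 0)))
    print([kv_group(h, 8, 2) for h in range(8)])  # [0, 0, 0, 0, 1, 1, 1, 1]
    # Hypothetical config: 16 query heads sharing 4 KV heads -> 4x smaller cache.
    print(kv_cache_bytes(12, 16, 64, 1024) / kv_cache_bytes(12, 4, 64, 1024))  # 4.0
```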

Mu’s training pipeline used Azure Machine Learning, knowledge distillation from Microsoft’s Phi models, and extensive fine-tuning with real-world queries. This lets the compact model perform comparably to much larger models on its target tasks, especially Windows Settings queries and code-related tasks.
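The article does not specify how the Phi models were used during training. If they served as teachers in standard knowledge distillation, the student would be trained to match the teacher's softened output distribution. The sketch below shows that generic objective (KL divergence at temperature T, scaled by T²); the logits and the temperature of 2.0 are hypothetical, and this is not presented as Mu's actual loss.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: List[float], teacher_logits: List[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on temperature-softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)   # soft targets from the teacher
    q = softmax(student_logits, temperature)   # student's softened prediction
    kl = sum(pi * (math.log(pi) - math.log(qi)) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


if __name__ == "__main__":
    teacher = [2.0, 1.0, 0.1]                     # hypothetical teacher logits
    print(distillation_loss([1.9, 1.1, 0.1], teacher))  # small: student is close
    print(distillation_loss([0.1, 1.0, 2.0], teacher))  # larger: student disagrees
```

The T² factor is the usual convention for keeping gradient magnitudes comparable across temperatures.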

Optimized for Devices: Quantization and Hardware Collaboration

To make Mu run efficiently on Copilot+ PCs, Microsoft applied post-training quantization, converting the model's floating-point weights and activations to 8- and 16-bit integers. This sharply reduced memory and compute requirements with minimal loss in accuracy.
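As a minimal sketch of what post-training quantization does to a weight tensor, the code below assumes a simple per-tensor symmetric scheme; the article does not say which scheme Mu uses (per-channel scales, activation calibration, and so on), and the weights are made up. Each float is mapped to an integer in [-qmax, qmax] via one scale factor, and the reconstruction error per weight is at most half that scale, so 16-bit storage is more accurate than 8-bit but twice as large.

```python
from typing import List, Tuple


def quantize_symmetric(weights: List[float], bits: int = 8) -> Tuple[List[int], float]:
    """Per-tensor symmetric post-training quantization to signed integers."""
    qmax = 2 ** (bits - 1) - 1          # 127 for int8, 32767 for int16
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp into the representable range.
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    return [v * scale for v in q]


def max_error(weights: List[float], bits: int) -> float:
    q, scale = quantize_symmetric(weights, bits)
    return max(abs(w - r) for w, r in zip(weights, dequantize(q, scale)))


if __name__ == "__main__":
    w = [0.5, -1.27, 0.0314, 0.777]     # hypothetical float weights
    print(quantize_symmetric(w, 8))      # integers in [-127, 127] plus the scale
    print(max_error(w, 8), max_error(w, 16))
```

Relative to 32-bit floats, int8 storage is 4× smaller and int16 is 2× smaller, which is where the memory savings come from.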

Collaboration with hardware partners AMD, Intel, and Qualcomm further optimized Mu for their NPUs, yielding inference speeds of over 200 tokens per second on devices such as the Surface Laptop 7.
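As a rough illustration, the reported throughput translates into per-token decode time as follows. This is simple arithmetic that treats 200 tokens per second as a lower bound on a flat rate and ignores the encoder pass, tokenization, and other overhead:

$$
t_{\text{token}} \le \frac{1\ \text{s}}{200\ \text{tokens}} = 5\ \text{ms per token}, \qquad t_{\text{decode}}(N) \lesssim 5N\ \text{ms}
$$

Under those assumptions, a hypothetical 40-token reply would take at most about 200 ms to decode.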

Empowering the Windows Settings Agent

Mu’s most visible application is as the intelligence behind the Windows Settings agent. Users can now manage hundreds of system settings in simple, conversational language. Fine-tuning on 3.6 million queries (both real and synthetic), combined with techniques such as automated labeling and prompt tuning, gives Mu both speed and high accuracy.
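The article mentions synthetic queries and automated labeling without describing the pipeline. One common approach is to generate queries from per-setting phrasings, so that each query inherits its label from the template, and to inject noise such as typos so the model tolerates imperfect input. The sketch below illustrates that idea; the setting identifiers, phrasings, and noise rate are all hypothetical and are not Microsoft's data.

```python
import random
from typing import Dict, List, Tuple

# Hypothetical setting IDs and phrasings, for illustration only.
TEMPLATES: Dict[str, List[str]] = {
    "display.brightness.increase": ["make my screen brighter", "increase brightness",
                                    "turn up the brightness"],
    "bluetooth.enable": ["turn on bluetooth", "enable bluetooth", "switch bluetooth on"],
}


def inject_typo(text: str, rng: random.Random) -> str:
    """Swap two adjacent characters to simulate a typing error."""
    if len(text) < 2:
        return text
    i = rng.randrange(len(text) - 1)
    chars = list(text)
    chars[i], chars[i + 1] = chars[i + 1], chars[i]
    return "".join(chars)


def synthesize(n: int, noise_rate: float = 0.2, seed: int = 0) -> List[Tuple[str, str]]:
    """Generate (query, label) pairs; labels come from the templates, not manual annotation."""
    rng = random.Random(seed)
    labels = sorted(TEMPLATES)
    data = []
    for _ in range(n):
        label = rng.choice(labels)
        query = rng.choice(TEMPLATES[label])
        if rng.random() < noise_rate:
            query = inject_typo(query, rng)
        data.append((query, label))
    return data


if __name__ == "__main__":
    for query, label in synthesize(5, noise_rate=0.5, seed=42):
        print(f"{query!r:32} -> {label}")
```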

Enhancing User Experience

- Integrated Search: The AI agent works directly in the Settings search box, distinguishing queries that call for a direct action from those that should return traditional search results.

- Disambiguation: Targeted training helps the agent resolve ambiguous commands by prioritizing the most commonly used settings.

- Instant Responses: Response times under 500 ms keep the experience smooth and responsive.
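The routing and disambiguation behavior above can be sketched as two small functions. The article does not describe how the search box decides between the agent and classic search, so the word-count threshold below is an assumption, and the setting names and usage scores in `USAGE_RANK` are hypothetical. The disambiguation step simply picks the most commonly used candidate, mirroring the "most common scenarios" strategy.

```python
from typing import Dict, List, Optional

# Hypothetical usage scores used to break ties between candidate settings.
USAGE_RANK: Dict[str, int] = {
    "display.brightness": 100,
    "night_light.strength": 40,
}


def route(query: str, min_words: int = 2) -> str:
    """Assumed heuristic: multi-word queries go to the agent, short inputs to classic search."""
    return "agent" if len(query.split()) >= min_words else "search"


def disambiguate(candidates: List[str]) -> Optional[str]:
    """Pick the most commonly used setting among ambiguous candidates."""
    if not candidates:
        return None
    return max(candidates, key=lambda c: USAGE_RANK.get(c, 0))


if __name__ == "__main__":
    print(route("brightness"))                           # search
    print(route("make my screen brighter"))              # agent
    print(disambiguate(["night_light.strength", "display.brightness"]))  # display.brightness
```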

The Future of On-Device AI with Mu

Microsoft’s Mu model raises the bar for local AI by combining accuracy, efficiency, and speed on resource-constrained hardware. As feedback from Windows Insiders shapes its development, Mu is expected to expand to more features and devices. It marks a significant step toward more intuitive, AI-driven interactions in Windows.

Microsoft's Mu Language Model Adjusts Windows Settings with On-Device AI