Imagine a world where robots react instantly, adapt to changing tasks, and operate independently of the cloud. Google DeepMind is turning this vision into reality with Gemini Robotics On-Device, a breakthrough that embeds advanced multimodal AI directly in robotic hardware. With this technology, robots gain dexterity, flexibility, and resilience, even in environments with spotty or no connectivity.

What Sets Gemini Robotics On-Device Apart?

This innovation builds on Gemini Robotics, DeepMind's vision-language-action (VLA) model based on Gemini 2.0, and is optimized to run efficiently on physical robots. Unlike AI systems that depend heavily on cloud resources, the model runs entirely on-device, which brings several advantages:

- Low Latency: Immediate robot responses enhance safety and enable precise control in real time.

- Robustness: Robots function seamlessly regardless of internet connectivity, ideal for unpredictable or remote settings.

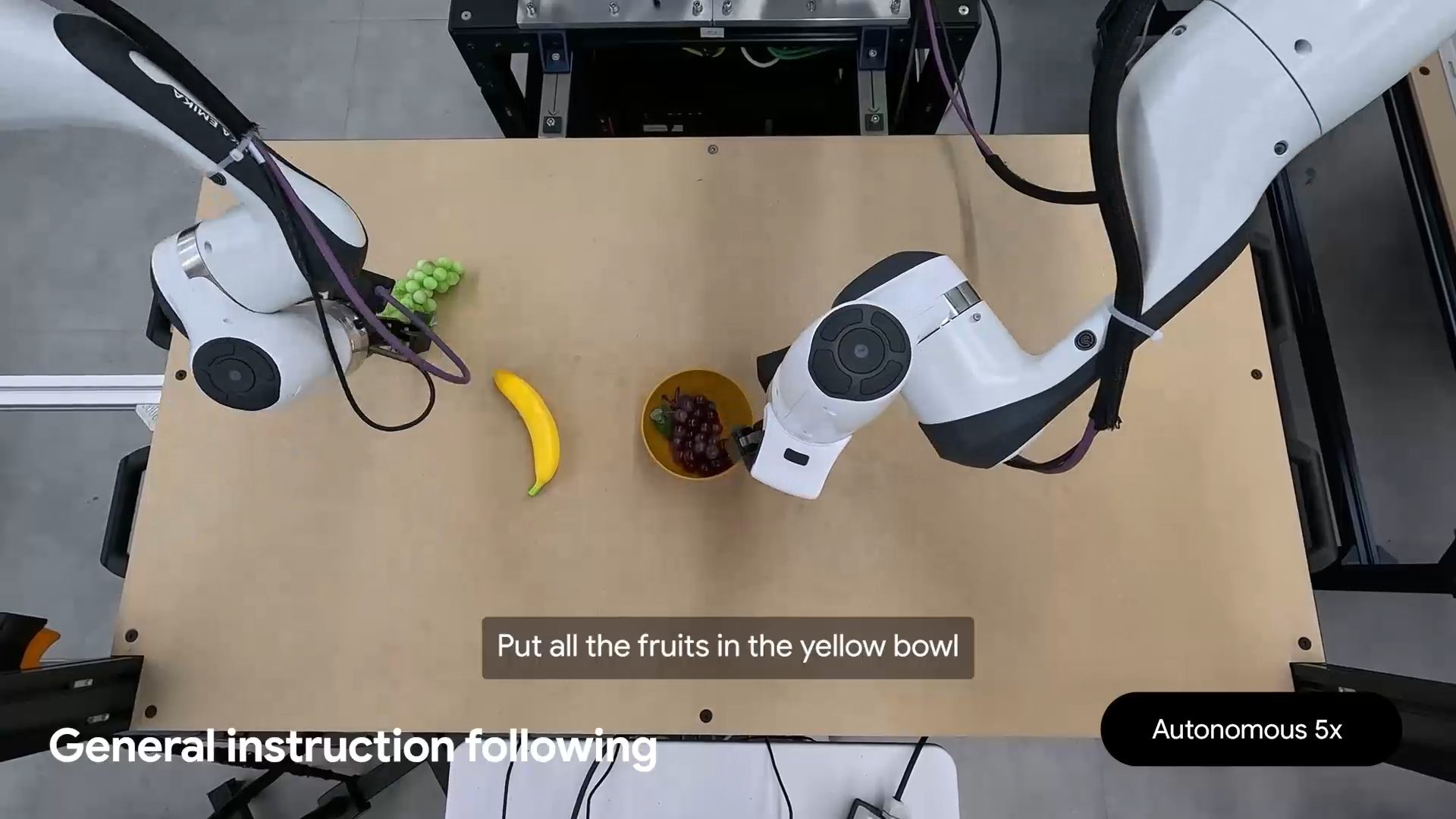

- Generalization: The model excels at adapting to new and varied tasks, such as folding laundry or unzipping bags, without the need for cloud-based computations.
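To make the latency and robustness points concrete, here is a minimal Python sketch of the time budget in a fixed-rate control loop. The control rate, inference times, and network round-trip figures are hypothetical placeholders, not measurements of Gemini Robotics On-Device, and the names `InferencePath`, `fits_budget`, and `can_act` are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class InferencePath:
    """One way of turning an observation into an action (hypothetical model)."""
    name: str
    inference_ms: float     # time spent running the model (assumed value)
    network_rtt_ms: float   # network round trip; 0 when the model runs on the robot
    needs_network: bool     # True if the path depends on a cloud connection

    def step_latency_ms(self) -> float:
        """Observation-to-action delay for a single control step."""
        return self.inference_ms + self.network_rtt_ms


def fits_budget(path: InferencePath, control_hz: float) -> bool:
    """True if one inference completes within a single control period."""
    budget_ms = 1000.0 / control_hz
    return path.step_latency_ms() <= budget_ms


def can_act(path: InferencePath, network_up: bool) -> bool:
    """A cloud-dependent path cannot produce any action while offline."""
    return network_up or not path.needs_network


if __name__ == "__main__":
    control_hz = 20.0  # hypothetical control rate, giving a 50 ms budget per step
    paths = [
        InferencePath("on-device", inference_ms=35.0, network_rtt_ms=0.0, needs_network=False),
        InferencePath("cloud", inference_ms=20.0, network_rtt_ms=80.0, needs_network=True),
    ]
    for p in paths:
        print(
            f"{p.name}: {p.step_latency_ms():.0f} ms per step, "
            f"fits {control_hz:.0f} Hz budget={fits_budget(p, control_hz)}, "
            f"works offline={can_act(p, network_up=False)}"
        )
```

With these placeholder numbers, the on-device path (35 ms) fits the 50 ms budget and keeps working offline, while the cloud path (100 ms) misses the budget and stops entirely when the network drops. Real figures depend on the hardware, the model, and the network.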

Empowering Developers with the Gemini Robotics SDK

To accelerate progress, DeepMind offers the Gemini Robotics SDK. This comprehensive toolkit enables developers to:

- Evaluate the On-Device model in custom environments and tasks

- Leverage the MuJoCo physics simulator for advanced testing

- Adapt the system to new domains with minimal data, requiring as few as 50-100 demonstrations
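The claim that 50 to 100 demonstrations can be enough is easier to picture with a toy example. The sketch below is generic behavior cloning, not the Gemini Robotics SDK's fine-tuning procedure (whose API is not described here): it fits a one-dimensional linear policy to demonstrations from a hypothetical expert using closed-form least squares. The expert's rule, the noise level, and the function names `collect_demonstrations` and `fit_linear_policy` are assumptions made for illustration.

```python
from __future__ import annotations

import random


def collect_demonstrations(n: int, seed: int = 0) -> list[tuple[float, float]]:
    """Simulated demos from a hypothetical expert: action = 0.8 * obs + 0.1 + noise."""
    rng = random.Random(seed)
    demos = []
    for _ in range(n):
        obs = rng.uniform(-1.0, 1.0)
        action = 0.8 * obs + 0.1 + rng.gauss(0.0, 0.01)
        demos.append((obs, action))
    return demos


def fit_linear_policy(demos: list[tuple[float, float]]) -> tuple[float, float]:
    """Ordinary least squares for action ~ w * obs + b."""
    n = len(demos)
    mean_obs = sum(o for o, _ in demos) / n
    mean_act = sum(a for _, a in demos) / n
    cov = sum((o - mean_obs) * (a - mean_act) for o, a in demos)
    var = sum((o - mean_obs) ** 2 for o, _ in demos)
    w = cov / var
    b = mean_act - w * mean_obs
    return w, b


if __name__ == "__main__":
    for n in (50, 100):
        w, b = fit_linear_policy(collect_demonstrations(n))
        print(f"{n} demos -> w={w:.3f}, b={b:.3f}")
```

A real VLA policy maps camera images and language instructions to multi-joint actions and starts from a large pretrained model, so this toy only illustrates the idea of learning a policy from a small, fixed set of demonstrations.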

Early access through the trusted tester program allows select developers to experiment with both the model and SDK, speeding up innovation and deployment.

Performance, Adaptability, and Natural Language Skills

Gemini Robotics On-Device aims to deliver strong performance within the constraints of on-device hardware, offering:

- Minimal Computational Footprint: Requires minimal computational resources, so it runs efficiently on the robot's own hardware

- Natural Language Understanding: Follows complex, multi-step instructions communicated in plain language

- Task and Embodiment Adaptation: Although trained on ALOHA robots, it can be adapted to other embodiments, such as the bi-arm Franka FR3 and Apptronik's Apollo humanoid, and quickly learns new skills

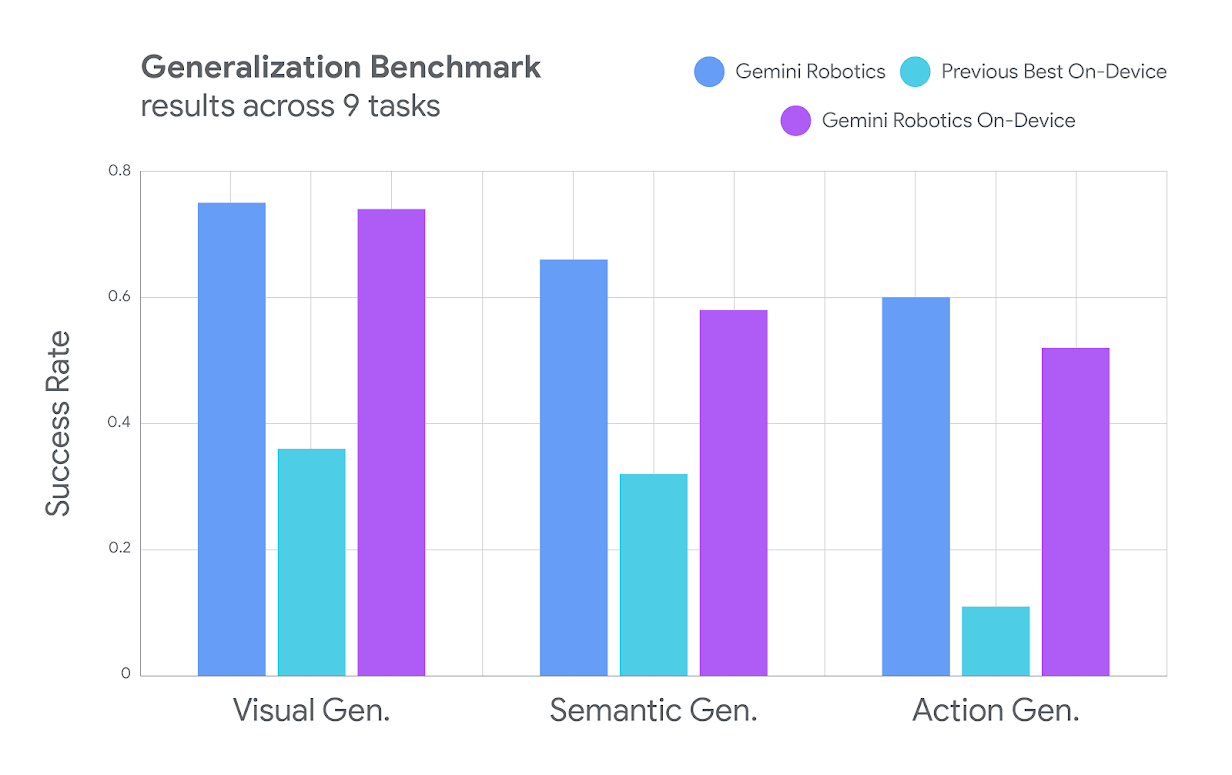

- Superior Generalization: Outperforms previous local models, especially in unfamiliar or novel tasks

According to DeepMind's benchmarks, the on-device model comes close to the performance of larger, cloud-based systems in real-world scenarios.

Commitment to Responsible and Safe AI

DeepMind emphasizes responsible development of Gemini Robotics On-Device, building safety measures into every stage, including:

- Adherence to Google’s AI Principles and a comprehensive safety framework

- Active semantic and content safety checks via the Live API

- Integration with low-level physical safety controllers

- Rigorous end-to-end testing with semantic safety benchmarks and red-teaming to uncover vulnerabilities

- Oversight from internal responsibility councils and dedicated teams to assess and mitigate societal risks
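The low-level physical safety layer can be illustrated with a common pattern: a filter that sits between the model's proposed actions and the motors and enforces hard limits regardless of what the model outputs. The Python sketch below is hypothetical, since DeepMind has not published its controller; `JointLimits`, `safe_velocity`, and `filter_action` are illustrative names, and the emergency-stop input is an assumption. The filter caps joint speed, prevents a single step from overshooting position limits, and outputs zero velocity when the emergency stop is active.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class JointLimits:
    pos_min: float  # rad
    pos_max: float  # rad
    max_vel: float  # rad/s


def _clamp(value: float, low: float, high: float) -> float:
    return max(low, min(high, value))


def safe_velocity(pos: float, commanded_vel: float, limits: JointLimits, dt: float) -> float:
    """Return a joint velocity that respects speed and position limits for one step of dt seconds."""
    vel = _clamp(commanded_vel, -limits.max_vel, limits.max_vel)
    next_pos = pos + vel * dt
    if next_pos > limits.pos_max:
        vel = (limits.pos_max - pos) / dt  # stop exactly at the upper limit
    elif next_pos < limits.pos_min:
        vel = (limits.pos_min - pos) / dt  # stop exactly at the lower limit
    # Re-apply the speed cap in case the joint started outside its range.
    return _clamp(vel, -limits.max_vel, limits.max_vel)


def filter_action(
    positions: list[float],
    commanded: list[float],
    limits: list[JointLimits],
    dt: float,
    estop: bool = False,
) -> list[float]:
    """Apply safe_velocity per joint; an active emergency stop zeroes every command."""
    if estop:
        return [0.0] * len(commanded)
    return [safe_velocity(p, v, lim, dt) for p, v, lim in zip(positions, commanded, limits)]


if __name__ == "__main__":
    lim = JointLimits(pos_min=-1.0, pos_max=1.0, max_vel=2.0)
    proposed = [5.0, 2.0]  # hypothetical model output for two joints
    current = [0.0, 0.95]
    print(filter_action(current, proposed, [lim, lim], dt=0.1))
    print(filter_action(current, proposed, [lim, lim], dt=0.1, estop=True))
```

Because the filter runs after the model, it bounds the robot's motion even if the model proposes an unsafe command: here the first joint is capped at 2.0 rad/s and the second is slowed to about 0.5 rad/s so it stops at its upper limit.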

Currently, access is limited to trusted testers, ensuring that real-world feedback informs ongoing safety improvements before broader release.

Unlocking New Possibilities in Robotics

By embedding state-of-the-art AI directly onto robots, Gemini Robotics On-Device overcomes longstanding barriers around latency and connectivity.

This advance paves the way for transformative applications in manufacturing, logistics, household assistance, and more. The SDK and its fine-tuning tools, currently offered to trusted testers, give the robotics community a foundation for the next wave of intelligent machines.

Leading the Shift Toward Local Robotic Intelligence

Gemini Robotics On-Device marks a pivotal shift in robotic autonomy, delivering adaptable, efficient, and safe AI capabilities where they're needed most. As this technology becomes more widely available, it promises to redefine what robots can do across industries and environments.

Source: Google DeepMind Blog

Gemini Robotics On-Device: Bringing Advanced AI Directly to Robots