Developers and AI coding tools are now working with live design context, pulled directly from Figma, to create cleaner and more consistent code.

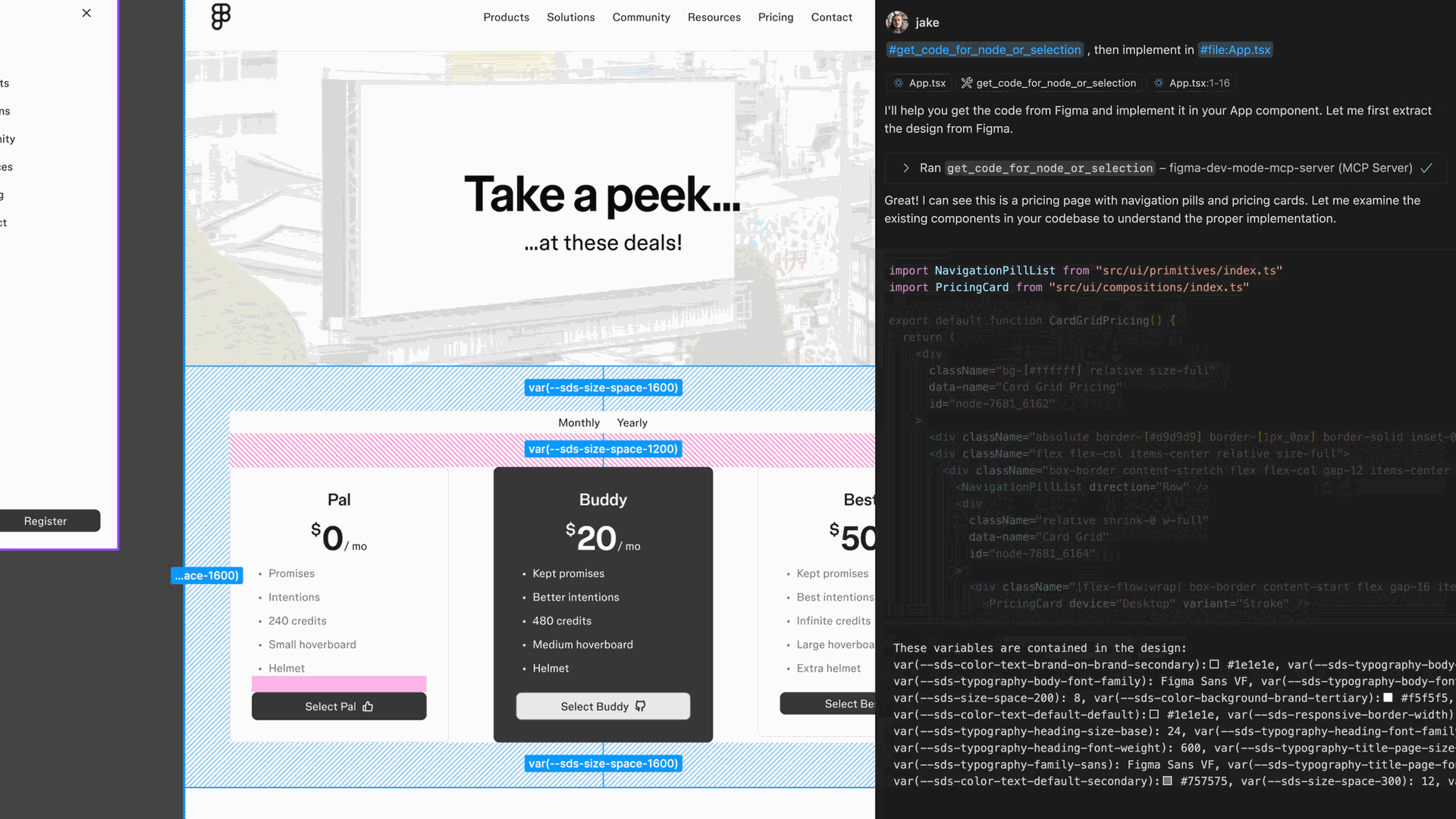

This is the reality with Figma’s new Dev Mode MCP server. By delivering design information straight to AI-powered development tools, the server aims to narrow the gap between design intent and code output.

The Importance of Context in AI Code Generation

Large language models (LLMs) have changed how code is written. However, without the right context, their outputs often miss the mark, struggling to reflect the unique patterns and structures of each engineering team’s codebase.

Each project has its own frameworks, architecture, and naming rules, which together form a unique "fingerprint." Agentic coding tools have improved code generation by feeding LLMs project-specific context, but design context has historically been missing from the equation.

The Dev Mode MCP server addresses this by bringing Figma’s design details directly into developer workflows. Generated code can then reflect not just codebase patterns but also the intentions and systems defined in design files.

Bringing Design Intent to LLMs

Developers interpret designs with an understanding of layout, logic, data flow, and content. For LLMs to generate code at the same level, they need more than static images or simple metadata.

Figma’s Dev Mode MCP server delivers this comprehensive context, allowing teams to control exactly what information is shared with AI tools for each coding task.

- Pattern Metadata: Variables, components, and style definitions help LLMs leverage existing design systems, saving time and minimizing token use.

- Screenshots: High-level visuals show the overall structure and flow, helping code match both design intent and appearance.

- Interactivity: Pseudocode and interactive samples (like React or Tailwind code) help LLMs handle complex behaviors and stateful UI elements.

- Content: Text, images, SVGs, and layer names give LLMs the clues needed to populate interfaces with meaningful, context-aware data.
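
To make the idea of choosing what to share per task more concrete, here is a minimal sketch that models the four context categories above as a simple data structure. Every name in it (`DesignContext`, `select_context`, the field names) is hypothetical and chosen for illustration only; none of it is Figma's API.

```python
from dataclasses import dataclass, field, asdict
from typing import Optional


@dataclass
class DesignContext:
    """Hypothetical container for the four context categories (not Figma's API)."""
    pattern_metadata: dict = field(default_factory=dict)  # variables, components, styles
    screenshot: Optional[str] = None     # reference to a rendered image of the selection
    interactivity: Optional[str] = None  # pseudocode or sample code describing behavior
    content: dict = field(default_factory=dict)  # text, layer names, asset references


def select_context(ctx: DesignContext, include: set) -> dict:
    """Return only the requested categories, skipping any that are empty."""
    data = asdict(ctx)
    unknown = set(include) - set(data)
    if unknown:
        raise ValueError(f"unknown context categories: {sorted(unknown)}")
    return {key: value for key, value in data.items() if key in include and value}
```

A task that only restyles an existing component might request just `pattern_metadata`, while building a new screen might also ask for `screenshot` and `content`.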

How the Dev Mode MCP Server Integrates with Workflows

Built on the Model Context Protocol (MCP), this server standardizes how context moves from Figma to LLMs. It integrates with popular agentic tools such as GitHub Copilot, Cursor, Windsurf, and Claude Code.

The server features three primary tools: one for extracting code, another for capturing images, and a third for surfacing variable definitions. Teams can adjust settings to tailor which outputs are returned, aligning context with the specific coding task.
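
MCP clients invoke server tools with JSON-RPC 2.0 messages that use the `tools/call` method. The sketch below builds such a request. The tool names and the `node_id` argument are placeholders standing in for the three tools described above; they are assumptions, not the server's actual tool names or parameters.

```python
import json
from itertools import count

# Placeholder names for the three tools described above (assumed, not confirmed).
TOOLS = {"extract_code", "capture_image", "list_variable_defs"}

_request_ids = count(1)  # JSON-RPC requests carry a unique id


def build_tool_call(tool: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 `tools/call` request for the given tool."""
    if tool not in TOOLS:
        raise ValueError(f"unknown tool: {tool}")
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }
    return json.dumps(request)


# Example: ask for code for one (hypothetical) design node.
message = build_tool_call("extract_code", {"node_id": "1:2"})
```

In practice an agentic tool such as Cursor or Claude Code constructs and sends these messages itself; the sketch only shows the shape of the exchange.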

Code Connect integration takes this further by mapping Figma components directly to codebase files. LLMs can then see where to find or update the corresponding code, which reduces mismatches and streamlines collaboration.
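
Conceptually, this kind of mapping works like a lookup table from design components to source files. The sketch below illustrates the idea only: the component names, file paths, and helper functions are invented for the example and do not reflect how Code Connect stores or exposes its mappings.

```python
from typing import Dict, List, Optional, Tuple

# Invented example mapping; a real project would derive this from its own setup.
COMPONENT_MAP: Dict[str, str] = {
    "Button/Primary": "src/components/Button.tsx",
    "Input/Text": "src/components/TextInput.tsx",
}


def resolve_component(figma_name: str, mapping: Dict[str, str] = COMPONENT_MAP) -> Optional[str]:
    """Return the source file mapped to a Figma component, or None if unmapped."""
    return mapping.get(figma_name)


def split_by_mapping(names: List[str]) -> Tuple[Dict[str, str], List[str]]:
    """Separate components with a known source file from those without one."""
    mapped = {n: COMPONENT_MAP[n] for n in names if n in COMPONENT_MAP}
    unmapped = [n for n in names if n not in COMPONENT_MAP]
    return mapped, unmapped
```

With a mapping like this, a tool can reuse an existing component when one is mapped and generate new code only for the components that are not.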

Beta Access and What’s Next

Currently in beta, the Dev Mode MCP server is only the beginning. Figma plans to introduce remote server capabilities, deeper codebase integrations, and support for features such as annotations and grids.

The company is actively seeking community feedback to refine the tool, with a focus on simplifying onboarding and removing the need for the desktop app.

Access is open to all Dev or Full seat users. Figma’s commitment is clear: accelerate handoffs, improve code quality, and create a smarter, faster route from idea to implementation.

Key Takeaway

The Dev Mode MCP server helps developers and AI coding tools generate design-accurate code by offering rich, configurable context directly from Figma. It promises to streamline design-to-code workflows, reduce friction, and let teams ship with greater confidence, bringing design, engineering, and AI closer together.

Figma’s Dev Mode MCP Server Is Transforming Design-to-Code Integration