Prompt engineering is crucial for maximizing the capabilities of large language models (LLMs), but it has traditionally required significant manual effort and specialized know-how.

As new tasks and model architectures emerge, the need for a scalable and efficient approach to prompt optimization is more pressing than ever. Enter PromptWizard (PW), a research framework that automates prompt creation and refinement, slashing both time and resource costs.

Automating Prompt Optimization

PromptWizard integrates LLM feedback into an iterative, intelligent process. Its core innovation is that it refines two things at once: the instructions (the core task description) and the in-context examples that guide model outputs.

This holistic, feedback-driven approach enables the generation of high-performing prompts for even the most specialized or complex tasks, often within minutes.
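The feedback loop at the heart of this idea can be sketched in a few lines. The Python below is a minimal illustration, not PromptWizard's actual implementation. It assumes a hypothetical `llm` callable that maps a prompt string to a reply, scores an instruction by exact-match accuracy on labeled (question, answer) pairs, and refines only the instruction, leaving in-context examples aside. `fake_llm` is a deterministic stand-in so the sketch runs without any API.

```python
from typing import Callable, List, Tuple

# Hypothetical interface: any function that sends a prompt to an LLM and returns its reply.
LLM = Callable[[str], str]
Pair = Tuple[str, str]  # (question, expected answer)


def accuracy(llm: LLM, instruction: str, examples: List[Pair]) -> float:
    """Fraction of examples answered exactly right under `instruction` (assumed metric)."""
    hits = sum(
        llm(f"{instruction}\n\nQuestion: {q}\nAnswer:").strip() == a
        for q, a in examples
    )
    return hits / len(examples)


def refine(llm: LLM, instruction: str, examples: List[Pair], rounds: int = 3) -> str:
    """Critique-then-rewrite loop that keeps the best-scoring instruction seen so far."""
    best, best_score = instruction, accuracy(llm, instruction, examples)
    current = instruction
    for _ in range(rounds):
        # 1. Ask the model to critique the current instruction.
        critique = llm(f"Critique this instruction:\n{current}")
        # 2. Ask the model to rewrite the instruction in light of its own critique.
        current = llm(f"Rewrite the instruction using the critique.\n"
                      f"Instruction: {current}\nCritique: {critique}")
        # 3. Re-score and keep the rewrite only if it improves accuracy.
        score = accuracy(llm, current, examples)
        if score > best_score:
            best, best_score = current, score
    return best


def fake_llm(prompt: str) -> str:
    """Deterministic stand-in for a real model, for demonstration only."""
    if prompt.startswith("Critique"):
        return "The instruction does not say how to format the answer."
    if prompt.startswith("Rewrite"):
        return "Reply with the number only."
    return "4" if "number only" in prompt else "The answer is 4."


if __name__ == "__main__":
    print(refine(fake_llm, "Solve the problem.", [("2+2?", "4")]))
```

With the stand-in model, the loop replaces "Solve the problem." with "Reply with the number only." because only the rewrite scores 1.0. Keeping the best candidate seen so far, rather than always accepting the latest rewrite, guards against a critique that makes things worse.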

Key Innovations

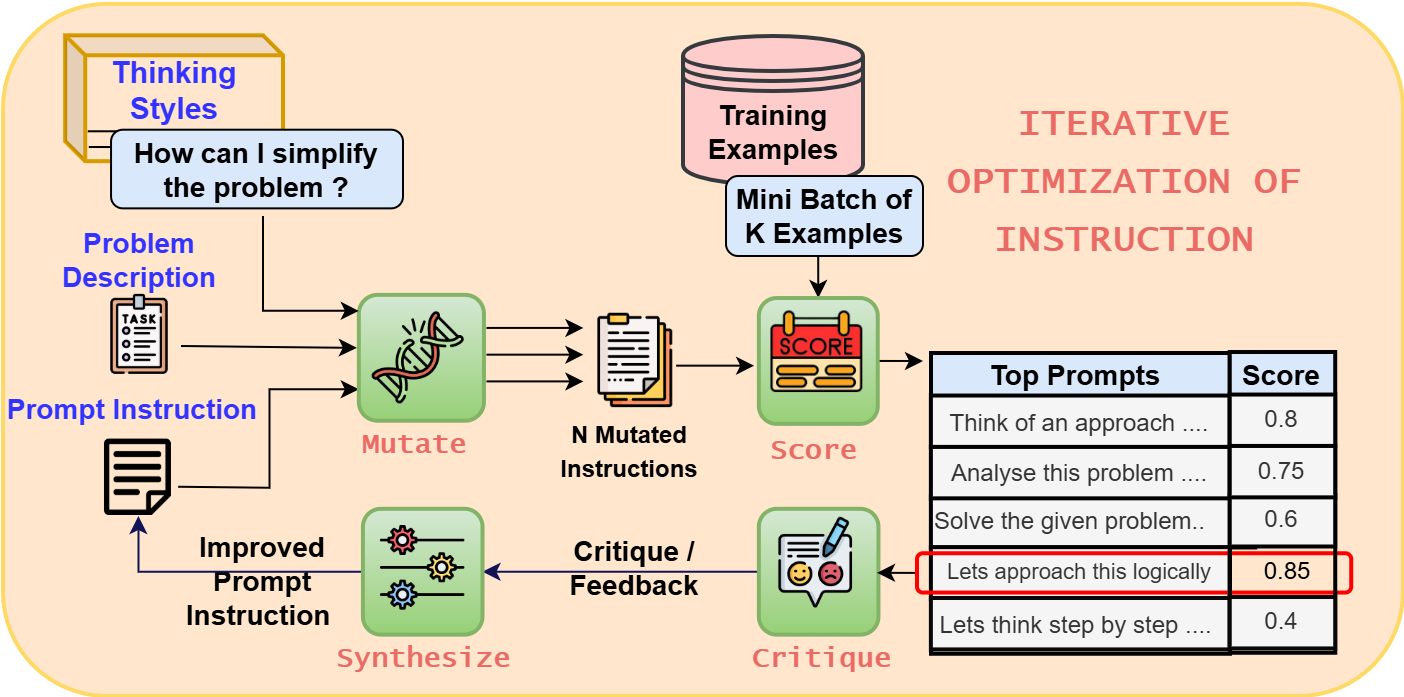

- Feedback-Driven Refinement: PW operates in a loop where the LLM not only generates prompts but also critiques and improves them, continuously raising quality through self-correction.

- Joint Optimization: By optimizing instructions and examples together, PromptWizard keeps prompts coherent and robust while tuning them closely to the task at hand.

- Chain-of-Thought Reasoning: PW leverages step-by-step reasoning in its examples, enhancing LLM performance on nuanced or multi-step problems.
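To make the chain-of-thought idea concrete, here is one way a prompt with reasoning-annotated examples might be assembled. The `Example` fields, the `build_prompt` helper, and the Question/Reasoning/Answer layout are illustrative assumptions, not PromptWizard's actual prompt template.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Example:
    question: str
    reasoning: str  # step-by-step explanation shown to the model
    answer: str


def build_prompt(instruction: str, examples: List[Example], query: str) -> str:
    """Instruction, then worked examples with reasoning, then the new query."""
    lines = [instruction.strip(), ""]
    for i, ex in enumerate(examples, start=1):
        lines += [
            f"Example {i}",
            f"Question: {ex.question}",
            f"Reasoning: {ex.reasoning}",
            f"Answer: {ex.answer}",
            "",
        ]
    # End on "Reasoning:" so the model is nudged to reason before answering.
    lines += [f"Question: {query}", "Reasoning:"]
    return "\n".join(lines)


if __name__ == "__main__":
    demo = [Example("Tom has 3 apples and buys 2 more. How many does he have?",
                    "He starts with 3 and adds 2, so 3 + 2 = 5.", "5")]
    print(build_prompt("Solve the word problem.", demo,
                       "A box holds 4 pens. How many pens are in 3 boxes?"))
```

In a joint optimization setting, both the instruction string and the list of examples would be candidates for revision; this helper only renders whatever their current versions are.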

An Iterative Two-Stage Process

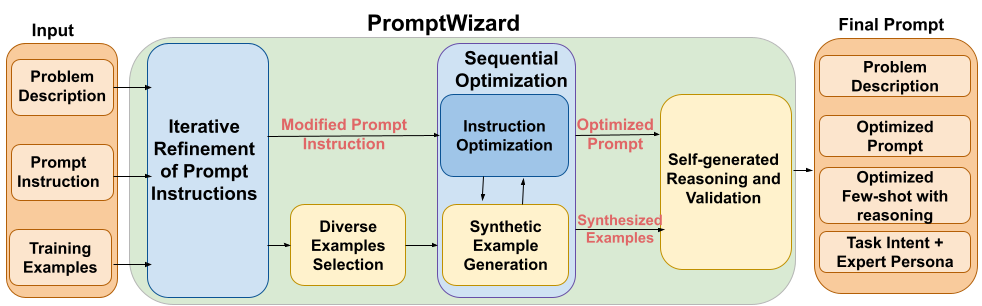

PromptWizard’s workflow starts with a user’s task description, an initial prompt, and a handful of training examples. It outputs optimized prompts with refined instructions and carefully aligned, task-aware examples enriched with reasoning and intent.

The process unfolds in two main stages:

- Instruction Refinement: Multiple prompt variations are generated, evaluated, and synthesized, balancing exploration of new directions with exploitation of promising ones. This helps the search converge quickly on strong instructions.

- Joint Instruction-Example Optimization: After refining instructions, PW pairs them with curated examples. Both components then undergo further critique and synthesis, which often produces synthetic examples that fill gaps or target especially difficult cases.
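The two stages might be sketched as follows. This is a simplified illustration under stated assumptions, not PromptWizard's algorithm: `llm` is a hypothetical prompt-to-text function, instructions are scored by exact-match accuracy, each round mutates both the current best instruction (exploitation) and a randomly chosen earlier candidate (exploration), and stage 2 simply ranks training examples so that failures come first. The synthetic-example generation described above is omitted. All function names are hypothetical.

```python
import random
from typing import Callable, List, Optional, Tuple

LLM = Callable[[str], str]  # hypothetical: prompt in, reply out
Pair = Tuple[str, str]      # (question, expected answer)


def answer(llm: LLM, instruction: str, question: str) -> str:
    return llm(f"{instruction}\nQ: {question}\nA:").strip()


def score(llm: LLM, instruction: str, data: List[Pair]) -> float:
    """Exact-match accuracy of `instruction` on `data` (a simplifying assumption)."""
    return sum(answer(llm, instruction, q) == a for q, a in data) / len(data)


def stage1_refine_instruction(llm: LLM, seed: str, data: List[Pair], rounds: int = 3,
                              per_parent: int = 2,
                              rng: Optional[random.Random] = None) -> str:
    """Stage 1: mutate, score, and keep a pool of (score, instruction) candidates."""
    rng = rng or random.Random(0)
    pool = [(score(llm, seed, data), seed)]
    for _ in range(rounds):
        pool.sort(reverse=True)
        # Exploit the current best; explore from a randomly chosen pool member.
        parents = [pool[0][1], rng.choice(pool)[1]]
        for parent in parents:
            for k in range(per_parent):
                variant = llm(f"Variation {k} of this instruction: {parent}")
                pool.append((score(llm, variant, data), variant))
    return max(pool)[1]


def stage2_pick_examples(llm: LLM, instruction: str, data: List[Pair],
                         k: int = 3) -> List[Pair]:
    """Stage 2 (simplified): failing examples first, since they expose gaps, then passing ones."""
    failing = [p for p in data if answer(llm, instruction, p[0]) != p[1]]
    passing = [p for p in data if p not in failing]
    return (failing + passing)[:k]


def fake_llm(prompt: str) -> str:
    """Deterministic stand-in: a variation appends a hint; answers improve with the hint."""
    if prompt.startswith("Variation"):
        return prompt.split(": ", 1)[1] + " Be concise."
    return "4" if "concise" in prompt else "four"


if __name__ == "__main__":
    data = [("2+2?", "4"), ("1+3?", "4")]
    best = stage1_refine_instruction(fake_llm, "Answer the question.", data)
    print(best, score(fake_llm, best, data))
    print(stage2_pick_examples(fake_llm, best, data))
```

Keeping every scored variant in `pool` means a weak exploratory branch never displaces the best instruction found so far, while the random parent still gives less promising candidates a chance to be revisited.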

This flexible, modular approach allows PromptWizard to support a broad range of applications, from mathematical problem-solving to creative writing, while maintaining speed and adaptability.

Performance: Setting New Standards

PromptWizard’s systematic, feedback-driven method yields exceptional accuracy, adaptability, and efficiency compared to leading alternatives.

In competitive benchmarks across 45+ tasks, PW outperformed established frameworks like Instinct, InstructZero, APE, PromptBreeder, EvoPrompt, DSPy, APO, and PromptAgent. Its highlights include:

- Accuracy: Achieves near best-in-class results consistently across tasks.

- Efficiency: Reduces computational costs dramatically, needing only a fraction of the API calls and tokens required by other methods.

- Data Efficiency: Maintains high performance even with as few as five training examples, making it ideal for real-world scenarios with limited labeled data.

- Model Efficiency: Delivers strong results using smaller, less resource-intensive LLMs, making advanced prompt optimization accessible to more organizations.
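Efficiency claims about API calls and tokens are easiest to check by metering the model client. The wrapper below is a hypothetical helper, not part of PromptWizard: it counts calls and uses a whitespace word count as a rough stand-in for tokens, which a real tokenizer will not match exactly.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class MeteredLLM:
    """Wraps any prompt-to-text function and tallies calls and approximate tokens."""
    llm: Callable[[str], str]
    calls: int = 0
    tokens: int = 0

    def __call__(self, prompt: str) -> str:
        reply = self.llm(prompt)
        self.calls += 1
        # Whitespace-separated words as a crude token proxy for prompt plus reply.
        self.tokens += len(prompt.split()) + len(reply.split())
        return reply


if __name__ == "__main__":
    metered = MeteredLLM(lambda prompt: "ok")
    metered("hello world")
    metered("one")
    print(metered.calls, metered.tokens)  # 2 5
```

Because the wrapper is itself a prompt-to-text callable, it can be passed to any optimizer that accepts one, so two methods can be compared on the same task by reading `calls` and `tokens` after each run.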

Takeaway: The Future of Prompt Engineering

PromptWizard demonstrates that feedback-driven, self-improving systems are the future of prompt engineering. By automating the optimization process and leveraging iterative critique, PW sets a new benchmark for flexibility, scalability, and cost-effectiveness.

For AI researchers and enterprise teams alike, PromptWizard is paving the way for more accessible and powerful prompt engineering solutions.

Feedback-Driven Methods Are Transforming Prompt Engineering