Docker Model Runner is now integrated with Hugging Face, making it easier to discover, run, and manage AI models on your own machine. You can find a compatible model on Hugging Face and start it locally with a single, pre-generated Docker command.

Community-Powered Collaboration

Central to this integration is a shared commitment to open, community-driven development. Docker Model Runner, an open-source tool, now works natively within the Hugging Face ecosystem, a leading platform for AI, machine learning, and data science. The integration streamlines local workflows and gives developers on both platforms a common place to collaborate.

Streamlined Local Model Inference

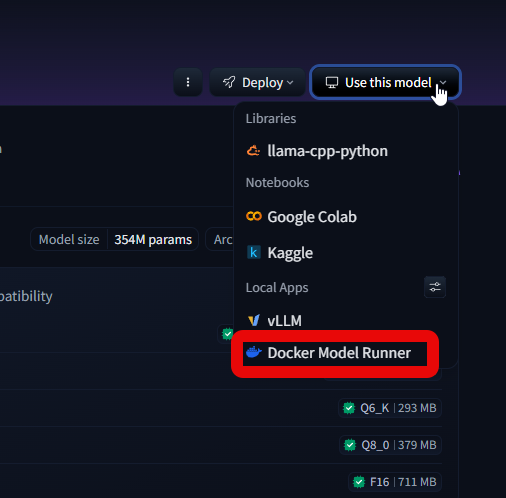

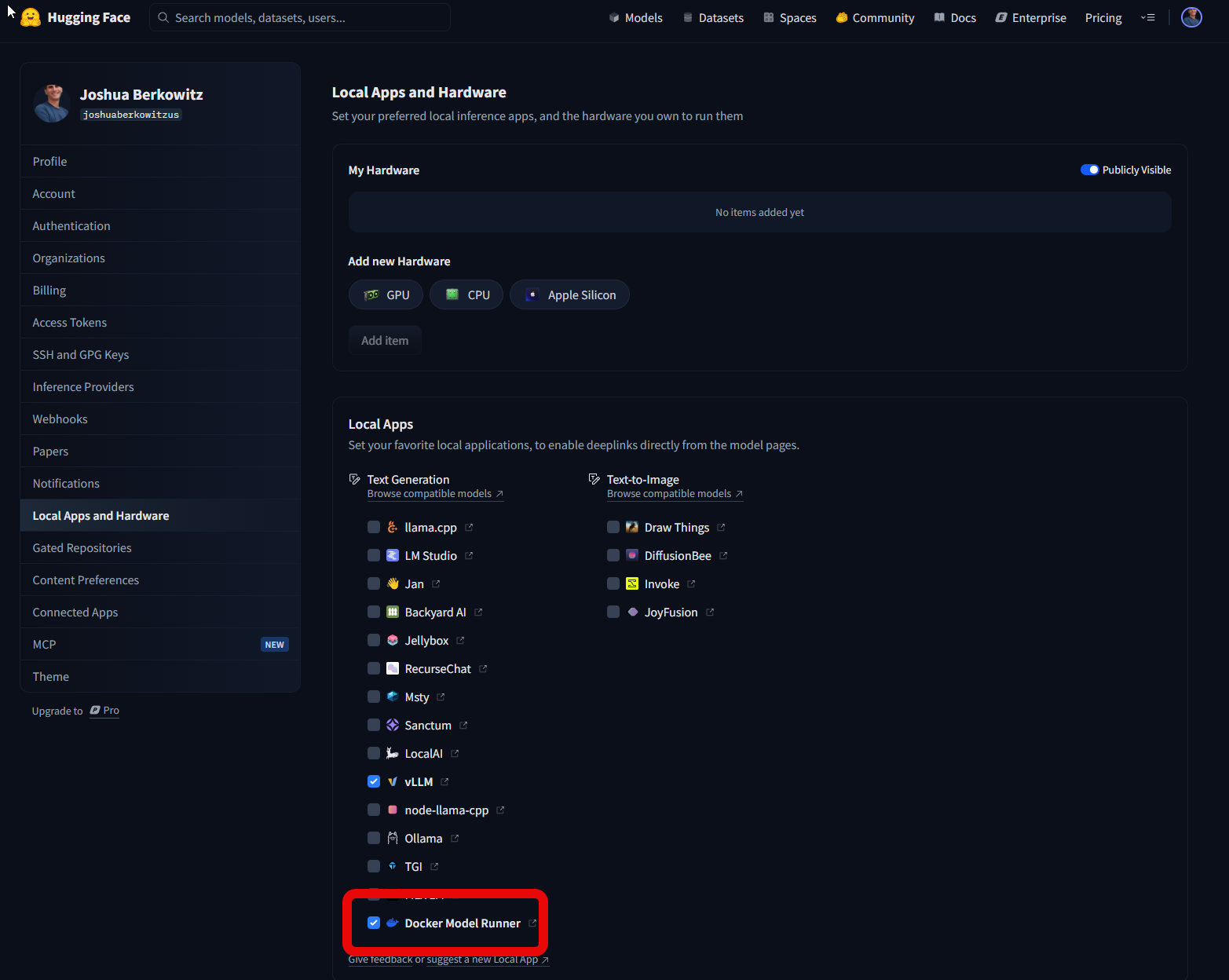

Running models locally used to require a patchwork of manual steps. Now, Hugging Face has made Docker Model Runner a default Local Apps provider, significantly reducing friction. Developers can:

- Select Docker Model Runner directly from any Hugging Face model repository page.

- Run models locally using pre-generated Docker commands right in the interface.

- Filter models to display only those compatible with Docker Model Runner, simplifying the discovery process.

With this workflow, you choose Docker Model Runner as your inference engine on the model page and launch the model with a single, ready-to-use command, with no manual setup in between.
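As a rough illustration of what such a ready-to-use command looks like, the sketch below assembles one in Python. It assumes the `docker model run` subcommand with an `hf.co/<owner>/<repo>` model reference, which is how Docker's documentation describes pulling from Hugging Face. The command shown on a given model page may differ, and the helper name `build_run_command` and the example repository are illustrative only.

```python
import shlex


def build_run_command(repo_id, prompt=None):
    """Return the argv list for running a Hugging Face repo with Docker Model Runner.

    Assumption: models are addressed as hf.co/<owner>/<repo>; copy the exact
    command from the Hugging Face model page when in doubt.
    """
    parts = repo_id.split("/")
    if len(parts) != 2 or not all(parts):
        raise ValueError("expected '<owner>/<repo>', got %r" % (repo_id,))
    argv = ["docker", "model", "run", "hf.co/" + repo_id]
    if prompt is not None:
        # An optional one-shot prompt; omit it to start an interactive chat.
        argv.append(prompt)
    return argv


if __name__ == "__main__":
    # Example repository only; substitute the one you selected on Hugging Face.
    argv = build_run_command("bartowski/Llama-3.2-1B-Instruct-GGUF", "Hello")
    # Print a shell-safe command line instead of executing it.
    print(shlex.join(argv))
```

The function only builds the command. To actually launch the model, pass the returned list to `subprocess.run` on a machine where Docker Model Runner is enabled.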

Smarter Model Discovery

Hugging Face now includes a dedicated filter for models in the GGUF format that support Docker Model Runner. This enhancement allows users to:

- Quickly find compatible models without searching through the entire repository.

- Spot trending Docker-ready models using integrated sorting and filtering tools.

- Experience a discovery process as intuitive as browsing Docker Hub for container images.

These features make Hugging Face a convenient source of Docker Model Runner-compatible models and shorten the path from finding a model to running it locally.
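The filter described above can also be reached through a link. The following sketch builds such a URL using only the Python standard library. The query parameter `apps=docker-model-runner` and the `sort` and `search` parameters are assumptions about how the Hugging Face site encodes these filters, so verify the result against the URL your browser shows after applying the filter by hand. The function name `docker_ready_models_url` is hypothetical.

```python
from urllib.parse import urlencode

HUB_MODELS_URL = "https://huggingface.co/models"


def docker_ready_models_url(sort="trending", search=None):
    """Build a Hugging Face model-listing URL filtered to Docker Model Runner.

    Assumption: the site exposes the Local Apps filter as the `apps` query
    parameter with the value `docker-model-runner`.
    """
    params = {"apps": "docker-model-runner", "sort": sort}
    if search:
        # Optional free-text search term, URL-encoded by urlencode.
        params["search"] = search
    return HUB_MODELS_URL + "?" + urlencode(params)


if __name__ == "__main__":
    print(docker_ready_models_url())
    print(docker_ready_models_url(sort="downloads", search="llama"))
```

Keeping the filter in a URL makes it easy to bookmark or share a view of trending Docker-ready models.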

Frictionless Execution for Developers

The new integration embeds Docker Model Runner directly into Hugging Face’s user interface, making local inference seamless. Developers can:

- Locate models, check compatibility, and access Docker run commands without ever leaving the Hugging Face site.

- Use Docker Model Runner as a default Local Apps provider with no additional configuration.

- Deploy and experiment with new models as easily as pulling and running a container image.

This unified experience pairs Hugging Face's model catalog with Docker's familiar pull-and-run workflow, so developers can stay with tools they already know.

Open Source Evolution and Community Engagement

Docker Model Runner’s open-source foundation invites contributions from the global developer community. Developers are encouraged to suggest features, report issues, and actively shape the tool on GitHub. This collaborative approach ensures the platform evolves to meet real-world challenges and remains responsive to user needs.

Getting Started and Additional Resources

Docker provides a wealth of resources to help users maximize the benefits of this integration:

- Sign up for the Docker Offload Beta to seamlessly blend local and cloud-based model inference.

- Access in-depth guides and quickstart tutorials to master Docker Model Runner’s capabilities.

- Stay informed with documentation and updates on Docker’s AI solutions page.

Final Thoughts

This integration removes much of the friction from local AI development. By combining Docker’s containerization expertise with Hugging Face’s vast model repository, it lets developers discover, pull, and run AI models with a single command. Because Docker Model Runner is open source, the community can keep improving it and shape the future of local AI.

Source: Docker Blog

Effortless Local AI: Docker Model Runner and Hugging Face