AI development is moving faster than ever, but managing experiments, tracking quality, and maintaining oversight can be a tangled mess. MLflow 3.0 changes the game by bringing traditional ML, deep learning, and generative AI (GenAI) experimentation under one roof, eliminating tool sprawl and reducing complexity for teams eager to ship reliable AI solutions.

MLflow 3.0 unifies traditional ML, deep learning, and GenAI development in a single platform, eliminating the need for separate specialized tools

What’s New in MLflow 3.0?

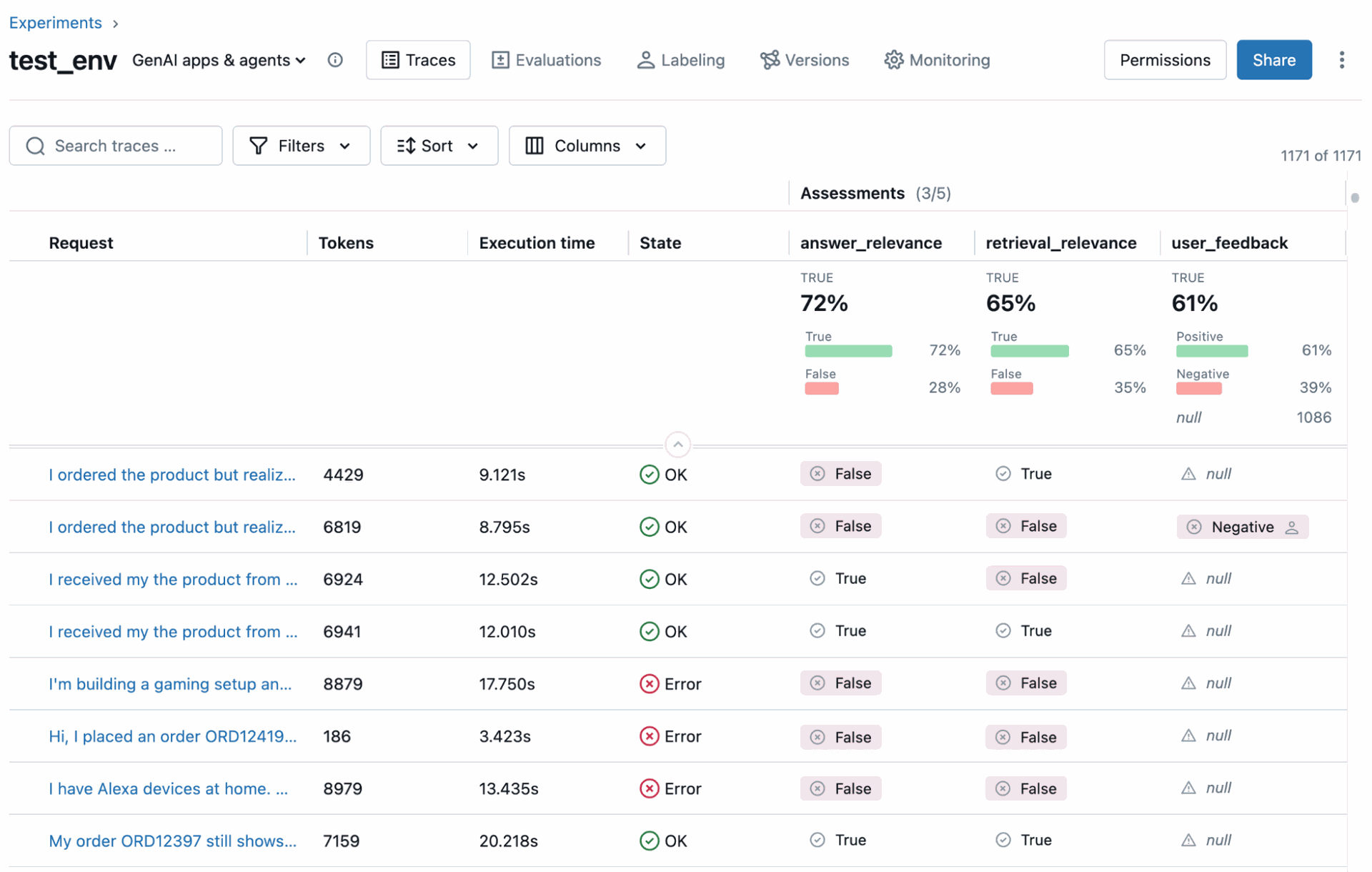

- GenAI at Scale: MLflow’s new tracing capabilities let you see every GenAI request, including its prompts, outputs, latency, and costs, across 20+ libraries, all mapped directly to your code and data.

- Quality Evaluation: Built-in LLM judges automatically score GenAI outputs. Assessments are grounded in real user conversations, with the flexibility to add custom judges for your domain.

- Unified Workflows: From fine-tuning deep learning models to building GenAI agents, standardized processes and lifecycle management drive consistency and compliance.

- Enterprise Reliability: MLflow 3.0 powers mission-critical AI in thousands of organizations, with both open source and managed Databricks service options.
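
To make the tracing idea concrete, here is a minimal, standard-library-only sketch of what a trace record might capture for a single request: the function name, its inputs, its output, and its latency. It illustrates the concept and is not MLflow's implementation; the names `traced`, `TRACES`, and `answer_question` are hypothetical. In MLflow itself, the `@mlflow.trace` decorator plays the analogous role.

```python
import functools
import time

# Hypothetical in-memory store; a real tracing backend would persist these records.
TRACES = []


def traced(func):
    """Record inputs, output, and latency for every call to `func`."""

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        output = func(*args, **kwargs)
        TRACES.append(
            {
                "name": func.__name__,
                "inputs": {"args": args, "kwargs": kwargs},
                "output": output,
                "latency_s": time.perf_counter() - start,
            }
        )
        return output

    return wrapper


@traced
def answer_question(question: str) -> str:
    # Stand-in for a real LLM call.
    return f"You asked: {question}"


answer_question("Where is my order?")
print(TRACES[0]["name"], TRACES[0]["output"])
```

Because each record carries both the inputs and the latency, slow or incorrect responses can be filtered out and inspected after the fact.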

Solving Tough GenAI Challenges

GenAI brings unique demands: you need observability, objective quality measurement, and fast feedback cycles. MLflow 3.0 delivers with:

- Production-Grade Tracing: Every request and outcome is traceable, which makes root cause analysis and debugging far more direct.

- Automated & Custom Evaluation: LLM judges and custom datasets make large-scale, practical assessments possible.

- Integrated Feedback: The Review App streamlines feedback collection, connecting real user and expert insights directly to your development process.

- Version Tracking: All code, prompts, and configs are versioned, with the Prompt Registry supporting instant rollback and A/B testing backed by visual diffs and performance tracking.
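
The version-tracking idea (keep every prompt version, diff any two, roll back without losing history) can be sketched in a few lines. This is a toy model using only the standard library, not the Prompt Registry's API; the `PromptStore` class and its methods are hypothetical names chosen for illustration.

```python
import difflib


class PromptStore:
    """Hypothetical append-only store: every registered version is kept."""

    def __init__(self):
        self._versions = []  # index 0 holds version 1
        self.active = 0      # version number currently served (0 = none yet)

    def register(self, template: str) -> int:
        """Save a new version, make it active, and return its number."""
        self._versions.append(template)
        self.active = len(self._versions)
        return self.active

    def get(self, version: int) -> str:
        return self._versions[version - 1]

    def rollback(self, version: int) -> None:
        """Serve an earlier version again without deleting later ones."""
        if not 1 <= version <= len(self._versions):
            raise ValueError(f"unknown version {version}")
        self.active = version

    def diff(self, old: int, new: int) -> list[str]:
        """Return a unified diff between two versions."""
        return list(
            difflib.unified_diff(
                self.get(old).splitlines(),
                self.get(new).splitlines(),
                fromfile=f"v{old}",
                tofile=f"v{new}",
                lineterm="",
            )
        )


store = PromptStore()
store.register("Answer the customer's question: {question}")
store.register("Answer briefly and cite the order ID: {question}")
print("\n".join(store.diff(1, 2)))

store.rollback(1)  # version 2 stays in history, version 1 is served again
print(store.active)
```

Keeping the store append-only is the design choice that makes rollback cheap: switching versions only moves a pointer, so an A/B test can serve two versions side by side.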

MLflow 3.0 makes quality evaluation simple. Create an evaluation dataset from your production traces, then run research-backed LLM judges powered by Databricks Mosaic AI Agent Evaluation:

```python
import mlflow

# Create evaluation dataset from production traces.
# `experiment_id` is assumed to be defined earlier.
production_traces = mlflow.search_traces(
    experiment_ids=[experiment_id],
    filter_string="tags.customer_tier = 'gold'",
    max_results=10000,
)

evaluation_dataset = mlflow.genai.create_dataset("main.product_bot.eval_set")
evaluation_dataset.insert(production_traces)

# Run comprehensive quality evaluation on the retrieved traces
results = mlflow.genai.evaluate(
    data=evaluation_dataset,
    scorers=[
        # Are responses hallucinating or grounded in facts?
        mlflow.genai.scorers.RetrievalGroundedness(),
        # Are we retrieving the right documents?
        mlflow.genai.scorers.RetrievalRelevance(),
        # Do responses actually answer the question?
        mlflow.genai.scorers.RelevanceToQuery(),
        # Are responses safe and appropriate?
        mlflow.genai.scorers.Safety(),
    ],
)
```
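
Built-in judges can be complemented by custom, code-based checks for domain rules. The sketch below assumes a simple contract: a check takes a response string and returns pass or fail. The function names and the `ORD-` order-ID format are hypothetical. MLflow provides a `scorer` decorator in `mlflow.genai.scorers` for plugging such functions into an evaluation; that wiring is left out here so the example runs without MLflow installed.

```python
import re

# Hypothetical order-ID format, used only for this illustration.
ORDER_ID = re.compile(r"\bORD-\d{6}\b")


def mentions_order_id(outputs: str) -> bool:
    """Pass when the response cites at least one order ID."""
    return bool(ORDER_ID.search(outputs))


def pass_rate(responses: list[str]) -> float:
    """Fraction of responses that pass the check (0.0 for an empty list)."""
    if not responses:
        return 0.0
    return sum(mentions_order_id(r) for r in responses) / len(responses)


responses = [
    "Your order ORD-123456 shipped yesterday.",
    "Your order has shipped.",
]
print(pass_rate(responses))  # 0.5
```

Deterministic checks like this are cheap to run on every trace, which leaves the LLM judges for the qualities that cannot be captured by a rule.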

A Real-World Example: E-Commerce Chatbot

Consider an e-commerce chatbot team using MLflow 3.0:

- Spotting Issues: Production tracing highlights slow or inaccurate responses, guiding code optimizations that cut latency.

- Evaluating Output: LLM judges and expert feedback pinpoint weaknesses, such as irrelevant retrievals.

- Iterative Improvements: Feedback from domain experts shapes prompt and retrieval logic, with full version control for every change.

- Confident Deployment: Automated deployment jobs and Unity Catalog enforce strict quality and governance before models go live.

- Continuous Learning: Ongoing user feedback feeds into performance monitoring and retraining, keeping the chatbot sharp and reliable.
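
The "Confident Deployment" step amounts to a quality gate: a candidate goes live only if its evaluation scores clear agreed thresholds. A minimal sketch of such a gate follows. The metric names, threshold values, and function names are all hypothetical, and this is not how MLflow deployment jobs or Unity Catalog implement the check.

```python
# Hypothetical minimum scores a candidate must reach before deployment.
THRESHOLDS = {"groundedness": 0.90, "relevance": 0.85, "safety": 0.99}


def failed_checks(metrics: dict[str, float]) -> list[str]:
    """Return the names of metrics that are missing or below threshold."""
    return [
        name
        for name, minimum in THRESHOLDS.items()
        if metrics.get(name, 0.0) < minimum
    ]


def can_deploy(metrics: dict[str, float]) -> bool:
    """A candidate may deploy only when no check fails."""
    return not failed_checks(metrics)


candidate = {"groundedness": 0.93, "relevance": 0.80, "safety": 0.995}
print(failed_checks(candidate))  # ['relevance']
print(can_deploy(candidate))     # False
```

Treating a missing metric as a failure is deliberate: a candidate that was never evaluated on safety should not pass the gate by omission.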

Beyond GenAI: Benefits for All AI Workloads

MLflow 3.0 is not just for GenAI: its new LoggedModel abstraction and version tracking also help manage deep learning checkpoints and traditional ML models. Unity Catalog integration ensures consistent governance and deployment across the enterprise.

How to Get Started

MLflow 3.0 on Databricks is ready for teams of any size. Existing users can upgrade instantly, while newcomers benefit from clear documentation and quick-start guides. The platform is designed for reliability, security, and seamless integration into any AI workflow.

The Takeaway

MLflow 3.0 unifies experimentation, observability, and governance for AI, streamlining fragmented workflows and raising the bar for GenAI support. Organizations can now innovate with confidence, knowing their AI initiatives are auditable, scalable, and ready for the future.

Source: Databricks Blog

Databricks MLflow 3.0: AI Experimentation and Governance for Enterprises