Researchers from Google DeepMind have introduced TxGemma, a suite of efficient, generalist large language models (LLMs) designed to address the costly and high-risk nature of therapeutic drug development. The models support therapeutic property prediction, interactive reasoning, and explanation of their predictions, which makes them applicable across the drug development pipeline.

Key Takeaways

- TxGemma, comprising 2B, 9B, and 27B parameter models fine-tuned from Gemma-2, achieves superior or comparable performance to state-of-the-art generalist models on 64 out of 66 therapeutic development tasks.

- TxGemma also outperforms or matches state-of-the-art specialist models on 50 tasks.

- TxGemma features conversational models that allow scientists to interact in natural language and provide mechanistic reasoning for predictions.

- Agentic-Tx, powered by Gemini 2.0, integrates TxGemma with external tools to reason, act, manage workflows, and acquire external knowledge, surpassing leading models on reasoning benchmarks like Humanity’s Last Exam (Chemistry & Biology) and ChemBench.

- Fine-tuning TxGemma on downstream therapeutic tasks requires less training data than fine-tuning base LLMs, making it suitable for data-limited applications.

- The open release of the TxGemma collection empowers researchers to adapt and validate the models on their own datasets.

Overview

The pharmaceutical industry is grappling with significant challenges in bringing new therapeutics to market, characterized by high failure rates and protracted, exceptionally expensive development timelines.

Success in this domain hinges on a drug candidate exhibiting not only efficacy but also favorable safety profiles, metabolic stability, pharmacokinetic and pharmacodynamic properties, and developability.

Determining these diverse characteristics traditionally involves a multitude of intricate and costly experimental procedures carried out by seasoned experts, underscoring the pressing need for more efficient methodologies.

Recent advances in large language models (LLMs) offer a compelling way to harness available data and mitigate these limitations. LLMs have demonstrated a remarkable capacity to integrate and learn from disparate data sources across many domains, including scientific applications.

Their potential to bridge seemingly unconnected facets of drug development, such as chemical structure, biological activity, and clinical trial outcomes, is particularly promising.

Building upon prior work with Tx-LLM, which lacked conversational capabilities, the researchers introduce TxGemma, a suite of efficient, generalist LLMs specifically trained for therapeutic research.

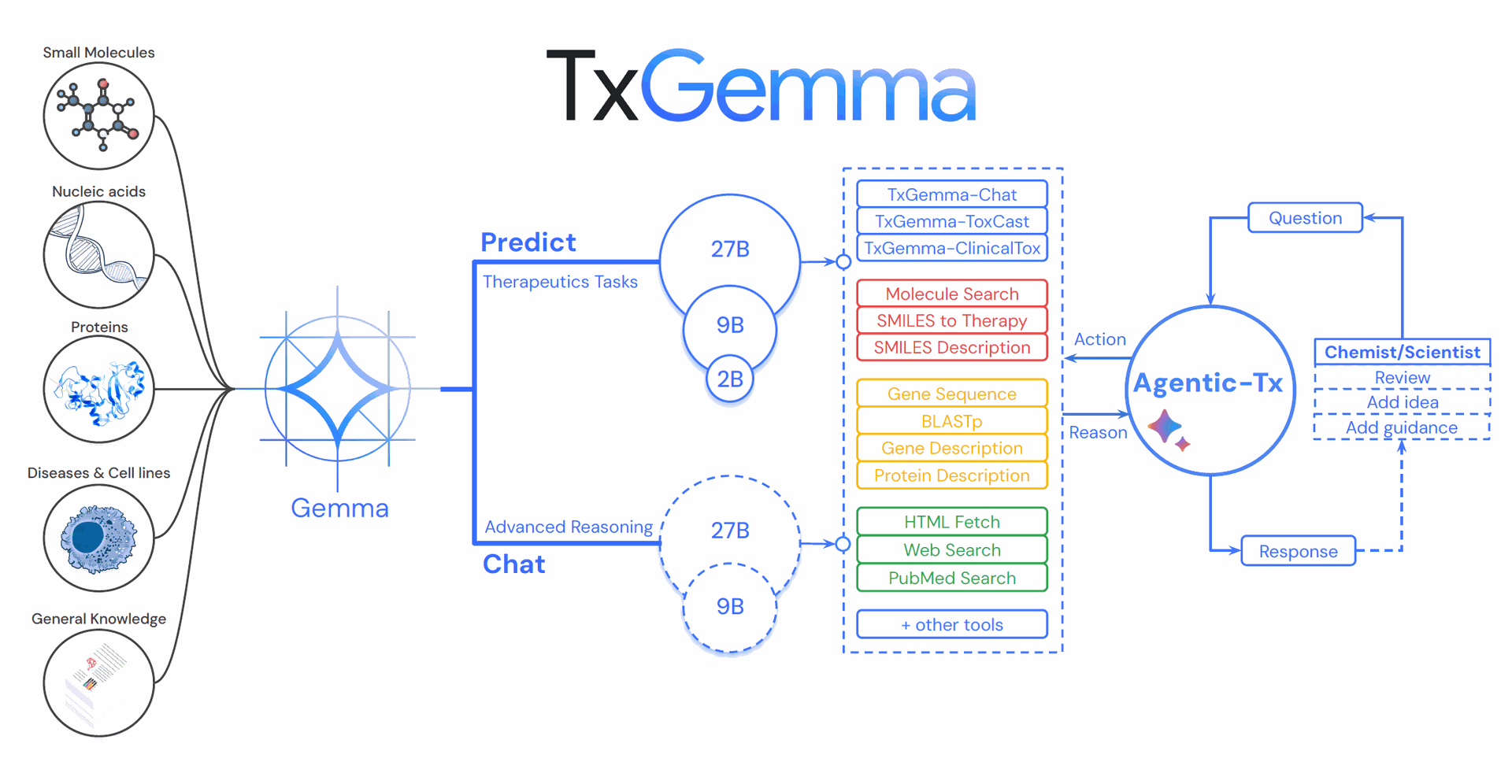

All TxGemma variants are trained on diverse data sources from the Therapeutics Data Commons (TDC). TxGemma-Predict comes in three size variants (2B, 9B, and 27B) and is trained for high-performance predictions on a broad set of therapeutic development tasks. TxGemma-Chat features two variants (9B and 27B) and is trained on a combination of TDC data and general Gemma-2 instruction-tuning data to retain conversational and reasoning capabilities. Agentic-Tx, a therapeutics-focused agentic system powered by Gemini 2.0, has access to 18 tools, including TxGemma-Predict and TxGemma-Chat, and uses them to collect external knowledge and manage complex tasks in either autonomous or interactive settings.

Introducing TxGemma

TxGemma is a suite of models with 2 billion, 9 billion, and 27 billion parameters, fine-tuned from the Gemma-2 family of open LLMs.
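Because the three sizes trade accuracy against hardware cost, a rough estimate of the memory needed just to hold the weights can help when picking a variant. The sketch below assumes the nominal parameter counts (2, 9, and 27 billion) and standard bytes-per-parameter figures for bf16, int8, and 4-bit storage. It ignores activations, the KV cache, and runtime overhead, and the actual Gemma-2 checkpoints are somewhat larger than their nominal sizes, so real requirements are higher.

```python
# Rough, weights-only memory estimate for the three TxGemma sizes.
# Assumption: nominal parameter counts; real checkpoints are somewhat larger,
# and activations / KV cache / framework overhead are NOT included.

NOMINAL_PARAMS = {"2B": 2e9, "9B": 9e9, "27B": 27e9}

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Memory in GB (1 GB = 1e9 bytes) needed only to hold the weights."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for size, n in NOMINAL_PARAMS.items():
        row = ", ".join(
            f"{p}: {weight_memory_gb(n, p):.1f} GB" for p in BYTES_PER_PARAM
        )
        print(f"TxGemma {size} -> {row}")
```

The estimate scales linearly with parameter count, which is why the 2B variant is the natural starting point on a single consumer GPU while the 27B variant typically needs quantization or multiple accelerators.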

The fine-tuning process utilized a comprehensive collection of therapeutic instruction-tuning datasets derived from the Therapeutics Data Commons (TDC). TDC is a rich repository of 66 AI-ready datasets spanning the drug discovery and development pipeline, encompassing over 15 million data points across various biomedical entities and task types.
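To make "instruction-tuning datasets derived from TDC" concrete, the sketch below shows one way a labelled TDC-style record could be rendered as a prompt/completion pair. The `TDCRecord` class, its field names, and the section headers in the prompt are hypothetical illustrations, not the template actually used to train TxGemma. The example molecule is caffeine, written as a SMILES string.

```python
from dataclasses import dataclass


@dataclass
class TDCRecord:
    """One labelled example from a TDC-style dataset (hypothetical schema)."""
    context: str   # background on the assay or property being predicted
    question: str  # the prediction question, including answer options
    smiles: str    # the molecule, encoded as a SMILES string
    label: str     # the target answer, e.g. "(A)" or "(B)"


def to_instruction_example(rec: TDCRecord) -> dict:
    """Render a record as a prompt/completion pair for supervised fine-tuning.

    The section headers are a hypothetical template, not TxGemma's exact format.
    """
    prompt = (
        "Instructions: Answer the following question about drug properties.\n"
        f"Context: {rec.context}\n"
        f"Question: {rec.question}\n"
        f"Drug SMILES: {rec.smiles}\n"
        "Answer:"
    )
    # Leading space so that prompt + completion reads "Answer: (B)".
    return {"prompt": prompt, "completion": f" {rec.label}"}


if __name__ == "__main__":
    caffeine = TDCRecord(
        context="The blood-brain barrier (BBB) limits which compounds reach the brain.",
        question="Does this molecule cross the BBB? (A) no (B) yes",
        smiles="CN1C=NC2=C1C(=O)N(C(=O)N2C)C",
        label="(B)",
    )
    example = to_instruction_example(caffeine)
    print(example["prompt"] + example["completion"])
```

Keeping the answer as a short option label makes a binary classification task look like text generation, which is what lets one LLM be trained on many heterogeneous TDC tasks at once.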

For the first time in therapeutic AI, TxGemma incorporates conversational counterparts capable of reasoning and providing explanations, moving beyond traditional "black-box" predictions to foster mechanistic understanding and facilitate scientific discussions.

Furthermore, Agentic-Tx, a generalist therapeutic agentic system powered by Gemini 2.0, integrates TxGemma's capabilities with access to 18 tools, enabling it to reason, act, manage complex workflows, and acquire external domain knowledge.
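The reason-act pattern behind an agentic system like Agentic-Tx can be sketched as a loop: a planner chooses a tool, the tool's output is recorded as an observation, and the loop repeats until the planner decides to finish. In this minimal sketch the planner is a fixed rule standing in for Gemini 2.0, and the two tools (`literature_search`, `property_predictor`) are hypothetical stubs, not any of Agentic-Tx's actual 18 tools.

```python
from typing import Callable, Dict, List, Tuple

Step = Tuple[str, str, str]  # (tool name, tool input, observation)


def literature_search(query: str) -> str:
    """Hypothetical stub standing in for a literature tool such as a PubMed search."""
    return f"[stub] search results for '{query}'"


def property_predictor(smiles: str) -> str:
    """Hypothetical stub standing in for a TxGemma-Predict call."""
    return f"[stub] property prediction for {smiles}"


TOOLS: Dict[str, Callable[[str], str]] = {
    "literature_search": literature_search,
    "property_predictor": property_predictor,
}


def stub_planner(task: str, history: List[Step]) -> Tuple[str, str]:
    """Rule-based placeholder for the LLM that picks the next action.
    In Agentic-Tx this decision is made by Gemini 2.0, not by fixed rules."""
    if not history:
        return "literature_search", task
    if len(history) == 1:
        return "property_predictor", "CCO"  # hypothetical input molecule (ethanol)
    return "finish", " | ".join(obs for _, _, obs in history)


def run_agent(task: str, max_steps: int = 5) -> Tuple[str, List[Step]]:
    """Alternate planning (reason) and tool calls (act) until the planner
    finishes or the step budget runs out."""
    history: List[Step] = []
    for _ in range(max_steps):
        action, arg = stub_planner(task, history)
        if action == "finish":
            return arg, history
        observation = TOOLS[action](arg)
        history.append((action, arg, observation))
    return "stopped: step budget exhausted", history


if __name__ == "__main__":
    answer, trace = run_agent("Assess the toxicity profile of ethanol")
    for step in trace:
        print(step)
    print("final:", answer)
```

The `max_steps` budget guards against a planner that never emits `finish`; a real agent would also need to handle tool errors and unknown tool names.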

Why It’s Important

TxGemma points to a potential paradigm shift in therapeutic AI, demonstrating the viability of generalist LLMs in a field often dominated by task-specific models. Although specialist models have established efficacy in niche areas, TxGemma, a relatively efficient generalist, achieves competitive results across a broad spectrum of therapeutic tasks.

This underscores the potential for broadly trained LLMs, leveraging comprehensive datasets like TDC, to serve as powerful initial tools for hypothesis generation, information synthesis, and candidate prioritization in drug discovery.

The introduction of conversational capabilities in TxGemma addresses a key limitation of previous therapeutic LLMs such as Tx-LLM, enabling more nuanced interaction with scientists and a deeper understanding of model predictions through chain-of-thought reasoning.
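Conversational use means a scientist can ask for a prediction and then, in a follow-up turn, ask the model to explain it. The helper below renders such a dialogue in the Gemma-2 chat turn format (`<start_of_turn>` / `<end_of_turn>` markers). That TxGemma-Chat uses this same format is an assumption based on its Gemma-2 lineage, and `gemma_chat_prompt` is a hypothetical helper name.

```python
from typing import List, Tuple


def gemma_chat_prompt(turns: List[Tuple[str, str]]) -> str:
    """Render (role, text) turns in the Gemma-2 chat format.

    `role` must be "user" or "model". The result ends with an open model turn,
    so the LLM's next generation is its reply to the last user message.
    """
    parts = ["<bos>"]
    for role, text in turns:
        if role not in ("user", "model"):
            raise ValueError(f"unsupported role: {role!r}")
        parts.append(f"<start_of_turn>{role}\n{text}<end_of_turn>\n")
    parts.append("<start_of_turn>model\n")
    return "".join(parts)


if __name__ == "__main__":
    print(gemma_chat_prompt([
        ("user", "Does this molecule cross the blood-brain barrier? "
                 "SMILES: CN1C=NC2=C1C(=O)N(C(=O)N2C)C. Answer (A) no or (B) yes."),
        ("model", "(B)"),  # the model's earlier answer, shown here as a placeholder
        ("user", "Explain step by step which properties of the molecule support that answer."),
    ]))
```

With the Hugging Face `transformers` library, `tokenizer.apply_chat_template` is the usual way to produce this string from the model's own template. If the tokenizer already prepends `<bos>`, drop it from a hand-built string to avoid a duplicate.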

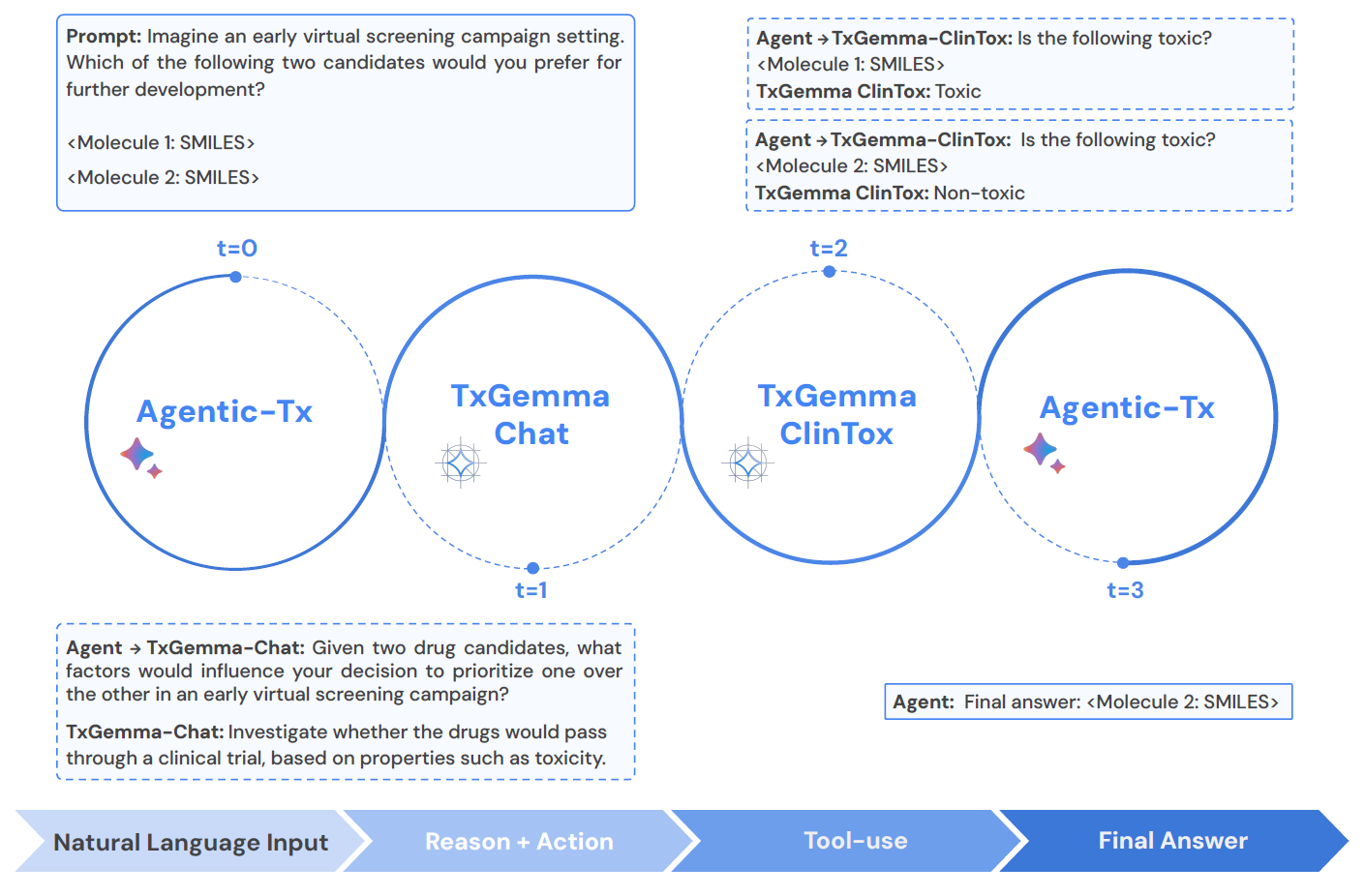

Figure 2 | Example workflow of agentic planning and execution with Agentic-Tx.

Agentic-Tx further expands the potential of LLMs in this domain by orchestrating complex, multi-step workflows. By integrating TxGemma with a suite of external tools, including PubMed, Wikipedia, and chemical databases, Agentic-Tx can tackle reasoning-intensive tasks that are often beyond the scope of standalone LLMs.

Its strong performance on challenging benchmarks like ChemBench and Humanity’s Last Exam highlights the synergistic value of combining domain-specific knowledge from TxGemma with general reasoning and information retrieval capabilities. This ability to access up-to-date external knowledge also addresses the inherent "knowledge cut-off" limitation of static LLMs.

Crucially, the open release of the TxGemma collection empowers the broader research community to adapt and refine these models on their own proprietary datasets, fostering validation and potential performance improvements tailored to specific research needs.

Given the prevalence of proprietary data in therapeutic research, this collaborative, community-driven approach is essential for translating TxGemma's potential into tangible therapeutic applications.

Summary of Results

The researchers evaluated TxGemma's predictive performance across 66 therapeutic development tasks from the TDC. As illustrated in Figure 1, TxGemma-Predict comes in three size variants (2B, 9B, and 27B) and is trained for high-performance predictions. TxGemma-Chat features two variants (9B and 27B) with added conversational and reasoning capabilities.

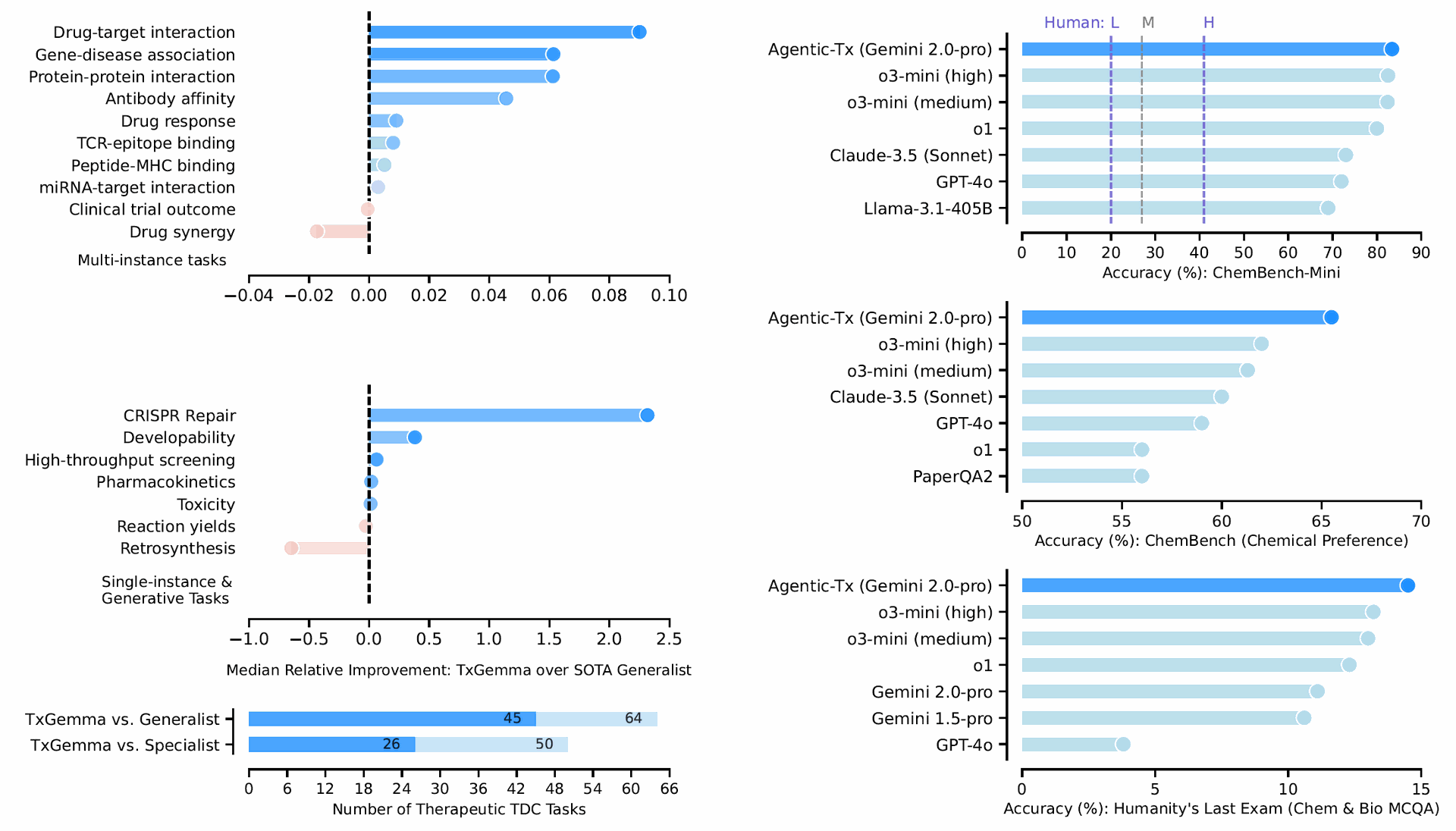

TxGemma-Predict demonstrated superior or comparable performance to the state-of-the-art generalist model on 64 out of the 66 tasks. Specifically, it achieved superior performance on 45 tasks. When compared against state-of-the-art specialist models, TxGemma achieved superior or comparable performance on 50 tasks, with superior performance on 26.
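Expressed as shares of the 66 evaluated tasks, the counts above work out as follows; the snippet only restates the reported numbers as percentages.

```python
TOTAL_TASKS = 66

# Task counts reported for TxGemma-Predict.
counts = {
    "vs. SOTA generalist, superior or comparable": 64,
    "vs. SOTA generalist, superior": 45,
    "vs. SOTA specialists, superior or comparable": 50,
    "vs. SOTA specialists, superior": 26,
}


def share(n: int, total: int = TOTAL_TASKS) -> float:
    """Percentage of tasks, rounded to one decimal place."""
    return round(100 * n / total, 1)


for label, n in counts.items():
    print(f"{label}: {n}/{TOTAL_TASKS} = {share(n)}%")
```

Roughly 97% of tasks are matched or beaten against the generalist baseline, versus about 76% against specialist models, which is the gap the "generalist versus specialist" discussion turns on.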

Figure 1 | (bottom-right) Absolute performance of Agentic-Tx compared to best-in-class models on three complex therapeutic-related reasoning benchmarks. The state-of-the-art (SOTA) values are obtained from [1, 2] and details are listed in Table 3. Dashed lines: L = lowest, M = mean, H = highest human scores. (bottom-left) Relative performance changes of TxGemma-Predict compared to the SOTA generalist model for each task type. The assignment of the 66 evaluated TDC tasks to task types is shown in Tables S.2 and S.3. The bottom bar chart shows a summary of results where TxGemma-Predict outperforms or nearly matches SOTA (light blue), and outperforms SOTA (darker blue).

Figure 1 (bottom-left) visually summarizes these results, showing the percentage of tasks on which TxGemma-Predict outperforms or nearly matches the SOTA generalist model, and the percentage on which it outperforms it outright.
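Plotting a relative performance change and bucketing tasks into "outperforms" versus "nearly matches" requires a sign convention for metrics where lower is better (such as mean absolute error) and a tolerance for what counts as nearly matching. The sketch below makes both explicit. The 5% `tolerance` default is a hypothetical choice, since the threshold used in the paper is not stated here.

```python
def relative_change(model: float, sota: float, higher_is_better: bool = True) -> float:
    """Signed relative change of `model` vs `sota`; positive means the model is better.

    For error metrics (lower is better) the sign is flipped so that an
    improvement is always positive.
    """
    if sota == 0:
        raise ValueError("relative change is undefined when the SOTA score is 0")
    delta = (model - sota) / abs(sota)
    return delta if higher_is_better else -delta


def categorize(model: float, sota: float, higher_is_better: bool = True,
               tolerance: float = 0.05) -> str:
    """Bucket one task result.

    `tolerance` (hypothetical) is the largest relative shortfall still counted
    as "nearly matches"; exact ties also fall into that bucket.
    """
    r = relative_change(model, sota, higher_is_better)
    if r > 0:
        return "outperforms"
    if r >= -tolerance:
        return "nearly matches"
    return "underperforms"
```

Normalizing by the SOTA score lets tasks with very different metric scales share one plot, which is why the figure reports relative rather than absolute changes per task type.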

Agentic-Tx's performance was evaluated on complex therapeutic-related reasoning benchmarks, including Humanity’s Last Exam (Chemistry & Biology) and ChemBench. Figure 1 (bottom-right) shows the absolute performance of Agentic-Tx compared to other leading models on these benchmarks. Notably, Agentic-Tx (Gemini 2.0-pro) achieved a 9.8% relative improvement over o3-mini (high) on Humanity’s Last Exam and 5.6% and 1.1% relative improvements on ChemBench-Preference and ChemBench-Mini, respectively.

This highlights Agentic-Tx's advanced reasoning capabilities and its ability to leverage external tools effectively. Figure S.14 provides a breakdown of tool usage frequency within Agentic-Tx across different benchmarks, demonstrating its adaptive nature in utilizing tools relevant to the specific task.
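The improvements reported above are relative, not absolute. Assuming the standard definition of relative improvement, with s denoting a benchmark score such as accuracy, the relationship between the two scores is:

```latex
\Delta_{\mathrm{rel}} = \frac{s_{\text{Agentic-Tx}} - s_{\text{base}}}{s_{\text{base}}} \times 100\%
\quad\Longrightarrow\quad
s_{\text{Agentic-Tx}} = \left(1 + \frac{\Delta_{\mathrm{rel}}}{100\%}\right) s_{\text{base}}
```

So a 9.8% relative improvement over o3-mini (high) means Agentic-Tx scored 1.098 times the baseline score, an absolute gain of 0.098 times that score. The gain in percentage points is therefore small whenever the baseline score itself is low.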

The study also investigated the data efficiency of TxGemma, finding that fine-tuning TxGemma models on therapeutic downstream tasks requires less training data than fine-tuning base LLMs. This suggests that TxGemma has learned useful representations during its pre-training and therapeutic fine-tuning, making it more adaptable to data-limited scenarios.
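One common way to measure data efficiency is a learning curve: fine-tune each model on increasingly large fractions of the same training set and compare performance at each size. The helper below draws nested subsets from a single shuffled order, so every smaller subset is contained in the larger ones and differences between sizes are not confounded by which examples happened to be sampled. It is a generic sketch, not the paper's protocol; the fractions and seed are arbitrary.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def nested_subsets(train: Sequence[T], fractions: Sequence[float],
                   seed: int = 0) -> List[List[T]]:
    """Return one training subset per fraction, in ascending order of fraction.

    All subsets are prefixes of one seeded shuffle, so each smaller subset is
    contained in every larger one.
    """
    if any(not 0 < f <= 1 for f in fractions):
        raise ValueError("each fraction must lie in (0, 1]")
    order = list(train)
    random.Random(seed).shuffle(order)
    # Keep at least one example per subset, even for tiny fractions.
    return [order[: max(1, round(f * len(order)))] for f in sorted(fractions)]


if __name__ == "__main__":
    examples = [f"example_{i}" for i in range(1000)]
    for subset in nested_subsets(examples, [0.01, 0.1, 0.5, 1.0]):
        print(len(subset))
```

Each subset would then be used to fine-tune both TxGemma and a base Gemma-2 model with an identical recipe, and the two resulting curves compared; the fine-tuning step itself is outside the scope of this sketch.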

Conclusion

This research introduces TxGemma, a suite of efficient, generalist LLMs that advances AI for therapeutic development. By leveraging extensive therapeutic instruction-tuning datasets and building on the foundation of Gemma-2, TxGemma performs strongly across a wide array of predictive and generative therapeutic tasks, matching or surpassing the state-of-the-art generalist model on 64 of 66 TDC tasks and state-of-the-art specialist models on 50.

The inclusion of conversational capabilities in TxGemma-Chat represents a significant step towards more interactive and explainable AI in this domain. Furthermore, the integration of TxGemma into the agentic system Agentic-Tx showcases the potential for LLMs to tackle complex, multi-step problems, achieving state-of-the-art results on challenging reasoning benchmarks.

The open release of TxGemma is a critical contribution that empowers the research community to further explore, validate, and adapt these models, paving the way for more efficient, transparent, and collaborative AI-driven therapeutic research.

Google DeepMind Launches Open-Source TxGemma for Drug Development

TxGemma: Efficient and Agentic LLMs for Therapeutics